Secure code review: 8 security code review best practices

April 20, 2020

Code reviews are hard to do well, particularly when you’re not entirely sure which errors you should be looking for. The DevSecOps approach pushes security testing left so that vulnerabilities can be found and fixed earlier, in the design, development, or CI/CD stages of the workflow. It’s always a good idea to check for security issues in code that you review. In case you don’t know what to look for, here’s a handy checklist to give you pointers for your next code reviews!

When you’re reviewing code, keep in mind that not all code is created equal. Think also about what lies behind the code that you’re reviewing, and thus the data and assets you are trying to protect. This working knowledge isn’t easy to capture in a checklist. However, using the tips in this cheatsheet alongside your domain knowledge will help you decide where to spend more of your time and where to expect higher risk and different types of attacks. A great way of determining where your highest-risk areas are is to create attack trees, which show you where to focus your efforts first.

So, let’s get started with our list of 8 security code review tips that you can check for when looking at future pull requests!

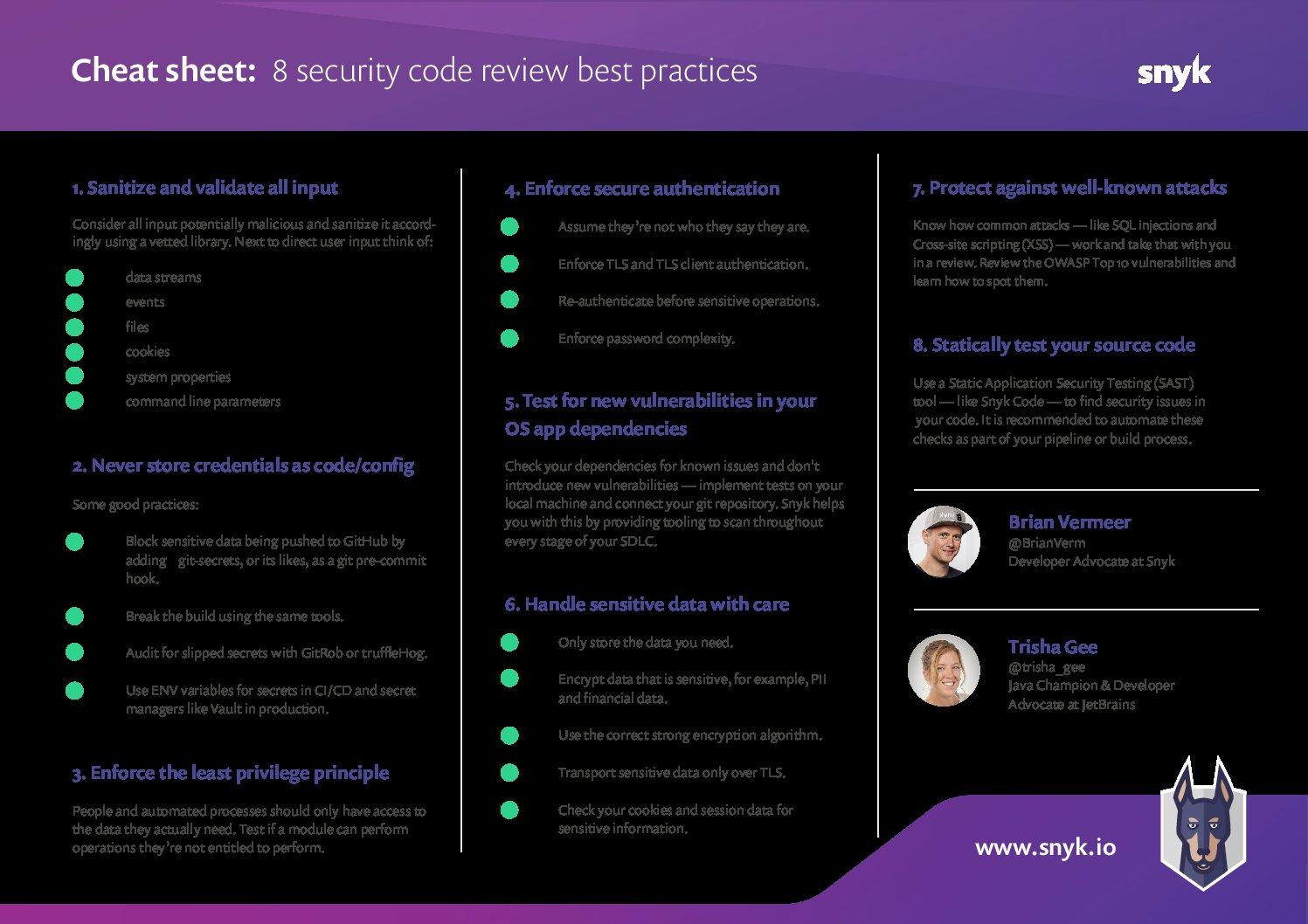

1. Sanitize and validate all input

Modern web applications have to interact with all sorts of third-party input. Direct input from an end-user in the browser is the obvious example. As developers, we all know that users will insert unexpected things whenever they can. Validating and sanitizing direct user input is considered a core best practice to ensure that applications are not vulnerable to content injection. However, direct user input is by far not the only thing you should check. Basically, every input that comes from outside the boundaries of your system should be considered and treated as potentially harmful. Think about things like:

data feeds

files

events, for example in an event-driven system that works closely with platforms like Functions as a Service

data responses from other systems

cookies

On top of all this, input that seems to be under your control at first sight might be harmful. Think about it: when a malicious user is able to connect to your database directly, there is a backdoor to insert, for example, malicious code that will be executed in your system. The same principle holds for:

command-line parameters

environment variables

system properties

data storage

All input, even the input that seems to be controlled by you, should be validated and sanitized. Check if the input makes sense. Using the type system in a type-safe language can help you a lot. In addition, check the format, range, size, file type, and file name, and take nothing for granted. User input should be sanitized, preferably using a well-vetted library, before it is stored or used anywhere.
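As a minimal sketch of these checks in Python, the function below validates format, range, size, file type, and file name before turning raw input into a typed object. The field names, the `UploadRequest` type, and every limit are hypothetical and chosen only for illustration; real rules depend on your domain.

```python
import re
from dataclasses import dataclass

# Hypothetical rules for illustration: allowed characters, types, and sizes
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")
ALLOWED_UPLOAD_EXTENSIONS = {".png", ".jpg", ".pdf"}
MAX_UPLOAD_BYTES = 5 * 1024 * 1024


@dataclass(frozen=True)
class UploadRequest:
    username: str
    age: int
    file_name: str
    file_size: int


def validate_upload(raw: dict) -> UploadRequest:
    """Return a typed request, or raise ValueError on the first rule that fails."""
    username = str(raw.get("username", ""))
    if not USERNAME_PATTERN.fullmatch(username):
        raise ValueError("username: expected 3-32 letters, digits, or underscores")

    try:
        age = int(raw.get("age", ""))
    except (TypeError, ValueError):
        raise ValueError("age: expected an integer") from None
    if not 0 < age < 150:
        raise ValueError("age: out of range")

    file_name = str(raw.get("file_name", ""))
    # Reject path separators so the name cannot point outside the upload directory
    if "/" in file_name or "\\" in file_name or file_name.startswith("."):
        raise ValueError("file_name: must be a plain file name")
    extension = "." + file_name.rsplit(".", 1)[-1].lower() if "." in file_name else ""
    if extension not in ALLOWED_UPLOAD_EXTENSIONS:
        raise ValueError("file_name: file type not allowed")

    file_size = raw.get("file_size")
    if not isinstance(file_size, int) or not 0 < file_size <= MAX_UPLOAD_BYTES:
        raise ValueError("file_size: out of range")

    return UploadRequest(username, age, file_name, file_size)
```

Rejecting anything that does not match an explicit rule (an allowlist) tends to be easier to review than trying to enumerate every bad input.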

2. Never store secrets as code/config

It’s all too easy to store credentials, tokens or other secrets as variables or constants, because hey — we’re just testing it to make sure it’s working. But just as easily this code makes its way into your code repository because you forgot to remove it. We urge you to make sure there’s nothing sensitive in the code you look through. If you’re using a git-based code repository, there are a bunch of great tools available, like git-secrets, that can statically analyze your commits, via a pre-commit Git Hook, to ensure you're not trying to push any passwords or sensitive information into your repo. Commits are rejected if the tool matches any of the configured regular expression patterns indicating that sensitive information has been stored improperly. This may slow down pushes a tiny bit, but it’s well worth it.

Having team-wide rules that prevent credentials from being stored as code is a great way to monitor bad actions in the existing developer workflow. Use tools like Vault to help manage your secrets when in production. Lastly, consider using an identity and user management toolchain, like Keycloak (currently maintained by a number of developers in Red Hat) as well as others.

There are many ways to avoid putting credentials into your repository in the first place, and it’s best to implement as many as you can; however, there’s always the chance some sensitive information may sneak in. You should also consider regularly auditing your repos with tools like GitRob or truffleHog, both of which scan through your codebase, searching for sensitive information via pattern matching.
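To give a feel for what pattern-based secret scanning does, here is a small Python sketch. It is not how git-secrets, GitRob, or truffleHog are implemented; the three patterns and the `find_secrets` helper are assumptions made for illustration, and real tools ship far larger, tuned rule sets.

```python
import re

# Illustrative patterns only (assumed for this sketch)
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hard-coded password": re.compile(
        r"\b(?:password|passwd|secret|token)\b\s*[:=]\s*['\"][^'\"]{4,}['\"]",
        re.IGNORECASE,
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern label) for every line that looks like a secret."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, label))
    return findings
```

A pre-commit hook could run a check like this over the staged changes and reject the commit when `find_secrets` returns anything.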

3. Test for new security vulnerabilities introduced by third-party open source dependencies

Modern application development is heavily dependent on third-party libraries. By using package managers like npm, Maven, Gradle, PyPI, or any equivalent, we have easy access to publicly available libraries and frameworks. As developers, we want to focus on specific business logic and not so much on creating boilerplate functionality, so using frameworks and libraries to do the heavy lifting is an obvious choice.

There’s a good chance you don’t know how many direct dependencies your application uses. In an average project, your own code can be as little as 1% of the total; the rest is imported libraries and frameworks. A lot of code that is put into production is simply not ours, but we do depend on it heavily. It’s also extremely likely you don’t know how many transitive dependencies your application uses. Larger frameworks depend on other libraries that in turn depend on other libraries. By pulling in a single library or framework, chances are that you are pulling in at least a dozen more that you are not always aware of. This is how dependencies come to make up the majority of your overall application. Attackers increasingly target open source dependencies, as their reuse provides a malicious attacker with many victims. For this reason, it’s important to ensure there are no known vulnerabilities in the entire dependency tree of your application.

Let’s use Snyk as an example. Snyk statically analyzes your project to find vulnerable dependencies you may be using and helps you fix them. You can test your repos through Snyk’s UI to find issues, and you can also keep users from adding new vulnerable libraries by testing pull requests and failing the test if a new vulnerability was introduced. Automated fix PRs are also an option.

Depending on how you like to work, you can choose to connect your repository to the Snyk UI or scan the project on your local machine using the CLI (check the CLI cheat sheet), an integration in your build system, or a plugin in your IDE. From left (the developers’ local machine), to completely right (your system in production), and every step in between, you should analyze your dependencies automatically to ensure quick feedback.

4. Enforce secure authentication

Authentication verifies that a user, service, or entity (internal or external) is who they say they are. This could be as simple as a user passing you their credentials, or a server providing you with its TLS certificate to validate it is indeed the server it claims to be. Authentication doesn’t tell you what the user or service is allowed to do, but rather that they are indeed that user or service. Let’s cover a few authentication best practices you should make note of:

Assume they’re not who they say they are.

You should work under the principle that they’re not who they say they are until they have provided the credentials to prove it. Assuming the user or service shouldn’t have access to your data is, of course, the safest way of behaving. Make sure your code reflects that.

Enforce password complexity

In the case of users, consider being more lenient with usernames, particularly when they are email addresses. For instance, there’s little value in distinguishing between Patch@snyk.io and patch@snyk.io. For passwords, on the other hand, it’s important to enforce complexity (at least 1 uppercase character (A-Z), 1 lowercase character (a-z), 1 digit (0-9), and 1 special character) and length (NIST SP800-132). I understand, it’s difficult to remember all those long and complicated passwords, random or not. But, come on! It’s the year 2020 and password managers are here to save you!
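The rules above could be checked with something like the following Python sketch. The minimum length of 12 and both function names are assumptions for illustration; use whatever your own policy requires.

```python
import string

MIN_PASSWORD_LENGTH = 12  # assumption; pick the minimum your policy requires


def password_problems(password: str) -> list[str]:
    """Return a list of human-readable policy violations (empty when acceptable)."""
    problems = []
    if len(password) < MIN_PASSWORD_LENGTH:
        problems.append(f"must be at least {MIN_PASSWORD_LENGTH} characters")
    if not any(c.isupper() for c in password):
        problems.append("needs an uppercase character")
    if not any(c.islower() for c in password):
        problems.append("needs a lowercase character")
    if not any(c.isdigit() for c in password):
        problems.append("needs a digit")
    if not any(c in string.punctuation for c in password):
        problems.append("needs a special character")
    return problems


def normalize_username(email: str) -> str:
    """Treat Patch@snyk.io and patch@snyk.io as the same account."""
    return email.strip().lower()
```

Returning every violation at once, rather than failing on the first, lets the user fix their password in a single attempt.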

Re-authenticate before sensitive operations

Asking users for their credentials before transferring money or performing other sensitive actions mitigates potential cross-site request forgery (CSRF) and session hijacking attacks. Without this check, an attacker might perform these sensitive tasks without ever having provided the user’s credentials. This security measure, while inconvenient to your users, can protect them in the long term.

TLS client authentication

TLS Client Authentication, also known as two-way TLS authentication, requires both the browser and server to authenticate, each sending their TLS certificates in a TLS handshake. This is achieved by a user or service obtaining a client certificate from the server and providing it on subsequent interactions. The user may need to install the certificate if using a browser.

5. Enforce the least privilege principle

In addition to authentication comes authorization. They sound similar but are quite different. As we saw in point 4, authentication proves a user or service is indeed who they say they are, while authorization goes further to ensure that person or service is allowed to perform whatever task or action they’re trying to perform. We know we need to check for this and ensure those users, services, or processes are running or exist in a role that has the authority to undertake such an action. However, from a coding point of view, it’s often all too easy to give more access than is actually required.

The principle of least privilege states that every module (such as a process, a user, or a program, depending on the subject) must be able to access only the information and resources that are necessary for its legitimate purpose. So in essence, give people or processes the bare minimum of privileges and permissions they need to achieve their goal.

A great way to test for this is to write specific automated unit and integration tests that not only test the happy path but, more importantly, test the unhappy, security-related cases. These tests should successfully authenticate, but then try to perform operations they’re not entitled to perform. Always add such tests when you alter the roles your application runs under or introduce new resources that require a specific role to access.
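Such unhappy-path tests might look like the Python sketch below. The roles, permission names, and the `authorize` helper are all hypothetical; the point is that the tests assert what a role must not be able to do, not just what it can.

```python
import unittest

# Hypothetical role-to-permission mapping, kept as small as each role needs
ROLE_PERMISSIONS = {
    "viewer": {"report:read"},
    "editor": {"report:read", "report:write"},
}


class Forbidden(Exception):
    """Raised when an authenticated caller lacks the required permission."""


def authorize(role: str, permission: str) -> None:
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        raise Forbidden(f"{role!r} may not perform {permission!r}")


class LeastPrivilegeTests(unittest.TestCase):
    def test_editor_can_write(self):
        authorize("editor", "report:write")  # happy path: must not raise

    def test_viewer_cannot_write(self):
        with self.assertRaises(Forbidden):
            authorize("viewer", "report:write")

    def test_unknown_role_gets_nothing(self):
        with self.assertRaises(Forbidden):
            authorize("intern", "report:read")
```

When a new role or resource is added, a failing test of this kind is an early signal that the role was granted more than it needs.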

6. Handle sensitive data with care

Exposing sensitive data — like personal information or credit card numbers of your client — can be harmful. But even a more subtle case than this can be equally harmful. For example, the exposure of unique identifiers in your system is harmful, if that identifier can be used in another call to retrieve additional data.

First of all, you need to look closely at the design of your application and determine if you really need the data. On top of that, make sure that you don’t expose sensitive data, perhaps via logging, autocompletion, transmitting data etc.

Storing sensitive data

If you need to persist sensitive data like Personally Identifiable Information (PII) or financial details, make sure that proper encryption is used. You probably want and need to be GDPR compliant but, first and foremost, you don’t want your clients’ data to be compromised. Use either a strong two-way encryption algorithm, if you need to retrieve the data in its original form, or a strong cryptographic hashing algorithm, if you need to store passwords. Don’t fall into the trap of writing your own encryption. Find out what encryption you need and use a well-vetted library to handle it for you. For instance, use BCrypt for password hashing, and encryption algorithms such as AES and RSA to encrypt the data you need to retrieve. Triple DES, once a common choice, has since been deprecated by NIST and is best avoided in new code. Most importantly, keep reviewing whether the algorithms you use are still secure enough. What is perfectly fine today might be compromised tomorrow.
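As a rough Python sketch of salted password hashing, the code below uses PBKDF2 from the standard library. Note that this swaps in PBKDF2 for the BCrypt mentioned above, purely so the example needs no third-party package; the iteration count and function names are assumptions.

```python
import hashlib
import hmac
import secrets

PBKDF2_ITERATIONS = 600_000  # assumption; tune to your hardware and current guidance


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest); store both, never the password itself."""
    salt = secrets.token_bytes(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

In a review, the things to look for are a per-password salt, a deliberately slow algorithm, and a constant-time comparison.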

Also keep in mind that sensitive data can live in memory. When your system handles a password, for example during a password change, avoid storing it temporarily in an immutable data type. For example, if you use a String in Java to store your password in memory, the original value stays in memory until the garbage collector removes it, because String is immutable and cannot be overwritten. A byte array, which you can clear as soon as you are done with it, would be a better choice in this case.
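The same idea can be sketched in Python, where `str` and `bytes` are immutable and `bytearray` is mutable. This is an analogue of the Java advice above, not a translation of it, and the `wipe` helper is a hypothetical name. It only clears this one buffer; it cannot guarantee that the runtime or other code has not made further copies.

```python
def wipe(buffer: bytearray) -> None:
    """Overwrite a mutable buffer in place so the secret no longer sits in it."""
    for index in range(len(buffer)):
        buffer[index] = 0


def use_password(password: bytearray) -> int:
    """Hypothetical consumer: work with the secret, then clear it immediately."""
    try:
        return len(password)  # stand-in for the real work, e.g. hashing
    finally:
        wipe(password)
```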

Transporting sensitive data

If you need to transfer sensitive data, check that the connection is secure. Sensitive data should only be transferred encrypted, over TLS. You also need to make sure that the TLS version is up to date. Obviously, sending somebody’s credit card details as a query parameter or as plain text in the payload over HTTP is not considered safe at all.
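In Python, a client-side TLS configuration along these lines might look like the sketch below. Treating TLS 1.2 as the oldest acceptable version is an assumption; raise it if your environment allows.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Build a client context that verifies certificates and refuses old TLS versions."""
    context = ssl.create_default_context()  # certificate and host name checks are on
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumption: 1.2 is the floor
    return context
```

When reviewing, watch for code that weakens a context like this, for example by disabling `check_hostname` or setting `verify_mode` to `ssl.CERT_NONE`.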

Session data

Last but certainly not least, consider session data also as sensitive data. The advice is not to store sensitive data in cookies at all, but rather to use a session identifier and store the data in a server-managed session. Also, make sure that cookies are encrypted and that session identifiers have a decent length (e.g. 128 bits). Check that attributes like HttpOnly, Secure, and SameSite on the cookie are set correctly and that cookies expire in a reasonable amount of time. Note that when a user logs out client-side, the session must also be invalidated on the server so it cannot be used elsewhere.
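A minimal Python sketch of such a session cookie, using only the standard library, could look like this. The cookie name, the 30-minute lifetime, and the `Strict` SameSite policy are assumptions for illustration.

```python
import secrets
from http.cookies import SimpleCookie

SESSION_LIFETIME_SECONDS = 30 * 60  # assumption; choose what fits your application


def new_session_cookie() -> SimpleCookie:
    """Create a cookie that carries only a random session identifier."""
    cookie = SimpleCookie()
    cookie["session_id"] = secrets.token_urlsafe(16)  # 16 random bytes = 128 bits
    morsel = cookie["session_id"]
    morsel["httponly"] = True   # not readable from JavaScript
    morsel["secure"] = True     # only sent over HTTPS
    morsel["samesite"] = "Strict"
    morsel["max-age"] = SESSION_LIFETIME_SECONDS
    morsel["path"] = "/"
    return cookie
```

Calling `new_session_cookie().output()` yields the `Set-Cookie` header; the actual session data stays on the server, keyed by the identifier.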

7. Protect against well-known attacks

We can safely assume that attackers will continue to hack our applications using predictable, well-known and recognized attack vectors. The general lack of knowledge about common vulnerabilities and how they can be exploited, often leads to duplicating the same security mistakes over and over in future code. Take a look at the OWASP Top 10 vulnerabilities and understand how these common exploits work. Here are some tips to look out for when trying to avoid some of the most common vulnerability types.

XSS

The core premise of a Cross-site Scripting (XSS) attack is that a user injects malicious data into your application, and that data ends up in a context, such as an HTML document, where it can be executed to leak sensitive information or carry out other malicious activity. If you’re planning to allow your users to submit data that is displayed on your pages, you need to learn how to defend against XSS. There are a number of ways to reduce the chances of XSS attacks, for example, HTML encoding dangerous characters. Such characters include &, <, >, " and '. Disallowing or sanitizing such characters prevents an attacker from breaking out of the HTML tag and executing malicious code. To make this harder for an attacker, we can HTML encode any such characters that are passed to us, so that they are not represented in a form that can break out of an HTML tag, as follows:

| Character | HTML encoding |
|---|---|
| `&` | `&amp;` |
| `<` | `&lt;` |
| `>` | `&gt;` |
| `"` | `&quot;` |
| `'` | `&#x27;` |

Similarly, we can achieve that with tag attributes, event handlers, or even style properties. It’s common to use sanitization libraries to help us whitelist the characters that we should allow. Using existing sanitization libraries also means that we don’t have to be security gurus to make sure we pick up every eventuality. This is particularly good for more junior developers.
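The character encoding described above is available in Python’s standard library as `html.escape`. The sketch below is a minimal example for text placed inside an HTML element body; the `render_comment` function is a hypothetical name, and other contexts (attributes, scripts, styles) need their own encoding rules or a sanitization library.

```python
import html


def render_comment(user_text: str) -> str:
    """Encode &, <, >, double and single quotes before placing user text in HTML."""
    return f"<p>{html.escape(user_text, quote=True)}</p>"
```

With this in place, input such as `<script>` is displayed as text instead of being interpreted as markup.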

SQL and NoSQL injection

One of the reasons SQL injection is so attractive to an attacker is that it provides direct access to the data they ultimately want. All too often, a hack is merely a way for an attacker to learn about the system they are trying to breach, which means they still have to do more work to find out where the data lives and how to get to it. SQL injection, on the other hand, is a mechanism that, if successfully exploited, can give an attacker direct access to sensitive information stored in a database. This does not only hold for SQL databases; many NoSQL databases can be compromised in a similar way.

Both XSS and SQL injection are very common attacks. You should know how they work and how to spot them in your code. The lack of sanitization of input anywhere in the system is a big red flag for XSS. For SQL injection, you should check if query parameterization is implemented. You need to make a distinction between the query and the parameters. Binding the parameters to a particular type before making them part of the query, together with proper sanitization, will prevent these kinds of attacks.
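Query parameterization can be illustrated in Python with the standard `sqlite3` module. The `users` table and the `find_user` function are hypothetical; the pattern to look for is that the query text is a constant and user input is only ever passed as a bound parameter.

```python
import sqlite3


def find_user(connection: sqlite3.Connection, email: str) -> list[tuple]:
    """Look up a user with a bound parameter instead of string concatenation."""
    # The ? placeholder keeps the input as data; it is never parsed as SQL
    cursor = connection.execute(
        "SELECT id, email FROM users WHERE email = ?",
        (email,),
    )
    return cursor.fetchall()
```

The red flag in a review is the opposite pattern: a query assembled with string formatting or concatenation from request data.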

There are many other vulnerability types that your application might be susceptible to, some more than others. You should take the time to learn which ones affect your application most, from the code your teams directly produce through to the types of libraries your application depends upon.

8. Statically test your source code, automatically

Static code analysis is a very powerful tool for developers. The security-focused subset of these tools are Static Application Security Testing (SAST) tools. By statically looking at the code you and your team wrote, a SAST tool will indicate if a security-related bug slipped into your source code. Using a SAST tool like Snyk Code can point out issues like SQL injections and code vulnerabilities.

We recommend a SAST tool over a linter for security. While linters can be very useful for static code analysis (bugs, errors, etc.), they will produce a lot of false positives. Since linters are rule-based and do not look at the full context of your code, there will be a bunch of cases where a linter flags your code as a bug or a security issue when this is not the case. Nevertheless, if you fine-tune the ruleset, a linter can prevent nasty mistakes. The best way is to automate these processes as much as possible, for instance as part of your build process or when a new pull request is submitted to your repository. Snyk Code handles all of that for you, adding SAST capabilities right into your workflows.

If you don’t yet have a SAST tool in your pipeline, you can either run one during your manual code review or, even better, automate it in your process so people can catch obvious problems even sooner. You can also try Snyk’s free code checker tool for a quick sense of the security of your code.
