Snyk Code’s auto-fixing feature, Snyk Agent Fix, just got better

April 23, 2024

Snyk Agent Fix is an AI-powered feature that provides one-click, security-checked fixes within Snyk Code, a developer-focused, real-time SAST tool. Amongst the first semi-automated, in-IDE security fix features on the market, Snyk Agent Fix’s public beta was announced as part of Snyk Launch in April 2023. It delivered fixes to security issues detected by Snyk Code in real-time, in-line, and within the IDE. Since then, we have been on a journey to improve this powerful and game-changing functionality for Snyk Code users. Today, with nearly 12 months of public beta user feedback and iterative improvements, we are happy to announce new model improvements in our battle-tested auto-fixing feature. Snyk Agent Fix now boasts the following:

8 supported languages

Groundbreaking, patent-pending technology that significantly boosts accuracy for not just Snyk Agent Fix but also other models we tested the technology on, like GPT-4, GPT-3.5 and Mixtral.

Multi-model AI (our famous hybrid AI approach) to maximize robustness through model diversity.

Addressing the need for seamless auto-fixing, with Snyk Agent Fix

Snyk Code analyzes the source code that developers are working on, at every stage of the software development life cycle (SDLC) – spanning the IDE, SCM, CI/CD pipelines – bringing unmatched accuracy, speed, and proactive remediation in identifying security issues within the SAST space.

Depending on the relevant security policies, once new security issues have been identified, developers will aim to fix them. However, fixing security issues isn’t always straightforward. You need to understand how the code works within the larger code base, what the security issue is, and the best way to remediate it.

Snyk Code offers developers detailed explanations of detected security issues and example fixes from real, permissively licensed repositories that they can use for inspiration on tackling their issues. Before Snyk Agent Fix, Snyk Code offered a significant step forward in reducing the cognitive load of the developer. However, developers still had to find a way to fix security issues on their own.

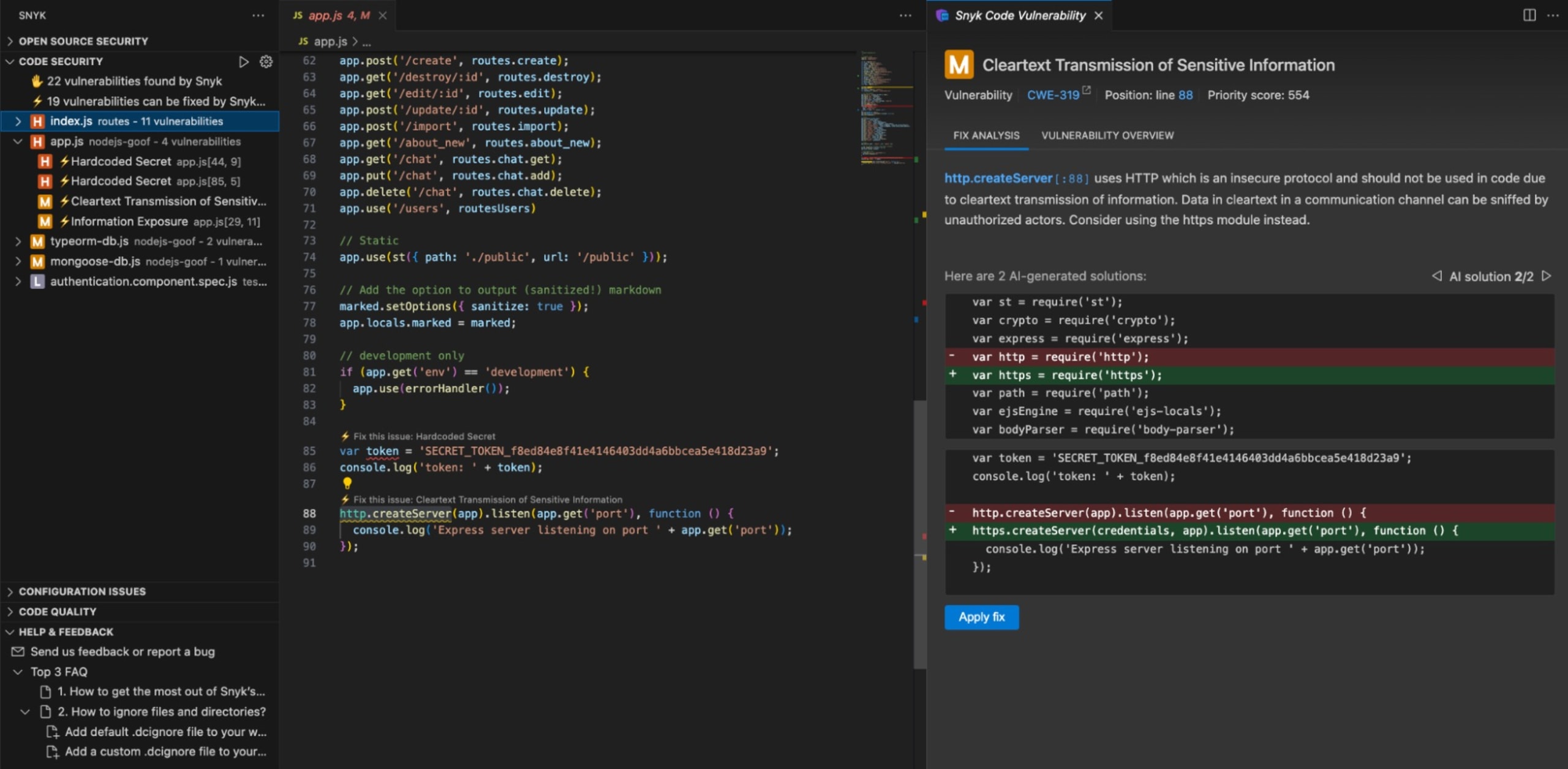

This is why we aimed to make fixing vulnerabilities even easier for developers with Snyk Agent Fix, which is powered by the LLM portion of our DeepCode AI engine (a combination of symbolic AI and machine learning, including our LLM). Snyk Agent Fix helped developers to build securely and seamlessly by enabling them to fix security issues automatically, beginning with JavaScript and TypeScript projects. Anyone using Snyk Code in Visual Studio Code or Eclipse could click “⚡Fix this issue” when new security issues were introduced.

Learning from our pioneering early beta release

When Snyk first introduced Snyk Agent Fix in early access, it was adopted across a myriad of businesses of various sizes and industry verticals, as it offered teams a unique way of remediating security issues — addressing problems directly in the IDE before these vulnerabilities could be pushed into production, and automating remediation.

Although we were ahead of others in releasing an AI-powered auto-fixing feature for code, all beta features are by their very nature in improvement phases, and we wanted to continue refining Snyk Agent Fix. Our areas of interest included enhancing the consistency of the “correctness” of fixes, improving our language rules, improving language coverage, extending accessibility (it was only available within the IDE at the time), and enhancing user experience.

These were quite a few areas to cover, and working closely with user feedback, we decided to start by focusing on the following most impactful items:

Improving the quality of the results.

Covering additional languages.

The quality and security of fixes are paramount for a capability like Snyk Agent Fix, so we decided to double down on improving the depth and breadth of our proposed fixes.

Building a trusted auto-fixing feature in an AI world

A new LLM model for speed and accuracy

When Snyk Agent Fix was released, it used a version of Google’s T5 model that Snyk’s top security experts had fine-tuned extensively. Why did we start with this model? To put things into perspective, we were ahead of the industry and had been working on Snyk Agent Fix for two whole years before it was released, when LLMs were still in their infancy. So, based on the viable options open to us at that point in time, we felt that T5 was the best fit for our needs. We fine-tuned the model on permissively licensed open source projects that had fixed security issues, to teach the model ways of remediating security issues.

Before Snyk Agent Fix proposed a security fix, Snyk’s hybrid AI engine, DeepCode AI, used its strict rules and knowledge-based symbolic AI algorithms to re-analyze the code fix with our security predictions incorporated, driving down the possibility of hallucinations and bad fixes that all LLMs, including Snyk Agent Fix, are susceptible to. This practice of checking Snyk Agent Fix’s proposed code continues, even after our transition to using a different, more advanced LLM model for Snyk Agent Fix (see below).

From what we learned in the initial stages with Snyk Agent Fix, we decided to move to a newer LLM to help us generate more accurate security issue fixes. We tested every potentially viable, interesting LLM to find out which one produced the best results when coupled with our fine-tuning methods. Based on its accuracy and speed, we chose StarCoder as the base model and fine-tuned it on our training datasets.

The improvements in results were incredible:

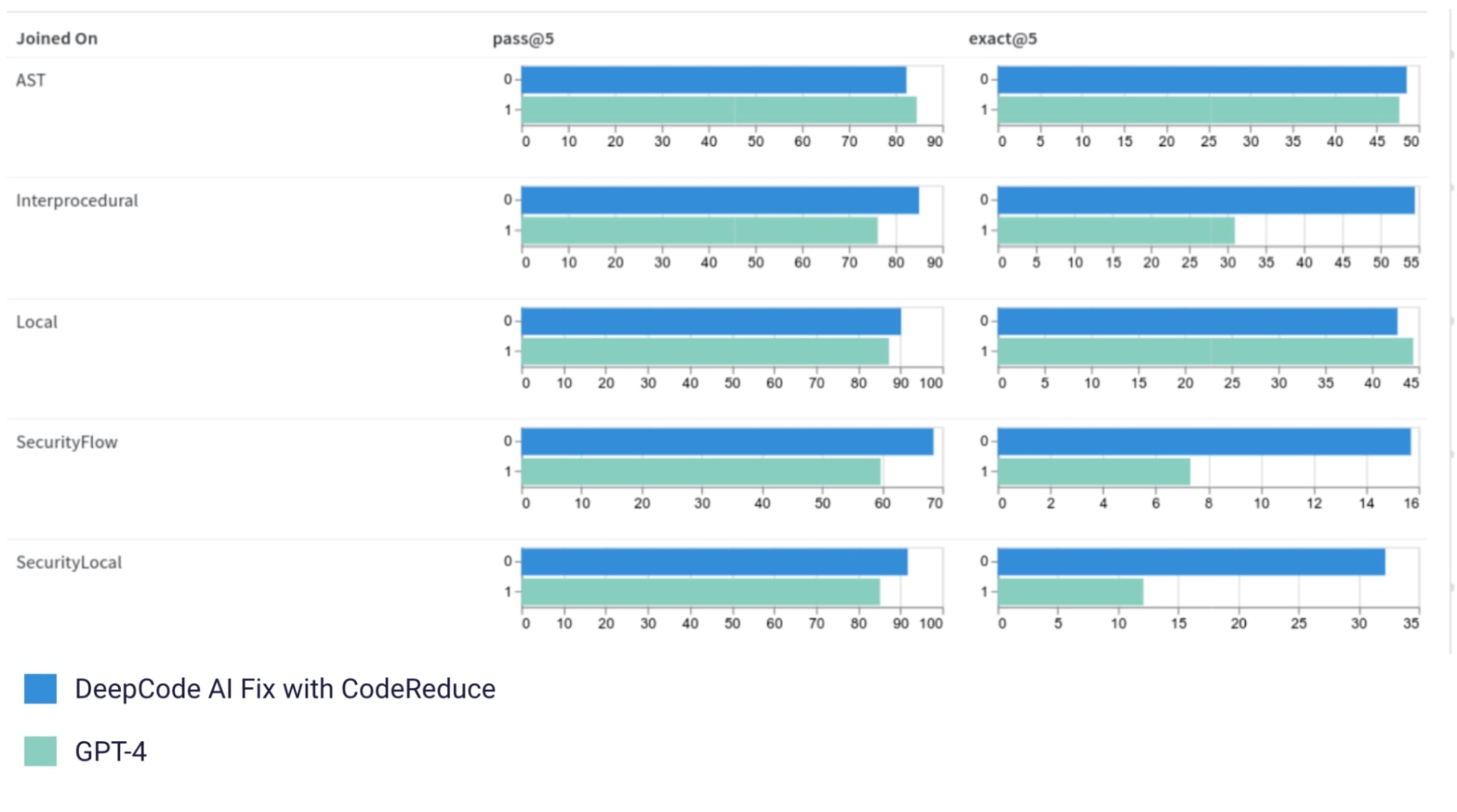

Snyk Agent Fix can automatically fix various kinds of security and coding issues. Per the rows in the image above, we classified these issues into 5 categories (AST, Interprocedural, Local, SecurityLocal, and SecurityFlow*) to summarize the evaluation results. For each issue encountered, Snyk Agent Fix generates 5 different fix candidates. The 3 columns in the image above (pass@1, pass@3, and pass@5) give the percentage of issues for which at least one accurate fix appears among 1, 3, and 5 fix candidates, respectively.
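To make the pass@k columns concrete, here is a minimal Python sketch of how such a metric could be computed. It assumes that an issue counts as solved at k when at least one of its first k candidates is accurate; the function name `pass_at_k` and the sample outcomes are hypothetical and are not taken from Snyk's evaluation.

```python
from typing import Sequence


def pass_at_k(results: Sequence[Sequence[bool]], k: int) -> float:
    """Percentage of issues with at least one accurate fix among the first k candidates.

    `results` holds one sequence per issue; each entry is True when that
    candidate fix was judged accurate.
    """
    if not results:
        raise ValueError("results must contain at least one issue")
    if k < 1:
        raise ValueError("k must be at least 1")
    solved = sum(1 for candidates in results if any(candidates[:k]))
    return 100.0 * solved / len(results)


# Hypothetical outcomes for four issues with five fix candidates each.
example = [
    [True, False, False, False, False],   # solved by the first candidate
    [False, False, True, False, False],   # solved by the third candidate
    [False, False, False, False, True],   # solved by the fifth candidate
    [False, False, False, False, False],  # never solved
]

for k in (1, 3, 5):
    print(f"pass@{k} = {pass_at_k(example, k):.1f}%")
```

With this sample data the three values are 25%, 50%, and 75%: looking at more candidates can only keep the score the same or raise it, which is why pass@5 is never lower than pass@1.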

To safeguard the accuracy of Snyk Agent Fix, all predictions are checked against our human-created rules and knowledge base (embodied in our symbolic AI). A prediction passes the checks if it produces syntactically correct, parseable code, and fixes the relevant security issue without introducing new ones. This is a unique Snyk differentiator: We use our own code analyzer and knowledge base, both powered by symbolic AI (read why this matters, here), to filter out potentially incorrect fixes and hallucinations generated by the LLM behind Snyk Agent Fix, before any such flawed fixes can reach our customers.
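The three pass criteria above (parseable code, the reported issue is gone, and no new issues appear) can be sketched as a simple filter. This is an illustration only, written for Python code: `toy_analyze` is a hypothetical stand-in for a real static analyzer, and the issue names are invented for the example. It does not represent Snyk Code's analysis.

```python
import ast
from typing import Callable, List, Sequence, Set

# Hypothetical analyzer interface: source code in, set of issue identifiers out.
Analyzer = Callable[[str], Set[str]]


def is_parseable(code: str) -> bool:
    """Return True if the candidate is syntactically valid Python."""
    try:
        ast.parse(code)
        return True
    except (SyntaxError, ValueError):
        return False


def passes_checks(original: str, candidate: str, target: str, analyze: Analyzer) -> bool:
    """A candidate passes if it parses, removes the target issue, and adds no new issue."""
    if not is_parseable(candidate):
        return False
    before = analyze(original)
    after = analyze(candidate)
    return target not in after and after <= before


def filter_candidates(original: str, candidates: Sequence[str],
                      target: str, analyze: Analyzer) -> List[str]:
    """Keep only the candidates that pass every check."""
    return [c for c in candidates if passes_checks(original, c, target, analyze)]


def toy_analyze(code: str) -> Set[str]:
    """Toy analyzer (assumption): flags direct calls to eval() and exec()."""
    flagged = {"eval": "code-injection", "exec": "exec-usage"}
    issues = set()
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in flagged:
                issues.add(flagged[node.func.id])
    return issues


original = "result = eval(user_input)\n"
candidates = [
    "result = eval(user_input",                             # does not parse
    "result = exec(user_input)\n",                          # introduces a new issue
    "result = eval(user_input.strip())\n",                  # issue still present
    "import ast\nresult = ast.literal_eval(user_input)\n",  # passes all checks
]

accepted = filter_candidates(original, candidates, "code-injection", toy_analyze)
print(accepted)
```

Only the last candidate survives the filter. The design point is that the checker is independent of the model that generated the candidates, so a flawed suggestion is rejected regardless of how plausible it looks.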

This ensures that Snyk Agent Fix is more robust and safer than other code-scanning auto-fixing solutions that solely rely on LLMs to fix, or even check and fix, issues. Detailed information on our evaluations and issue categories can be found in the research paper on Snyk’s CodeReduce technology (see below).

Groundbreaking, patent-pending technology that significantly improves the accuracy of even GPT-4

We also worked on improving the quality of results through improving how the user’s data (i.e. the relevant code) was processed into the prompt of the LLM behind Snyk Agent Fix. This proprietary technology by Snyk, called “CodeReduce”, is now patent-pending. CodeReduce leverages program analysis to focus the LLM’s attention mechanism on just the portions of code needed to perform the relevant fix, helping the LLM to zoom into a shorter snippet of code that contains the reported defect and the necessary code context.

In other words, Snyk is once again removing the noise and streamlining for efficient results with the most impact. CodeReduce drastically reduces the amount of code for Snyk Agent Fix to process, which in turn improves fix-generation quality for all tested models (including GPT-3.5, GPT-4, and Mixtral) and reduces the chances of hallucinations. In fact, we improved GPT-4’s accuracy by up to an impressive 20% (see the image below). We will be sharing more about how CodeReduce works soon, so stay tuned!
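The general idea of shrinking a file down to the defect and its necessary context can be illustrated with a toy Python sketch. This is not the CodeReduce algorithm, whose details are not described here. It is a deliberately naive stand-in that assumes "necessary context" means the file's imports plus the top-level definition containing the reported line. The function name `reduce_to_context` and the sample source are hypothetical.

```python
import ast


def reduce_to_context(source: str, defect_line: int) -> str:
    """Keep only import statements and the top-level node that contains defect_line.

    A naive illustration of context reduction; real program analysis would
    follow data and control dependencies rather than just enclosing scopes.
    """
    tree = ast.parse(source)
    kept = []
    for node in tree.body:
        is_import = isinstance(node, (ast.Import, ast.ImportFrom))
        contains_defect = node.lineno <= defect_line <= node.end_lineno
        if is_import or contains_defect:
            kept.append(ast.get_source_segment(source, node))
    return "\n\n".join(kept) + "\n"


SOURCE = '''import subprocess

def greet(name):
    return "Hello, " + name

def run(cmd):
    return subprocess.run(cmd, shell=True)

def unrelated():
    return 42
'''

# Suppose an analyzer reported a command-injection issue on line 7.
reduced = reduce_to_context(SOURCE, defect_line=7)
print(reduced)
```

The reduced snippet contains only the `import subprocess` line and the `run` function, so a model prompted with it sees far less unrelated code than it would with the full file.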

Support for 8 languages, and general improvements across rules and data

Aside from changing the underlying base model and improving Snyk Agent Fix’s focus for better quality results, we wanted to improve the breadth and depth of fix results, too. For this, we worked with our labeling team to enhance the quality of our fix database and verify whether the results Snyk Agent Fix generated were accurate or not, helping us to improve our model continuously.

The result of our multi-dimensional approach to improving Snyk Code’s auto-fixing feature was going from what was already hundreds of rules, but supported for just JavaScript/TypeScript (we count these as one language), to far more rules supported across a whopping 7 additional languages, with higher confidence in every rule. We now support, for OWASP Top 10 threats:

JavaScript/TypeScript

Java

Python

C/C++

C# (Limited support)

Go (Limited support)

APEX (Limited support)

There have been other improvements in the way that we gather and process data, and these have raised our LLM’s accuracy to an average of 80%, which makes it easier for our symbolic AI to verify the safety of our LLM’s suggested fixes, as described above. What does this mean for the user? Users can expect Snyk Agent Fix’s suggestions to resolve the relevant vulnerabilities 100% of the time, in less time than it used to take.

What’s next?

Despite the huge strides we have made in building a reliable auto-fixing feature, we intend to continue improving, optimizing, and iterating on our Snyk Agent Fix LLM. Additionally, we will be tackling the user experience in the IDE and beyond. What does this mean?

Model improvements for even faster and better fixes

LLMs are still evolving at a rapid pace, and new or improved models are being released all the time. StarCoder 2, a newer version of StarCoder, is now available, and we will be testing it. If our tests show that further improvements are achievable, we will likely switch to StarCoder 2. Apart from the actual LLM, we are also investing in:

Providing users with faster fixes by optimizing our neural model and server – reducing the latency of fix-generation from an average of ~12 seconds to under 6 seconds.

Extending rule coverage and improving fix quality for our primary languages: JavaScript/TypeScript, Java, Python, C/C++, and C#.

A streamlined IDE experience for effortless fixing

Now, developers using Snyk Agent Fix can see proposed fixes more easily, select from a variety of proposed fixes to choose the one that best suits the context of their needs, and preview potential changes to their code, all within the same flow, before the proposed fix is applied.

All these capabilities help developers to code faster, more effortlessly, and more safely, but what really assists developers (aside from Snyk Agent Fix’s accuracy) is our LLM’s understanding of code context. Unlike some other security tools that merely understand code as plain text, Snyk Agent Fix understands developers’ code, so it can fix code just like a developer would – by making edits with the highest chance of the code compiling, and by applying the single most impactful and efficient code fix that would address the security issues.

Users across all currently supported IDEs, including VS Code and Eclipse, will be able to experience streamlined, automatic vulnerability fixing. We’ll also be extending access to JetBrains IDE users.

A user-ready auto-fixing experience with trail-blazing accuracy, high security, and broad applicability

Over the last year, we have worked hard on addressing user feedback on Snyk Agent Fix and have made great strides in improving the breadth and depth of results as well as the overall quality of DeepCode AI’s automatic fixes. Users can now enjoy broader applicability, more accurate autofixing results, and safer fixes, all directly from within the IDE:

8 supported languages.

Our patent-pending CodeReduce technology increases auto-fixing accuracy for not just Snyk Agent Fix, but other AI models like GPT-4 as well.

Multiple AI models to reduce the inherent risks of LLMs and model homogeneity, with our LLM’s outputs checked by our static analysis engine and knowledge-based symbolic AI analyses, which filter out incorrect fixes and hallucinations generated by the model before they reach the end-user.

An auto-fixing feature that has been battle-tested in beta for as long as ours has delivers a superior user experience not just in theory, but in practice too. And despite being proud of our achievements, we do not intend to rest on our laurels. We will continue investing in improving Snyk Agent Fix’s results and the experience for our users.

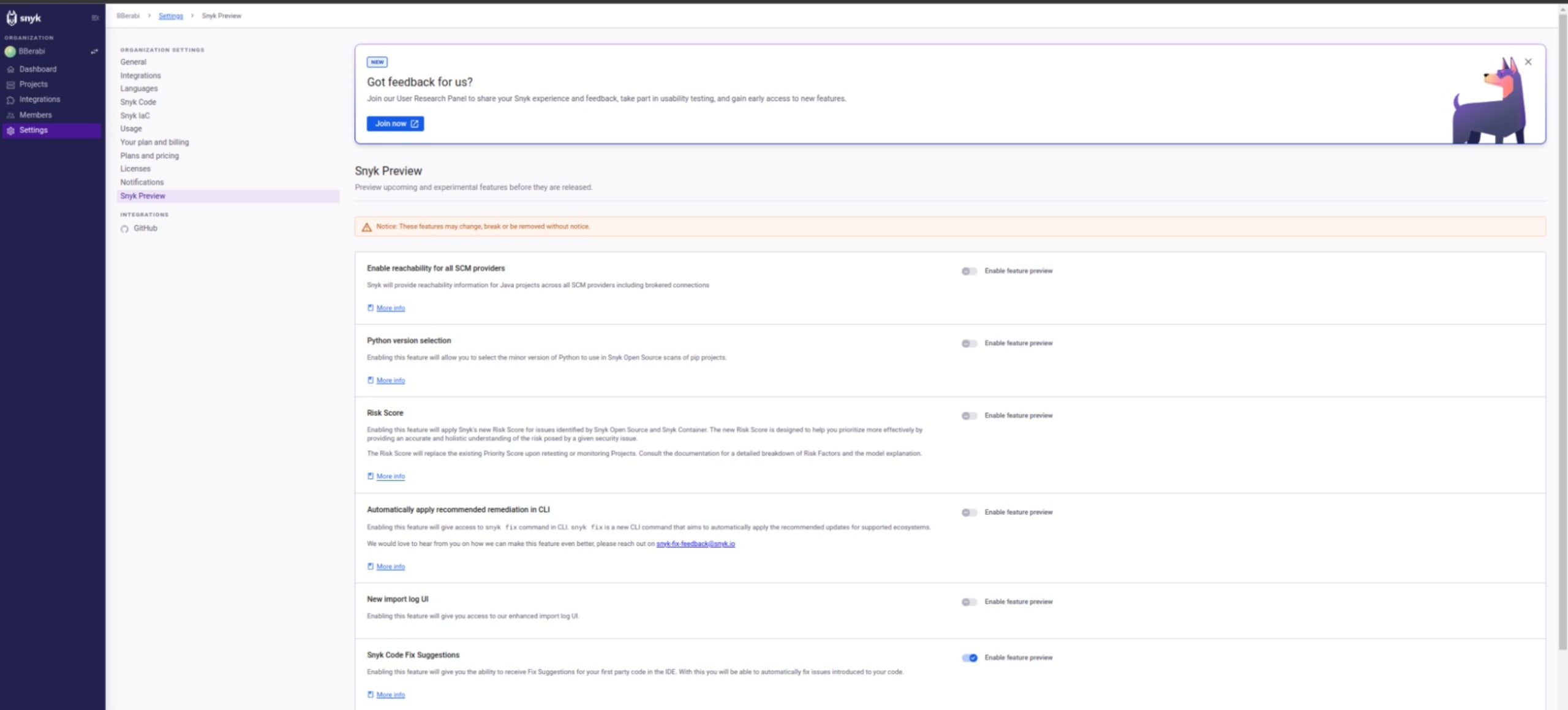

Existing Snyk Code customers can try out Snyk Agent Fix in the IDE by turning on Snyk Code Fix Suggestions in the Snyk Preview settings (see image below). More information is available in our documentation.

Haven’t got Snyk Code but want to start auto-fixing reliably at the speed of AI? Register for a Snyk account here and start experiencing a more proactive, easier, and more streamlined workflow, today.

*For its code analysis, Snyk Agent Fix uses Snyk Code. In the version as of September 2023, Snyk Code implements 156 different checks. We classified these into five categories with respect to what kind of analysis each of them needs and whether they check security properties, as follows:

AST: Most of the AST checks are ones that can be performed using the abstract syntax tree alone. Many of these rules enforce properties about the VueJS or React extensions of the language and include checks such as missing tags, duplicate variable names, and patterns that usually do not depend on analyzing the control or dataflow of the program.

Interprocedural: The Interprocedural checks also depend on interprocedural analysis, but in contrast to the Local checks, the properties that they check always stay in different functions or methods in the program. These rules include checks for mismatches between the signature of a method, its implementation or its usage. (Please note that Interprocedural is referred to as Filewide, in our published research on the new CodeReduce technology.)

Local: The Local checks use interprocedural analysis to discover that certain incorrect values would flow into methods that would not accept them, to discover that resources are not deallocated or that certain expressions have no effect or always produce results with unexpected semantics.

SecurityLocal: Similar to the Local checks, SecurityLocal checks verify incorrect API usage from the security perspective as well as other types of security misconfigurations, typically by tracking the set of all method calls on an object or by tracking the values passed as parameters to method or function calls.

SecurityFlow: The SecurityFlow checks are usually the most complex checks supported by the selected static analyzer. This rule category includes all taint analysis rules that involve interprocedural dataflow analysis starting from a data source as well as the detection of various data sanitization patterns.