Securing the Agent Skill Ecosystem: How Snyk and Vercel Are Locking Down the New Software Supply Chain

February 17, 2026

0 mins readIf you've been paying attention to the AI developer tools space lately, you've probably noticed something big happening: AI agents are no longer just chatbots that help you write code. They're evolving into full-blown autonomous systems that can read your email, deploy your applications, manage your infrastructure, and execute arbitrary shell commands on your machine. And with that evolution, there’s a tremendous opportunity to accelerate agentic innovation if the industry can simultaneously solve the software supply chain risk.

Today, I'm excited to share that we've partnered with Vercel to bring Snyk's security intelligence directly into their new skills.sh marketplace—the open agent skills ecosystem that's rapidly becoming the standard library for AI agents. Every time a new agent skill is installed using the npx skills installer, Vercel's infrastructure calls out to Snyk's high-throughput API to perform deep security analysis on the skill before it ever reaches a developer's machine.

But before we get into the nuts and bolts of how this integration works, let me give you some context on why this matters so much.

The rise of agent skills (and why you should care)

On January 20, 2026, Vercel released skills, a CLI tool and open ecosystem for installing and managing skill packages for AI agents. Think of it like npm, but instead of JavaScript libraries, you're installing capabilities that your AI agent can execute on your behalf. The skills.sh directory acts as the central registry and leaderboard for these packages.

The adoption has been staggering. Within six hours of launch, the top skill had over 20,000 installs. The find-skills utility alone has racked up 235,000+ weekly installs. Major players like Stripe shipped their own skills within hours. The ecosystem now supports a huge range of agents like Claude Code, Cursor, Windsurf, Goose, GitHub Copilot, Gemini CLI, and many more.

Here's the thing, though: when you run npx skills add <package>, you're not just installing a library. You're granting an AI agent the capability to execute logic on your behalf.

That's a fundamentally different trust model than anything we've dealt with in traditional package management. A skill is more than just code that sits in your node_modules directory waiting to be imported, it's code and natural language instructions that an autonomous agent will interpret and act on, potentially with access to your file system, your environment variables, your API keys, and your production infrastructure. This is precisely why the partnership between Snyk and Vercel is so powerful.

How the Snyk + Vercel integration works

Vercel is introducing a Security Leaderboard at skills.sh to give developers immediate transparency into the security posture of the skills they install. Snyk is a primary launch partner for this initiative, providing the automated security auditing engine that evaluates skill submissions at scale.

Here's the architecture at a high level:

Submission-time scanning: When a new agent skill is installed using the

npx skillsinstaller, Vercel's infrastructure triggers a call to Snyk's high-throughput scanning API. This happens automatically, with no action required from the skill author or the end user.Deep multi-layer analysis: Our scanner doesn't just grep for suspicious strings. We built this on the agent-scan scanning engine (also known as mcp-scan), which combines multiple customized LLM-based judges with deterministic rules. This dual approach is essential because agent skills are hybrid artifacts — you need traditional code analysis for the executable components, but you also need language understanding to catch prompt injection and natural language malware that hides in the SKILL.md instructions.

Contextual understanding: We analyze both the execution logic and the natural-language instructions to detect what we call "toxic flows", scenarios in which a seemingly benign prompt can be used to trigger a malicious action. A classic static analysis tool would look at the code and see nothing wrong. Our system understands the intent of the instructions and how they interact with the agent's capabilities.

Security verification signals: The scan results are surfaced directly on the skill's page via a "Security Verified" badge, giving the community an at-a-glance signal that the skill has been rigorously checked for malicious patterns across all 8 of our security policy categories.

Continuous monitoring: This isn't a one-time check. Skills are re-evaluated as our detection capabilities improve and as new threat patterns emerge. The threat landscape moves fast, and our scanning infrastructure is designed to keep pace.

The key technical innovation here is that our CRITICAL-level detectors achieve 90-100% recall on confirmed malicious skills while maintaining a 0% false positive rate on the top 100 legitimate skills from skills.sh. That kind of discriminative power is what makes it possible to deploy this as an automated gate at submission time — you can block truly malicious skills without creating friction for legitimate developers.

We scanned 3,984 agent skills. Here's what we found.

At Snyk, we've been investing heavily in agentic AI security research, powered by our acquisition of Invariant Labs — a globally recognized AI security research firm spun out of ETH Zurich that pioneered work on tool poisoning, MCP vulnerabilities, and runtime detection for agentic systems.

As part of this investment, our research team recently completed the first comprehensive security audit of the AI agent skill ecosystem. We analyzed 3,984 skills from major marketplaces like skills.sh, and published our findings in a detailed technical report: Exploring the Emerging Threats of the Agent Skill Ecosystem.

Why this matters for the broader ecosystem

If you've been in the software industry long enough, this whole situation probably feels familiar. The current state of the agent skill ecosystem mirrors the early days of npm and PyPI: explosive growth, community enthusiasm, and a security posture that hasn't caught up with the adoption curve. We've seen this movie before with dependency confusion attacks, typosquatting campaigns, and protestware in traditional package registries.

But agent skills differ from traditional packages in several critical ways. First, the blast radius is larger. Traditional npm packages can run code, but they typically execute within a relatively constrained environment. An agent skill, on the other hand, inherits all the permissions of the agent itself — and modern agents often have access to your filesystem, your shell, your API keys, your email, your cloud infrastructure, and more.

Second, the attack surface is novel. Agent skills introduce natural language as an attack vector. You can't detect prompt injection with traditional SAST tools. You can't catch "toxic flows" with regex patterns.

Third, the trust model is inverted. With traditional packages, the developer makes a conscious decision to import and call specific functions. With agent skills, the agent decides when and how to use a skill based on natural language instructions. The human is one step removed from the execution decision, which means the agent becomes the new attack target.

This is why Snyk has been investing so heavily in this space. We acquired Invariant Labs specifically because their team — spun out of ETH Zurich's computer science department — had built the foundational technology for understanding and securing agentic systems. Their Guardrails technology provides a transparent security layer at the LLM and agent level, combining contextual information, static scans, runtime monitoring, human annotations, and incident databases into a unified security fabric.

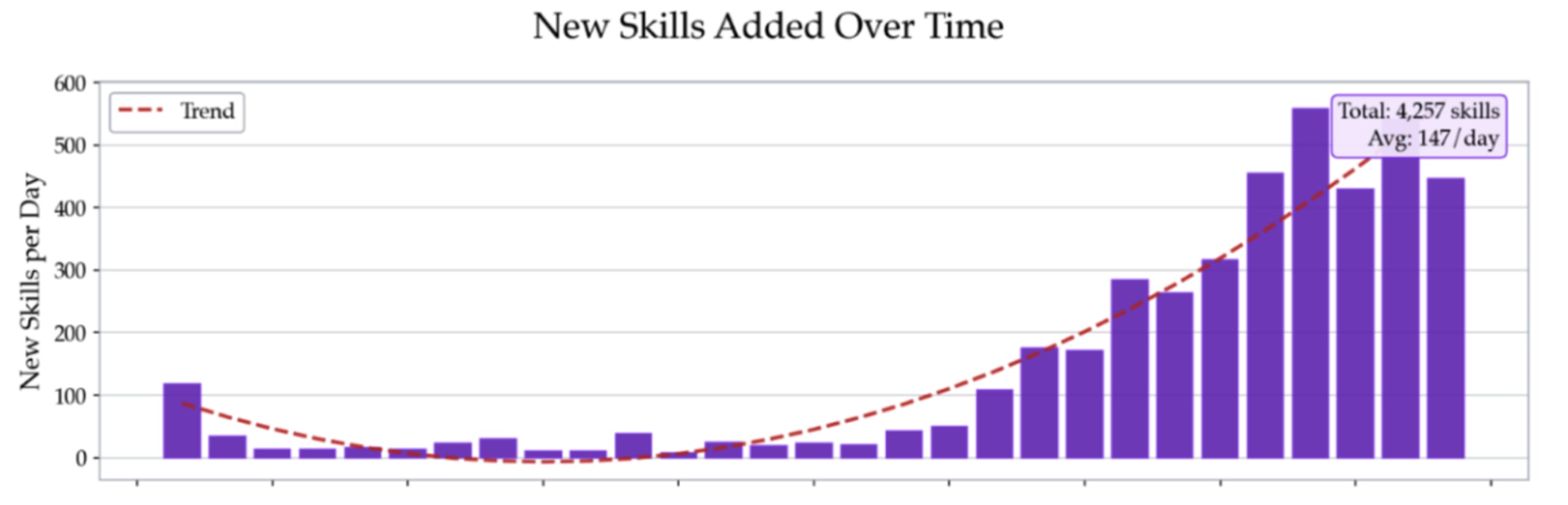

The partnership with Vercel is a natural extension of this work. As the skills ecosystem grows — and it's growing at an average of 147 new skills per day — the only viable strategy is to build security directly into the platform infrastructure. Manual review doesn't scale. Community reporting is too slow. You need automated, continuous, comprehensive scanning that understands both code and language, deployed at the point of submission.

What you can do right now

The good news is that you don't have to wait for someone else to secure your agentic workflows. We've made our scanning technology available through several channels, and they're all free to use today.

Try the Agent Scan - Skill Inspector: This is the same scanning engine that powers the Vercel integration, packaged as a self-service website. Paste in a skill and get an immediate security assessment across all 8 of our threat categories. It's free, it's fast, and it's a great way to vet skills before you install them.

Use the Agent Scan CLI on GitHub: For developers who prefer the command line (and let's be honest, that's most of us), our open source mcp-scan tool can auto-discover your MCP configurations, agent tools, and installed skills, then scan them for prompt injections, malicious code, suspicious downloads, and more. You can also use it as a proxy to monitor and guardrail MCP traffic in real-time. Run uvx mcp-scan@latest --skills to scan your installed skills right now.

Explore Snyk Labs: Our research arm is where we publish new experiments, tools, and findings. If you're interested in the cutting edge of AI security research — from toxic flow analysis to MCP server vulnerability detection — this is where you want to be.

For enterprise teams: Evo by Snyk operationalizes our Labs innovations into enterprise-ready agentic capabilities that integrate directly into the Snyk AI Security platform. Evo provides the visibility, policy enforcement, and orchestration needed to govern AI systems across environments. Skill support in Evo is coming soon.

And if you want the full technical details on our research methodology and findings, including documented examples of real-world malicious skills, check out our technical report: Exploring the Emerging Threats of the Agent Skill Ecosystem.

The bottom line

The agent skill ecosystem is growing fast — 4,257 skills and counting, with an average of 147 new ones published every day. That growth is exciting, and it's going to unlock incredible productivity gains for developers. But it also means the attack surface is expanding just as quickly.

At Snyk, we believe that security has to be a first-class citizen in every software ecosystem, and agent skills are no exception. The partnership with Vercel is a step toward making "secure by default" the standard for the agentic era, not an afterthought.

We're in the early innings of this space. The threats are real, the stakes are high, and the technology to defend against these attacks is still evolving. But if there's one thing I've learned from two decades of working in security, it's that the earlier you build security into the foundation, the better off everyone is.

So go scan your skills. Audit your agent configurations. And if you find something sketchy, let us know. We're all in this together.

GUIDE

Unifying Control for Agentic AI With Evo By Snyk

Evo by Snyk gives security and engineering leaders a unified, natural-language orchestration for AI security. Discover how Evo coordinates specialized agents to deliver end-to-end protection across your AI lifecycle.