OpenRouter in Python: Use Any LLM with One API Key

Building AI applications often requires juggling multiple API keys, learning different service patterns, and dealing with various authentication methods. What if you could access dozens of top-tier LLMs with just one API key? That's the promise of OpenRouter, and in this article, I'll show you the simplest way to use it in Python. In upcoming articles, we will also demonstrate how OpenRouter can synergize with LangChain, and how to use OpenRouter with LangChain for Tool Calling. Source code for this article is available here.

What is OpenRouter?

OpenRouter acts as a unified gateway to the world's leading language models, including both commercial models and open-source ones. It lets you access models from OpenAI, Anthropic, Google, Meta, Mistral, and other providers through a single, standardized API.

Instead of creating separate accounts for each provider and juggling multiple SDKs, OpenRouter offers an interface that lets you access any large language model from any provider with just a single line of code. This can meaningfully simplify your development workflow if you are building apps that will use multiple different LLMs, or if you are just trying to experiment with different models to see which suits your use case best.

Why use OpenRouter?

We have mentioned some benefits: it’s a single API to access dozens of models, such as GPT-4, Claude, Gemini, Llama, and many others. Moreover, it offers:

Best-Model Routing: OpenRouter offers several ways to route a prompt to different models. For example, setting "model" to "openrouter/auto" lets the Auto Router choose the model it judges best suited to your specific prompt.

Reliability & Redundancy: OpenRouter provides failover capabilities, allowing your application to fall back to other providers when one goes down.

OpenAI-compatible API: If you already use OpenAI's SDK, you can switch to OpenRouter with minimal code changes.
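To make the "single, standardized API" point concrete, here is a minimal sketch that builds (but does not send) a raw chat completion request using only the standard library. The helper name build_chat_request and the placeholder key are illustrative assumptions; the endpoint path follows the OpenAI-style /chat/completions convention on top of the base URL used later in this article.

```python
import json
import urllib.request

OPENROUTER_CHAT_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key, model, messages):
    """Build (but do not send) a chat completion request for OpenRouter."""
    payload = {"model": model, "messages": messages}
    return urllib.request.Request(
        OPENROUTER_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# "openrouter/auto" asks OpenRouter to choose the model for this prompt.
request = build_chat_request(
    api_key="sk-or-placeholder",  # placeholder, not a real key
    model="openrouter/auto",
    messages=[{"role": "user", "content": "Summarize HTTP in one sentence."}],
)
# Sending it would be: urllib.request.urlopen(request)
```

Switching models means changing only the "model" string; the URL, headers, and message format stay the same.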

Bare-bones OpenRouter implementation

This is the simplest way to use OpenRouter in Python. The code in openrouter_simple.py provides a minimal example that gets you up and running quickly. Here's the full script:

"""
Minimal example for using OpenRouter with the OpenAI client directly.
This is the simplest way to get started with OpenRouter.
"""
import os
import pathlib

from dotenv import load_dotenv
from openai import OpenAI

# Get the current directory and load .env file
current_dir = pathlib.Path(__file__).parent.absolute()
env_path = current_dir / '.env'
load_dotenv(dotenv_path=env_path)

# Get API key from environment
api_key = os.environ.get("OPENROUTER_API_KEY")

# Check if API key is available
if not api_key:
    raise ValueError("OpenRouter API key not found. Please set OPENROUTER_API_KEY in your .env file.")

# Create a client for OpenRouter
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key=api_key,
    default_headers={"HTTP-Referer": "http://localhost:5000"},  # Optional: identifies your app to OpenRouter
)

# Send a message to the model
response = client.chat.completions.create(
    model="openai/gpt-3.5-turbo",  # You can change this to any supported model
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello, who are you?"},
    ],
)

# Display the response
print(response.choices[0].message.content)

This script contains everything you need to start using OpenRouter. You can think about it in four stages.

1. Environment Setup

The script begins by loading your API key from a .env file, which keeps your credentials out of the source code:

# Get the current directory and load .env file
current_dir = pathlib.Path(__file__).parent.absolute()
env_path = current_dir / '.env'
load_dotenv(dotenv_path=env_path)

# Get API key from environment
api_key = os.environ.get("OPENROUTER_API_KEY")

# Check if API key is available
if not api_key:
    raise ValueError("OpenRouter API key not found. Please set OPENROUTER_API_KEY in your .env file.")

You'll need to create a .env file with your OpenRouter API key:

OPENROUTER_API_KEY=your_openrouter_api_key

You can get an API key by signing up at openrouter.ai.
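If you want the key check to be reusable and easy to test, one option is to wrap it in a small function that accepts any mapping. This is a sketch rather than part of the original script; the name get_openrouter_key is hypothetical, and stripping whitespace is an added precaution against stray spaces in the .env file.

```python
import os


def get_openrouter_key(env=None):
    """Return the OpenRouter API key from a mapping, or raise if it is missing."""
    env = os.environ if env is None else env
    # Treat a missing, empty, or whitespace-only value as "not set".
    key = (env.get("OPENROUTER_API_KEY") or "").strip()
    if not key:
        raise ValueError(
            "OpenRouter API key not found. Please set OPENROUTER_API_KEY in your .env file."
        )
    return key


# Passing a plain dict stands in for the real environment in tests.
print(get_openrouter_key({"OPENROUTER_API_KEY": "  sk-or-placeholder  "}))
```

Calling get_openrouter_key() with no argument reads from os.environ, so it still works after load_dotenv() has run.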

2. OpenAI Client Configuration

The magic happens in these few lines:

# Create a client for OpenRouter
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key=api_key,
    default_headers={"HTTP-Referer": "http://localhost:5000"},  # Optional: identifies your app to OpenRouter
)

Notice how we're using the standard OpenAI client, but pointing it to OpenRouter's API endpoint instead. This works because OpenRouter exposes an OpenAI-compatible API.

The HTTP-Referer header is optional. OpenRouter uses it to identify where the request comes from, for example to attribute usage to your app. In a production environment, you would set this to your application's URL.
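A small helper can keep these attribution headers in one place. The sketch below is an illustration, not part of the original script: the function name openrouter_headers is hypothetical, and the X-Title header (an app display name) is included on the assumption that OpenRouter still accepts it alongside HTTP-Referer, so check the current documentation before relying on it.

```python
def openrouter_headers(site_url=None, app_name=None):
    """Build attribution headers for OpenRouter, skipping any that are unset."""
    headers = {}
    if site_url:
        headers["HTTP-Referer"] = site_url  # where the request comes from
    if app_name:
        headers["X-Title"] = app_name  # assumed display-name header
    return headers


print(openrouter_headers("https://myapp.example", "My App"))
# With the OpenAI client, the result would be passed as:
#   OpenAI(base_url=..., api_key=..., default_headers=openrouter_headers(...))
```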

3. Model Selection and Request

When making a request, you specify which model to use:

# Send a message to the model
response = client.chat.completions.create(
    model="openai/gpt-3.5-turbo",  # You can change this to any supported model
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello, who are you?"},
    ],
)

The model identifier follows the format provider/model-name. Some examples include:

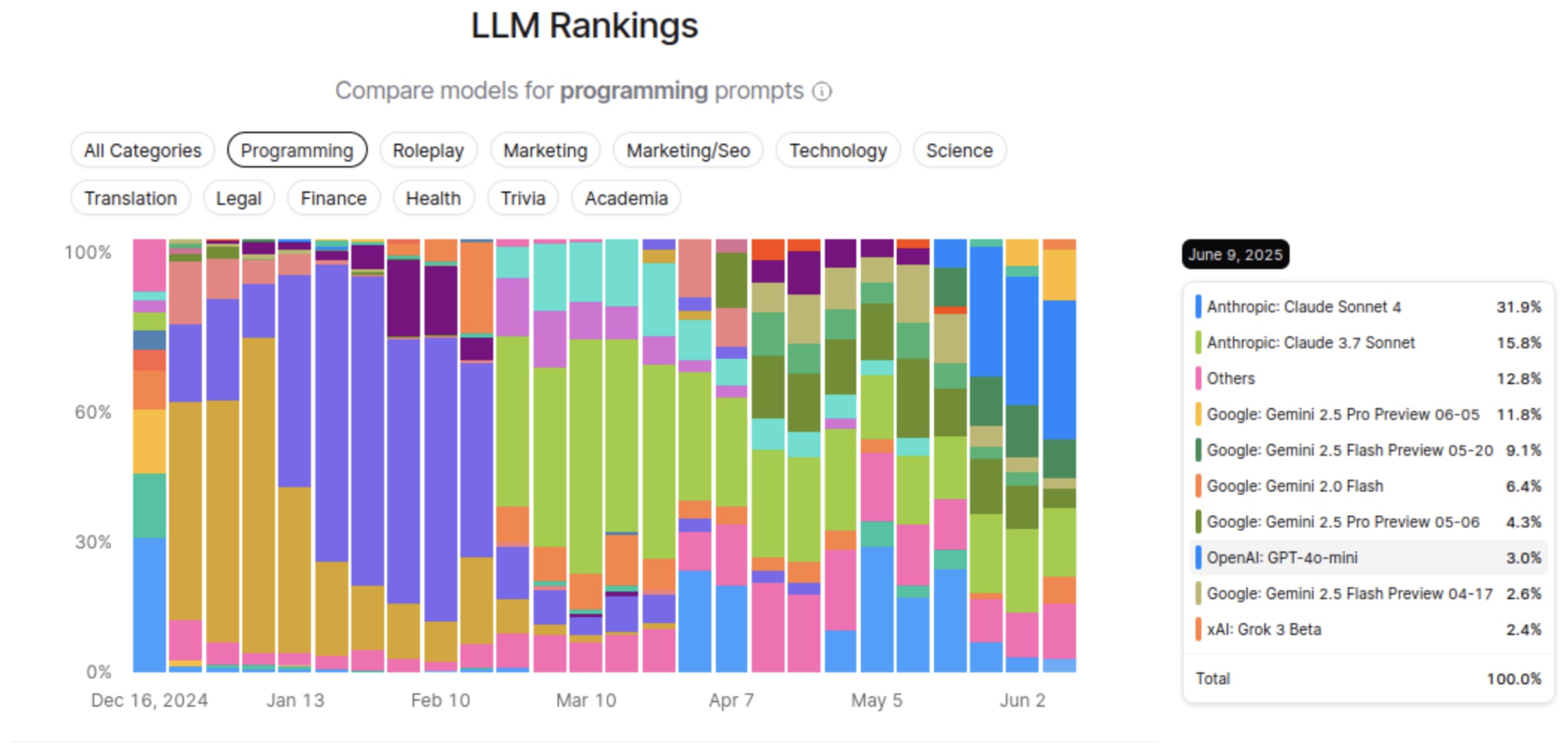

openai/gpt-4o-2024-11-20 - OpenAI's GPT-4o (Omni), pinned to a dated snapshot

anthropic/claude-sonnet-4 - Anthropic's Claude 4 Sonnet

google/gemini-2.5-pro-preview - Google's Gemini Pro 2.5 (Preview)

meta-llama/llama-4-maverick - Meta's Llama 4 Maverick 17B Instruct

At times, OpenRouter gives you access to models you might not normally have. One example was earlier access to premium models like GPT-4 32K. The full list of available models can be found at openrouter.ai/models.
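Because identifiers follow the provider/model-name format, it is easy to validate them or group models by provider before sending a request. The following is a minimal sketch; split_model_id is a hypothetical helper, not an OpenRouter function.

```python
def split_model_id(model_id):
    """Split 'provider/model-name' into (provider, model_name)."""
    provider, sep, name = model_id.partition("/")
    if not sep or not provider or not name:
        raise ValueError(f"Expected 'provider/model-name', got {model_id!r}")
    return provider, name


for model_id in [
    "openai/gpt-4o-2024-11-20",
    "anthropic/claude-sonnet-4",
    "meta-llama/llama-4-maverick",
]:
    provider, name = split_model_id(model_id)
    print(f"{provider:12} {name}")
```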

4. Processing the Response

Finally, we extract and display the response:

# Display the response
print(response.choices[0].message.content)

The response structure follows the same format as the OpenAI API, making it easy to integrate with existing code.
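In application code you may want to guard against a response with no choices or with empty content instead of indexing directly. Here is a sketch using a stand-in object shaped like a chat completion response; the helper name first_message_text is hypothetical, and no API call is made.

```python
from types import SimpleNamespace


def first_message_text(response):
    """Return the first choice's text, or an empty string if there is none."""
    choices = getattr(response, "choices", None) or []
    if not choices:
        return ""
    return choices[0].message.content or ""


# Stand-in object shaped like a chat completion response (not a real API call).
fake_response = SimpleNamespace(
    choices=[SimpleNamespace(message=SimpleNamespace(content="Hello!"))]
)
print(first_message_text(fake_response))
```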

OpenRouter features and options

While the basic example above gets you started quickly, OpenRouter offers many additional features:

Model routing control

OpenRouter offers several options for controlling which underlying provider your request is routed to. These go in a "provider" object in the request body, which the OpenAI client lets you pass through its extra_body argument:

# Provider preference ordering
response = client.chat.completions.create(
    model="meta-llama/llama-3.1-8b-instruct",
    messages=[{"role": "user", "content": "Hello!"}],
    extra_body={"provider": {"order": ["together", "fireworks", "perplexity"]}},
)

# Quantization preferences (only for open-source models)
response = client.chat.completions.create(
    model="meta-llama/llama-3.1-8b-instruct",  # Must use open-source model
    messages=[{"role": "user", "content": "Hello!"}],
    extra_body={"provider": {"quantizations": ["fp8"]}},
)

# Fallback control (True lets OpenRouter try other providers if the preferred ones fail)
response = client.chat.completions.create(
    model="meta-llama/llama-3.1-8b-instruct",
    messages=[{"role": "user", "content": "Hello!"}],
    extra_body={"provider": {"allow_fallbacks": True}},
)

# Data collection control
response = client.chat.completions.create(
    model="openai/gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Hello!"}],
    extra_body={"provider": {"data_collection": "deny"}},
)

The quantization example, for instance, restricts routing to providers that support fp8 quantization for that model.
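These options can also be combined in a single request. One way to keep call sites tidy is a small builder that includes only the options you set. This is a sketch: provider_preferences is a hypothetical helper, and it covers only the four keys shown in this article.

```python
def provider_preferences(order=None, allow_fallbacks=None,
                         quantizations=None, data_collection=None):
    """Build an extra_body dict, keeping only the options that were set."""
    options = {
        "order": order,
        "allow_fallbacks": allow_fallbacks,
        "quantizations": quantizations,
        "data_collection": data_collection,
    }
    # Drop unset options so OpenRouter's defaults apply to them.
    provider = {key: value for key, value in options.items() if value is not None}
    return {"provider": provider} if provider else {}


extra_body = provider_preferences(
    order=["together", "fireworks"],
    allow_fallbacks=False,
    data_collection="deny",
)
print(extra_body)
# Would be used as: client.chat.completions.create(..., extra_body=extra_body)
```

Comparing against None rather than truthiness matters here, because allow_fallbacks=False is a meaningful setting that must not be dropped.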

Response streaming

OpenRouter supports streaming responses, which is useful for chat applications:

stream = client.chat.completions.create(
    model="anthropic/claude-3-sonnet",
    messages=[
        {"role": "user", "content": "Tell me about renewable energy benefits"}
    ],
    stream=True,
)

for chunk in stream:
    # Skip chunks that carry no choices or an empty delta
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end="", flush=True)

Vision language models

OpenRouter supports multimodal models that can process images:

import base64

# Method 1: Using local image files
def encode_image_to_base64(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')

base64_image = encode_image_to_base64("your_image.png")

response = client.chat.completions.create(
    model="openai/gpt-4o",  # GPT-4o works reliably for vision
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "What's in this image?"},
                {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{base64_image}"}},
            ],
        }
    ],
)

# Method 2: Using image URLs
response = client.chat.completions.create(
    model="openai/gpt-4o",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "What's in this image?"},
                {"type": "image_url", "image_url": {"url": "https://example.com/image.jpg"}},
            ],
        }
    ],
)

Pricing and cost management

OpenRouter operates on a credit-based system where different models consume varying amounts of credits per request. Free credits are available for testing, but you'll need to purchase additional credits for production use.

You can monitor your usage through the OpenRouter dashboard and set credit limits for API keys to control spending.
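For a rough feel of how usage translates into spend, you can estimate the cost of a request from its token counts. The sketch below assumes prices are quoted per million tokens, with separate rates for prompt and completion tokens; the prices used are made-up placeholders, not real OpenRouter rates, and estimate_cost_usd is a hypothetical helper.

```python
def estimate_cost_usd(prompt_tokens, completion_tokens,
                      prompt_price_per_million, completion_price_per_million):
    """Estimate request cost from token counts and per-million-token prices."""
    prompt_cost = prompt_tokens / 1_000_000 * prompt_price_per_million
    completion_cost = completion_tokens / 1_000_000 * completion_price_per_million
    return prompt_cost + completion_cost


# Hypothetical prices: $0.50 per million prompt tokens, $1.50 per million completion tokens.
cost = estimate_cost_usd(
    prompt_tokens=1_200,
    completion_tokens=300,
    prompt_price_per_million=0.50,
    completion_price_per_million=1.50,
)
print(f"Estimated cost: ${cost:.6f}")
```

With the OpenAI client, the token counts would typically come from response.usage.prompt_tokens and response.usage.completion_tokens.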

Quick start

To use the code in this article:

Sign up for an OpenRouter account at openrouter.ai

Create an API key at openrouter.ai/settings/keys

Set up a Python environment with the required packages:

pip install openai python-dotenv

Create a .env file with your API key:

OPENROUTER_API_KEY=your_openrouter_api_key

Run the example script:

python openrouter_simple.py

Wrapping things up

OpenRouter fills a gap in the AI ecosystem by acting as a unified marketplace for language models. The beauty of the implementation I've shown lies in its simplicity: with just a few lines of code, you can switch between dozens of leading models without changing your application's structure.

For developers building AI applications, this means you can:

Test different models without committing to a single provider

Use the best model for each specific task

Implement fallbacks for reliability

Optimize costs by selecting the most cost-effective model for each use case
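The fallback idea can also be applied on the client side, across models rather than providers. The following sketch tries a list of models in order and returns the first successful result. It takes the API call as a function so it can be exercised without network access; complete_with_fallback and fake_call are hypothetical names, and the outage is simulated.

```python
def complete_with_fallback(models, call_model):
    """Try each model in order; return (model, result) for the first success."""
    errors = {}
    for model in models:
        try:
            return model, call_model(model)
        except Exception as exc:  # broad on purpose: any failure moves to the next model
            errors[model] = exc
    raise RuntimeError(f"All models failed: {errors}")


# Stand-in for a real API call: the first model "fails", the second answers.
def fake_call(model):
    if model == "openai/gpt-3.5-turbo":
        raise TimeoutError("simulated outage")
    return f"answer from {model}"


used, answer = complete_with_fallback(
    ["openai/gpt-3.5-turbo", "anthropic/claude-3-sonnet"], fake_call
)
print(used, "->", answer)
```

With a real client, call_model could be a function that calls client.chat.completions.create(model=model, messages=...) and returns the response.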

The included openrouter_simple.py script gives you the foundation. From there, you can build robust applications that leverage the full power of modern language models without the complexity of managing multiple APIs.

Secure your AI implementations with Snyk

As you build applications with OpenRouter and other AI tools, security becomes a critical concern. Snyk offers solutions specifically designed for the emerging AI ecosystem.

Snyk AI Security Platform

Snyk's AI Security Platform is a solution to address the unique security challenges of AI-driven development. This platform helps developers build secure AI applications while maintaining the fast pace that AI enables.

The AI Security Platform is designed to empower organizations to accelerate AI-driven innovation, mitigate business risk, and secure agentic and generative AI. This is especially important as studies suggest that nearly half of all AI-generated code contains security vulnerabilities.

Snyk's AI Security Platform allows teams to develop fast and stay secure in an AI-enabled reality, reducing human effort while improving security policy and governance efficiency. It's built upon Snyk's comprehensive testing engines, powered by DeepCode AI and informed by Snyk's industry-leading vulnerability database.

Snyk Secure Developer Program for Open Source

If you're working on open source projects that use AI technologies like OpenRouter, Snyk's Secure Developer Program offers valuable resources. Launched in February 2025, this program is designed to strengthen security in the open source ecosystem. It offers first-of-its-kind support to open source communities, helping them build enterprise-level security programs that find and fix vulnerabilities in code quickly.

The program provides qualifying open source projects with:

Enterprise-grade security tools: Full access to Snyk's premium security platform at no cost

Expert support: Hands-on assistance from Snyk's developer relations team

Community resources: Access to Snyk's Discord community and Partner Connect

API access: Integration capabilities for automating security checks

Snyk offers these leading developer security tools 100% free to qualifying open source projects. To qualify, your project must not be backed by a corporate entity and must have at least 10,000 GitHub stars.

By combining the flexibility of OpenRouter with Snyk's security tools, you can build AI applications that are both powerful and secure. This approach is especially valuable as AI development accelerates, and new security challenges emerge.

Learn more about these offerings and apply for the Secure Developer Program.
