From Hype to Trust: Building the Foundations of Secure AI Development

July 21, 2025

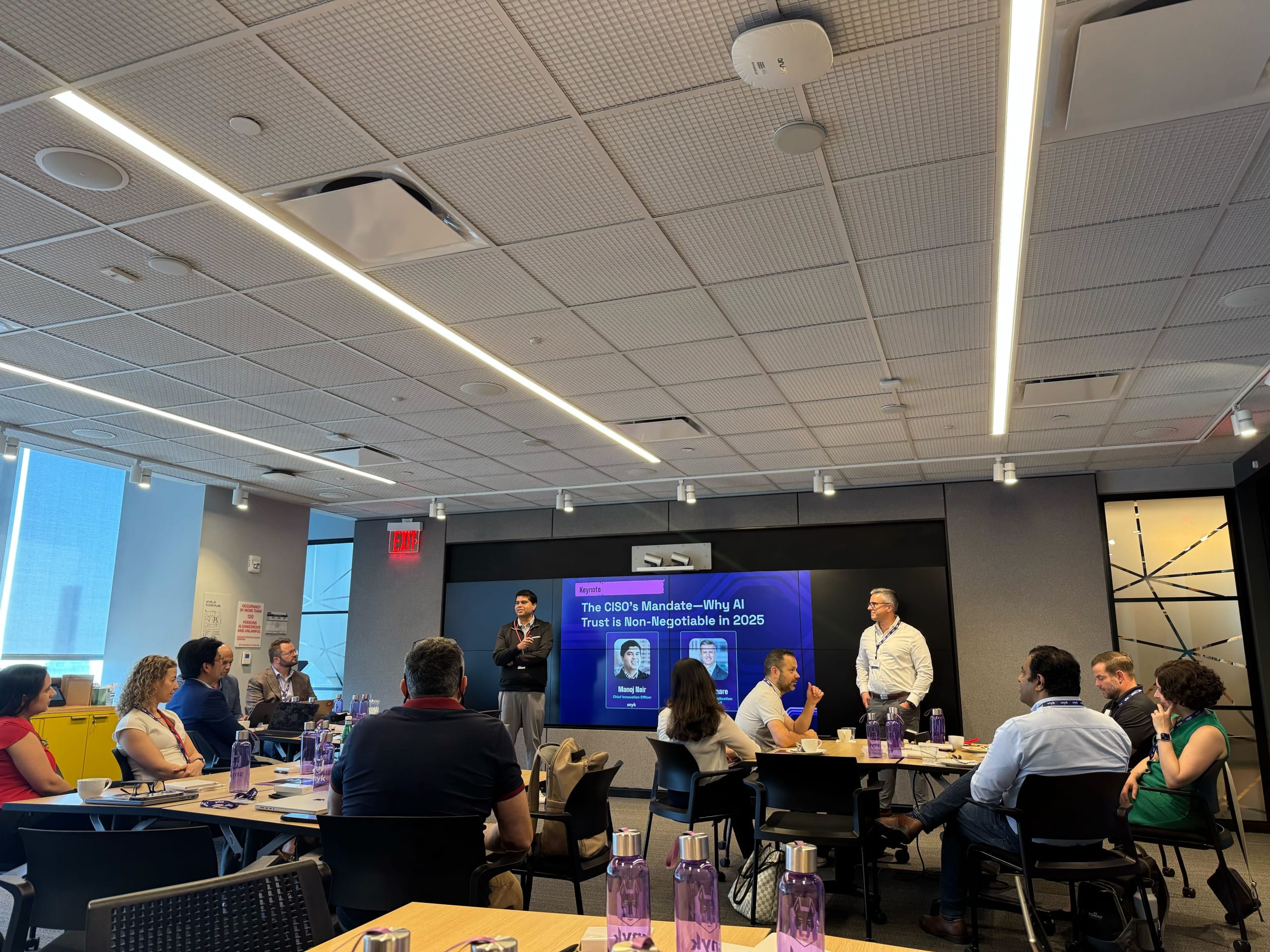

Generative AI and Agentic AI are changing everything from who writes software to how we define secure architecture. At Snyk’s recent Lighthouse event in NYC, leaders from cloud, security, and development teams came together to answer one essential question: how do we move fast with AI without breaking trust? The answer? Start with visibility, bake in security by design, and never lose sight of the humans behind the code.

The real shift: AI isn’t just a toolset. It’s a business model.

Held at Accenture’s Manhattan office with a skyline as bold as the conversation, this invite-only gathering felt less like a tech event and more like a leadership summit. Voices from across the industry, including CISOs, platform engineers, and leading developers, shared how AI is reshaping not just their tech stacks, but their org charts, workflows, and boardroom conversations.

There was a clear throughline: AI security isn’t optional. It’s foundational.

Manoj Nair, Chief Innovation Officer for Snyk, outlined some of the challenges of AI-generated code. AI code assistants, trained on existing codebases, can perpetuate bad habits and introduce vulnerabilities; one customer reported that vulnerabilities doubled in a group using Copilot.

As John Delmare, Managing Director at Accenture, noted, “AI must be treated as a business transformation. One that includes security from the start.” And if your organization isn’t operationalizing trust today, you’ll soon be playing catch-up.

AI Trust: The new baseline for secure innovation

Forget static code and rule-based engines. AI-native apps are here, and they don’t behave like the software we’re used to. They generate, reason, react, and interact with users in real time.

The bottom line: You can’t have AI trust without AI security. And you can’t have AI security without AI readiness.

AI readiness isn’t a one-time checklist. It’s an ongoing shift in posture toward visibility, ownership, and continuous guardrails.

That readiness starts by shifting both left and right, covering everything from LLM red teaming and AI bills of materials (AI-BOMs) to runtime fuzzing of autonomous agents.

But the conversation wasn’t just technical.

Anyone can build an app now. That changes everything.

If someone pastes a prompt into ChatGPT to generate workflow logic, guess what? They’re an app creator.

At the NYC Lighthouse event, the room buzzed with discussion around this shift. We explored how democratized software creation demands democratized security ownership.

That means threat modeling is no longer just for engineers. Legal teams, product leaders, and yes, security professionals must align around the unique risks of agentic systems, probabilistic outputs, and hidden toolchains.

This isn’t DevSecOps 2.0. It’s AI-native security. This is the new reality.

Supercharging DevSecOps with guardrails, not blockers

Of course, the AI wave isn’t just a risk; it’s also a rocket. Teams shared how they’re using GenAI to speed up code generation, scale documentation, and even suggest vulnerability fixes. But everyone agreed on one thing.

Speed is only an asset if it’s secure.

That’s why operationalizing AI trust means more than just static checks. It means building guardrails that span the entire AI SDLC. It means understanding where models live, what they interact with, and how their behaviors evolve in production.

Because no team wants to discover that its chatbot has been handing out unauthorized flight discounts or freebies.

Culture is the hidden multiplier

Throughout the day, one word kept surfacing: culture.

Yes, you need scanning. Yes, you need observability. But most of all, you need shared ownership, and it needs to span the organization across development, platform, security, and business teams.

Security teams have to be educators, enablers, and policy designers for a new wave of AI-native innovation.

AI trust doesn’t work without trust in the team.

And that, perhaps, is what made this Lighthouse discussion so special. Not just the insights but the openness. The willingness to explore, admit uncertainty, and collaborate toward something better.

What’s next? From NYC to Silicon Valley

The NYC event was just the beginning. Next up is Lighthouse Silicon Valley, where we’ll continue exploring how secure AI development unlocks real business value. From architecture to culture, it’s clear that the future of software is AI-native and trust-built.

If you’re navigating that future, we’d love to hear from you. Join us in the Bay Area next week.

Final word: AI trust begins with Snyk

We left NYC with one shared conviction: securing AI isn’t a side quest. It’s the main event.

Whether you’re embedding LLMs into your stack or rethinking your agent workflows, one thing is clear: trust can’t be added later. It must be built in from the start.

That’s why at Snyk, we’re building the AI Trust Platform:

To give teams visibility into AI assets

To shift from fire drills to prevention

To scale security alongside speed

Because when the software thinks for itself, security has to think ahead.
