Can AI write secure code?

Frank Fischer

May 3, 2023

AI is advancing at a stunning rate, with new tools and use cases being discovered and announced every week, from writing poems all the way through to securing networks.

Researchers aren’t completely sure what new AI models such as GPT-4 are capable of, which has led some big names such as Elon Musk and Steve Wozniak, alongside AI researchers, to call for a halt on training more powerful models for 6 months so focus can shift to developing safety protocols and regulations.

One of the biggest concerns around GPT-4 is that researchers are still not completely sure what it can do. New exploits, jailbreaks, and emergent behaviours will be discovered over time and are difficult to prevent due to the black-box nature of LLMs. OpenAI states this in their GPT-4 whitepaper:

OpenAI has implemented various safety measures and processes throughout the GPT-4 development and deployment process that have reduced its ability to generate harmful content. However, GPT-4 can still be vulnerable to adversarial attacks and exploits or, “jailbreaks,” and harmful content is not the only source of risk. Fine-tuning can modify the behavior of the model, but the fundamental capabilities of the pre-trained model, such as the potential to generate harmful content, remain latent.

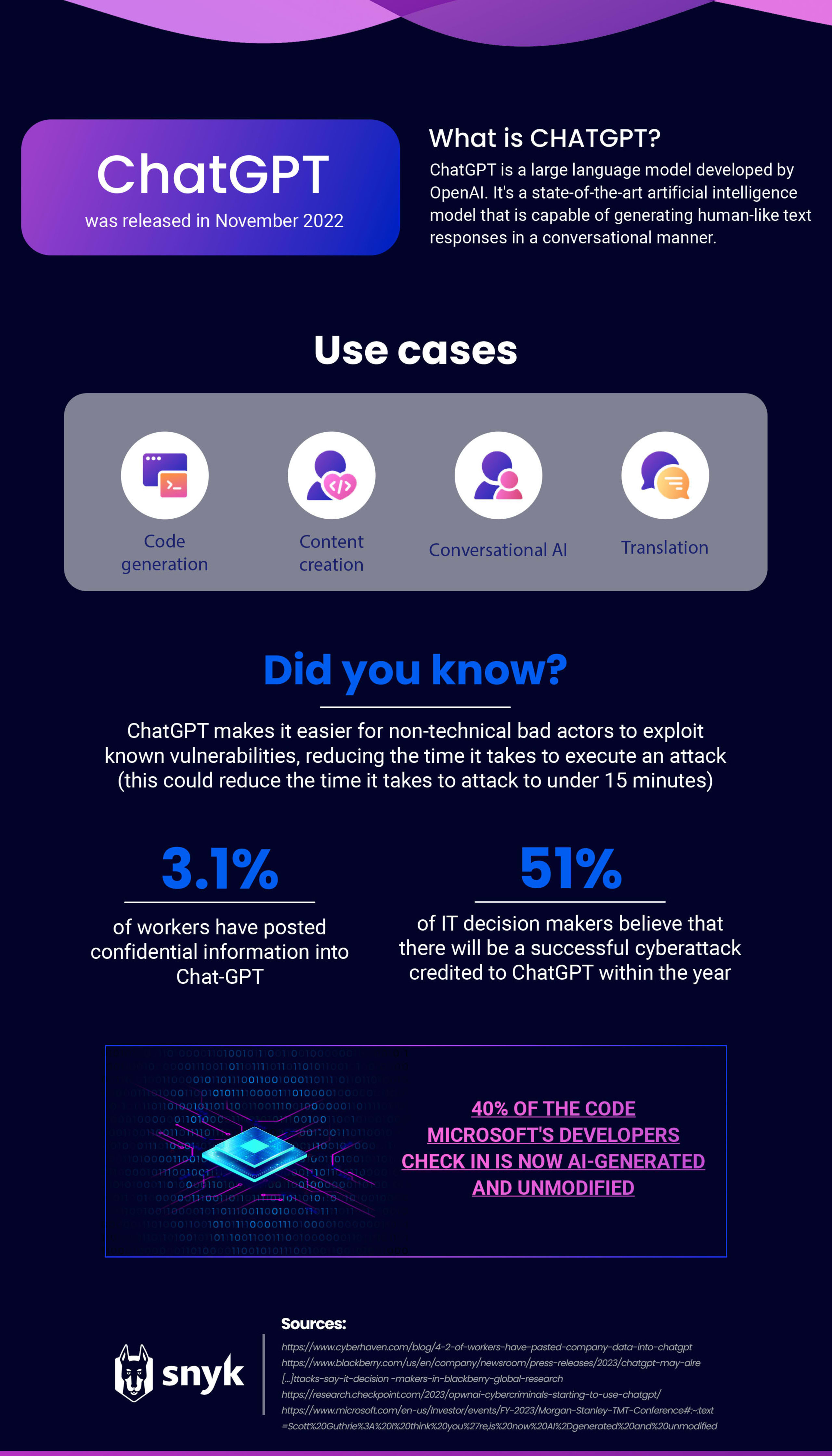

GPT-4 has only just been released, but we do have a lot of data about its predecessor, GPT-3.5, which has been powering ChatGPT. Since its launch, ChatGPT has caused such a stir that other tech giants, including Google and Adobe, have been scrambling to make their AI presence felt too.

From optimizing productivity to creating content, the list of ChatGPT’s capabilities is expansive. However, there have been concerns around developers using it to produce code due to privacy issues, cybersecurity risks, and potential impact on application security posture.

Privacy and IP risks of using AI tools for coding

Concerns around ChatGPT and its privacy risks when being used with internal company data have been prevalent amongst consumers and professionals since it was launched late last year.

A recent Cyberhaven report showed that 3.1% of workers have posted confidential information into ChatGPT, showing the importance of creating policies governing the internal usage of AI tools. Samsung recently had to issue an internal warning about AI usage to its employees after sensitive source code and meeting notes were entered into ChatGPT. This data is now in the hands of OpenAI as training data, and it’s unclear if it can be retrieved.

AI tools enhancing cybersecurity

It’s not all doom and gloom for security professionals. While AI technology has some downsides and privacy-related concerns, it’s also giving security teams more tools to help them deal with emerging threats.

OpenAI red teams successfully used GPT-4 for vulnerability discovery and exploitation, as well as social engineering, which will allow red teams to find and report new vulnerabilities.

Security companies are continuing to find new ways to integrate AI into their existing products. We recently announced the new Auto-Fix feature, leveraging hybrid-AI technology to recommend vulnerability fixes, generated by AI, and validated for security by the Snyk Code engine.

Application security risks of coding using AI

While ChatGPT can help users to write code, it generally only works with smaller pieces of code at a time. So if it is being used to produce a large amount of code, developers can potentially open themselves up to risks as the software does not factor in interfile or multi-level issues. More often than not, AI does not even understand these cases and cannot solve the related issues.

Code generation products are only as good as the data that they’re trained on. It’s almost impossible to have 100% bug-free or vulnerability-free code, so code generation tools will often include vulnerable code in their suggestions. This is even more concerning when you consider this statistic shared by Microsoft: “40% of the code they’re [developers] checking in is now AI-generated and unmodified.” This is a big concern for AppSec teams, as organizations need to ensure that their software is as secure as possible.

GitHub’s Copilot AI coding tool is trained on code from publicly available sources, including code in public repositories on GitHub, so it builds suggestions that are similar to existing code. If the training set includes insecure code, then the suggestions may also introduce some typical vulnerabilities. A researcher at Invicti tested this by building a Python app and a PHP app with Copilot, then checking both applications for vulnerabilities, finding issues such as XSS, SQL injection, and session fixation.
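To make one of these vulnerability classes concrete, here is a minimal sketch of SQL injection in Python. It is not taken from the Invicti research; the table, the function names, and the payload are hypothetical and chosen only for illustration. It contrasts a query built by string concatenation with one that uses a bound parameter.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is concatenated into the SQL text,
    # so the input can change the structure of the query.
    query = f"SELECT name, secret FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the value is passed as a bound parameter and is never
    # interpreted as SQL.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "nobody' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # every row is returned
    print(len(find_user_safe(conn, payload)))    # no row matches
```

The concatenated version is the kind of pattern that appears often in public code, which is why a model trained on that code may suggest it.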

GitHub is very aware of the issues raised around security, and in a recent update, they’ve added a vulnerability filtering feature:

Hardcoded credentials, SQL injections, and path injections are among the most common susceptible coding patterns targeted by the model. With GitHub Copilot, this solution is a huge step in assisting developers in writing more secure code.

GitHub Copilot update includes security vulnerability filtering | InfoWorld
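As a rough illustration of two of the patterns named in that quote, the sketch below shows one way to avoid hardcoded credentials and path injection in Python. It does not show how Copilot's filter works. The directory, the environment variable name, and the function names are all assumptions made for this example.

```python
import os
from pathlib import Path

BASE_DIR = Path("/srv/app/uploads")  # hypothetical upload directory


def resolve_upload(user_path: str, base_dir: Path = BASE_DIR) -> Path:
    """Return the resolved path inside base_dir, or raise ValueError."""
    base = base_dir.resolve()
    candidate = (base / user_path).resolve()
    # Reject anything that resolves outside the base directory,
    # such as "../../etc/passwd" or an absolute path.
    if candidate != base and base not in candidate.parents:
        raise ValueError(f"path escapes upload directory: {user_path!r}")
    return candidate


def get_api_token() -> str:
    # Read the secret from the environment rather than hardcoding it
    # in source control.
    token = os.environ.get("APP_API_TOKEN")  # hypothetical variable name
    if not token:
        raise RuntimeError("APP_API_TOKEN is not set")
    return token
```

Checks like these complement, rather than replace, scanning the generated code with dedicated security tooling.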

Conversations on Reddit regarding coding with ChatGPT

Given the earlier research into Copilot, we wanted to find out whether ChatGPT was following in its footsteps.

To do this, we decided to turn to Reddit, the social news aggregation and discussion website that technology professionals, such as developers, regularly use to get insight from others in the same field.

As such, it is the perfect place to see how people use ChatGPT for coding: Are they looking for advice? Are they warning others not to use it for this purpose? Or are they praising it as a reliable resource?

We began by analyzing a Reddit thread around coding and ChatGPT, which consisted of almost 350 posts. We then assessed the sentiment of the comments, specifically focusing on the full Reddit post rather than solely the title.

Positive

Excluding posts with no description or those that were not related, 17.9% had a positive sentiment. One user posted an image of some code that they were working on, saying: “...I was on the border of giving up. Had spent the last half hour looking for a solution, and as a last resort, asked GPT, 5 seconds pass and it gives me the perfect answer…”

Another user was particularly complimentary, stating: “When I hit on a complex problem like refactoring code or manipulating arrays or lists in strange ways, I like that I can just ask ChatGPT how to do it. After 20 years of having to burn my brain cells on how to do these things every time they come up, I can finally offload this boring task to an AI! What kind of a programmer does it make me?"

However, amongst the positive posts, there was a noticeable theme of ChatGPT missing out on the smaller details:

“...Sure, it's been extremely impressive. It's written plausible, relatively accurate code, extremely quickly. But it failed miserably in all the small details…”

"...I'm a big fan of ChatGPT (for the most part) when it comes to coding. It doesn't always come up with the right answer, but that's okay. It usually gets pretty close. It's like having a very clever — but imperfect — Jr. Developer always available…"

"...ChatGPT seems to know where the issues in its code are, but sometimes doesn't provide the correct solution to fixing it…"

The praise in these comments is caveated with criticism of ChatGPT missing the finer details, and this is where the security concerns come to light. If the AI tool is being used to create code by someone who is not experienced, these mistakes are unlikely to be caught.

Negative

Overall, we found that there were fewer negative posts around coding using ChatGPT than positive ones. 10.3% of the descriptions had a negative sentiment overall, with many users expressing frustration at inaccuracies.

One user specified: “...I find that ChatGPT's suggestions are particularly unreliable. The queries it creates produce error after error…”

Another user highlighted a similar issue, seeking to find specific prompts to help with coding: “After requesting a code change ChatGPT outputs the full code even if only one line in the code was changed. I've tried using prompts such as "Please only provide me the code for the lines that have been changed," but it ignores me and outputs the full code anyway. It wastes time so I'd like a prompt that works to make it only give me the lines of code that changed.”

Seeking help and advice

We found that the majority of the Reddit posts around ChatGPT and coding were seeking help and advice (44.6%).

The requests range from users asking others whether the code ChatGPT gave them is missing anything important to users asking for help translating code from one language to another.

When we broke this down further, we found that these posts were focused on learning resources (51.4%), P2P requests (14.3%), quality questions/queries (14.3%), user creations (11.4%), predictions (5.7%) and user experience (2.9%).

Our conclusions from this data

People who really understand their code still find that it needs small tweaks and changes in the details. ChatGPT is incredibly useful for simpler tasks in its current state, but won’t always output perfect code for your needs.

People who can't understand their code are super impressed and very positive, as they’re able to generate code that achieves their goals, with much less knowledge required from themselves.

Both groups of people are unlikely to be aware of security issues that are being generated by AI tooling, especially those new to development. So there’s a good chance that developers leveraging AI tooling without considering security could be bringing vulnerabilities generated by AI suggestions into their codebase.

This is even more important when you look at the trends around AI usage. 42% of companies surveyed by IBM last year are looking for ways to use AI in their organizations, so it’s vital to consider security alongside AI tools.

Key considerations for application security in a world with AI

AI tools such as ChatGPT and Copilot can speed up development, but they should be paired with comprehensive security tools to ensure that the code generated by these tools is secure. LLMs cannot replace a compiler or an interpreter during development, just as they can’t replace dedicated security tooling.

Internal policies concerning the use of AI tools should be put in place. These should cover things such as:

Clear definition of acceptable use cases: Define which use cases are acceptable for the use of AI tools and what kinds of decisions can be made using them.

Data selection and usage: Specify the type and quality of data that can be used in AI models and which data sources are appropriate to use.

Data privacy and security: Ensure that any data used in AI models is properly protected and that appropriate measures are taken to maintain data privacy and security.

Compliance requirements: Ensure that the use of AI tools complies with all legal, regulatory, and ethical requirements.

Regular updates: Continuously update policies as new AI tools are developed and as the business needs evolve.

This isn’t an exhaustive list of policies for AI use in your organization, but it could be used as a starting point.
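As one hypothetical way to support the data privacy point above, a team could screen text for likely secrets before it is pasted into or sent to an external AI tool. The sketch below is only an illustration. The patterns and the function name are assumptions, and a real policy would need a broader, maintained rule set.

```python
import re
from typing import Tuple

# Illustrative patterns only; these will miss many kinds of secrets.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # shape of an AWS access key ID
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    re.compile(r"(?i)\b(password|passwd|api[_-]?key|token)\b\s*[:=]\s*\S+"),
]


def redact(text: str) -> Tuple[str, int]:
    """Replace likely secrets with a placeholder.

    Returns the cleaned text and the number of replacements made.
    """
    hits = 0
    for pattern in SECRET_PATTERNS:
        text, n = pattern.subn("[REDACTED]", text)
        hits += n
    return text, hits


if __name__ == "__main__":
    cleaned, hits = redact("db password = hunter2")
    print(cleaned, hits)
```

A non-zero hit count could be used to block the request or to prompt the developer to review what they are about to share.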

Security teams should be aware of developments in AI that give more tools to both security teams and bad actors. AI tools may be a key part of defending against the increase of exploits by giving security teams more efficient tools, especially while companies are still struggling to hire security experts. We expect to see new use cases for AI tools being discovered throughout this year, so it’s vital to stay abreast of recent developments. Security teams should also stay aware of how much their developer teams are using AI, how much of the code being added to the codebase is generated by AI, and whether the right security checks are in place to manage it.

Code security is just one aspect of development. Software development is a combination of people, processes, and technology. AI can help with the technology side, but you still need the other factors. Quality is key when it comes to coding — the human factor will always be important.

Snyk’s advice on the best application security options

Whether your code is fully human-written or mostly AI-generated, it’s important to nail the basics of security to minimize your attack surface. Assessing your application security is vital in protecting code against those who may want to exploit it. Conducting an application security assessment involves testing applications to detect threats and developing strategies to defend against them. This process enables organizations to evaluate the current security posture of their applications and identify ways to enhance their software's protection against future attacks. Developers should conduct regular application security assessments to ensure security measures remain current and effective.

Five steps for assessing your application security:

Identify potential threat actors

Determine sensitive data worth protecting

Map out application attack surface

Evaluate current AppSec process pain points

Build a security roadmap for eliminating weak points

While AI tools like ChatGPT can miss weak links and vulnerabilities, Snyk is a developer security platform that is much more up to the task. With Snyk, you can identify and automatically fix vulnerabilities in your code, open source dependencies, containers, and cloud environments.

The platform can even integrate directly into dev tools, automation pipelines, and coding workflows to ensure seamless and efficient security.
