AI quality: Garbage in, garbage out

June 11, 2024

If you use expired, moldy ingredients for your dessert, you may get something that looks good but tastes awful. And you definitely wouldn’t want to serve it to guests. Garbage in, garbage out (GIGO) applies to more than just technology and AI. Inputting bad ingredients into a recipe will lead to a potentially poisonous output. Of course, if it looks a little suspicious, you can cover it in frosting, and no one will know. This is the danger we are seeing now.

With AI/ML, everything looks good. You can use your favorite bot and grab a piece of code that looks fantastic. It has comments, is properly indented, and may even use a library to help you out. But if the milk was spoiled and the bot grabbed it from the fridge, then your code might be sour.

GIGO is discussed conceptually a lot, but let’s look at an example of how this happens. I taught an Artificial Intelligence course at a university, and in the class, we built an expert system using a decision tree.

An expert system is a type of artificial intelligence (AI) computer program that emulates the decision-making ability of a human expert in a particular domain or field of knowledge. It’s designed to solve complex problems, make recommendations, or provide expert-level advice based on a set of rules, knowledge, and logical reasoning. In our example, it’ll solve a very simple problem.

An expert system relies on a knowledge base. This is where the system stores domain-specific information, facts, rules, and heuristics. There is also the inference engine. This is the reasoning component of the expert system. It uses the knowledge from the knowledge base to draw conclusions, make decisions, and solve problems. It employs various reasoning techniques, such as forward chaining (working from known facts to conclusions) or backward chaining (starting with a goal and working backward to find supporting evidence). However, what we are concerned with is the data inside the system.
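To make the split between knowledge base and inference engine concrete, here is a minimal forward-chaining sketch in Python. The rules and fact strings are hypothetical examples invented for illustration, not the ones used in the course.

```python
# Hypothetical knowledge base: each rule pairs a set of required facts
# (premises) with the conclusion that follows from them.
RULES = [
    ({"has four legs", "barks"}, "is a dog"),
    ({"has four legs", "meows"}, "is a cat"),
    ({"is a dog"}, "is a mammal"),
    ({"is a cat"}, "is a mammal"),
]


def forward_chain(facts, rules):
    """Inference engine: apply rules until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule when all of its premises are known
            # and its conclusion has not been recorded yet.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"has four legs", "meows"}, RULES)
print(sorted(derived))
# ['has four legs', 'is a cat', 'is a mammal', 'meows']
```

The engine itself never checks whether a rule is true. It only applies whatever the knowledge base contains, which is why the quality of that data matters so much.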

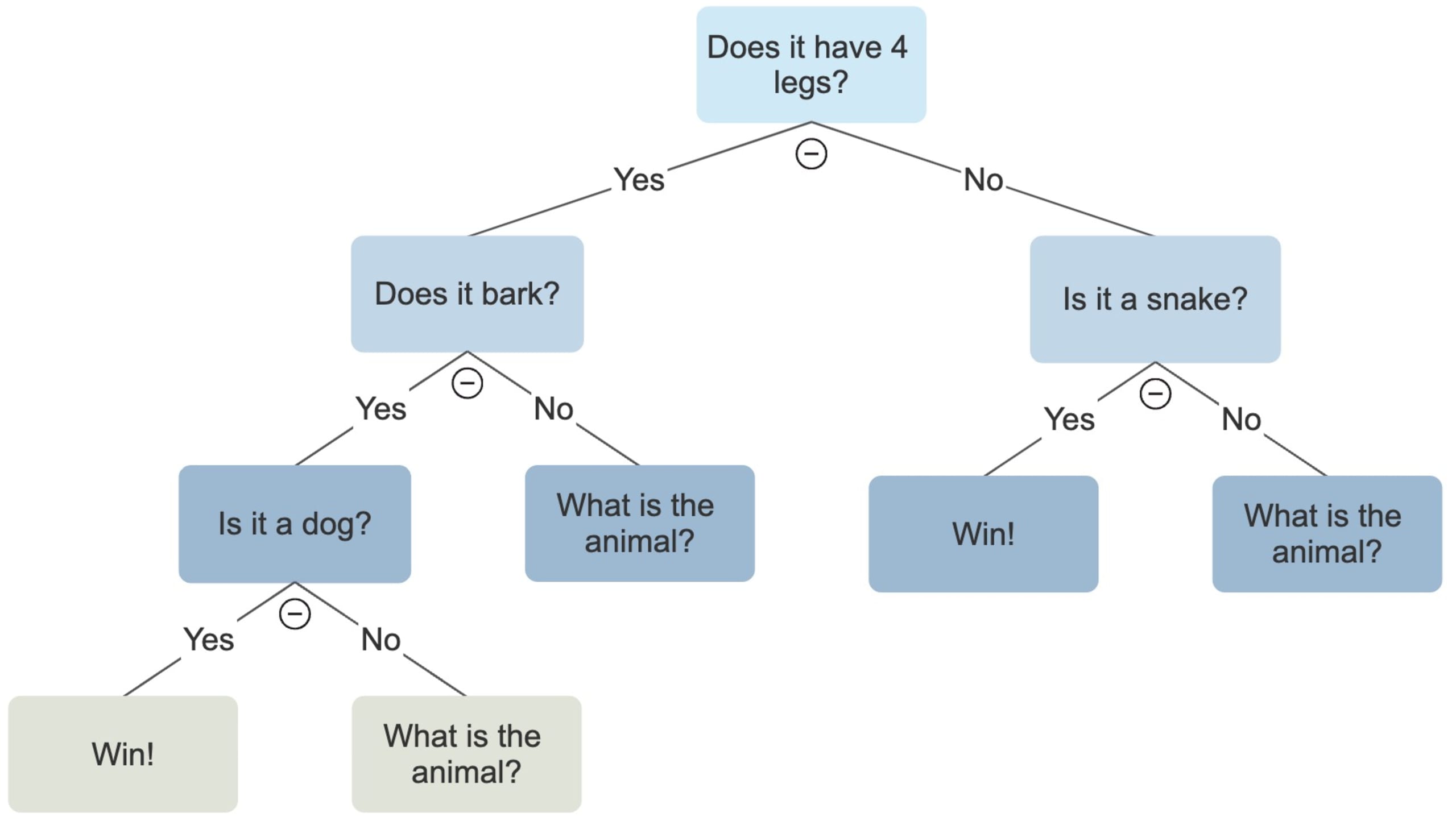

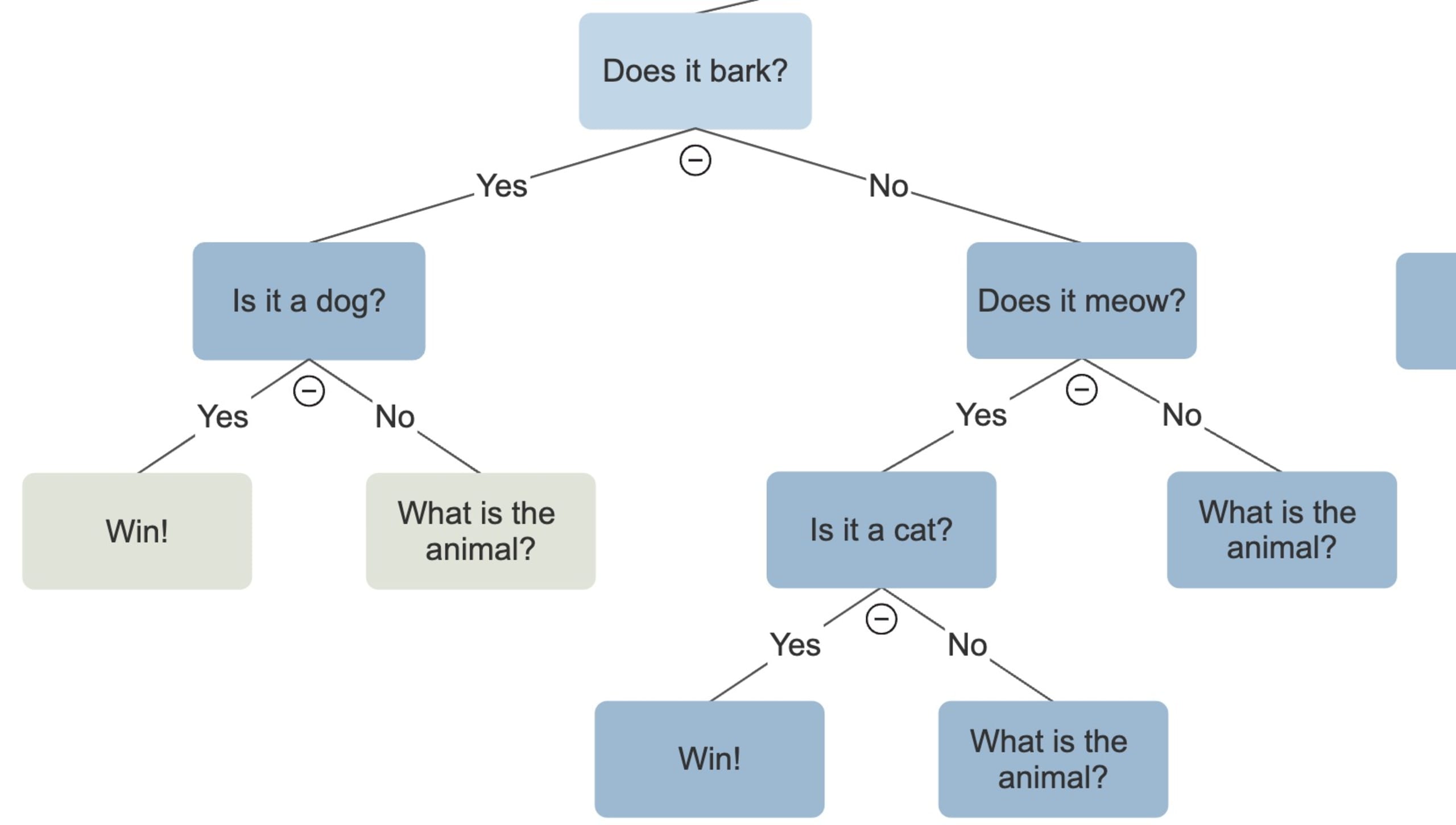

The concept for the assignment I gave out was simple. The program was to try to guess an animal based on attributes provided by the user. If it guessed wrong, it added a new attribute to the system.

With this expert system, the program can seemingly learn and thus display some form of artificial intelligence. For example, if the user was thinking of a cat, the user would initially answer YES to having four legs and then answer NO about barking. From there, the system would ask what the animal was, and then it would ask, “What question would help distinguish a cat from a dog?”

In this case, the user might say, “Does it meow?”

Having a user build this system one animal at a time is incredibly inefficient. But remember, this was just an assignment to help learn about decision trees and expert systems. After a user plays this 100 times, the game will potentially have 100 new animals. If you were to have another user play, there is a good chance that the system would be able to identify what animal the user was thinking of.
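The guessing game described above can be sketched as a small binary decision tree. This is only an illustration under stated assumptions: the `Node`, `guess`, and `learn` names, the starting tree, and the scripted answers are all hypothetical, and the user's replies are simulated with a dictionary instead of interactive input.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Node:
    """A question node (with yes/no branches) or a leaf holding an animal."""
    text: str
    yes: Optional["Node"] = None
    no: Optional["Node"] = None

    @property
    def is_leaf(self) -> bool:
        return self.yes is None and self.no is None


def guess(root: Node, answer: Callable[[str], bool]) -> Node:
    """Follow the user's yes/no answers down the tree and return the leaf."""
    node = root
    while not node.is_leaf:
        node = node.yes if answer(node.text) else node.no
    return node


def learn(leaf: Node, new_animal: str, question: str) -> None:
    """Turn a wrong guess into a question that separates the two animals.

    Assumes the answer to `question` is "yes" for `new_animal`.
    """
    old_animal = leaf.text
    leaf.text = question
    leaf.yes = Node(new_animal)
    leaf.no = Node(old_animal)


# Hypothetical starting tree: one question and two possible guesses.
tree = Node("Does it have four legs?", yes=Node("dog"), no=Node("bird"))

# Scripted answers for a user who is thinking of a cat.
cat_facts = {"Does it have four legs?": True, "Does it meow?": True}


def answer_for_cat(question: str) -> bool:
    return cat_facts[question]


wrong_leaf = guess(tree, answer_for_cat)
first_guess = wrong_leaf.text               # the system guesses "dog"
learn(wrong_leaf, "cat", "Does it meow?")   # the user teaches it the difference
second_guess = guess(tree, answer_for_cat).text
print(first_guess, "->", second_guess)      # dog -> cat
```

Note that `learn` stores whatever question and animal it is given. Nothing in the tree verifies that the new attribute is actually true.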

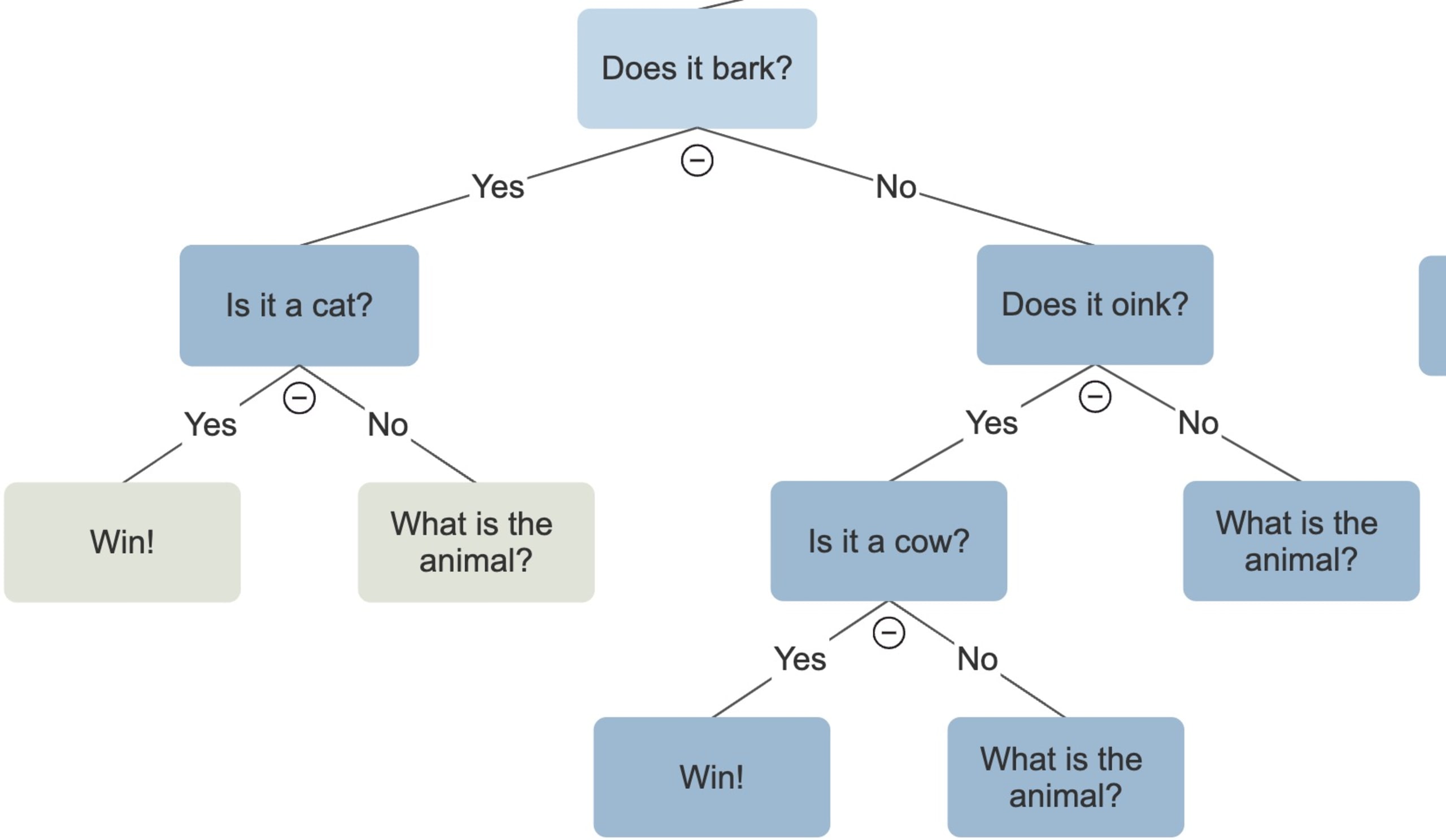

But all of this relies on one thing — good data.

What if a user decides that a cat does indeed bark, a horse meows, and a cow oinks? Would the system still work? Yes. Would it have desirable results? No.
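A small sketch shows what "works, but with undesirable results" looks like in practice. It uses a simplified attribute table instead of a full decision tree, and the `identify` function and both data sets are hypothetical.

```python
def identify(knowledge, answers):
    """Return every animal whose stored attributes match the user's answers."""
    return [animal for animal, attrs in knowledge.items() if attrs == answers]


# Knowledge taught by a careful user.
good = {
    "dog": {"barks": True, "meows": False},
    "cat": {"barks": False, "meows": True},
}

# The same structure taught by a user who insists that cats bark.
bad = {
    "dog": {"barks": True, "meows": False},
    "cat": {"barks": True, "meows": False},
}

# A new player is thinking of a cat and answers truthfully.
truthful_cat = {"barks": False, "meows": True}

print(identify(good, truthful_cat))  # ['cat']
print(identify(bad, truthful_cat))   # []

# A player thinking of a dog now gets an ambiguous result as well.
print(identify(bad, {"barks": True, "meows": False}))  # ['dog', 'cat']
```

The code runs without errors in both cases. Only the answers change, because the lookup logic is identical and the data is the sole difference.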

Expert systems (and many other AI systems) can fail due to incomplete data, biased data, faulty human input, and many other reasons. If we ask AI to generate a piece of code, it will do so by drawing on patterns in existing data (its training data). If that data is incorrect, has errors, or is biased in some way, the output will also have those flaws.

Expert systems and LLMs are very different. An expert system relies on an explicit knowledge base, while an LLM learns patterns and associations from its training data. However, both suffer from GIGO and rely heavily on accurate data.

If you feed any AI system "garbage" through poor-quality data, you'll get "garbage" results, which can have real-world consequences. And we are seeing these consequences now. Our research found that over three-quarters of developers bypass established protocols to use code completion tools despite potential risks.

Learn more about AI vulnerabilities from the OWASP Top 10 for LLM Applications and from our Security Education platform with our lessons on AI.