Why You Need a Security Companion for AI-Generated Code

October 25, 2023

Everyone is talking about generative artificial intelligence (GenAI), and a massive wave of developers are already incorporating this life-changing technology into their work. However, GenAI coding assistants should only ever be used in tandem with AI security tools. Let's look at why, and at what the data shows.

Thanks to AI assistance, developers are building faster than ever. With this dramatic jump in productivity, security teams need to keep pace without holding back progress or unwittingly letting vulnerabilities seep through in the deluge of code.

Volume is a major issue, but there is also a vast mismatch between the speed of GenAI-enhanced development and the speed of more traditional testing processes. After a GenAI coding tool generates output, the developer may assume that the output is secure and carry on with their work until a security test is run. If that test surfaces a vulnerability, the developer has to go back and remediate work they considered finished. This mental pivot takes time (an earlier study by the University of California, Irvine, found that it takes about 23 minutes to get back into our flow after being interrupted), and it is highly inefficient, because work has to be repeated.

Furthermore, leading studies show that two other factors compound the problem:

Number of vulnerabilities: Developers using GenAI coding assistants introduce a significantly higher number of vulnerabilities into their code, as compared to developers coding without such assistance.

Reduced awareness: Developers using GenAI coding assistants have an increased, misplaced confidence in the code that they produce.

We'll dig into both of those studies below, but the important thing to recognize is that not only are developers putting out more vulnerable code with the help of AI, they are also trusting this vulnerable code more than they trusted their manually created non-secure code. This means that developers and AppSec need security solutions that are smarter, faster, and more intuitive to keep up with these new problems.

GenAI code should not be assumed safe

As mentioned above, it is not just about the volume of code. It is also about vulnerabilities being unknowingly introduced at AI speed and built right into the foundations of your application.

Why do we say that GenAI code should not be assumed safe? For one, a study by Stanford University researchers (Dec 2022) showed that participants with access to an AI assistant “wrote significantly less secure code” than those without access. For another, a very recent study published on arXiv (Oct 2023), which focused on Copilot-generated code in real-world projects on GitHub, showed how widespread and varied the vulnerabilities in AI-generated code are. Specifically:

35.8% of Copilot-generated code snippets contain instances of weaknesses catalogued in the Common Weakness Enumeration (CWE), across multiple languages.

Worryingly, the study also found a wide diversity of security weaknesses, relating to 42 different CWEs. The most commonly encountered were CWE-78: OS Command Injection, CWE-330: Use of Insufficiently Random Values, and CWE-703: Improper Check or Handling of Exceptional Conditions.
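To make the three most common weaknesses concrete, here is a minimal Python sketch that shows a risky pattern (in comments) next to a safer alternative for each. It is illustrative only, not code from the study: the function names, the host allow-list, and the default port are hypothetical choices for this example, and a real application would need validation rules suited to its own inputs.

```python
from __future__ import annotations

import secrets
import string

# --- CWE-78: OS Command Injection --------------------------------------
# Risky pattern:  subprocess.run(f"ping -c 1 {host}", shell=True)
# An input such as "example.com; rm -rf ~" would run a second command.

# Hypothetical allow-list for this sketch: letters, digits, dots, hyphens.
_HOST_CHARS = set(string.ascii_letters + string.digits + ".-")


def build_ping_argv(host: str) -> list[str]:
    """Validate host and return an argv list for subprocess.run(argv)."""
    if not host or host.startswith("-") or not set(host) <= _HOST_CHARS:
        raise ValueError(f"refusing suspicious host: {host!r}")
    # A list (with the default shell=False) passes host as one argument,
    # so it is never parsed as shell syntax. "-c 1" assumes a Unix ping.
    return ["ping", "-c", "1", host]


# --- CWE-330: Use of Insufficiently Random Values ----------------------
# Risky pattern:  "".join(random.choice(alphabet) for _ in range(16))
# The random module is a predictable PRNG and is not meant for secrets.

def make_reset_token(nbytes: int = 32) -> str:
    """Return an unguessable URL-safe token from the OS CSPRNG."""
    return secrets.token_urlsafe(nbytes)


# --- CWE-703: Improper Check or Handling of Exceptional Conditions -----
# Risky pattern:
#     try:
#         port = int(raw)
#     except:      # swallows every error; port may be left unset
#         pass

DEFAULT_PORT = 8080  # hypothetical default for this sketch


def parse_port(raw: str | None) -> int:
    """Parse a TCP port; only a missing value falls back to the default."""
    if raw is None:
        return DEFAULT_PORT
    try:
        port = int(raw)
    except ValueError as exc:
        # Fail loudly, with context, instead of continuing silently.
        raise ValueError(f"invalid port: {raw!r}") from exc
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port
```

Calling `subprocess.run(build_ping_argv(host))` keeps Python's default `shell=False`, so the validated host reaches `ping` as a single argument rather than as shell syntax.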

Most concerning of all, around 26% of the CWEs identified appear on the 2022 CWE Top 25 Most Dangerous Software Weaknesses list.

Developers showing increased confidence in vulnerable code

The same Stanford University research paper mentioned above also found that, “participants provided access to an AI assistant were more likely to believe that they wrote secure code than those without access to the AI assistant.” In other words, on top of more vulnerabilities being built into the foundations of your apps than ever before, developers are also more likely to be confident about baking these in. It seems safe to assume that speed is being misinterpreted as skill.

The solution: a holistic approach

Now, we are not saying that you should not use GenAI. A 2023 Gartner poll of top executives shows that 70% of their organizations are in investigation and exploration mode with GenAI and 19% are in pilot or production mode. So it’s clear that the shift has already happened. In fact, Snyk uses GenAI coding tools too, but in a way that prioritizes security.

We believe in leveraging this wonderful new technology, but doing it safely. A GenAI coding tool can be viewed as an inexperienced developer: it can produce code but cannot pick up on nuances in code, it makes mistakes, and it is certainly not trained to spot security risks, whether small or monumental. To address this modern security problem, you will need to take a holistic, DevSecOps approach and consider people, process, and tools.

People

First, you need to educate developers on how GenAI works, its resulting flaws, and the inherent risks associated with the technology.

GenAI models (including Large Language Models, or LLMs, the subset of GenAI that is trained on text and produces text output) are statistical models that make predictions based on probability. An LLM looks at vast quantities of data, sees a pattern repeated over and over, and makes a best guess that something is likely (as opposed to factual), based on how often it has seen similar or identical repetitions. An LLM has no reasoning capabilities and is only as good as the data it is trained on. Any flaw in that training data, including biases and outdated information, will be replicated by the LLM. Sometimes what it predicts does not fit the context, does not make sense, or is simply not true, and the LLM has no ability to recognize this as wrong or to flag it for the user’s attention.
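The “best guess from repeated patterns” idea can be illustrated with a deliberately tiny model. The sketch below is a bigram counter, far simpler than a real LLM (which uses a neural network over subword tokens), and its training snippets are made up for this example. It only shows how a model that predicts the most frequent continuation will reproduce whatever pattern dominates its training data, including an insecure one.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Made-up "training data" for this sketch: the insecure completion dominates.
TRAINING_SNIPPETS = [
    "subprocess.run ( cmd , shell = True )",
    "subprocess.run ( cmd , shell = True )",
    "subprocess.run ( cmd , shell = True )",
    "subprocess.run ( args , shell = False )",
]


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each token follows each other token."""
    model: dict[str, Counter] = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            model[prev][nxt] += 1
    return model


def next_token_probs(model: dict[str, Counter], prev: str) -> dict[str, float]:
    """Turn raw follow-counts into probabilities (empty if prev is unseen)."""
    counts = model.get(prev, Counter())
    total = sum(counts.values())
    return {tok: n / total for tok, n in counts.items()} if total else {}


def predict_next(model: dict[str, Counter], prev: str) -> str | None:
    """Return the most probable next token: a best guess, not a judgement."""
    probs = next_token_probs(model, prev)
    return max(probs, key=probs.get) if probs else None


if __name__ == "__main__":
    model = train_bigram(TRAINING_SNIPPETS)
    # The model has no notion of "secure"; it just echoes the majority.
    print(predict_next(model, "="))  # → True
```

Nothing in this toy model checks whether `shell=True` is safe. It simply repeats the most frequent continuation, which is the behavior described above, scaled down to a few lines.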

Some GenAI tools may have security checks built in, but security is not their primary purpose, so the security checks that they perform are inadequate. Just as you would not rely on an operating system firewall to secure your whole business organization, you would not (and should not) rely on a coding assistant as your primary security layer.

AI is not a new topic despite ChatGPT driving recent interest, so in case you wish to learn more, there is a dazzling collection of content available, including Snyk's blog.

Process

Next, you need to rethink your processes. Traditional security processes and methods are comparatively slow and disruptive to developers’ workflow. As you may know, Snyk is a pioneering advocate for, and enabler of, “shifting left”: performing software and system testing earlier in the software development lifecycle. We have a good reason for this. Developers build the things that create and maintain your revenue, while security protects your reputation and potentially millions or billions that might be lost through error or lack of knowledge, so you need both to work together at optimum pace and flow.

However, there has historically been friction between the ways the two functions work. Developers need to move quickly, and existing testing methods are bottlenecks. Instead of disrupting business-generating work, forcing behavior that does not come naturally to your developers, and continuing to overburden security teams who are doing their best but can no longer keep up, why not get an AI-powered security champion that neutralizes threats as they’re created?

Tools

This brings us to tools. AI may have its risks, but it also brings immense productivity. A car vastly improves the way we live by getting us around much faster, but it also carries weighty risks, so we would never drive without first putting on a seat belt. In the same way, developers need to use GenAI coding tools with a security “seat belt” firmly on. The Stanford University study referenced above found that, of the code produced by participants using GenAI, “87% of secure responses required significant edits from users”. The study suggests that this may mean “informed modifying” is required to make code secure. This finding aligns with our belief that developers can lean on the vast capabilities of GenAI, so long as real-time security checks are being done on their code, at speed.

Now that we know security checks are required on GenAI code, what, then, makes a security solution ideal for this use case? The top characteristics of such a tool are the ability to run invisibly and keep pace while developers work, thorough and accurate checks, and interoperability.
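As a rough illustration of a check that can run while code is being written, the sketch below uses Python's standard `ast` module to flag two common patterns behind CWE-78 (OS Command Injection): `os.system` calls and `subprocess` calls with `shell=True`. It is a hypothetical, pattern-matching toy written for this post, not how Snyk or any other product works. It does no data-flow tracing, so it misses aliased imports and will also flag calls whose arguments are actually safe.

```python
from __future__ import annotations

import ast


def find_command_injection_risks(source: str) -> list[tuple[int, str]]:
    """Flag os.system(...) and subprocess.*(..., shell=True) calls.

    Purely syntactic: no data-flow tracing, so it misses aliased imports
    (e.g. `from os import system`) and may flag calls that are safe.
    """
    findings: list[tuple[int, str]] = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Only handle the simple `module.function(...)` call shape.
        if not (isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name)):
            continue
        name = f"{func.value.id}.{func.attr}"
        if name == "os.system":
            findings.append((node.lineno, "os.system call (possible CWE-78)"))
        elif func.value.id == "subprocess":
            for kw in node.keywords:
                if (kw.arg == "shell" and isinstance(kw.value, ast.Constant)
                        and kw.value.value is True):
                    findings.append((node.lineno, f"{name} with shell=True (possible CWE-78)"))
    # ast.walk is breadth-first, so sort findings by line number.
    return sorted(findings)


if __name__ == "__main__":
    sample = (
        "import os, subprocess\n"
        'os.system("ls " + path)\n'
        'subprocess.run(["ls", path])\n'
        'subprocess.run("ls " + path, shell=True)\n'
    )
    for line, message in find_command_injection_risks(sample):
        print(f"line {line}: {message}")
```

In practice, a check like this would be hooked into an editor or a pre-commit step so that it runs without the developer having to ask. The hard parts a real tool adds, such as tracing where `path` actually comes from, are exactly what this toy leaves out.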

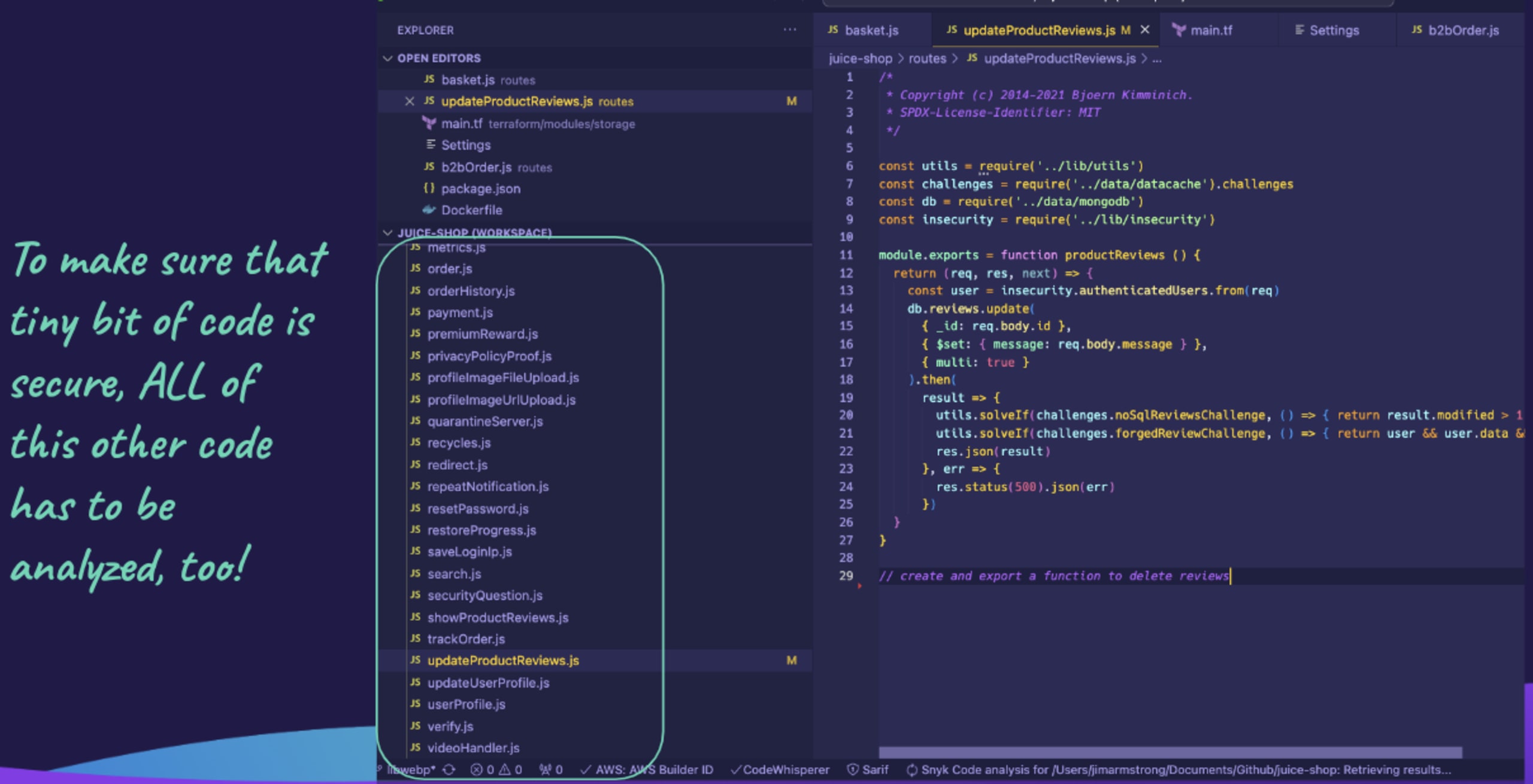

This is where Snyk can help. We draw on our long history as security experts to secure and fix your code at the speed of AI-assisted development. Because securing modern applications is a complex process, Snyk checks the code across your entire application in real time, as it is being written. It traces your data flows to identify only the relevant dependencies and vulnerabilities, generates a potential fix, and re-checks the whole application to test the impact of that fix, before highlighting the relevant vulnerabilities to the developer and enabling one-click remediation within the IDE. All of this is done in seconds.

Snyk integrates directly into all the most popular tools (IDE, Git, CLI), languages, and workflows. In short, Snyk is like the security-minded best friend of a developer. We are where developers and their AI tools are, working beside them at the same pace, guiding them to safer applications.

Curious about how to pick the right security companion for your GenAI coding tool? We will discuss this in detail next week, so remember to keep an eye on our blog.

Shift to the new “left” with Snyk

We have seen that GenAI is immensely helpful but it has big security issues as well. The problems caused by GenAI coding tools for security teams include:

Disrupted workflow: Because developers cannot be expected to wait around for builds to be completed and testing results to return before carrying on with the same piece of work.

Volume and inherent weaknesses in the volume: Because developers are coding faster with AI, whilst also building proportionately more vulnerabilities into their code.

Increased developer trust in vulnerable code: Because their AI tool gives them a false sense of security.

GenAI tools have changed the game and moved the “left” even further left than the developer, so we need to be there, tackling risks at the AI stage of the software development lifecycle. Just as a seat belt allows the driver of a car to go even faster, security tools are there to let you speed ahead with your GenAI coding tools.

Empower developers to embrace the power of GenAI safely. Shift to the new left with the perfect companion to your GenAI coding assistant — Snyk.

Want to see Snyk in action? Watch our virtual session on-demand — Live Hack: Exploiting AI-Generated Code.

Get started with security for AI-generated code

Want to secure code from AI-powered tools in minutes? Sign up for a free Snyk account, or book a demo with our experts to discuss what the solution can do for your dev security use cases.