Automatic source locations with Rego

February 12, 2024

At Snyk, we are big fans of Open Policy Agent’s Rego. Snyk IaC is built around a large set of rules written in Rego, and customers can add their own custom rules as well.

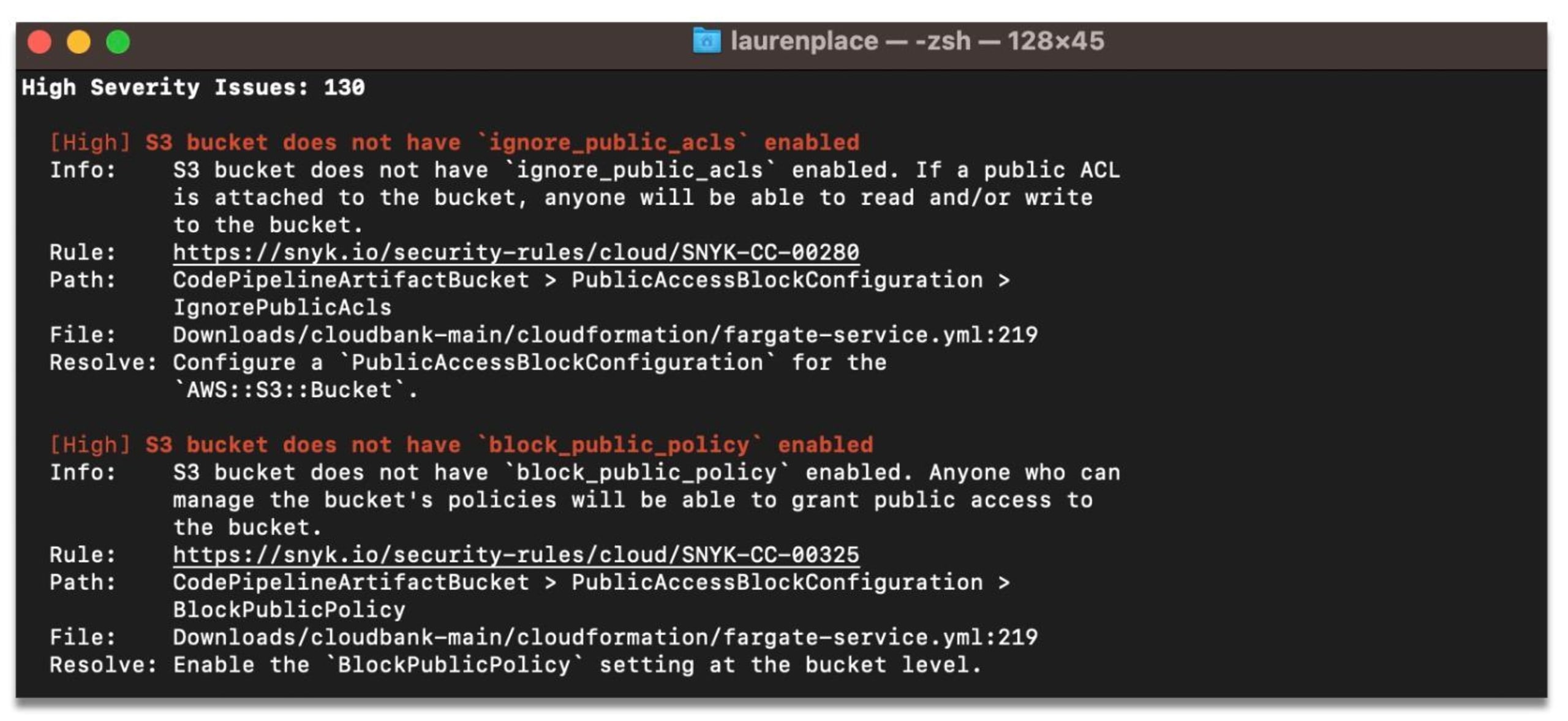

We recently released a series of improvements to Snyk IaC, and in this blog post, we’re taking a technical dive into a particularly interesting feature — automatic source code locations for rule violations.

When checking IaC files against known issues, the updated `snyk iac test` command will show accurate file, line, and column information for each rule violation. This works even for custom rules, without the user doing any work.

To provide a standalone proof-of-concept for this technique, we’ll need to make some simplifications. The full implementation is available in our unified policy engine.

Let’s start by looking at a CloudFormation example. While our IaC engine supports many formats, with a strong focus on Terraform, CloudFormation is a good example since we can parse it without too many dependencies (it’s just YAML, after all).

```yaml
Resources:
  Vpc:
    Type: AWS::EC2::VPC
    Properties:
      CidrBlock: 10.0.0.0/16
  PublicSubnet:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId:
        Ref: Vpc
      CidrBlock: 10.0.0.0/24
  PrivateSubnet:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId:
        Ref: Vpc
      CidrBlock: 10.0.128.0/20
```

We want to ensure that no subnets use a CIDR block larger than `/24`, so let’s write a Rego policy to do just that:

```rego
package policy

deny[resourceId] {
	resource := input.Resources[resourceId]
	resource.Type == "AWS::EC2::Subnet"
	[_, mask] = split(resource.Properties.CidrBlock, "/")
	to_number(mask) < 24
}
```

This way, `deny` will produce a set of denied resources. We won’t go into the details of how Rego works, but if you want to learn more, we recommend the excellent OPA by Example course.
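For readers less familiar with Rego, here is a rough Go equivalent of the check the policy performs on a single CIDR block. It is only a sketch: the `tooLarge` helper is a name we made up for illustration, and it parses the block with the standard `net/netip` package rather than splitting the string the way the policy does.

```go
package main

import (
	"fmt"
	"net/netip"
)

// tooLarge reports whether a CIDR block is larger than a /24, that is,
// whether its prefix length is shorter than 24 bits. This mirrors the
// `to_number(mask) < 24` comparison in the Rego policy.
func tooLarge(cidr string) (bool, error) {
	prefix, err := netip.ParsePrefix(cidr)
	if err != nil {
		return false, err
	}
	return prefix.Bits() < 24, nil
}

func main() {
	// The two subnet CIDR blocks from the CloudFormation template.
	for _, cidr := range []string{"10.0.0.0/24", "10.0.128.0/20"} {
		denied, err := tooLarge(cidr)
		if err != nil {
			fmt.Println("invalid CIDR:", err)
			continue
		}
		fmt.Printf("%s denied=%t\n", cidr, denied)
	}
}
```

Only `PrivateSubnet`, with its `/20` block, is flagged, which is the resource we expect `deny` to contain.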

We can subdivide the problem into two parts:

1. We’ll want to infer that our policy uses the `CidrBlock` attribute.

2. Then, we’ll retrieve the source code location.

Let’s start with (2) since it provides a good way to familiarize ourselves with the code.

Source location retrieval

A source location looks like this:

```go
type Location struct {
	File   string
	Line   int
	Column int
}

func (loc Location) String() string {
	return fmt.Sprintf("%s:%d:%d", loc.File, loc.Line, loc.Column)
}
```

We will also introduce an auxiliary type to represent paths in YAML. In YAML, there are two kinds of nested documents: arrays and objects.

```yaml
some_array:
- hello
- world
some_object:
  foo: bar
```

If we wanted to be able to refer to any subdocument, we could use something akin to JSON paths. In the example above, `["some_array", 1]` would then point to `"world"`. But since we won’t support arrays in our proof-of-concept, we can get by just using an array of strings.

```go
type Path []string
```

One example of a path would be something like:

```go
Path{"Resources", "PrivateSubnet", "Properties", "CidrBlock"}
```

Now we can provide a convenience type to load YAML and tell us the `Location` of certain `Paths`.

```go
type Source struct {
	file string
	root *yaml.Node
}

func NewSource(file string) (*Source, error) {
	bytes, err := ioutil.ReadFile(file)
	if err != nil {
		return nil, err
	}

	var root yaml.Node
	if err := yaml.Unmarshal(bytes, &root); err != nil {
		return nil, err
	}

	return &Source{file: file, root: &root}, nil
}
```

Finding the source location of a `Path` comes down to walking a tree of YAML nodes:

```go
func (source *Source) Location(path Path) *Location {
	cursor := source.root
walk:
	for len(path) > 0 {
		switch cursor.Kind {
		// Ignore multiple docs in our PoC.
		case yaml.DocumentNode:
			cursor = cursor.Content[0]
		case yaml.MappingNode:
			// Objects are stored as an array.
			// Content[2 * n] holds the key and
			// Content[2 * n + 1] the value.
			found := false
			for i := 0; i+1 < len(cursor.Content); i += 2 {
				if cursor.Content[i].Value == path[0] {
					cursor = cursor.Content[i+1]
					path = path[1:]
					found = true
					// Stop scanning: cursor now points at the child.
					break
				}
			}
			if !found {
				// Unknown key: report the deepest node we reached.
				break walk
			}
		default:
			// Scalars and arrays are not traversed in this PoC.
			break walk
		}
	}
	return &Location{
		File:   source.file,
		Line:   cursor.Line,
		Column: cursor.Column,
	}
}
```

Sets and trees of paths

With that out of the way, we’ve reduced the problem from automatically inferring source locations that are used in a policy to automatically inferring attribute paths.

This is also significant for other reasons — for example, Snyk can apply the same policies to IaC resources as well as resources discovered through cloud scans, the latter of which don’t really have meaningful source locations, but they do have meaningful attribute paths!

So, we want to define sets of attribute paths. Since a `Path` is a slice, and slices are not comparable in Go, we unfortunately can’t use something like `map[Path]struct{}` as a set.

Instead, we will need to store these in a recursive tree.

```go
type PathTree map[string]PathTree
```

This representation has other advantages. In general, we only care about the longest paths that a policy uses, since they are more specific. Our example policy is using `Path{"Resources", "PrivateSubnet", "Properties"}` as well as `Path{"Resources", "PrivateSubnet", "Properties", "CidrBlock"}`, and we only care about the latter.

We’ll define a recursive method to insert a `Path` into our tree:

```go
func (tree PathTree) Insert(path Path) {
	if len(path) > 0 {
		if _, ok := tree[path[0]]; !ok {
			tree[path[0]] = PathTree{}
		}
		tree[path[0]].Insert(path[1:])
	}
}
```

…as well as a way to get a list of `Paths` back out. This does a bit of unnecessary allocation, but we can live with that.

```go
func (tree PathTree) List() []Path {
	if len(tree) == 0 {
		// Return the empty path.
		return []Path{{}}
	}
	out := []Path{}
	for k, child := range tree {
		// Prepend `k` to every child path.
		for _, childPath := range child.List() {
			path := Path{k}
			path = append(path, childPath...)
			out = append(out, path)
		}
	}
	return out
}
```

We now have a way to nicely store the `Paths` that were used by a policy, and we have a way to convert those into source locations.
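To see the “longest path wins” behaviour in action, here is a small standalone program that repeats the `Path` and `PathTree` definitions and inserts a path along with one of its prefixes. The `sortedPaths` helper is our own addition for illustration; it only exists because Go map iteration order is not deterministic.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

type Path []string

type PathTree map[string]PathTree

// Insert adds a path to the tree, creating intermediate nodes as needed.
func (tree PathTree) Insert(path Path) {
	if len(path) > 0 {
		if _, ok := tree[path[0]]; !ok {
			tree[path[0]] = PathTree{}
		}
		tree[path[0]].Insert(path[1:])
	}
}

// List returns every root-to-leaf path in the tree.
func (tree PathTree) List() []Path {
	if len(tree) == 0 {
		return []Path{{}}
	}
	out := []Path{}
	for k, child := range tree {
		for _, childPath := range child.List() {
			out = append(out, append(Path{k}, childPath...))
		}
	}
	return out
}

// sortedPaths renders the listed paths as dotted strings in sorted order.
func sortedPaths(tree PathTree) []string {
	out := []string{}
	for _, path := range tree.List() {
		out = append(out, strings.Join(path, "."))
	}
	sort.Strings(out)
	return out
}

func main() {
	tree := PathTree{}
	tree.Insert(Path{"Resources", "PrivateSubnet", "Properties"})
	tree.Insert(Path{"Resources", "PrivateSubnet", "Properties", "CidrBlock"})
	tree.Insert(Path{"Resources", "PrivateSubnet", "Type"})
	for _, path := range sortedPaths(tree) {
		fmt.Println(path)
	}
}
```

The shorter `Properties` path does not show up on its own, because it is an inner node of the tree rather than a leaf. Only the `CidrBlock` and `Type` paths are listed.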

Static vs runtime analysis

The next question is to figure out which `Paths` in a given input are used by a policy, and then `Insert` those into the tree.

This is not an easy question, as the code may manipulate the input in different ways before using the paths. We’ll need to look through user-defined functions (`has_bad_subnet`) as well as built-in functions (`object.get`), just to illustrate one of the possible obstacles:

```rego
has_bad_subnet(props) {
	[_, mask] = split(props.CidrBlock, "/")
	to_number(mask) < 24
}

deny[resourceId] {
	resource := input.Resources[resourceId]
	resource.Type == "AWS::EC2::Subnet"
	has_bad_subnet(object.get(resource, "Properties", {}))
}
```

Fortunately, we are not alone in this since people have been curious about what programs do since the first program was written. There are generally two ways of answering a question like that about a piece of code:

Static analysis: Try to answer by looking at the syntax tree, types, and other static information that we can retrieve from (or add to) the OPA interpreter. The advantage is that we don’t need to run this policy, which is great if we don’t trust the policy authors. The downside is that static analysis techniques will usually result in some false negatives as well as false positives.

Runtime analysis: Trace the execution of specific policies, and infer which `Paths` are being used by looking at runtime information. The downside here is that we actually need to run the policy, and adding this analysis may slow down policy evaluation.

We tried both approaches but decided to go with the latter since we found it much easier to implement reliably, and the performance overhead was negligible. It’s also worth mentioning that this is not a binary choice — you could take a hybrid approach and combine the two.

OPA provides a Tracer interface that can be used to receive events about what the interpreter is doing. A common use case for tracers is to send metrics or debug information to some centralized log. Today, we’ll be using it for something else, though.

```go
type locationTracer struct {
	tree PathTree
}

func newLocationTracer() *locationTracer {
	return &locationTracer{tree: PathTree{}}
}

func (tracer *locationTracer) Enabled() bool {
	return true
}
```

Tracing usage of terms

Rego is an expressive language. Even though some desugaring happens to reduce it to a simpler format for the interpreter, there are still a fair number of events.

We are only interested in two of them, which together cover three cases. We consider a value used if:

1. It is unified (you can think of this as assigned; we won’t go into detail) against another expression, such as:

```rego
x = input.Foo
```

This also covers `==` and `:=`. Since this is a test that can fail, we can state we used the left-hand side as well as the right-hand side.

2. It is used as an argument to a built-in function, like:

```rego
regex.match("/24$", input.cidr)
```

While Rego borrows some concepts from lazy languages, arguments to built-in functions are always completely grounded before the built-in is invoked. Therefore, we can say we used all arguments supplied to the built-in.

3. It is used as a standalone expression, such as:

```rego
volume.encrypted
```

This is commonly used to evaluate booleans and check that attributes exist.

Now, it’s time to implement. We match two events and delegate to a specific function to make the code a bit more readable:

```go
func (tracer *locationTracer) Trace(event *topdown.Event) {
	switch event.Op {
	case topdown.UnifyOp:
		tracer.traceUnify(event)
	case topdown.EvalOp:
		tracer.traceEval(event)
	}
}
```

We’ll handle the insertion into our `PathTree` later in an auxiliary function called `used(*ast.Term)`. For now, let’s mark both the left- and right-hand sides of the unification as used:

```go
func (tracer *locationTracer) traceUnify(event *topdown.Event) {
	if expr, ok := event.Node.(*ast.Expr); ok {
		operands := expr.Operands()
		if len(operands) == 2 {
			// Unification (1)
			tracer.used(event.Plug(operands[0]))
			tracer.used(event.Plug(operands[1]))
		}
	}
}
```

`event.Plug` is a helper to fill in variables with their actual values.
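If “plugging” is unfamiliar, the toy model below may help. It is not OPA’s implementation: `Term`, `Bindings`, and `plug` are hypothetical names we use purely for illustration, and real Rego terms are far richer than a variable-or-constant pair. The idea is simply that a variable is replaced by whatever value it is currently bound to.

```go
package main

import "fmt"

// Term is a toy stand-in for a Rego term: either a variable or a constant.
type Term struct {
	Var   string // non-empty when the term is a variable
	Const string // the value when the term is a constant
}

// Bindings maps variable names to the terms they are currently bound to.
type Bindings map[string]Term

// plug replaces a variable with its bound value, following chains of
// bindings. Unbound variables and constants are returned unchanged.
func plug(term Term, bindings Bindings) Term {
	// A chain can visit each binding at most once, so len(bindings) hops
	// are enough; the bound also guards against cyclic bindings.
	for hops := 0; hops < len(bindings) && term.Var != ""; hops++ {
		bound, ok := bindings[term.Var]
		if !ok {
			break
		}
		term = bound
	}
	return term
}

func main() {
	bindings := Bindings{
		"mask":  {Const: "20"},
		"alias": {Var: "mask"},
	}
	fmt.Println(plug(Term{Var: "alias"}, bindings).Const)   // bound via a chain
	fmt.Println(plug(Term{Var: "unbound"}, bindings).Var)   // stays a variable
	fmt.Println(plug(Term{Const: "literal"}, bindings).Const) // unchanged
}
```

In the tracer, the important consequence is that the plugged term is the actual value from the input document, which is what we want to inspect in `used`.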

An `EvalOp` event covers both (2) and (3) mentioned above. In the case of a built-in function, we will have an array of terms, of which the first element is the function, and the remaining elements are the arguments. We can check that we’re dealing with a built-in function by looking in `ast.BuiltinMap`.

The case for a standalone expression is easy.

```go
func (tracer *locationTracer) traceEval(event *topdown.Event) {
	if expr, ok := event.Node.(*ast.Expr); ok {
		switch terms := expr.Terms.(type) {
		case []*ast.Term:
			if len(terms) < 1 {
				// I'm not sure what this is, but it's definitely
				// not a built-in function application.
				break
			}
			operator := terms[0]
			if _, ok := ast.BuiltinMap[operator.String()]; ok {
				// Built-in function call (2)
				for _, term := range terms[1:] {
					tracer.used(event.Plug(term))
				}
			}
		case *ast.Term:
			// Standalone expression (3)
			tracer.used(event.Plug(terms))
		}
	}
}
```

Annotating terms

When we try to implement `used(*ast.Term)`, the next question poses itself — given a term, how do we map it to a `Path` in the input?

One option would be to search the input document for matching terms. But that would produce a lot of false positives, since a given string like `10.0.0.0/24` may appear many times in the input!
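To make the ambiguity concrete, here is a small standalone sketch that searches a nested document for a value. Both the `findValue` helper and the input document are hypothetical and not part of the PoC; the input is plain Go maps rather than OPA terms.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// findValue returns the dotted paths of every string leaf equal to want.
// Only nested objects are walked, matching the scope of the PoC.
func findValue(doc map[string]interface{}, want string, prefix []string) []string {
	var out []string
	for key, value := range doc {
		// Copy the prefix so sibling keys never share a backing array.
		path := append(append([]string{}, prefix...), key)
		switch v := value.(type) {
		case string:
			if v == want {
				out = append(out, strings.Join(path, "."))
			}
		case map[string]interface{}:
			out = append(out, findValue(v, want, path)...)
		}
	}
	sort.Strings(out)
	return out
}

func main() {
	// Hypothetical input: two subnets that happen to share a CIDR block.
	doc := map[string]interface{}{
		"Resources": map[string]interface{}{
			"SubnetA": map[string]interface{}{
				"Properties": map[string]interface{}{"CidrBlock": "10.0.0.0/24"},
			},
			"SubnetB": map[string]interface{}{
				"Properties": map[string]interface{}{"CidrBlock": "10.0.0.0/24"},
			},
		},
	}
	for _, path := range findValue(doc, "10.0.0.0/24", nil) {
		fmt.Println(path)
	}
}
```

The search reports both subnets, and the value alone gives us no way to tell which one the policy actually looked at.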

Instead, we will annotate all terms with their path. Terms in OPA can contain some metadata, including the location in the Rego source file. We can reuse this field to store an input `Path`. This is a bit hacky, but with some squinting, we are morally on the right side, since the field is meant to store locations.
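Since the location metadata only gives us a string field to work with, the path has to be packed into a string and unpacked again later. The sketch below shows one way to do that round trip with the standard library, using the same `path:` prefix and JSON encoding as the `annotate` and `used` functions in this post. The `encodePath` and `decodePath` helper names are ours and do not appear in the PoC.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

type Path []string

const pathPrefix = "path:"

// encodePath packs a Path into a string suitable for a file-name field.
func encodePath(path Path) (string, error) {
	bytes, err := json.Marshal(path)
	if err != nil {
		return "", err
	}
	return pathPrefix + string(bytes), nil
}

// decodePath recovers a Path from such a string. The boolean is false for
// strings that do not carry the prefix, such as a real Rego file name.
func decodePath(file string) (Path, bool) {
	if !strings.HasPrefix(file, pathPrefix) {
		return nil, false
	}
	var path Path
	raw := strings.TrimPrefix(file, pathPrefix)
	if err := json.Unmarshal([]byte(raw), &path); err != nil {
		return nil, false
	}
	return path, true
}

func main() {
	encoded, err := encodePath(Path{"Resources", "Vpc", "Properties", "CidrBlock"})
	if err != nil {
		panic(err)
	}
	fmt.Println(encoded)

	if path, ok := decodePath(encoded); ok {
		fmt.Println(len(path), path[len(path)-1])
	}
	_, ok := decodePath("policy.rego")
	fmt.Println(ok)
}
```

JSON is a convenient encoding here because attribute names may themselves contain dots or slashes, which would make a simple separator-joined string ambiguous.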

The following snippet illustrates how we want to annotate the first few lines of our CloudFormation template:

```yaml
Resources:                   # ["Resources"]
  Vpc:                       # ["Resources", "Vpc"]
    Type: AWS::EC2::VPC      # ["Resources", "Vpc", "Type"]
    Properties:              # ["Resources", "Vpc", "Properties"]
      CidrBlock: 10.0.0.0/16 # ["Resources", "Vpc", "Properties", "CidrBlock"]
```

`annotate` implements a recursive traversal to determine the `Path` at each node in the value. For conciseness, we only support objects and leave sets and arrays out.

```go
func annotate(path Path, term *ast.Term) {
	// Annotate current term by setting location.
	if bytes, err := json.Marshal(path); err == nil {
		term.Location = &ast.Location{}
		term.Location.File = "path:" + string(bytes)
	}
	// Recursively annotate children.
	switch value := term.Value.(type) {
	case ast.Object:
		for _, key := range value.Keys() {
			if str, ok := key.Value.(ast.String); ok {
				path = append(path, string(str))
				annotate(path, value.Get(key))
				path = path[:len(path)-1]
			}
		}
	}
}
```

With this annotation in place, it’s easy to write `used(*ast.Term)`. The only thing to keep in mind is that not all values are annotated. We only do that for those coming from the input document, not, for example, literals embedded in the Rego source code.

```go
func (tracer *locationTracer) used(term *ast.Term) {
	if term.Location != nil {
		val := strings.TrimPrefix(term.Location.File, "path:")
		if len(val) != len(term.Location.File) {
			// Only when we stripped a "path:" prefix.
			var path Path
			if err := json.Unmarshal([]byte(val), &path); err == nil {
				tracer.tree.Insert(path)
			}
		}
	}
}
```

Wrapping up

That’s it, folks! We skipped over a lot of details, such as arrays and how to apply this to a more complex IaC language like HCL.

In addition to that, we’re also marking the `Type` attributes as used, since we check those in our policy. This isn’t great, and as an alternative, we try to provide a resources-oriented Rego API instead. But that’s beyond the scope of this example.

If you’re interested in learning more about any of these features, we recommend checking out snyk/policy-engine for the core implementation or the updated Snyk IaC, which comes with this and a whole host of other features, including an exhaustive rule bundle.

What follows is a main function to tie everything together and print out some debug information. It’s mostly just wrapping up the primitives we defined so far, and running it on an example. But let’s include it to make this post function as a reproducible standalone example.

```go
// The snippets in this post rely on context, encoding/json, fmt, io/ioutil,
// os and strings from the standard library, a YAML v3 package such as
// gopkg.in/yaml.v3, and the ast, rego and topdown packages from
// github.com/open-policy-agent/opa.

func infer(policy string, file string) error {
	source, err := NewSource(file)
	if err != nil {
		return err
	}

	bytes, err := ioutil.ReadFile(file)
	if err != nil {
		return err
	}

	// Parse the template into plain Go values for OPA. NewSource has
	// already parsed it into YAML nodes for the location lookups.
	var doc interface{}
	if err := yaml.Unmarshal(bytes, &doc); err != nil {
		return err
	}

	input, err := ast.InterfaceToValue(doc)
	if err != nil {
		return err
	}

	annotate(Path{}, ast.NewTerm(input))
	if bytes, err = ioutil.ReadFile(policy); err != nil {
		return err
	}

	tracer := newLocationTracer()
	results, err := rego.New(
		rego.Module(policy, string(bytes)),
		rego.ParsedInput(input),
		rego.Query("data.policy.deny"),
		rego.Tracer(tracer),
	).Eval(context.Background())
	if err != nil {
		return err
	}

	fmt.Fprintf(os.Stderr, "Results: %v\n", results)
	for _, path := range tracer.tree.List() {
		fmt.Fprintf(os.Stderr, "Location: %s\n", source.Location(path).String())
	}
	return nil
}

func main() {
	if err := infer("policy.rego", "template.yml"); err != nil {
		fmt.Fprintf(os.Stderr, "%s\n", err)
		os.Exit(1)
	}
}
```

The full code for this PoC can be found in this gist.
