Installing multiple Snyk Kubernetes controllers into a single Kubernetes cluster

August 15, 2022

Kubernetes provides an interface to run distributed systems smoothly. It takes care of scaling and failover for your applications, provides deployment patterns, and more. Regarding security, it’s the teams deploying workloads onto the Kubernetes cluster that have to consider which workloads they want to monitor for their application security requirements.

But what if each team could actually control which container images are scanned and for which workloads in particular? There could be multiple teams deploying to Kubernetes, each with unique requirements around what is monitored. This use case is best solved by allowing each team to set up their own Snyk Kubernetes integration and monitor the workloads they need to. Let’s take a look at how it works.

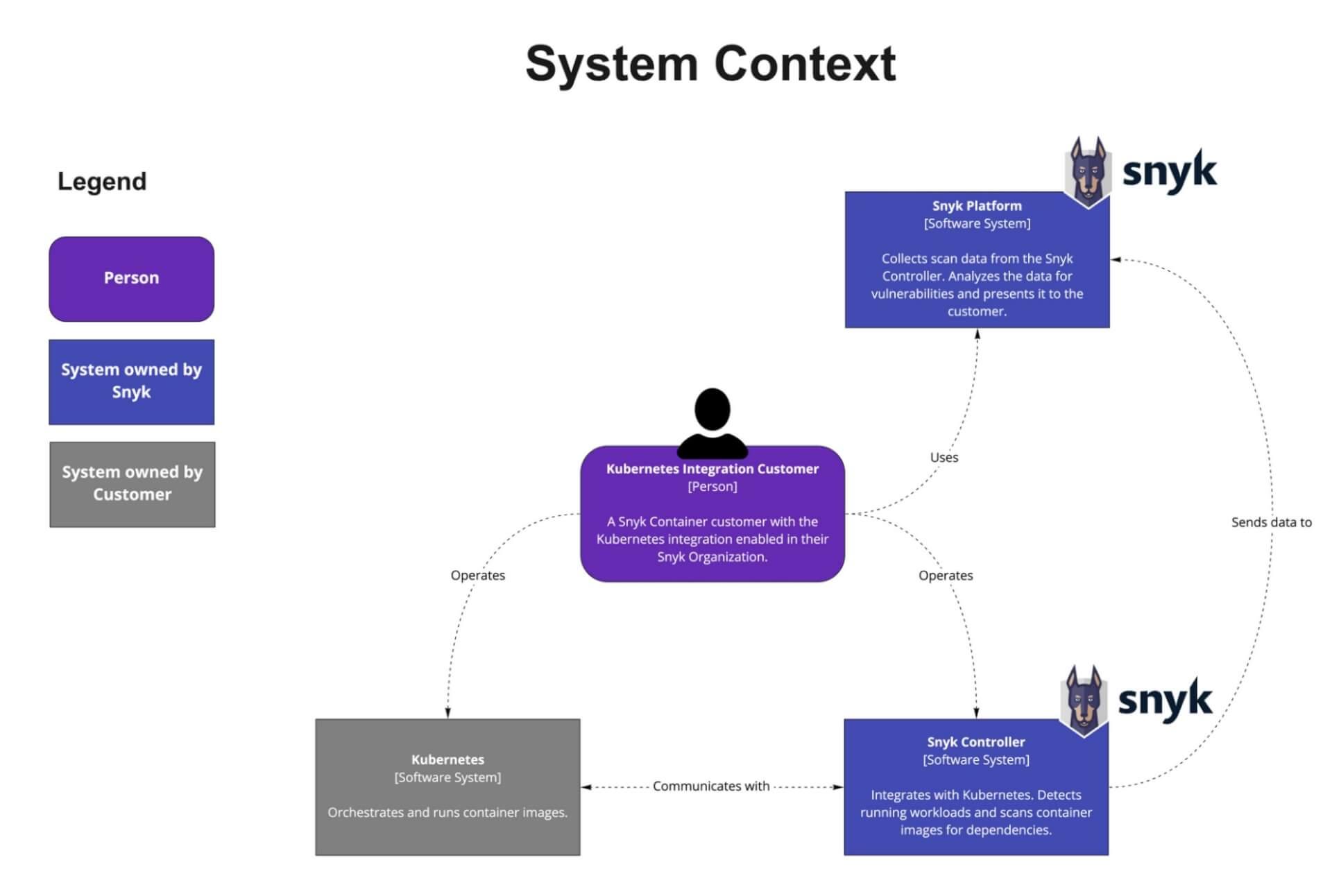

Snyk Kubernetes integration overview

Snyk integrates with Kubernetes, enabling you to import and continually test your running workloads to identify vulnerabilities in their underlying images, as well as any configurations that might make those workloads less secure. Once workloads are deployed, Snyk continues to monitor those workloads, identifying exposure to newly-discovered vulnerabilities, as well as any additional security issues as new containers are deployed or the workload configuration changes.

Kubernetes integration architecture diagram

How to install multiple Snyk controllers into a single Kubernetes cluster

Now the fun part! We’re going to walk through step-by-step instructions for setting up multiple Snyk Controllers in a single Kubernetes cluster. Here are the steps we’ll take:

1. Configure separate namespaces
2. Create a Snyk organization for each namespace
3. Install and configure snyk monitor for the first namespace (apples)
4. Install and configure snyk monitor for the second namespace (bananas)
5. Verify that deployed workloads are automatically imported via the policy
6. Deploy workloads to bananas that are auto-imported through a Rego policy file

Prerequisites

You’ll need to have a Business or Enterprise account with Snyk in order to use the Kubernetes integration. If you don’t have one of these, you can start a free trial of our Business plan. Even if you don’t want to try it yourself, I’d still encourage you to keep reading (skipping the code blocks) to learn how to install and manage multiple Snyk controllers in a single Kubernetes cluster.

A Kubernetes cluster such as AKS, EKS, GKE, Red Hat OpenShift, or any other supported flavor.

Step 1: Configure separate namespaces

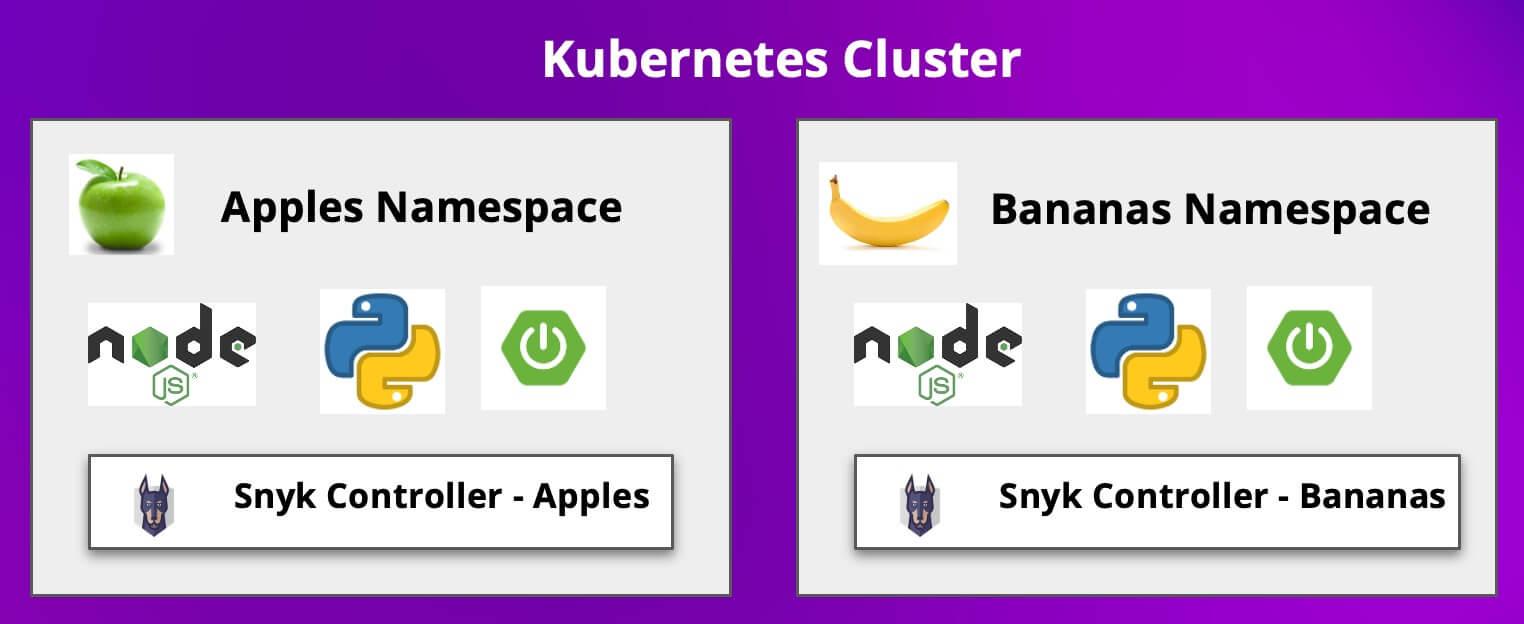

In this demo, I am using Azure Kubernetes Service (AKS) for my Kubernetes cluster. In this cluster we will install two Snyk controllers, each in its own namespace: one in the apples namespace and one in the bananas namespace, as shown in the diagram.

Go ahead and create the namespaces as shown below:

1➜ kubectl create namespace apples

2namespace/apples created

3➜ kubectl create namespace bananas

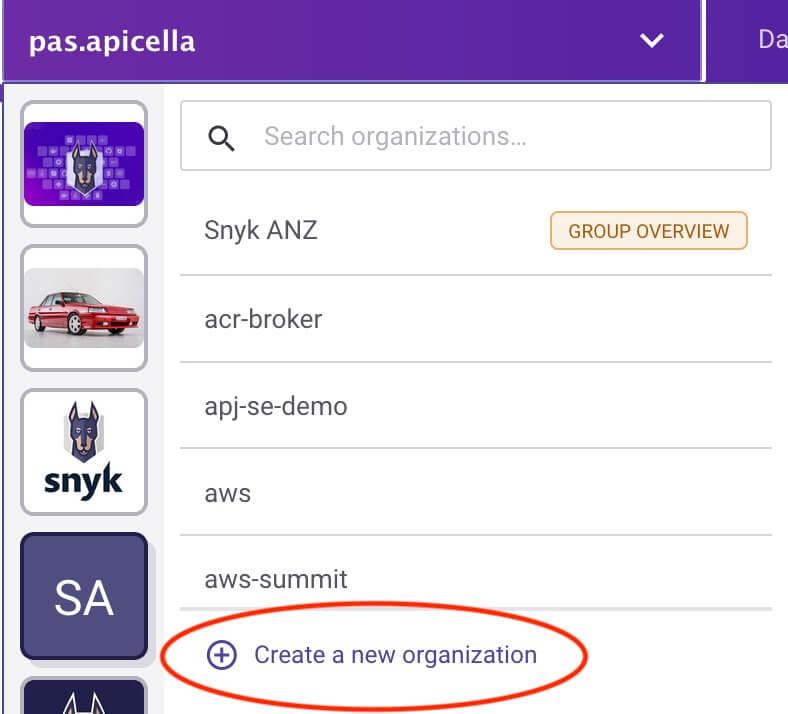

4namespace/bananas createdStep 2: Create a Snyk organization for each namespace

Using separate Snyk organizations keeps workload scanning separate, giving each feature team its own organization and better control over the scanning of its workloads and images. You're free to use the same organization with each of the Snyk controllers that we deploy, but in this case we'll keep them distinct. In either case, the workloads will appear under a label we set when we install the Snyk controllers.

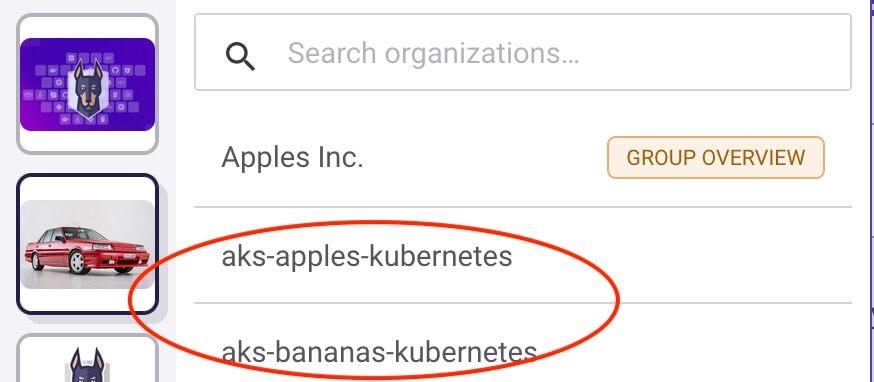

Our new organizations

One for the apples namespace:

One for the bananas namespace:

Once created you should see two empty organizations within the Snyk app. We will use them shortly.

For each organization, enable the Kubernetes integration by selecting the Integrations tab, selecting Kubernetes, and choosing Connect. Then refer to the Snyk Kubernetes documentation to complete the setup.

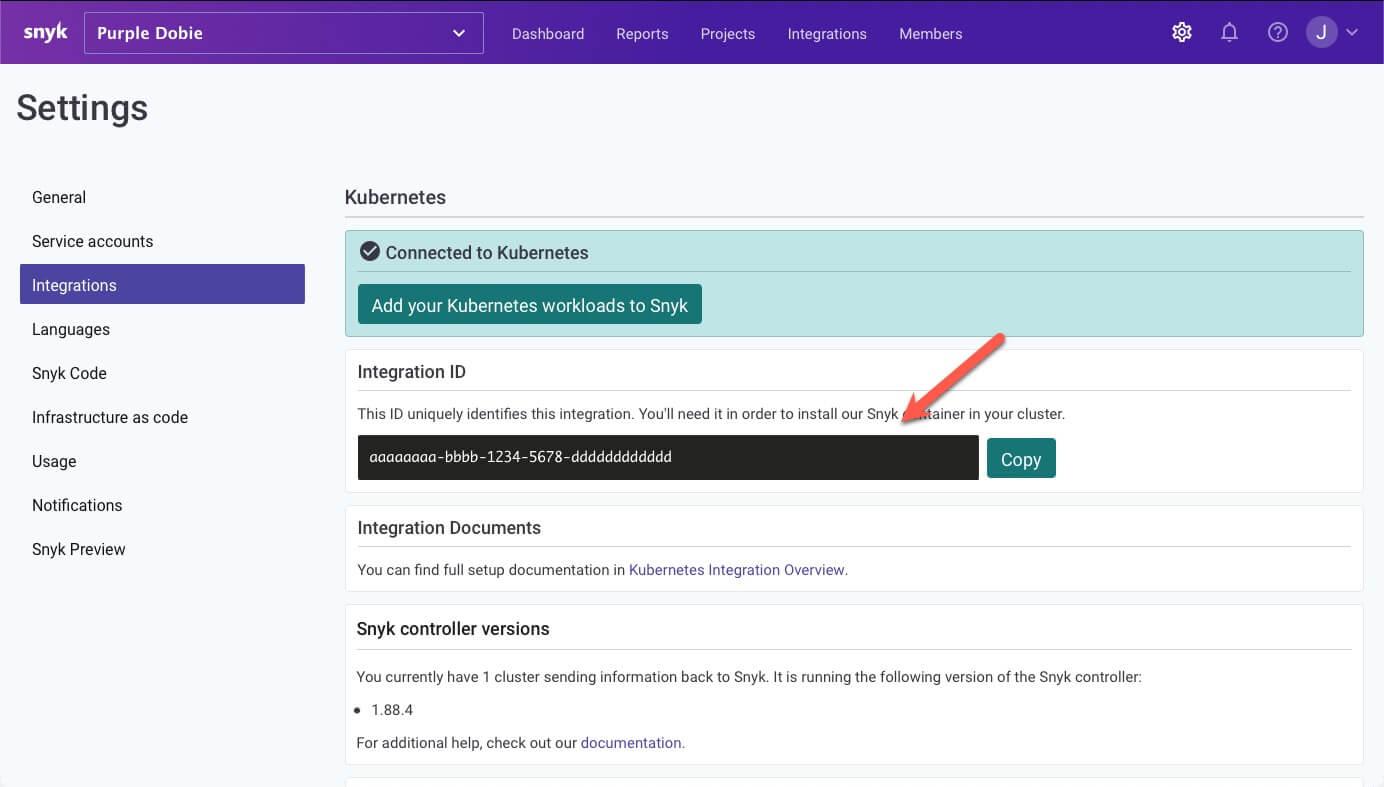

Please make a note of the Integration ID for each Snyk organization's Kubernetes integration, because you will need them soon. You can retrieve the Kubernetes Integration ID by clicking the organization settings icon on the top navigation bar in Snyk, navigating to Integrations in the sidebar, and selecting the Kubernetes integration, as shown below.

Step 3: Install and configure snyk monitor for the apples namespace

Now that we have our organizations and integrations set up and connected to our cluster, we can configure the Snyk monitor, which we'll do from a terminal window.

We'll create a policy file that subscribes to workload events and defines the criteria for those subscriptions, add Snyk's Helm chart repository for Kubernetes monitoring, and then install and start the monitor.

Create the Rego policy file

In a terminal, create a directory for the apples organization and change to it:

    ➜ mkdir apples
    ➜ cd apples

Create a file called workload-events.rego with content as follows, replacing <APPLES_INTEGRATION_ID> with the Integration ID of the apples org's Kubernetes integration.

The Rego policy below instructs the Snyk controller to import or delete workloads from the apples namespace only, and only when the Kubernetes workload type is not a CronJob or Service. To learn more about the different workload types, consult the Snyk documentation.

workload-events.rego

    package snyk

    orgs := ["<APPLES_INTEGRATION_ID>"]

    default workload_events = false

    workload_events {
        input.metadata.namespace == "apples"
        input.kind != "CronJob"
        input.kind != "Service"
    }

Add the Snyk Helm charts to your repo

Access your Kubernetes environment and run the following command to add the Snyk Charts repository to Helm. We will need it when we install the Helm chart shortly.

    ➜ helm repo add snyk-charts https://snyk.github.io/kubernetes-monitor --force-update
    "snyk-charts" has been added to your repositories

Create the configuration for Snyk monitor and deploy the Helm chart

Using a Kubernetes secret allows us to run without leaking plain-text credentials. In these steps we'll create and use the secret as we deploy our configuration for the apples organization.

Create the snyk-monitor secret for the apples namespace, replacing <APPLES_INTEGRATION_ID> with the apples organization's Kubernetes integration ID from the Snyk app. In this demo, we are using the public Docker Hub as our container registry, which does not require credentials when accessing public images. For more information on using private container registries, see the Snyk controller documentation.

    ➜ kubectl create secret generic snyk-monitor -n apples \
        --from-literal=dockercfg.json={} \
        --from-literal=integrationId=<APPLES_INTEGRATION_ID>
    secret/snyk-monitor created

Now create a configmap to store the Rego policy file that the snyk-monitor uses to determine what to auto import or delete from the apples namespace:

    ➜ kubectl create configmap snyk-monitor-custom-policies -n apples \
        --from-file=./workload-events.rego
    configmap/snyk-monitor-custom-policies created

Next, install the snyk-monitor into the apples namespace, replacing <APPLES_INTEGRATION_ID> in the command with the apples organization's Kubernetes integration ID:

    ➜ helm upgrade --install snyk-monitor-apples snyk-charts/snyk-monitor \
        --namespace apples \
        --set clusterName="AKS K8s - Apples" \
        --set policyOrgs=<APPLES_INTEGRATION_ID> \
        --set workloadPoliciesMap=snyk-monitor-custom-policies

    Release "snyk-monitor-apples" does not exist. Installing it now.
    LAST DEPLOYED: Thu Jul 14 19:29:28 2022
    NAMESPACE: apples
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None

Note: The clusterName value, “AKS K8s - Apples”, is the label under which you will find the imported workloads in the Snyk UI, as you will see soon.

Finally, verify everything is up and running (this command assumes that you've got jq installed; if not, omit the | jq portion of the instruction):

1➜ helm ls -A -o json | jq

2[

3 {

4"name": "snyk-monitor-apples",

5"namespace": "apples",

6"revision": "1",

7"updated": "2022-07-14 19:45:56.681028 +1000 AEST",

8"status": "deployed",

9"chart": "snyk-monitor-1.92.10",

10"app_version": ""

11 }

12]

13

14➜ kubectl get pods -n apples

15NAME READY STATUS RESTARTS AGE

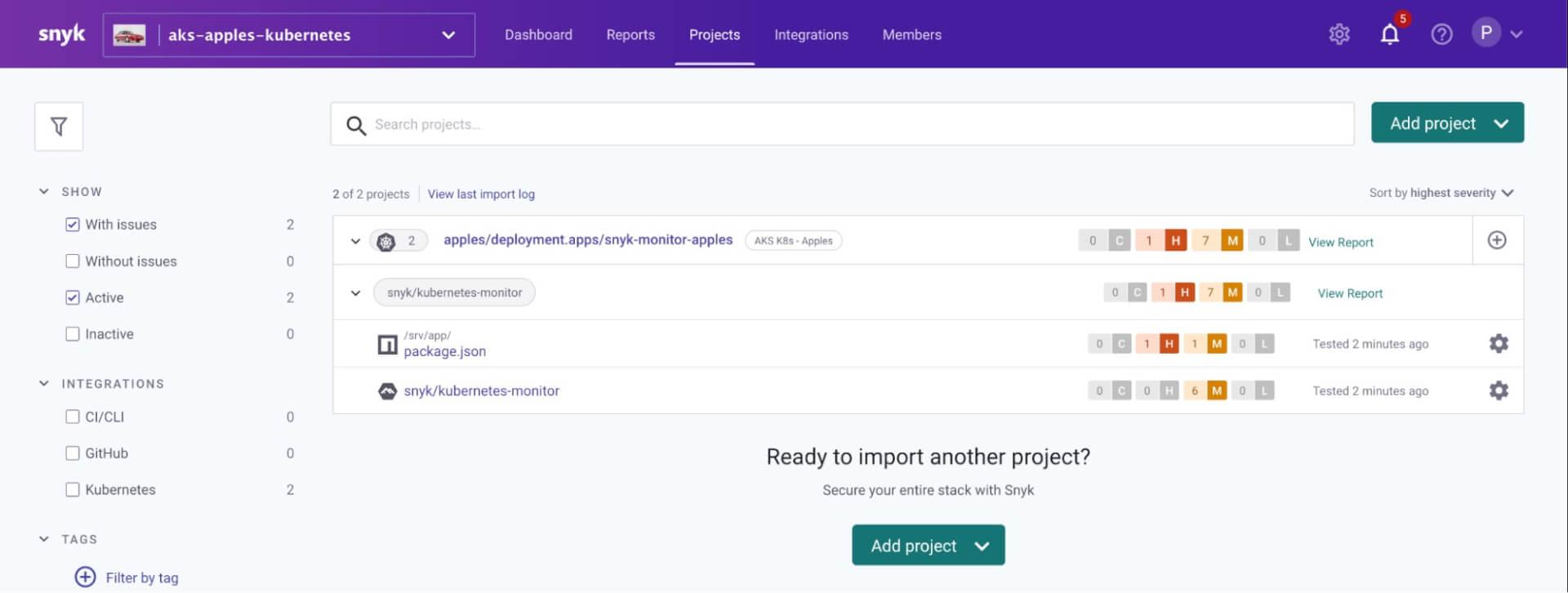

16snyk-monitor-apples-797cd64c-sqvj4 1/1 Running 0 16mAt this point, we have a Snyk controller installed in the apples namespace with its own unique name. Later we will show how this Snyk controller automatically imports workloads based on what we have configured as the workloads are deployed to the Kubernetes cluster’s apples namespace. For now, though, you may notice that if you refresh the projects page for the apples organization that it has scanned the Snyk monitor itself: that is because we told it to scan workloads of type Deployment in the apples namespace
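The three conditions in workload-events.rego can be mirrored in a few lines of shell to see which workloads the apples controller would pick up. This is only a sketch of the rule's logic: matches_policy and report are hypothetical helpers, not part of Snyk's tooling, and the real decision is made by the Rego policy loaded into snyk-monitor.

```shell
# Succeeds (exit 0) when a workload satisfies all three policy conditions.
# Illustration only; snyk-monitor evaluates the actual Rego file.
matches_policy() {
  policy_namespace="$1"    # namespace named in the policy, e.g. apples
  workload_namespace="$2"  # namespace the workload runs in
  workload_kind="$3"       # Kubernetes kind, e.g. Deployment
  [ "$workload_namespace" = "$policy_namespace" ] &&
    [ "$workload_kind" != "CronJob" ] &&
    [ "$workload_kind" != "Service" ]
}

# Prints the outcome for one workload as seen by the apples policy.
report() {
  if matches_policy apples "$1" "$2"; then
    echo "$1/$2: imported"
  else
    echo "$1/$2: ignored"
  fi
}

report apples Deployment   # the snyk-monitor Deployment itself falls here
report apples CronJob      # excluded by kind
report bananas Deployment  # excluded by namespace
```

Only the first call reports "imported", which is why the monitor's own Deployment shows up in the apples organization while nothing from bananas does.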

Step 4: Install and configure snyk monitor for the bananas namespace

Create a directory called bananas and change to it:

    ➜ cd ..
    ➜ mkdir bananas
    ➜ cd bananas

Now create a file called workload-events.rego with content as follows, replacing <BANANAS_INTEGRATION_ID> with the bananas organization's Kubernetes integration ID from the Snyk app:

    package snyk

    orgs := ["<BANANAS_INTEGRATION_ID>"]

    default workload_events = false

    workload_events {
        input.metadata.namespace == "bananas"
        input.kind != "CronJob"
        input.kind != "Service"
    }

Next, create the snyk-monitor secret for the bananas namespace, replacing <BANANAS_INTEGRATION_ID> with the bananas organization's Kubernetes integration ID:

    ➜ kubectl create secret generic snyk-monitor -n bananas \
        --from-literal=dockercfg.json={} \
        --from-literal=integrationId=<BANANAS_INTEGRATION_ID>
    secret/snyk-monitor created

Then, create a configmap to store the Rego policy file that the snyk-monitor uses to determine what to auto import or delete from the bananas namespace:

    ➜ kubectl create configmap snyk-monitor-custom-policies -n bananas --from-file=./workload-events.rego
    configmap/snyk-monitor-custom-policies created

Install the snyk-monitor into the bananas namespace, replacing <BANANAS_INTEGRATION_ID> with the bananas organization's Kubernetes integration ID:

    ➜ helm upgrade --install snyk-monitor-bananas snyk-charts/snyk-monitor \
        --namespace bananas \
        --set clusterName="K8s - Bananas" \
        --set policyOrgs=<BANANAS_INTEGRATION_ID> \
        --set workloadPoliciesMap=snyk-monitor-custom-policies
    Release "snyk-monitor-bananas" does not exist. Installing it now.
    NAME: snyk-monitor-bananas
    LAST DEPLOYED: Thu Jul 14 20:19:36 2022
    NAMESPACE: bananas
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None

Finally, verify everything is up and running:

    ➜ helm ls -A -o json | jq .
    [
      {
        "name": "snyk-monitor-apples",
        "namespace": "apples",
        "revision": "1",
        "updated": "2022-07-14 19:45:56.681028 +1000 AEST",
        "status": "deployed",
        "chart": "snyk-monitor-1.92.10",
        "app_version": ""
      },
      {
        "name": "snyk-monitor-bananas",
        "namespace": "bananas",
        "revision": "1",
        "updated": "2022-07-14 20:19:36.563328 +1000 AEST",
        "status": "deployed",
        "chart": "snyk-monitor-1.92.10",
        "app_version": ""
      }
    ]

    ➜ kubectl get pods -n bananas
    NAME                                    READY   STATUS    RESTARTS   AGE
    snyk-monitor-bananas-54b8c4bf89-r6tf2   1/1     Running   0          3m38s

Step 5: Verify that deployed workloads are automatically imported via the policy

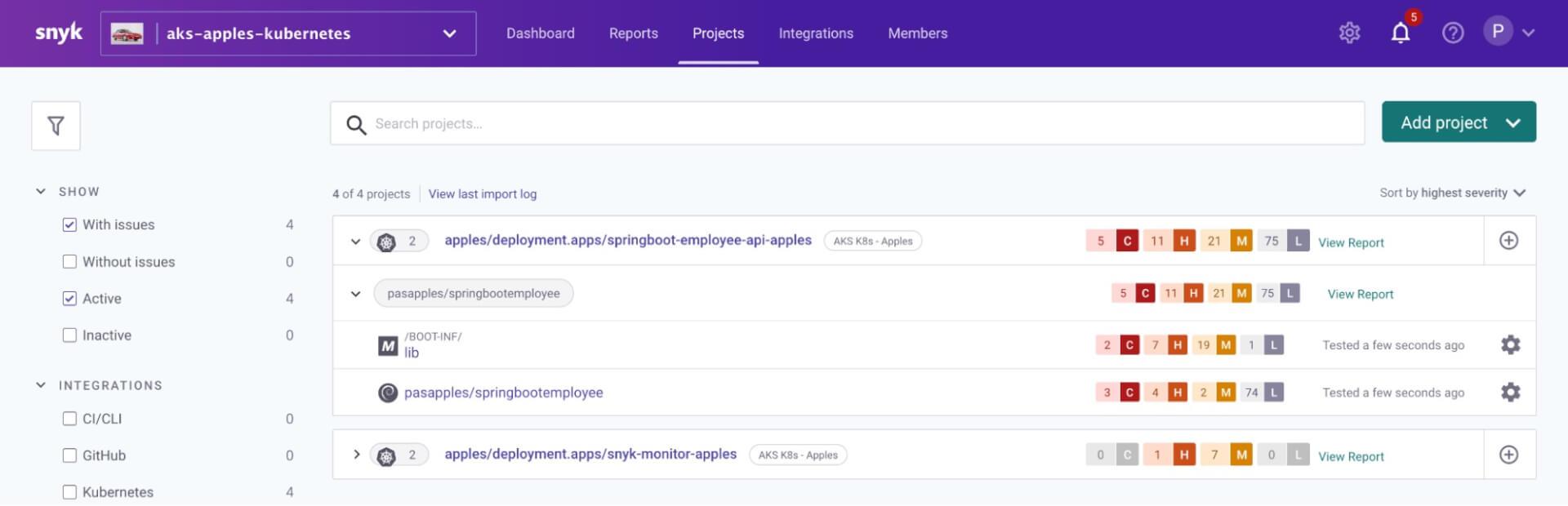

Next, we will deploy workloads to our apples namespace to make sure that they are automatically imported. We'll start with a Spring Boot application which we will deploy to Kubernetes as shown below.

Create a file called springbootemployee-K8s.yaml with the following contents:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: springboot-employee-api-apples
      namespace: apples
    spec:
      selector:
        matchLabels:
          app: springboot-employee-api-apples
      replicas: 1
      template:
        metadata:
          labels:
            app: springboot-employee-api-apples
        spec:
          containers:
            - name: springboot-employee-api-apples
              image: pasapples/springbootemployee:multi-stage-add-layers
              imagePullPolicy: Always
              ports:
                - containerPort: 8080

Then deploy it:

    ➜ kubectl apply -f springbootemployee-K8s.yaml
    deployment.apps/springboot-employee-api-apples created

After a few minutes, verify that the workload has been scanned and automatically imported into the apples organization in Snyk, as instructed by the Snyk controller in the apples namespace.

Step 6: Deploy workloads to bananas — auto imported through Rego policy file

Create a file called snyk-boot-web-deployment.yaml with contents as follows:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: snyk-boot-web
      namespace: bananas
    spec:
      selector:
        matchLabels:
          app: snyk-boot-web
      replicas: 1
      template:
        metadata:
          labels:
            app: snyk-boot-web
        spec:
          containers:
            - name: snyk-boot-web
              image: pasapples/snyk-boot-web:v1
              imagePullPolicy: Always
              ports:
                - containerPort: 5000

Deploy as follows:

1➜ kubectl apply -f snyk-boot-web-deployment.yaml

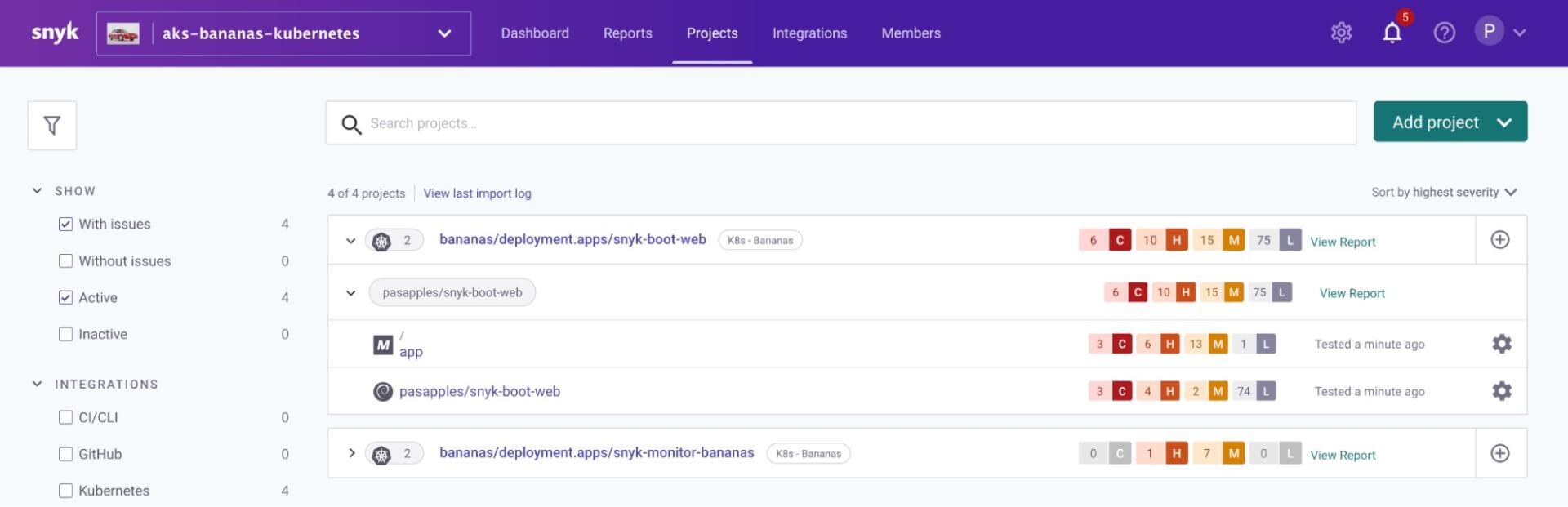

2deployment.apps/snyk-boot-web createdVerify after about 3 minutes that the workload is scanned and is auto imported into the Snyk bananas org in snyk app as instructed to do so by the Snyk controller in the bananas namespace
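The apples and bananas policy files differ only in the namespace and the integration ID, so when more teams are added the file could be generated from a small template rather than written by hand. The following is a minimal sketch under that assumption; render_policy is a hypothetical helper, not part of Snyk's tooling, and the integration ID shown is a placeholder.

```shell
# Prints a workload-events.rego policy for one team to stdout.
# $1: namespace the team's controller should watch
# $2: Integration ID of that team's Snyk Kubernetes integration
render_policy() {
  namespace="$1"
  integration_id="$2"
  cat <<EOF
package snyk

orgs := ["${integration_id}"]

default workload_events = false

workload_events {
    input.metadata.namespace == "${namespace}"
    input.kind != "CronJob"
    input.kind != "Service"
}
EOF
}

# Example: print the bananas policy (placeholder ID, replace before use).
render_policy bananas "<BANANAS_INTEGRATION_ID>"
```

Redirecting the output to ./workload-events.rego inside the team's directory yields a file that can be passed to the kubectl create configmap command used in Steps 3 and 4.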

Bonus: viewing the logs for the Snyk controllers

Kubernetes configuration and YAML files can be somewhat tricky. If you run into issues setting up your monitors, it may be helpful to view the logs for each of the Snyk controllers, as shown in the following command snippets:

Apples Snyk controller

    export APPLES_POD=$(kubectl get pods --namespace apples -l "app.kubernetes.io/name=snyk-monitor-apples" -o jsonpath="{.items[0].metadata.name}")

    kubectl logs -n apples "$APPLES_POD" -f

Bananas Snyk controller

    export BANANAS_POD=$(kubectl get pods --namespace bananas -l "app.kubernetes.io/name=snyk-monitor-bananas" -o jsonpath="{.items[0].metadata.name}")

    kubectl logs -n bananas "$BANANAS_POD" -f

Summary

In this blog we've shown how to deploy multiple Snyk controllers to a single Kubernetes cluster, each monitoring its own namespace. The controllers communicate with the Kubernetes API to determine which workloads (for instance Deployments, ReplicationControllers, and CronJobs, as filtered by the Rego policy file) are running on the cluster, find their associated images, and scan them directly on the cluster for vulnerabilities.

Feel free to try these steps out on your own Kubernetes cluster. To do so, start a free trial of a Snyk Business plan.

Resources to learn more

Now that you have learned how easy it is to set up the Kubernetes integration with Snyk, here are some useful links to help you get started on your container security journey:

How to setup Snyk Container for automatic import/deletion of Kubernetes workloads projects

Learn Rego with Styra’s excellent tutorial course
