Lessons learned from improving full-text search at Snyk with Elasticsearch

November 4, 2021

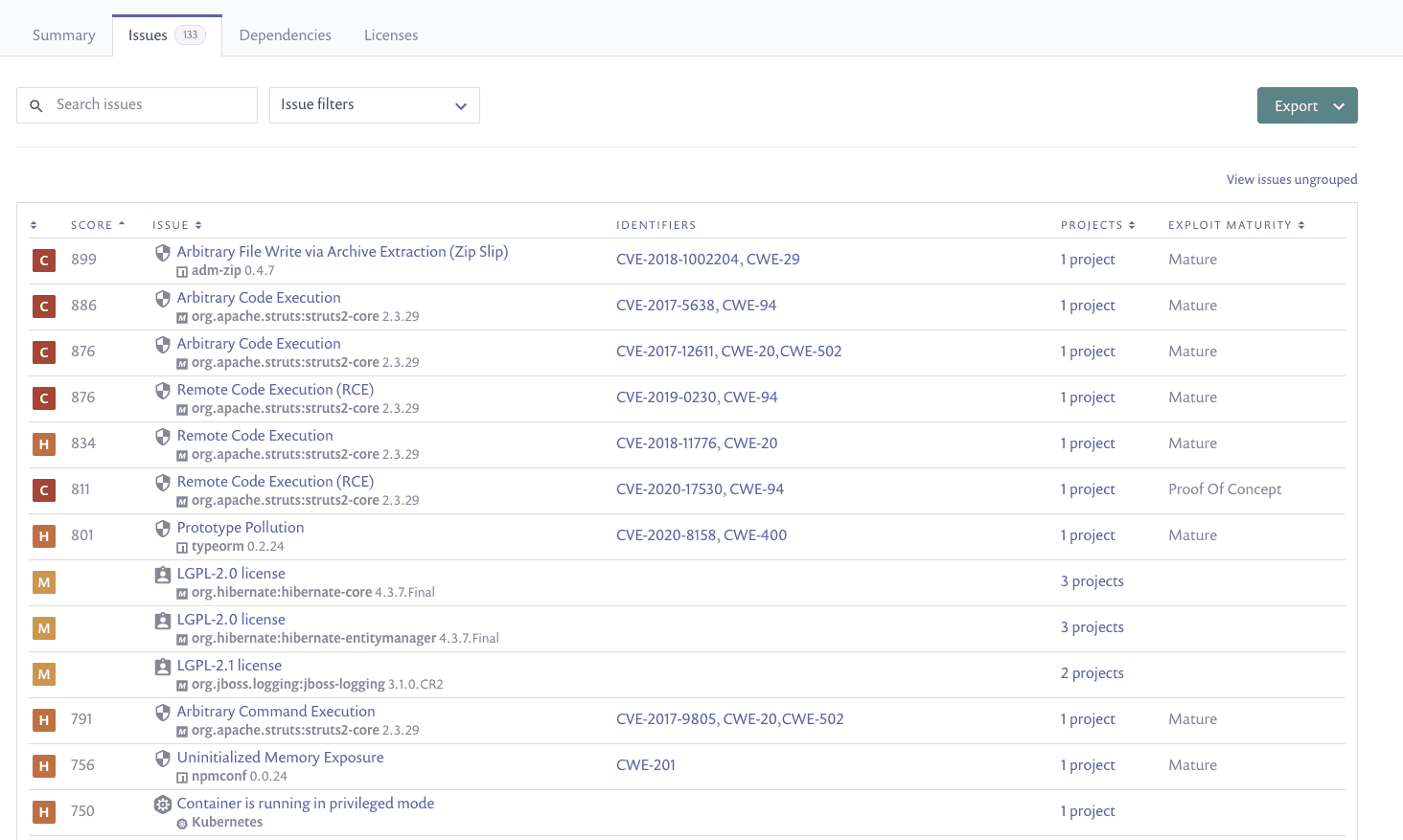

Elasticsearch is a popular open source search engine. Because of its real-time speeds and robust API, it’s a popular choice among developers who need to add full-text search capabilities to their projects. Aside from being generally popular, it’s also the engine we’re currently moving our Snyk reports functionality for issues to! And once we have everything tuned in issues, we’ll start using Elasticsearch in other reporting areas.

While Elasticsearch is powerful, it can also seem complicated at first (unless you have a background in search engines). But since I’ve just recently learned a lot about this tool while implementing it at Snyk, I thought I’d pass some knowledge on to you, developer to developer!

Sometimes a standard analyzer isn’t enough

Elasticsearch stays fast by treating different data, well, differently. Among a wide variety of field types, Elasticsearch has text fields: a regular field type for textual content (i.e., strings). To make information stored in such a field searchable, Elasticsearch performs text analysis on ingest, converting data into tokens (terms) and storing these tokens, along with other relevant information such as length and position, in the index. By default, it uses the standard analyzer for text analysis.

From the official documentation: “The standard analyzer gives you out-of-the-box support for most natural languages and use cases. If you choose to use the standard analyzer as-is, no further configuration is needed.”

In basic cases, standard analysis can be enough. To illustrate how it works, we can run the following command to see the tokens generated for any text we provide:

```
POST _analyze
{
  "analyzer": "standard",
  "text": "Regular Expression Denial of Service (ReDoS)"
}
```

And the result will look like:

```
{
  "tokens" : [
    {
      "token" : "regular",
      "start_offset" : 0,
      "end_offset" : 7,
      "type" : "<ALPHANUM>",
      "position" : 0
    },
    {
      "token" : "expression",
      "start_offset" : 8,
      "end_offset" : 18,
      "type" : "<ALPHANUM>",
      "position" : 1
    },
    {
      "token" : "denial",
      "start_offset" : 19,
      "end_offset" : 25,
      "type" : "<ALPHANUM>",
      "position" : 2
    },
    {
      "token" : "of",
      "start_offset" : 26,
      "end_offset" : 28,
      "type" : "<ALPHANUM>",
      "position" : 3
    },
    {
      "token" : "service",
      "start_offset" : 29,
      "end_offset" : 36,
      "type" : "<ALPHANUM>",
      "position" : 4
    },
    {
      "token" : "redos",
      "start_offset" : 38,
      "end_offset" : 43,
      "type" : "<ALPHANUM>",
      "position" : 5
    }
  ]
}
```

Here we can see exactly what the standard analyzer does: it breaks text into lowercase tokens and records metadata (character offsets, type, and position) for each one to store in the index.
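To make the output above less magical, here is a minimal Python sketch that approximates it for this one sentence. It is only a stand-in: the real standard analyzer uses Unicode text segmentation rules, while the hypothetical `standard_like_analyze` helper below simply splits on non-word characters and lowercases.

```python
import re

def standard_like_analyze(text):
    """Rough approximation of the standard analyzer (assumption: a plain
    regex split is close enough for simple English text)."""
    tokens = []
    for position, match in enumerate(re.finditer(r"\w+", text)):
        tokens.append({
            "token": match.group().lower(),   # lowercased term
            "start_offset": match.start(),    # where the term starts in the input
            "end_offset": match.end(),        # where it ends (exclusive)
            "position": position,             # ordinal position of the term
        })
    return tokens

tokens = standard_like_analyze("Regular Expression Denial of Service (ReDoS)")
for token in tokens:
    print(token)
```

For this input, the sketch yields the same six terms and offsets as the `_analyze` response, which makes it easier to see what ends up in the index.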

Searching our indexed text

Once a document is indexed, we can test full-text search with the standard analyzer. We will create a sample document with two fields: title and description. Elasticsearch will map the document dynamically and detect the text fields.

```
PUT text-search-index/_doc/1
{
  "title": "Regular Expression Denial of Service (ReDoS)",
  "description": "The Regular expression Denial of Service (ReDoS) is a type of Denial of Service attack. Regular expressions are incredibly powerful, but they aren't very intuitive and can ultimately end up making it easy for attackers to take your site down."
}
```

Now let’s search for “express” with the following query:

```
GET text-search-index/_search
{
  "query": {
    "match": {
      "title": {
        "query": "express"
      }
    }
  }
}
```

No documents will be returned, even though we have “expression” in the title. This result is expected (well, expected by me since I’ve run into this before!), as there is no “express” token created by the analyzer (as we can see from the generated tokens we retrieved above).
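The behavior comes down to whole-term lookup, which can be sketched in a few lines of Python. This is a simplified model, not how Elasticsearch is implemented: the hypothetical `analyze` and `matches` helpers stand in for the standard analyzer and a match query on a single document.

```python
import re

def analyze(text):
    # Simplified stand-in for the standard analyzer: split into words, lowercase
    return [word.lower() for word in re.findall(r"\w+", text)]

title = "Regular Expression Denial of Service (ReDoS)"
indexed_terms = set(analyze(title))  # the terms stored for the title field

def matches(query):
    # The query text is analyzed too, and each resulting term must equal
    # an indexed term exactly; prefixes do not count
    return any(term in indexed_terms for term in analyze(query))

print(matches("express"))     # False: "express" is not an indexed term
print(matches("expression"))  # True: exact term match
print(matches("EXPRESSION"))  # True: both sides are lowercased
```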

If we change the search query to “expression” the document will be returned:

```
GET text-search-index/_search
{
  "query": {
    "match": {
      "title": {
        "query": "expression"
      }
    }
  }
}
```

Now, there are other queries that can get around this token matching issue, like fuzzy and wildcard queries, but those are computationally expensive. So instead of throwing CPU cycles at the problem, we need to reevaluate our analyzer.

It is clear that the standard analyzer may not be enough if we want better and more flexible search results. To achieve what we want, we will configure a custom analyzer. Our requirements are:

Search with incomplete words (shorter queries)

Case-insensitive search

To achieve that, our next steps will be:

Create a custom analyzer

Configure a better tokenizer for the use case

Enable the new custom analyzer for chosen fields in the Elasticsearch index

Taking a deeper look at analyzers

By using the standard analyzer, we made life easy by bypassing the need to really understand what the analyzer was doing. Now that we’re making a custom analyzer, we’ll need to understand what happens during text analysis:

Character filters (optional) are applied to the raw text before tokenization to add, remove, or change characters.

A tokenizer breaks text into tokens or terms. This can be done in different ways, generating tokens by whitespace, by letters, etc.

Token filters (optional) perform additional changes on tokens, like converting to lowercase, removing specific tokens, and more.

An analyzer is a combination of a tokenizer and filters. It performs text analysis and makes text ready for search. Every analyzer must have exactly one tokenizer, but it can have zero or more character filters and zero or more token filters. One thing that never changes is the order of operations. It always goes: character filter > tokenizer > token filter.
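The fixed order of operations can be sketched as plain function composition. The snippet below is a toy model with hypothetical helper names (`strip_html`, `whitespace_tokenizer`, `lowercase`, `make_analyzer`); they loosely mirror the kind of components Elasticsearch ships, but they are not its implementation.

```python
import re

def strip_html(text):
    # Character filter: works on the raw string before tokenization
    return re.sub(r"<[^>]+>", "", text)

def whitespace_tokenizer(text):
    # Tokenizer: turns one string into a list of tokens
    return text.split()

def lowercase(tokens):
    # Token filter: works on the token list after tokenization
    return [token.lower() for token in tokens]

def make_analyzer(tokenizer, char_filters=(), token_filters=()):
    """Build an analyzer: any number of filters, exactly one tokenizer."""
    def analyze(text):
        for char_filter in char_filters:      # 1. character filters
            text = char_filter(text)
        tokens = tokenizer(text)              # 2. tokenizer
        for token_filter in token_filters:    # 3. token filters
            tokens = token_filter(tokens)
        return tokens
    return analyze

analyzer = make_analyzer(whitespace_tokenizer, [strip_html], [lowercase])
result = analyzer("<b>Denial</b> of Service")
print(result)  # ['denial', 'of', 'service']
```

Swapping the tokenizer or adding a filter changes the output without touching the pipeline itself, which is the same idea behind configuring a custom analyzer.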

Creating our first custom analyzer

As we saw earlier, by default all text fields use the standard analyzer. Elasticsearch provides a wide range of built-in analyzers, which can be used in any index without further configuration, and you can also create your own. We’re going to need to create our own for this use case.

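Analyzers can be set up on different levels: index, field, or query. We will create a custom analyzer and specify it for a field. Note that analyzer configurations are stored in the index settings, and that the request below creates the index, so if text-search-index still exists from the earlier example, delete it first with DELETE text-search-index. This is how a custom analyzer can be configured: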

```
PUT text-search-index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "type": "custom",
          "tokenizer": "standard"
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "title": {
        "type": "text",
        "analyzer": "my_analyzer"
      },
      "text": {
        "type": "text"
      }
    }
  }
}
```

Here’s what we did:

A new custom analyzer named my_analyzer is specified for the index

my_analyzer uses the standard tokenizer and no token filters yet

my_analyzer is enabled as the analyzer for the title property in the index mapping

This example is fairly simple: it splits text the same way the standard analyzer does, but because no lowercase filter is configured yet, tokens keep their original case. Now we can tweak it to make case-insensitive search with incomplete words possible.

Choose and configure a better tokenizer

In our previous example, we used the standard tokenizer. Now it is time to replace it with the edge_ngram tokenizer, which splits text into words and then emits the leading prefixes of each word (edge n-grams) within a configured length range. And if you’re curious why I’m using edge_ngram instead of a stemmer, you’ll need to keep reading!

```
...
"analysis": {
  "analyzer": {
    "my_analyzer": {
      "type": "custom",
      "tokenizer": "my_tokenizer"
    }
  },
  "tokenizer": {
    "my_tokenizer": {
      "type": "edge_ngram",
      "min_gram": 3,
      "max_gram": 8,
      "token_chars": ["letter", "digit"]
    }
  }
}
...
```

Here’s what we did:

my_tokenizer uses the edge_ngram tokenizer

It will create tokens from 3 to 8 symbols in length

Tokens will include letters and digits only
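To get a feel for what this tokenizer produces, here is a small Python sketch of edge n-gram generation with the same settings (minimum length 3, maximum length 8, letters and digits only). The `edge_ngram_tokenize` function is a hypothetical illustration of the idea, not Elasticsearch's code, so treat its output as an approximation.

```python
import re

def edge_ngram_tokenize(text, min_gram=3, max_gram=8):
    """Emit the leading prefixes of every word, from min_gram to max_gram characters."""
    tokens = []
    # [^\W_]+ matches runs of letters and digits; anything else splits words
    for word in re.findall(r"[^\W_]+", text):
        longest = min(max_gram, len(word))
        for length in range(min_gram, longest + 1):
            tokens.append(word[:length])
    return tokens

print(edge_ngram_tokenize("Expression"))
# ['Exp', 'Expr', 'Expre', 'Expres', 'Express', 'Expressi']

print(edge_ngram_tokenize("of (ReDoS)"))
# ['ReD', 'ReDo', 'ReDoS']
```

Two things stand out in this model: words shorter than the minimum length (like "of") produce no tokens at all, and the original case is preserved because no lowercase filter has been applied yet.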

Choose and configure a better token filter

The analyzer we created above now enables search using incomplete words of 3 to 8 characters in length. The last thing left is to make search case insensitive, which can be achieved by adding the lowercase token filter to my_analyzer:

```
...
"analysis": {
  "analyzer": {
    "my_analyzer": {
      "type": "custom",
      "tokenizer": "my_tokenizer",
      "filter": [
        "lowercase"
      ]
    }
  },
  "tokenizer": {
    "my_tokenizer": {
      "type": "edge_ngram",
      "min_gram": 3,
      "max_gram": 8,
      "token_chars": ["letter", "digit"]
    }
  }
}
...
```

Final result

After all the changes we made, we are now ready to create a new index with a custom analyzer. It’s worth noting that the analyzer setting cannot be updated on existing fields using the update mapping API, so the index has to be created anew (and any existing documents reindexed) rather than modified in place.

Here's what settings with the mappings for our basic test index will look like:

```
PUT text-search-index
{
  "settings": {
    "analysis": {
      "analyzer": {
        "my_analyzer": {
          "type": "custom",
          "tokenizer": "my_tokenizer",
          "filter": [
            "lowercase"
          ]
        }
      },
      "tokenizer": {
        "my_tokenizer": {
          "type": "edge_ngram",
          "min_gram": 3,
          "max_gram": 8,
          "token_chars": ["letter", "digit"]
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "title": {
        "type": "text",
        "analyzer": "my_analyzer"
      },
      "text": {
        "type": "text"
      }
    }
  }
}
```

Now we can add a document and run different queries to ensure that our search works as expected:

```
PUT text-search-index/_doc/1
{
  "title": "Regular Expression Denial of Service (ReDoS)",
  "description": "The Regular expression Denial of Service (ReDoS) is a type of Denial of Service attack. Regular expressions are incredibly powerful, but they aren't very intuitive and can ultimately end up making it easy for attackers to take your site down."
}
```

Search for titles that contain “express” or other incomplete, case-insensitive terms:

```
GET text-search-index/_search
{
  "query": {
    "match": {
      "title": {
        "query": "express"
      }
    }
  }
}
```

Try EXPRESS, exp, or expression as the query as well.

Note that we did not configure a custom analyzer for the text property of our index, and the description field of our document is mapped dynamically, so both still use the good old standard analyzer.
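As a sanity check on why these queries now match, the whole flow can be modeled in Python. This is a simplified sketch under the assumption that the same analyzer (edge n-grams of 3 to 8 characters plus lowercasing) runs on both the indexed title and the query text; `my_analyzer` and `matches` are hypothetical helpers, not Elasticsearch APIs.

```python
import re

def my_analyzer(text, min_gram=3, max_gram=8):
    """Toy model of my_analyzer: edge n-grams per word, then lowercase."""
    terms = []
    for word in re.findall(r"[^\W_]+", text):  # letters and digits only
        for length in range(min_gram, min(max_gram, len(word)) + 1):
            terms.append(word[:length].lower())
    return terms

indexed_terms = set(my_analyzer("Regular Expression Denial of Service (ReDoS)"))

def matches(query):
    # The query is analyzed with the same analyzer; any shared term is a hit
    return any(term in indexed_terms for term in my_analyzer(query))

for query in ["express", "EXPRESS", "exp", "expression", "ex", "expensive"]:
    print(query, matches(query))
```

In this model, "ex" finds nothing because it is shorter than the minimum length, while "expensive" does match because it shares the prefix "exp" with "expression". If that kind of broad matching is unwanted, Elasticsearch's search_analyzer mapping parameter lets a field analyze queries differently from indexed text.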

What’s next?

In this simple article, we configured a custom analyzer for a more flexible text search in Elasticsearch, and we covered some specific and basic settings and configurations. Elasticsearch provides a wide range of built-in analyzers, tokenizers, filters, normalizers, stop search settings, and much more.

By combining them, we can achieve great custom search results. If you’re new to Elasticsearch, I hope you found this helpful. Stay tuned because we have plans to share more about our search journey. Up next will be aggregations! (You can count on it...)
