Scoring security vulnerabilities 101: Introducing CVSS for CVEs

May 16, 2019

Just as software bugs are triaged for a severity level, security vulnerabilities need to be assessed for impact and risk, which aids in vulnerability management.

The Forum of Incident Response and Security Teams (FIRST) is an international organization of trusted computer security researchers and scientists tasked with creating best practices and tools for incident response teams, as well as standardizing security policies and methodologies.

One of FIRST’s initiatives is a Special Interest Group (SIG) that is responsible for developing and maintaining the Common Vulnerability Scoring System (CVSS) specification in an effort to help teams understand and prioritize the severity of a security vulnerability.

Scoring vulnerabilities

CVSS is recognized as a standard measurement system for industries, organizations, and governments that need accurate and consistent vulnerability impact scores.

The quantitative model of CVSS ensures repeatable and accurate measurement while enabling users to see the underlying vulnerability characteristics that were used to generate the scores.

CVSS is commonly used to prioritize vulnerability remediation activities and to calculate severity of vulnerabilities discovered on one’s systems.

Challenges with CVSS

Missing applicability context

Vulnerability scores don’t always account for the context in which an organization uses a vulnerable component. A Common Vulnerabilities and Exposures (CVE) system can factor in various variables when determining an organization’s score, but in any case, other factors might affect how a vulnerability is handled regardless of the score assigned to it in a CVE.

For example, a high severity vulnerability as classified by the CVSS that was found in a component used for testing purposes, such as a test harness, might end up receiving little to no attention from security teams, IT or R&D. One reason for this could be that this component is used internally as a tool and is not in any way exposed in a publicly facing interface or accessible by anyone.

Furthermore, vulnerability scores don’t extend their context to account for material consequences, such as when a vulnerability applies to medical devices, cars or utility grids. Each organization needs to triage and account for specific implications based on how prevalent the specific vulnerable components are in its products.

Incorrect scoring

A vulnerability score comprises over a dozen key characteristics, and without proper guidance, experience and supporting information, mistakes are easy to make. A recent academic study[1] found that participants accurately answered only 57% of the security questions on CVE vulnerability scoring presented to them. The study further elaborates on which information cues are crucial for better accuracy, as well as which would actually drive confusion and lower accuracy in scoring.

It is not unusual to find false positives in a CVE or inaccuracies in the scores assigned to any of the metric groups, which introduces a risk of losing trust in a CVE or of creating unwarranted panic for organizations.

CVSS has a score range of 0-10 that maps to severity levels ranging from low to high or critical; inaccurate evaluation of variables can result in a score that maps to an incorrect CVSS level. Another study[2] from Carnegie Mellon University reported similar findings regarding the accuracy of scoring. The report states: “More than half of survey respondents are not consistently within an accuracy range of four CVSS points”, where 4 points alone moves the needle from a high or critical severity to lower levels.
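To make the score-to-severity mapping concrete, here is a minimal sketch of the qualitative severity rating scale from the CVSS v3.x specification (None, Low, Medium, High, Critical). The function name `severity_rating` is our own, chosen for illustration only.

```python
def severity_rating(score: float) -> str:
    """Map a CVSS v3.x score (0.0-10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 - 3.9
    if score < 7.0:
        return "Medium"    # 4.0 - 6.9
    if score < 9.0:
        return "High"      # 7.0 - 8.9
    return "Critical"      # 9.0 - 10.0


# A four-point scoring error is enough to move a rating across two levels.
print(severity_rating(9.1))  # Critical
print(severity_rating(5.1))  # Medium
```

The example shows why a four-point disagreement matters: the same vulnerability can land in Critical for one assessor and Medium for another.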

[1] Allodi, L., Banescu, S., Femmer, H., & Beckers, K. (2018). Identifying Relevant Information Cues for Vulnerability Assessment Using CVSS. 119-126. 10.1145/3176258.3176340.

[2] Spring, J. M., Hatleback, E., Householder, A., Manion, A., & Shick, D. (2018). Towards Improving CVSS. Carnegie Mellon University, December 2018.

The Snyk method

At Snyk we use CVSS v3.1 for assessing and communicating security vulnerability characteristics and their impact.

Snyk’s dedicated Security Research team takes part in uncovering new vulnerabilities across ecosystems. In addition, they work to triage CVE scores to confirm that they correctly reflect severities in order to balance out the aforementioned scoring inaccuracies made by other authorities that issue CVEs.

Snyk’s vulnerability database offers supporting metadata beyond the CVE details for each vulnerability. The Security Research team curates each vulnerability with information such as an overview about the vulnerable component, details about the type of vulnerability which are enriched with examples and reference links to commits, fixes, or other related matter on the vulnerability.

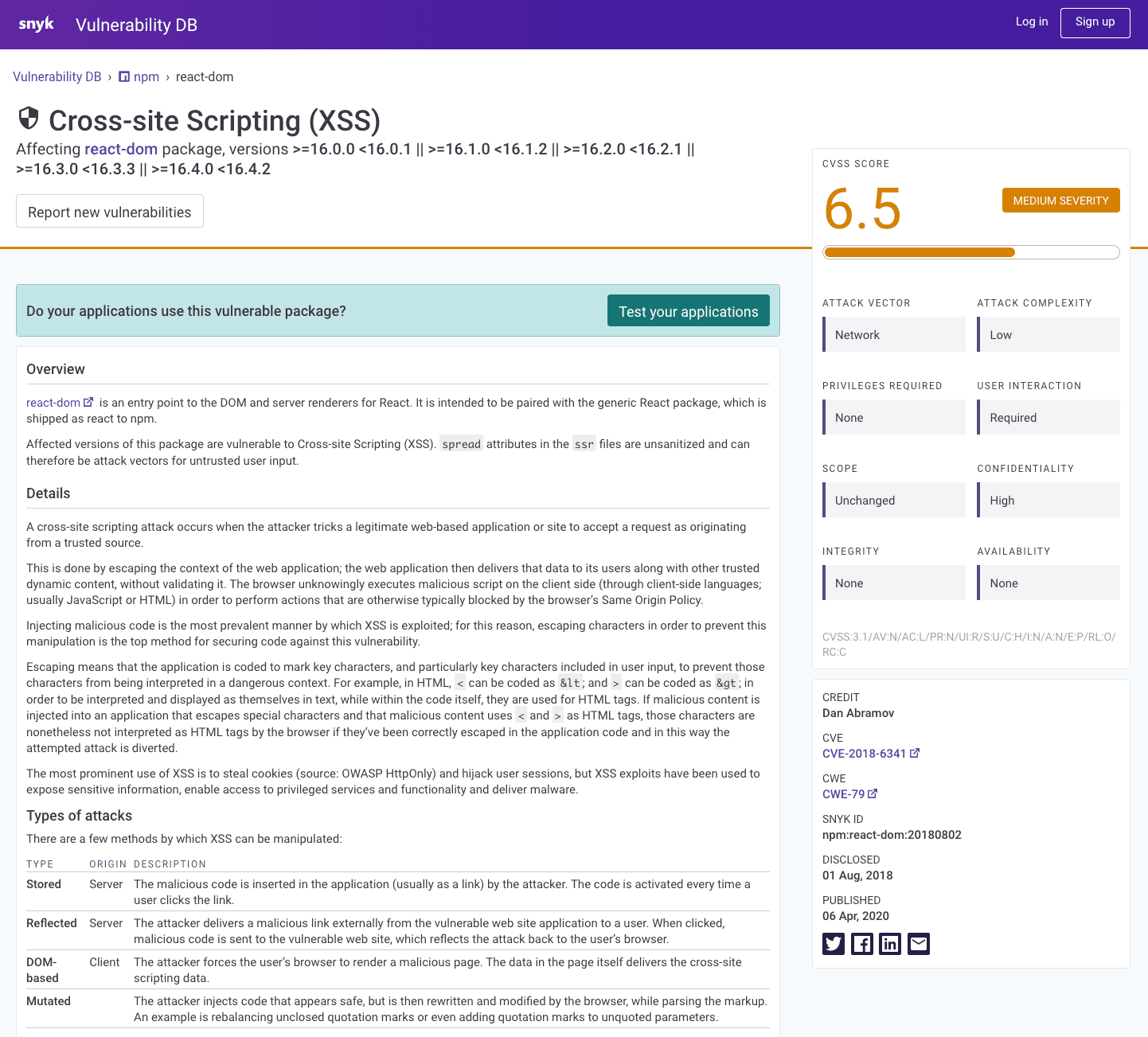

The image above shows a cross-site scripting (XSS) security vulnerability that was found in the frontend React framework, affecting different versions in the 16.x range of the React DOM module.

The image further shows the base metric for the CVSS v3.1 score with a detailed breakdown of the metrics.

How CVSS works

There are three versions in CVSS’s history, starting from its initial release in 2004 through to the widespread adoption of CVSS v2.0, and finally to the current working specification of CVSS v3.1. The remainder of this article focuses on CVSS v3.1.

The specification provides a framework that standardizes the way vulnerabilities are scored, categorized to reflect individual areas of concern.

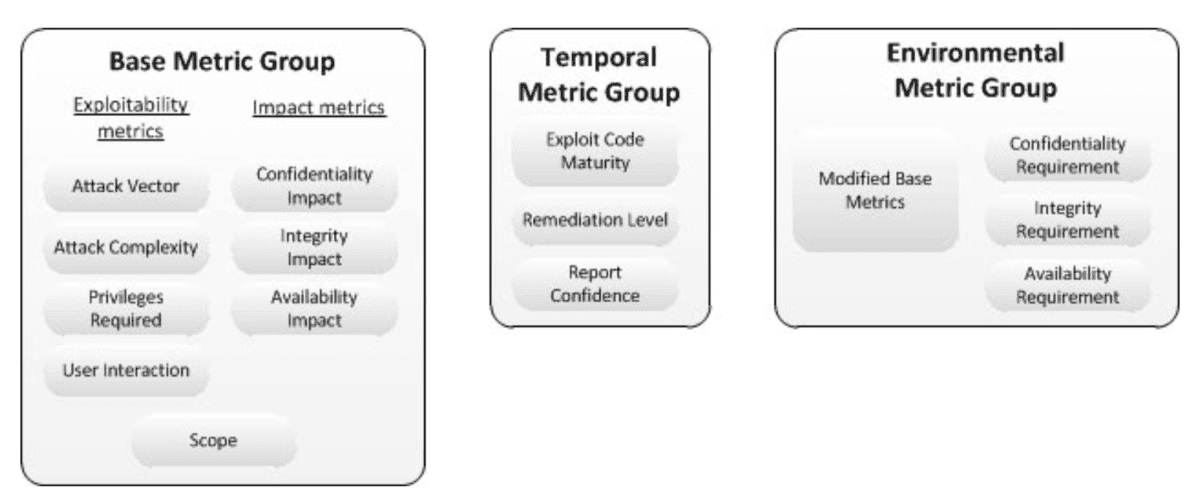

The metrics for a CVSS score are divided into three groups:

Base—impact assessment and exploitability metrics that are not dependent on the timing of a vulnerability or a user’s environment, such as the ease with which the vulnerability can be exploited. For example, if exploiting a vulnerability completely denies access to the vulnerable component, it scores a high availability impact.

Temporal—metrics that account for circumstances that change over a vulnerability’s lifetime and affect its score. For example, the score is higher when there’s a known exploit for a vulnerability than when there is none, and it decreases when a patch or fix becomes available.

Environmental—metrics that enable customizing the score to the impact for a user’s or organization’s specific environment. For example, if the organization greatly values the availability related to a vulnerable component, it may set a high level of availability requirement and increase the overall CVSS score.

The base metrics group forms the basis of a CVSS vector. If temporal or environmental metrics are available, they are factored into the overall CVSS score.

CVSS 3.0 Metric Groups by FIRST / CC-BY-SA-3.0
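The three metric groups described above show up directly in a CVSS vector string, where each metric appears as an abbreviated `name:value` pair. The following is a minimal sketch that splits a v3.x vector into those groups. The metric abbreviations come from the CVSS v3.1 specification; the function name `parse_vector` and the returned dictionary layout are assumptions made for illustration, and the sketch does not validate metric values.

```python
BASE = {"AV", "AC", "PR", "UI", "S", "C", "I", "A"}
TEMPORAL = {"E", "RL", "RC"}
ENVIRONMENTAL = {"CR", "IR", "AR", "MAV", "MAC", "MPR", "MUI", "MS", "MC", "MI", "MA"}


def parse_vector(vector: str) -> dict:
    """Split a CVSS v3.x vector string into base, temporal and environmental metrics."""
    prefix, *pairs = vector.split("/")
    if not prefix.startswith("CVSS:3."):
        raise ValueError(f"not a CVSS v3.x vector: {vector!r}")

    groups = {"base": {}, "temporal": {}, "environmental": {}}
    for pair in pairs:
        name, _, value = pair.partition(":")
        if name in BASE:
            groups["base"][name] = value
        elif name in TEMPORAL:
            groups["temporal"][name] = value
        elif name in ENVIRONMENTAL:
            groups["environmental"][name] = value
        else:
            raise ValueError(f"unknown metric: {name!r}")

    # The base group is mandatory; the other two groups are optional.
    missing = BASE - set(groups["base"])
    if missing:
        raise ValueError(f"missing base metrics: {sorted(missing)}")
    return groups


# Illustrative vector only, not taken from a specific CVE.
parsed = parse_vector("CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N/E:P/RL:O")
print(parsed["base"]["AV"])   # N
print(parsed["temporal"])     # {'E': 'P', 'RL': 'O'}
```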

Base scores

CVSS base metrics are composed of exploitability and impact metrics sub-groups, and assess their applicability to a software component, which may impact other components (software, hardware, or networking devices).

Exploitability represents the effort and means by which the security vulnerability can be exploited, and is composed of the following:

Attack Vector (AV)—represents the medium through which exploitation can take place, which is one of four types: physical, local, adjacent and network. The more remotely accessible the medium is, the higher the score.

For example, a cross-site scripting (XSS) vulnerability in jQuery that could be employed in a public-facing website would be scored as a network attack vector.

Attack Complexity (AC)—represents either a low or high score, depending on whether or not the attack requires a special circumstance or configuration to exist as a pre-condition in order to execute successfully.

For example, a path traversal, which is relatively easy to execute and which was found in the Next.js package in both 2017 and 2018, is a low-rated attack complexity. A high-rated attack complexity is a timing attack found in Apache Tomcat.

Privileges Required (PR)—represents one of these score levels: none, low or high. These score levels correlate with the level of privileges an attacker must gain in order to exploit the vulnerability.

For example, an arbitrary code injection in WordPress MU allowed authenticated users to upload and execute malicious files remotely. This classifies as a low score level, because any authenticated user would be able to execute the attack, regardless of their roles and permissions in a WordPress application.

User Interaction (UI)—represents a high score when no action is required by a user in order for an attack to be executed successfully, and a lower score when any sort of user interaction is required.

For example, in cases of malicious modules that compromise the environment when installed, user interaction is required to begin with, in the form of installing the malicious package. In the npm ecosystem, we’ve seen dozens of malicious packages, one of which was an attempt to steal environment variables through a typosquatting attack in a package called crossenv. These are examples of lower scores because user interaction is necessary.
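The four exploitability metrics above combine into a single exploitability sub-score. Here is a minimal sketch, using the numeric weights and the 8.22 constant published in the CVSS v3.1 specification. The function name `exploitability` and its parameter names are our own. Note that the Privileges Required weight depends on scope, a metric covered later in this section.

```python
# Metric weights as published in the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}   # network, adjacent, local, physical
AC = {"L": 0.77, "H": 0.44}                        # low, high
UI = {"N": 0.85, "R": 0.62}                        # none, required
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}   # used when scope is unchanged
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}      # used when scope is changed


def exploitability(av: str, ac: str, pr: str, ui: str, scope_changed: bool = False) -> float:
    """Exploitability sub-score: 8.22 x AV x AC x PR x UI (unrounded)."""
    pr_weight = (PR_CHANGED if scope_changed else PR_UNCHANGED)[pr]
    return 8.22 * AV[av] * AC[ac] * pr_weight * UI[ui]


# Holding everything else constant, a more accessible attack vector scores higher.
for av in ("N", "A", "L", "P"):
    print(av, round(exploitability(av, "L", "N", "N"), 1))
```

With low complexity, no privileges and no user interaction, the sub-score falls from about 3.9 for a network attack vector to about 0.9 for a physical one.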

The Impact metrics sub-group represents the impact to confidentiality, integrity, and availability of a vulnerable component when successfully exploited, and is composed of the following:

Confidentiality (C)—measures the degree of the loss of confidentiality to the impacted component, such as disclosing information to unauthorized users.

For example, a high loss of confidentiality takes place when an arbitrary script injection vulnerability is exploited in Angular applications (AngularJS to be precise). A user input that originates as a DOM event and isn’t well sanitized can result in code execution.

Integrity (I)—measures the degree of the loss of integrity to the impacted component, such as the ability to modify data when the vulnerable component is exploited successfully.

For example, a high loss of integrity occurred in the open source framework Apache Struts 2, when improper serialization resulted in arbitrary command execution. This vulnerability was at the center of 2017 headlines due to its part in the Equifax breach, which you’re welcome to read more about in our blog.

Availability (A)—measures the loss of availability of resources and functionality of the impacted component, such as whether an attack related to the vulnerable component could lead to a denial of service for users.

For example, a high level of availability disruption occurs in a version of lodash which is vulnerable to regular expression denial of service. When lodash is used as a frontend library, an exploitation of this vulnerability affects its end-users’ browsers, but the issue worsens when lodash is used in a Node.js server-side application, where the impact renders the event-loop unresponsive and affects all incoming requests for an API service.

It’s important to note that when the scope has changed, the impact metrics represent the repercussions of the highest degree, whether that is the vulnerable component itself or the impacted component.

Lastly, scope is a new metric that CVSS v3.0 introduced. Scope reflects whether a security vulnerability affects resources beyond the vulnerable component itself. The scope metric can be either unchanged or changed; it is changed when successful exploitation of a vulnerable component affects resources governed by another authorization scope.

For example, cross-site scripting (XSS) vulnerabilities in phpMyAdmin would constitute a scope change because the vulnerable component is phpMyAdmin, which runs as a server-side PHP application; the impact scope however is the user’s browser.

Security vulnerabilities such as remote command execution, where the vulnerable component runs with very high privileges, are a good reference for how scope can change beyond the authorization of just that one component and potentially affect other components.
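Putting the exploitability, impact and scope metrics together, the following is a minimal sketch of the base score equations from the CVSS v3.1 specification. The weights, constants and rounding rule are taken from the specification; the function names and the dictionary-based input are assumptions made for illustration, and the vectors used are illustrative rather than the scores of specific CVEs.

```python
import math

# Metric weights as published in the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in the v3.1 specification."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def base_score(m: dict) -> float:
    """Compute the base score from a dict of base metrics, e.g. {"AV": "N", ...}."""
    changed = m["S"] == "C"
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]

    # Impact sub-score: combined loss of confidentiality, integrity, availability.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08  # a scope change amplifies the combined score
    return roundup(min(total, 10))


def metrics(vector: str) -> dict:
    """Hypothetical helper: turn 'CVSS:3.1/AV:N/...' into {'AV': 'N', ...}."""
    return dict(pair.split(":") for pair in vector.split("/")[1:])


print(base_score(metrics("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")))  # 9.8
print(base_score(metrics("CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N")))  # 6.1
```

The second vector has the shape of a typical reflected XSS: network attack vector, user interaction required, scope changed, and low confidentiality and integrity impact.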

Temporal Scores

The purpose of the temporal score is to provide context with regard to the timing of a CVE’s severity. For example, known public exploits for a security vulnerability raise the criticality and severity of the CVE, because the resources for carrying out such attacks are readily accessible.

The temporal metrics are factored into the overall CVSS score only when they are assigned values; a metric that is left undefined is treated as its highest-risk value. Therefore, temporal values assigned to a vulnerability either keep the overall CVSS score as it is or lower it, but cannot raise it.

The temporal score metrics are:

Exploit Code Maturity (E)—measures how likely the vulnerability is to be exploited, depending on the maturity of the publicly available exploit code. Possible values are 'U' for no exploit code available, 'P' for known proof-of-concept code, 'F' for functional exploit code available, or 'H' for a high likelihood of exploitation, made possible either by automated attack tools such as the widely known Metasploit framework, or in cases where no special exploit code is required to execute a successful attack.

Remediation Level (RL)—measures the availability of a mitigation or complete remediation for this vulnerability. For example, if a vulnerability has been properly disclosed and handled by a vendor, then by the time the CVE is made public, the vulnerable component would have an upgrade path. Possible values are 'O' for an official fix made available by the vendor through a patch or upgrade, 'T' for a temporary fix such as hotfix releases or workarounds to mitigate the vulnerability, 'W' for a workaround that is known but unofficial and not supported by the vendor, and lastly 'U' when no publicly known mitigation or remediation is available.

Report Confidence (RC)—measures the degree of confidence in the vulnerability report. Sometimes, disclosed security reports are not thorough in providing background or supporting material, such as a proof-of-concept or reproduction steps, which makes it more difficult to triage the credibility of the report and its impact. Possible values are 'C' for a high level of certainty in reproducing the report, 'R' for reasonable input that has been provided to confirm the report, and 'U' for unknown details around the root cause of the vulnerability.

All three temporal metrics can also be assigned 'X' to indicate that no value is defined, in which case the metric does not affect the score.
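To illustrate how the three temporal metrics adjust a base score, here is a minimal sketch of the temporal equation from the CVSS v3.1 specification: the base score multiplied by the weight of each temporal metric, then rounded up to one decimal place. The weights are those published in the specification; the function names are our own. Because every weight is at most 1.0, the result can never exceed the base score.

```python
import math

# Temporal metric weights as published in the CVSS v3.1 specification.
E = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}   # Exploit Code Maturity
RL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}  # Remediation Level
RC = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}             # Report Confidence


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in the v3.1 specification."""
    int_input = round(value * 100000)
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Temporal score: Roundup(base x E x RL x RC)."""
    return roundup(base * E[e] * RL[rl] * RC[rc])


# With every metric left as 'X' (not defined), the base score is unchanged.
print(temporal_score(9.8))                           # 9.8
# Proof-of-concept exploit, official fix available, confirmed report.
print(temporal_score(9.8, e="P", rl="O", rc="C"))    # 8.8
```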
