Building Docker images in Kubernetes

Vitalis Ogbonna

May 3, 2022

Hosting a CI/CD platform on Kubernetes is becoming more common among engineers. This approach saves time through automation, ensures consistent deployments, and makes it easier to monitor and manage microservices. However, building container images in Kubernetes clusters involves some technical hurdles that require workarounds.

In this article, we’ll explore some ways to build Docker images in a Kubernetes cluster for CI/CD processes. We’ll also discuss the advantages and disadvantages of using these methods.

Tools for building Docker images in Kubernetes

If you’ve ever built a container image, you’ve probably run a command like docker build. Then, when it was time to automate that process, you may have scripted your CI/CD tools to use docker build as well. This works for simple CI servers, but you may eventually want to deploy a Kubernetes-based CI platform.

The challenge is that pods in a Kubernetes cluster don't have direct access to a Docker daemon, and exposing one to them carries security risks. So we must use alternatives that don't compromise our application's integrity or our infrastructure.

There are several tools for this:

Buildah specializes in building Open Container Initiative (OCI) images. Its commands mirror the instructions found in a Dockerfile, and it can build images with or without a Dockerfile, without requiring root privileges.

img is a standalone, daemon-less, unprivileged Dockerfile- and OCI-compatible container image builder. It’s cache efficient and can execute multiple build stages concurrently, as it internally uses BuildKit's DAG solver as its image builder.

kaniko builds container images from a Dockerfile, inside a container or Kubernetes cluster. kaniko doesn't depend on a Docker daemon, and executes each Dockerfile command completely in userspace.

Docker in Docker is a recipe for running Docker within Docker. It builds Docker images in Kubernetes clusters by mounting the /var/run/docker.sock file as a volume in a Docker container.

Sysbox Enterprise Edition (Sysbox-EE) by Nestybox lets rootless containers run workloads such as Docker, systemd, and Kubernetes, the way virtual machines can.

BuildKit CLI for kubectl builds single and multi-architecture OCI and Docker images within Kubernetes clusters. It provides a kubectl build command that takes the place of docker build for creating images in Kubernetes clusters.

Jib for Java containers builds container images without using a Dockerfile or requiring a Docker installation. Jib plugins are available for Maven and Gradle. Jib is also available as a Java library.

ko is a fast and simple container image builder for Go applications. It builds images by effectively executing go build on your local machine, so it doesn't require installing Docker.

Let’s focus on two popular approaches to building Docker images in a Kubernetes cluster: Docker in Docker and kaniko.

Docker in Docker

The Docker in Docker (DIND) method is commonly used in CI/CD pipelines where images are built and pushed after a successful code build. This method is also used for integrating Jenkins into deployment pipelines (during tests in sandbox environments, for example).

The DIND approach may seem convenient and easy. However, Jérôme Petazzoni, a former Docker employee and DIND contributor, explains that Docker created this approach to help with the development of Docker itself, and points to security concerns as reasons not to use it in production environments.

The DIND approach runs the build container in privileged mode. Because the Docker daemon runs as root, anyone who can access the Docker socket effectively has root access to the host: they can run any software, create new users, and reach everything connected to the container. This makes such containers an attractive entry point for attacks that could spread throughout the architecture.

Furthermore, since Kubernetes has officially removed dockershim, many nodes run containerd or CRI-O without any Docker daemon. Mounting the host's docker.sock into a pod likely won't work in the future unless we install Docker on every Kubernetes node. AWS offers a helpful tool to detect Docker socket use in clusters.
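To get a rough idea of whether workloads in your own cluster depend on the Docker socket, you can list each pod's hostPath volumes and filter for /var/run/docker.sock. This is a minimal sketch, assuming kubectl is already configured for the cluster; the function names are hypothetical, and it only catches hostPath volumes whose path contains that exact string (a pod that mounts all of /var/run would slip through), so it is no substitute for a dedicated scanner.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for spotting pods that mount the host's Docker socket.

# Print one line per pod: "namespace/name<TAB>hostPath paths" (paths may be empty).
pods_with_host_paths() {
  kubectl get pods --all-namespaces -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name}{"\t"}{.spec.volumes[*].hostPath.path}{"\n"}{end}'
}

# Read those lines on stdin and print only pods whose hostPath list mentions docker.sock.
filter_docker_sock() {
  awk -F'\t' 'index($2, "/var/run/docker.sock") { print $1 }'
}
```

Combine them as pods_with_host_paths | filter_docker_sock. Splitting the kubectl call from the filter keeps the filtering step easy to test against canned input.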

The DIND method also presents issues regarding storage driver compatibility. Additionally, managing image caches can be challenging because we need to pull the Docker images every time we initiate a build.

One way to resolve these issues is to use tools that don't need a Docker daemon to build images. One such tool is kaniko, Google's open source solution for building Docker images in a Kubernetes cluster.

kaniko

kaniko builds container images from a Dockerfile inside a container or Kubernetes cluster. It doesn't depend on a Docker daemon, and it executes each Dockerfile command completely in userspace. This allows you to build container images in environments that can't easily or securely run a Docker daemon, such as standard Kubernetes clusters.

We'll use free, publicly available tools to illustrate the kaniko workflow. To follow this tutorial, you'll need:

Docker Desktop installed on your PC with Kubernetes enabled

A valid Docker Hub account, so the kaniko pod can authenticate and push the Docker image

A GitHub account for kaniko to access the Dockerfile
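Before starting, it can help to confirm that the command-line tools used in this walkthrough are installed. A minimal sketch; require_tools is a hypothetical helper, not part of any of these tools:

```bash
# Hypothetical preflight helper: report every required CLI that is not on PATH
# and return non-zero if any are missing.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, require_tools git kubectl && kubectl config use-context docker-desktop stops early if either CLI is missing and otherwise points kubectl at the cluster Docker Desktop creates (its context is normally named docker-desktop).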

We’ll use this sample project on GitHub to illustrate how kaniko works. Clone it using git clone https://github.com/agavitalis/kaniko-kubernetes.git to follow along.

This sample project has two files and a README.md file:

```
kaniko-build-demo
├── dockerfile
├── pod.yml
└── README.md
```

dockerfile contains this code for the image build commands:

```dockerfile
FROM ubuntu
ENTRYPOINT ["/bin/bash", "-c", "echo Hello to Kaniko from Kubernetes"]
```

pod.yml contains this code for the kaniko configuration:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: kaniko-demo
spec:
  containers:
  - name: kaniko-demo
    image: gcr.io/kaniko-project/executor:latest
    args: ["--context=git://github.com/agavitalis/kaniko-kubernetes.git",
           "--destination=agavitalis/kaniko-build-demo:1.0.0",
           "--dockerfile=dockerfile"]
    volumeMounts:
    - name: kaniko-secret
      mountPath: /kaniko/.docker
  restartPolicy: Never
  volumes:
  - name: kaniko-secret
    secret:
      secretName: reg-credentials
      items:
      - key: .dockerconfigjson
        path: config.json
```

In this configuration, the pod runs the latest version of the kaniko executor image. The args specify the location of our Dockerfile and the image repository to push to, and the kaniko-secret volume mounts our registry credentials Secret, reg-credentials, into /kaniko/.docker as config.json, where kaniko reads them when pushing.

How kaniko works

kaniko works in a fairly simple way. The kaniko executor image gcr.io/kaniko-project/executor:latest runs the Dockerfile commands to build images. It reads the specified Dockerfile, extracts the base image defined in the FROM command (in this case, ubuntu) into the container's filesystem, executes the remaining commands, and then pushes the resulting image to a registry.

After extracting the base image from the FROM directive, kaniko runs every command in the Dockerfile individually, taking a snapshot of the filesystem in userspace after each one. Each snapshot becomes a new layer appended on top of the base image's layers.
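If you want to watch this extract, execute, and snapshot cycle before involving the cluster, one option is to run the same executor image locally through Docker Desktop with kaniko's --no-push flag, which builds the image without pushing it anywhere. This is a sketch under the assumption that you run it from the cloned repository; kaniko_local_build is a hypothetical name, and --context=dir:// points kaniko at a mounted local directory instead of the Git URL used in pod.yml.

```bash
# Hypothetical helper: build the sample image with the kaniko executor,
# using a local directory (default: current directory) as the build context
# and skipping the push step.
kaniko_local_build() {
  local context_dir="${1:-$PWD}"
  docker run --rm \
    -v "${context_dir}:/workspace" \
    gcr.io/kaniko-project/executor:latest \
    --context=dir:///workspace \
    --dockerfile=dockerfile \
    --no-push
}
```

Running kaniko_local_build from the repository root should print kaniko's build log, including a snapshot step after each Dockerfile command, without needing registry credentials.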

We set these configurations in the pod.yml file:

context: the build context, here the Git repository whose root directory contains the Dockerfile. For private Git repositories, use the GIT_USERNAME and GIT_PASSWORD (API token) environment variables to authenticate.

destination: the image kaniko pushes. Replace agavitalis in agavitalis/kaniko-build-demo:1.0.0 with your own Docker Hub username so kaniko pushes the image to your repository on Docker Hub.

dockerfile: the Dockerfile's path relative to the context.
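Because the destination is hard-coded in pod.yml, one way to point it at your own account without hand-editing is a small sed filter. This sketch assumes the destination still begins with agavitalis/ as in the sample repository; set_destination_user and pod.local.yml are hypothetical names.

```bash
# Hypothetical helper: rewrite the owner of the --destination repository in a
# pod manifest read from stdin, leaving the image name and tag unchanged.
set_destination_user() {
  local user="$1"
  sed "s#--destination=agavitalis/#--destination=${user}/#"
}
```

For example, set_destination_user <your-username> < pod.yml > pod.local.yml writes a copy you can apply instead of the original file.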

Now that we understand kaniko's operating principles, let's create a Kubernetes Secret. Then we'll use it to build and push an image.

Creating a Docker Hub Kubernetes Secret

We must create a Kubernetes Secret for kaniko to access Docker Hub. To do so, we need the following information:

docker-server: The Docker registry server to host your images. If you're using Docker Hub, this value should be https://index.docker.io/v1/.

docker-username: Your Docker registry username.

docker-password: Your Docker registry password.

docker-email: The email configured on your Docker registry.

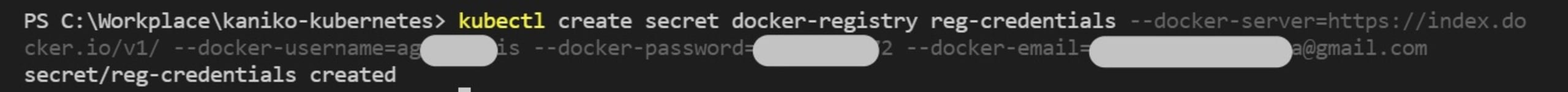
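For reference, kubectl create secret docker-registry stores these values as a .dockerconfigjson document: an auths map keyed by the registry server, whose entry holds the username, password, email, and an auth field containing the base64 encoding of username:password. The volume in pod.yml exposes that document to kaniko as /kaniko/.docker/config.json. The hypothetical helper below only illustrates how the auth field is derived; the tutorial doesn't require it.

```bash
# Hypothetical helper: compute the "auth" value used in a Docker config.json
# entry, i.e. base64("username:password") with no trailing newline.
registry_auth_field() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}
```

For example, registry_auth_field user pass prints dXNlcjpwYXNz.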

Run the following command, substituting your own value for each placeholder:

```bash
$ kubectl create secret docker-registry reg-credentials \
    --docker-server=<docker-server> \
    --docker-username=<username> \
    --docker-password=<password> \
    --docker-email=<email>
```

This command creates a Secret named reg-credentials. The pod.yml file mounts it into the kaniko pod so kaniko can authenticate when pushing the built image to a Docker registry. You should receive a confirmation message like this one:

Deploying kaniko pod to build a Docker image

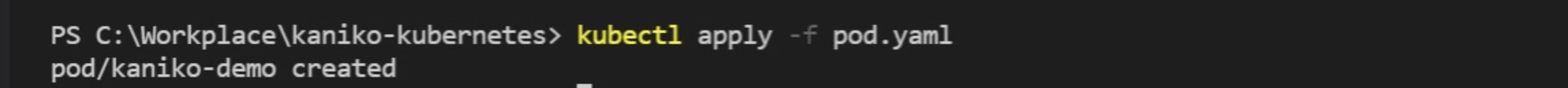

Now, let's kickstart the build by spawning the pod in our Kubernetes cluster. Deploy the pod using the command:

```bash
$ kubectl apply -f pod.yml
```

This starts the image build process, after which kaniko pushes the image to the Docker registry specified in --destination.

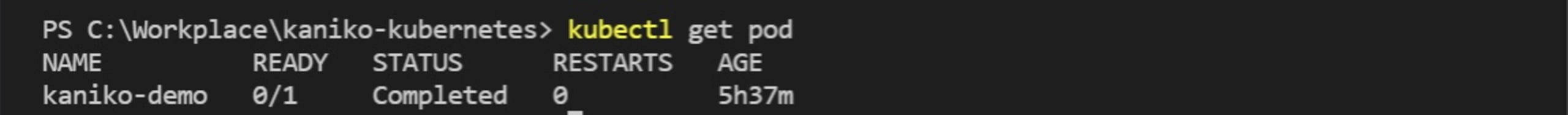

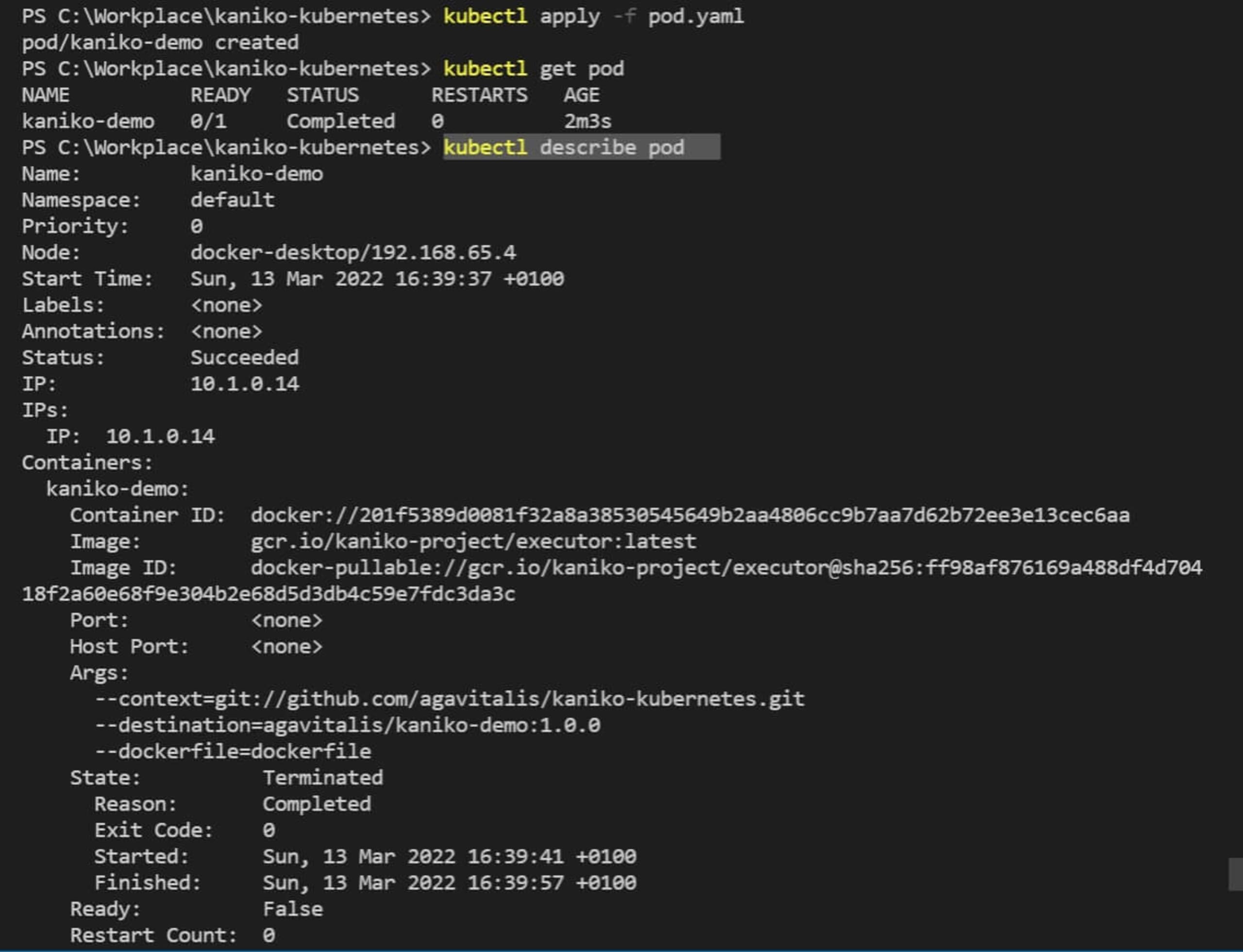

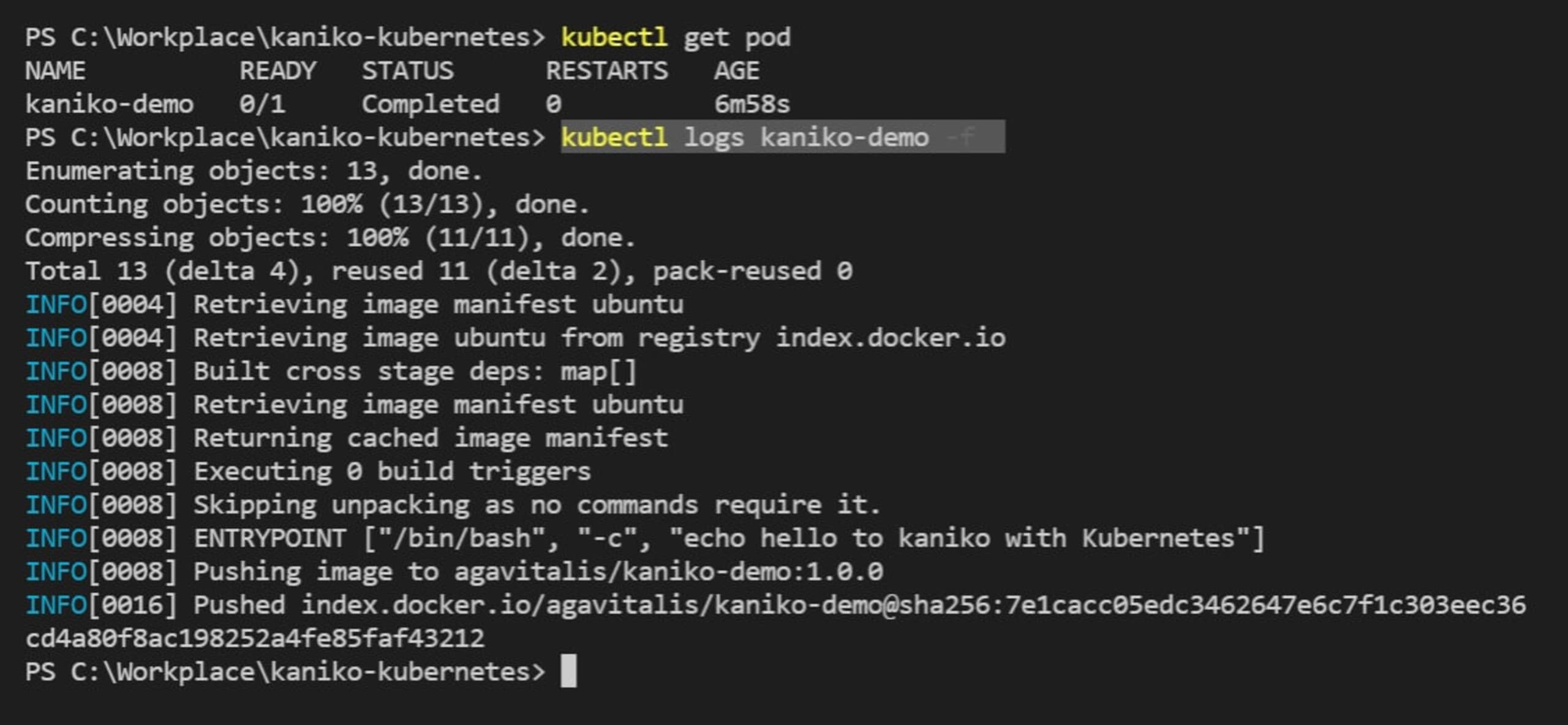
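Because pod.yml sets restartPolicy: Never, the pod's phase should end up as Succeeded when the kaniko container exits cleanly and Failed when it exits with an error. A minimal polling sketch built on that assumption (wait_for_build, the 5-second interval, and the 300-second default timeout are hypothetical choices):

```bash
# Hypothetical helper: poll a pod's phase until the build finishes, fails,
# or the timeout (in seconds) runs out.
wait_for_build() {
  local pod="${1:-kaniko-demo}" timeout="${2:-300}" elapsed=0 phase
  while [ "$elapsed" -lt "$timeout" ]; do
    phase="$(kubectl get pod "$pod" -o jsonpath='{.status.phase}')"
    case "$phase" in
      Succeeded) echo "build finished"; return 0 ;;
      Failed) echo "build failed; check: kubectl logs $pod" >&2; return 1 ;;
    esac
    sleep 5
    elapsed=$((elapsed + 5))
  done
  echo "timed out waiting for $pod" >&2
  return 1
}
```

Running wait_for_build kaniko-demo right after kubectl apply blocks until the build completes, which is handy in scripts; for interactive use, following the logs works just as well.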

You can list the available pods in your Kubernetes cluster using the command:

```bash
$ kubectl get pod
```

This displays the available pods, their status, and their age. (If kaniko uses the wrong credentials or tries to push to a repository you can't write to, the build fails and the pod's status shows an error.)
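If you suspect the credentials, one way to check what the Secret actually contains is to decode its .dockerconfigjson key. Note that this prints your password in plain text, so only run it on a machine you trust. show_registry_config is a hypothetical name; the backslash in the jsonpath expression escapes the leading dot in the key name.

```bash
# Hypothetical helper: print the decoded registry config stored in a
# docker-registry Secret (defaults to the reg-credentials Secret used here).
show_registry_config() {
  local secret="${1:-reg-credentials}"
  kubectl get secret "$secret" -o jsonpath='{.data.\.dockerconfigjson}' | base64 --decode
}
```

The output should contain an auths entry for the server you passed as --docker-server, such as https://index.docker.io/v1/ for Docker Hub.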

If you need to rerun the build, for example after fixing the credentials, delete the existing pod with kubectl delete pod kaniko-demo and then apply pod.yml again.

To get comprehensive details of the pod you just deployed, use the command:

```bash
$ kubectl describe pod kaniko-demo
```

You can also view the build log using the command:

```bash
$ kubectl logs kaniko-demo -f
```

Check out the kubectl cheat sheet for other commands you can use to explore, troubleshoot, and get more information about your cluster.

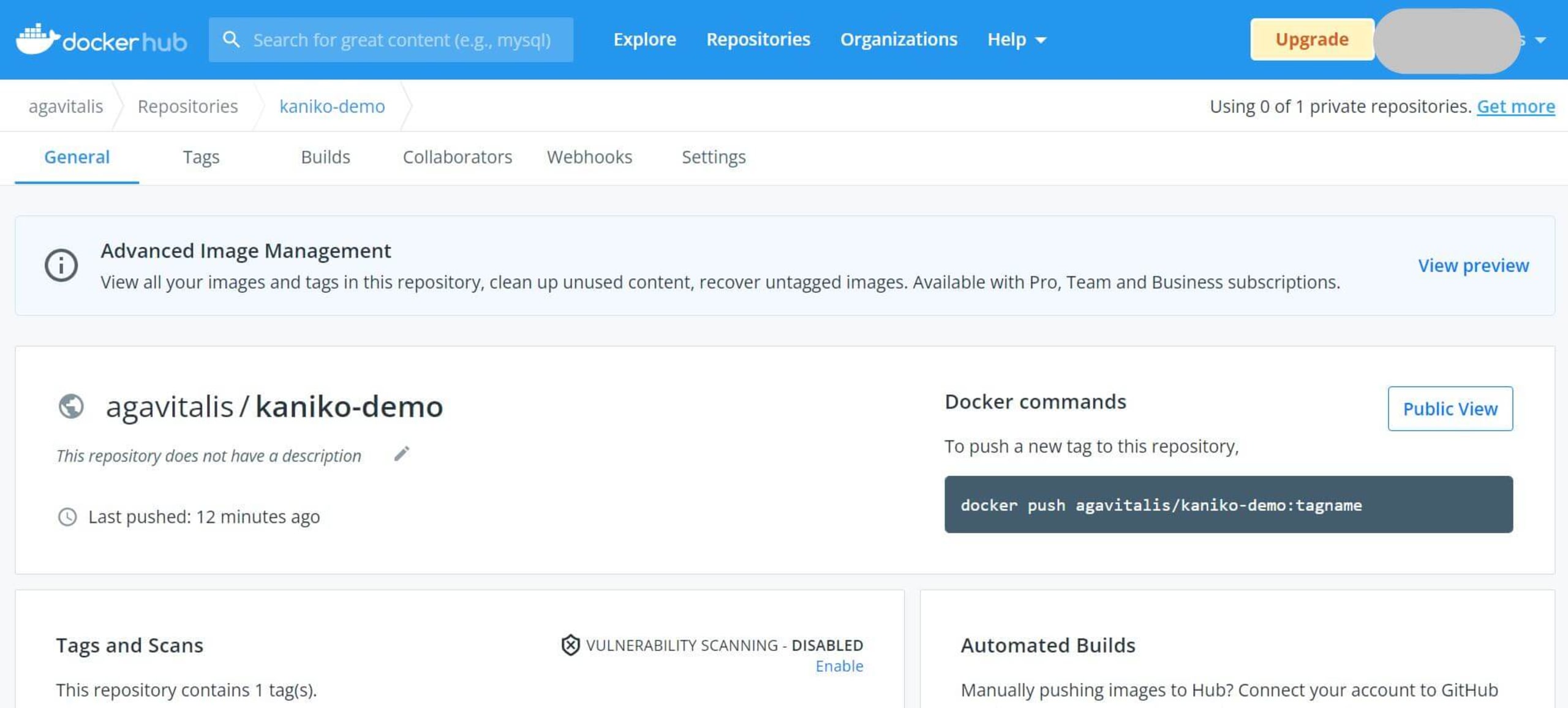

Next, go to Docker Hub to confirm that everything worked and that your image was pushed to your repository.

We've successfully built our Docker image in a Kubernetes cluster using kaniko and pushed it to Docker Hub!

Snyk for container security

kaniko provides a secure way to build Docker images in Kubernetes clusters. It grabs the Dockerfile from the defined build context, builds the image, and pushes the result to an image registry, all without a Docker daemon. When caching is enabled (with the --cache=true flag), kaniko can also reuse previously built layers, which makes repeat builds faster.

You may still be at risk for Kubernetes security vulnerabilities even if you don’t use an insecure method to make your Docker images in Kubernetes. Snyk Container provides a reliable container security solution for finding and fixing vulnerabilities in cloud-native applications. Snyk integrates seamlessly with Docker, GitHub, Kubernetes, Jenkins, and other tools to ensure your application and infrastructure are safe.
