3 tips for rebuilding a Docker image faster (and saving CI seconds!)

December 28, 2023

Docker has become an invaluable tool that simplifies the process of building, shipping, and running applications. Docker allows developers to package an application with all its dependencies into a standardized unit known as a container image.

# Example of a Dockerfile

FROM ubuntu:18.04

COPY . /app

RUN make /app

CMD python /app/app.py

This Dockerfile is a simple document that contains all the commands needed to build a container image. This image will, in turn, be the basis of a Docker container when run.

These containers are lightweight, standalone, and executable packages that include everything needed to run a piece of software, including the code, system tools, libraries, and settings.

The beauty of containers lies in their portability. They ensure that the application runs seamlessly, regardless of the environment in which it is deployed. This has significantly streamlined the software development process and has become a cornerstone of the DevOps approach, enabling continuous integration and deployment.

The need for faster Docker image building

While container images are fundamental to running applications in various environments, building them can sometimes be time-consuming. This is particularly true for larger applications with many dependencies.

# Building a container image can take time

docker build -t my-application .

In the above example, Docker reads the Dockerfile in the current directory and builds an image called my-application. Depending on the complexity and size of the application, this can take a significant amount of time.

The art of Docker image building

Building Docker images is a fundamental part of working with containers. Docker images are essentially a "snapshot" of a Docker container at a certain point in time, and they provide the basis for creating new Docker containers.

Building a Docker image involves creating a Dockerfile, which is a text file that contains all the commands needed to build an image. A Dockerfile is like a recipe — it tells Docker what to do when you want to create an image.

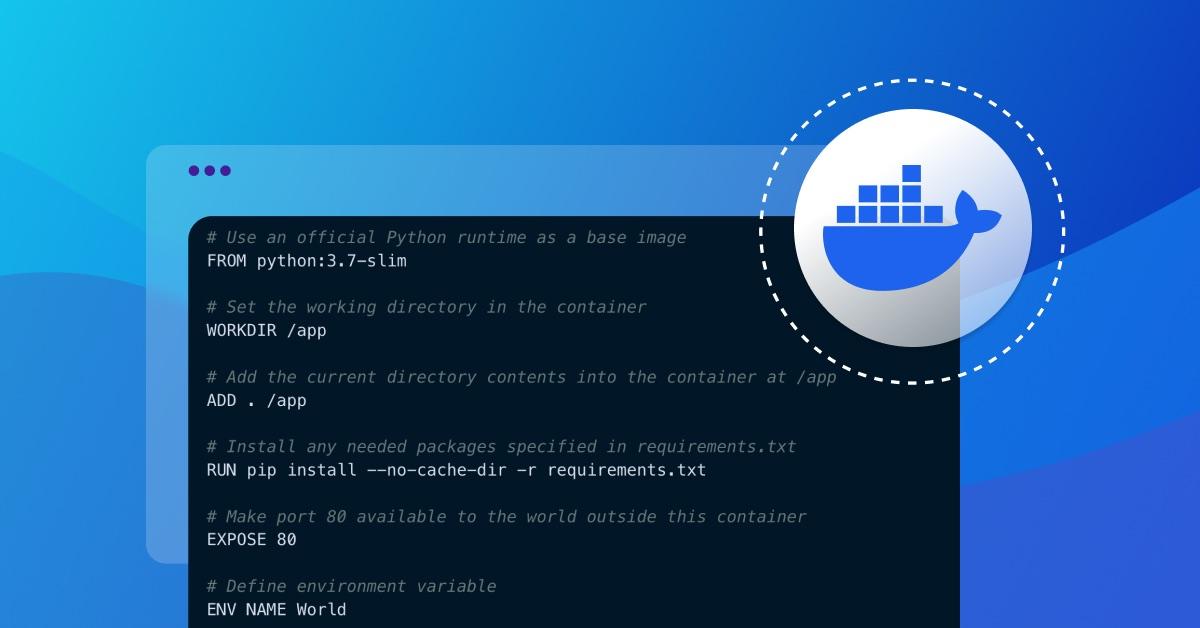

Here is an example of a simple Dockerfile:

# Use an official Python runtime as a base image

FROM python:3.7-slim

# Set the working directory in the container

WORKDIR /app

# Copy the current directory contents into the container at /app

COPY . /app

# Install any needed packages specified in requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

# Make port 80 available to the world outside this container

EXPOSE 80

# Define environment variable

ENV NAME=World

# Run app.py when the container launches

CMD ["python", "app.py"]

Once you have a Dockerfile, you can use the docker build command to create an image. Here's what that might look like:

docker build -t my-python-app .

This command tells Docker to build an image using the Dockerfile in the current directory (the . at the end of the command), and tag it (-t) as my-python-app.

Note: If you’re a Python developer or in a DevOps team that builds Python application containers, I highly recommend reviewing and applying the best practices for containerizing Python applications with Docker.

Common challenges in Docker image building

Building Docker images is not always straightforward. There are several common challenges that developers and DevOps teams may encounter:

Managing dependencies: One of the most common challenges is managing dependencies. The dependencies for your application may change over time, and it can be difficult to keep your Docker image up to date. To address this, it can be helpful to use a package manager that resolves dependencies for you and records the exact versions it installed, so that every image build starts from a known set of packages.

Image size: Another challenge is keeping the size of your Docker images as small as possible. Large images take longer to build and push to a Docker repository, which can slow down your CI/CD pipeline. To minimize image size, you can use a smaller base image, remove unnecessary files, and use multi-stage builds to minimize final image contents.

Security: Security is a major concern when building Docker images. You need to ensure that your images do not contain any vulnerabilities that could be exploited by attackers. To help with this, you can use tools like Docker Bench for Security and Snyk Container to scan your images for vulnerabilities.

Tip 1: Add a .dockerignore file to your repository

In the world of Docker and container security, efficiency is a key factor. If you're a developer or a DevOps professional, you'll know that every second counts when it comes to continuous integration (CI) processes.

One simple yet effective way to speed up Docker image building is to add a .dockerignore file to your repository. Let's look at how a .dockerignore file works, how it can make your Docker image builds faster, and how to add one to a repository step by step.

What is a .dockerignore file?

A .dockerignore file, much like a .gitignore file, is a text file that specifies a pattern of files and directories that Docker should ignore when building an image from a Dockerfile. That is, it tells Docker which files or directories to disregard in the context of the build.

# Sample .dockerignore file

node_modules

npm-debug.log

Dockerfile*

*.md

The code snippet above is an example of a .dockerignore file that instructs Docker to ignore the node_modules directory, the npm-debug.log file, any file whose name starts with Dockerfile (such as Dockerfile.dev), and any Markdown files in the root of the build context.

How can a .dockerignore file speed up Docker image building?

When Docker builds an image from a Dockerfile, it sends the build context (i.e. the directory you pass to docker build, usually the current directory, and everything beneath it) to the Docker daemon. Without a .dockerignore file, the Docker daemon receives files and directories the image never uses, such as local dependency folders, logs, and documentation, which can significantly increase the build time.

By incorporating a .dockerignore file into your repository, you instruct Docker to disregard unnecessary files and directories, reducing the size of the build context. As a result, Docker can build images faster, saving you valuable CI seconds.
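To get a feel for how much a .dockerignore file could save, you can compare the size of the whole directory with the size of what you plan to exclude. The following is a rough sketch only: it creates a throwaway demo project in a temporary directory (the layout and the 1 MiB filler file are made up for illustration) and uses du as an approximation of the build context, which is not exactly how Docker measures it.

```shell
# Build a throwaway demo project (hypothetical layout, for illustration only).
demo_dir="$(mktemp -d)"
mkdir -p "$demo_dir/node_modules/some-package" "$demo_dir/src"

# 1 MiB filler file standing in for installed dependencies.
dd if=/dev/zero of="$demo_dir/node_modules/some-package/blob.bin" bs=1024 count=1024 2>/dev/null
printf 'console.log("hello");\n' > "$demo_dir/src/app.js"

# Approximate the build context with and without node_modules (sizes in KiB).
total_kb=$(du -sk "$demo_dir" | cut -f1)
ignored_kb=$(du -sk "$demo_dir/node_modules" | cut -f1)
remaining_kb=$((total_kb - ignored_kb))

echo "Whole directory:        ${total_kb} KiB"
echo "node_modules (ignored): ${ignored_kb} KiB"
echo "Left in build context:  ${remaining_kb} KiB"
```

In a real repository, you would point du at your project root and at each directory you are considering for .dockerignore.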

Step-by-step guide to adding a .dockerignore file to a repository

Here's how you can add a .dockerignore file to your repository:

1. Create a new file named .dockerignore in the root directory of your repository.

touch .dockerignore

2. Open the newly created .dockerignore file with your favorite text editor.

nano .dockerignore

3. Add patterns for files and directories that should be ignored during the Docker image build. For instance, you can ignore all log files and the node_modules directory, as shown below:

*.log

node_modules/

4. Save and close the .dockerignore file. The next time you build a Docker image from this repository, Docker will ignore the specified files and directories, and your Docker image build will be faster!
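In a script or CI job, the steps above can be done without opening an editor. This is a minimal sketch, assuming a POSIX shell; the temporary directory is only a stand-in for your repository root.

```shell
# Stand-in for the repository root; in a real repo you would use its path instead.
repo_dir="$(mktemp -d)"

# Write the ignore patterns in one step (this overwrites an existing file).
cat > "$repo_dir/.dockerignore" <<'EOF'
*.log
node_modules/
EOF

# Show the result.
cat "$repo_dir/.dockerignore"
```

Because the heredoc overwrites the file, use >> instead of > if you want to append patterns to an existing .dockerignore.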

A .dockerignore file is a simple yet powerful tool that can significantly reduce Docker image build time. By incorporating it into your repositories, you can make your Docker and CI processes more efficient and secure.

Tip 2: Use a dependency lockfile

A dependency lockfile is a crucial tool in achieving faster and deterministic Docker builds. It locks down the versions of your project's dependencies, ensuring that all future builds use the exact same versions. This not only improves the speed of your builds, but also the reliability and security of your Docker images.

What is a dependency lockfile and why is it important?

A dependency lockfile is a file generated by your package manager that describes the exact version and dependencies of your project at a specific point in time. It's like a snapshot of your project's dependencies. In the context of Docker, it's a record of what software versions were used to build a specific Docker image.

The lockfile is vital for several reasons. It ensures that your Docker builds are deterministic and repeatable. This means that every time you build your Docker image, you'll get the same result. This is critical for debugging and security compliance, as it ensures that you're always aware of exactly what's in your Docker image.

Moreover, a dependency lockfile can significantly speed up your Docker builds. Because the lockfile pins exact versions, it changes only when your dependencies do. If you copy it into the image before the rest of your code, Docker can reuse the cached installation layer and skip the installation step in future builds until the lockfile changes, leading to faster build times.

How a dependency lockfile ensures faster and deterministic builds

Let's consider a simple Dockerfile without a lockfile:

FROM node:20

WORKDIR /app

COPY package.json .

RUN npm install

COPY . .

CMD [ "node", "app.js" ]

Without a lockfile in the image, npm install resolves the newest versions that satisfy the ranges in package.json each time this layer is rebuilt. This is a time-consuming process and makes our builds non-deterministic, as the installed dependencies can vary between builds.

Note: If you are building Node.js applications with Docker, I highly recommend reviewing 10 best practices to containerize Node.js web applications with Docker.

Now, let's use a lockfile:

FROM node:20

WORKDIR /app

COPY package.json package-lock.json ./

RUN npm ci

COPY . .

CMD [ "node", "app.js" ]

Here, we're copying package-lock.json into the Docker image alongside package.json and using npm ci instead of npm install. The npm ci command installs exactly the versions recorded in the lockfile and fails if package.json and the lockfile disagree, which makes builds deterministic. Because the two files are copied before the rest of the source code, Docker can reuse the cached dependency layer until one of them changes.
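The link between an unchanged lockfile and a reusable layer can be illustrated outside Docker. In the sketch below, cksum is only a stand-in for Docker's own change detection, whose exact mechanics are an implementation detail, and the lockfile contents are hypothetical placeholders rather than real npm output.

```shell
# Illustration only: cksum stands in for Docker's own change detection.
work_dir="$(mktemp -d)"
lockfile="$work_dir/package-lock.json"

# Hypothetical minimal lockfile content.
printf '{ "lockfileVersion": 3 }\n' > "$lockfile"
first_build=$(cksum < "$lockfile")

# Nothing changed between builds: same fingerprint, so the layer could be reused.
second_build=$(cksum < "$lockfile")

# A dependency was added or bumped: the fingerprint changes, so npm ci would run again.
printf '{ "lockfileVersion": 3, "packages": {} }\n' > "$lockfile"
third_build=$(cksum < "$lockfile")

[ "$first_build" = "$second_build" ] && echo "build 2: lockfile unchanged, dependency layer reusable"
[ "$first_build" != "$third_build" ] && echo "build 3: lockfile changed, dependencies reinstalled"
```

The point of the sketch is that the lockfile acts as a stable key: as long as it stays the same, there is no reason to repeat the installation step.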

How to use a dependency lockfile in Docker

To use a dependency lockfile in Docker, you must ensure that the lockfile is included in your Docker build context. You can do this by copying the lockfile into your Docker image using the COPY command in your Dockerfile.
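A quick pre-build check can catch a lockfile that is missing or excluded before the build starts. The check_lockfile function below is a hypothetical helper, not part of Docker, and its .dockerignore test is deliberately simplified: it only detects a line that names the lockfile exactly, not wildcard patterns that might also match it.

```shell
# Hypothetical helper: fail early if the lockfile would not reach the build.
check_lockfile() {
  dir=$1
  name=$2
  if [ ! -f "$dir/$name" ]; then
    echo "missing: $name is not in $dir"
    return 1
  fi
  # Simplified check: only catches an exact-name entry, not wildcard patterns.
  if [ -f "$dir/.dockerignore" ] && grep -Fxq "$name" "$dir/.dockerignore"; then
    echo "excluded: $name is listed in .dockerignore"
    return 1
  fi
  echo "ok: $name will be part of the build context"
}

# Demo against a throwaway directory with a placeholder lockfile.
context_dir="$(mktemp -d)"
printf '{}\n' > "$context_dir/package-lock.json"
result=$(check_lockfile "$context_dir" package-lock.json)
echo "$result"
```

In a CI job, you could run a check like this against the repository root right before docker build and stop the pipeline if it returns a non-zero status.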

Here's an example with a Python project using Pipfile.lock:

FROM python:3.7

WORKDIR /app

COPY Pipfile Pipfile.lock ./

RUN pip install pipenv && pipenv install --system --deploy

COPY . .

CMD [ "python", "app.py" ]

In this Dockerfile, we're copying Pipfile and Pipfile.lock into the Docker image. We then use pipenv install --system --deploy to install the exact versions of the dependencies defined in Pipfile.lock. This provides deterministic builds and optimizes build performance by leveraging Docker's caching mechanism.

A dependency lockfile is a vital tool for achieving faster, deterministic Docker builds. It not only keeps your Docker images consistent and reliable but also enhances the security of your images by ensuring that you're always aware of the software versions inside your Docker images.

Tip 3: Group commands based on their likelihood to change

Container security and software development are enhanced by efficient practices, and Docker image building is no exception. One such practice is the grouping of commands based on their likelihood to change. This method can help you save considerable time and resources during the Docker image rebuilding process. Let's delve into how this works.

The principle of command grouping in Docker

In Docker, each command you write in a Dockerfile is a separate build step, and commands that change the filesystem, such as RUN, COPY, and ADD, each add a new layer to the Docker image. Docker caches the result of each step to speed up the image building process.

When Docker builds an image, it processes each command line by line. If a command hasn't changed since the last build, Docker will use the cached layer instead of executing the command again, significantly reducing the build time.

However, if a single line changes, Docker can't use the cache for that line or any line after it. The same applies when the files brought in by a COPY or ADD command change, even though the command text stays the same. Therefore, if a frequently changing line is placed at the beginning of the Dockerfile, the cache is rarely used, and Docker has to rebuild most of the image every time. This is where command grouping comes into play.
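The invalidation rule can be modeled in a few lines of shell. The first_rebuilt_step function below is a hypothetical helper and a deliberate simplification: it compares only the text of each line in two versions of a Dockerfile and ignores the file-content checks Docker performs for COPY and ADD. The Dockerfile contents are sample data for the demo.

```shell
# Print the number of the first instruction whose text differs between two
# Dockerfiles, or 0 if every instruction matches. Simplification: this looks
# only at instruction text, not at the files a COPY or ADD brings in.
first_rebuilt_step() {
  awk '
    NR == FNR { old[FNR] = $0; next }              # first file: remember each line
    $0 != old[FNR] { print FNR; found = 1; exit }  # second file: first mismatch
    END { if (!found) print 0 }
  ' "$1" "$2"
}

work_dir="$(mktemp -d)"
cat > "$work_dir/Dockerfile.old" <<'EOF'
FROM node:20
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci
COPY . .
EOF

# Same file, but the install instruction (step 4) was edited.
sed 's/^RUN npm ci$/RUN npm ci --omit=dev/' "$work_dir/Dockerfile.old" > "$work_dir/Dockerfile.new"

step=$(first_rebuilt_step "$work_dir/Dockerfile.old" "$work_dir/Dockerfile.new")
echo "Steps 1 to $((step - 1)) come from cache; steps $step onward are rebuilt"
```

Here the edit lands on step 4, so the first three steps stay cached. Had the edit been on the first line, every step would be rebuilt, which is why the position of frequently changing lines matters.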

Faster Docker image rebuild by grouping commands

By grouping commands and organizing them based on how likely they are to change, you can optimize the use of the Docker cache and significantly speed up the Docker image rebuilding process.

Essentially, you want to structure your Dockerfile so that commands that change frequently are at the end. This way, Docker can use cached layers for the unchanged, less frequently updated commands, and only the layers for the changed commands will need to be rebuilt.

Place the least likely to change commands at the top

A closely related tip is to place the commands that are least likely to change at the top of the Dockerfile, so that the Docker cache is used as often as possible.

As described above, the layers produced by a Dockerfile are stacked on top of each other to form the final Docker image. If a layer hasn't changed (i.e., the command is the same as in previous builds), Docker uses the cached version instead of rebuilding that layer.

The catch here is that Docker invalidates the cache for a layer if any of the layers it depends on have changed. This means that if you modify a command at the top of your Dockerfile, all layers below it will be re-built, even if their commands haven't changed.

By placing the least likely to change commands at the top of your Dockerfile, you can ensure that Docker will use the cache for these layers in most of your builds. This approach reduces the number of layers Docker needs to rebuild, thus speeding up the build process.

Practical examples of command grouping

Let's look at an example Dockerfile:

FROM debian:stretch

RUN apt-get update && apt-get install -y \

python3 \

python3-pip

COPY . /app

WORKDIR /app

RUN pip3 install -r requirements.txt

CMD ["python3", "app.py"]

In this Dockerfile, the RUN apt-get update && apt-get install -y python3 python3-pip command rarely changes and already sits near the top, so its layer is usually served from the cache. The problem is COPY . /app: it is invalidated whenever any application file changes, and because RUN pip3 install -r requirements.txt comes after it, the dependencies are reinstalled on every code change, even when requirements.txt is untouched.

The optimized Dockerfile copies requirements.txt on its own, installs the dependencies, and only then copies the rest of the application code:

FROM debian:stretch

RUN apt-get update && apt-get install -y \

python3 \

python3-pip

WORKDIR /app

COPY requirements.txt /app/

RUN pip3 install -r requirements.txt

COPY . /app

CMD ["python3", "app.py"]

Now a change to the application code invalidates only the final COPY . /app layer, and the dependency installation is rerun only when requirements.txt changes. By grouping commands based on their likelihood to change, developers and DevOps teams can optimize Docker image rebuilding, save CI seconds, and improve container security and software development practices.

Conclusion

The benefit of rebuilding Docker images faster stretches beyond just the time saved. It significantly influences the efficiency of the CI pipeline and the overall software development process.

A faster Docker image building process leads to shorter build times in the CI pipeline, which results in quicker feedback loops for developers. This accelerates the pace of software development, enabling developers to iterate on their changes more quickly.

Moreover, it also leads to cost savings as less computational resources are used in the CI pipeline. In the world of cloud computing, where costs are often directly tied to CPU usage and build time, a faster Docker image build process can lead to substantial savings over time.

Lastly, the overall security posture of your application can be improved. Faster build times mean quicker feedback on potential security issues in your Docker images, allowing for faster remediation and thus reducing the window of exposure.

In conclusion, adopting these strategies to rebuild Docker images faster is not just a nice-to-have but a necessity in today’s fast-paced world of software development. It is essential not only for boosting the speed and efficiency of your CI pipeline but also for improving the security and cost-effectiveness of your software development process.
