Take actions to improve security in your Docker images

William Henry

April 17, 2019

Welcome to the Docker security report: Shifting Docker security left. This report is split into several posts:

The top two most popular Docker base images each have over 500 vulnerabilities

Take actions to improve security in your Docker images

Or download our lovely handcrafted pdf report, which contains all of this information and more in one place.

Choosing the right base image

A popular approach to this challenge is to have two types of base images: one used during development and unit testing, and another for later-stage testing and production. In later-stage testing and production, your image does not require build tools such as compilers (for example, javac), build systems (such as Maven), or debugging tools. In fact, in production, your image may not even require Bash.

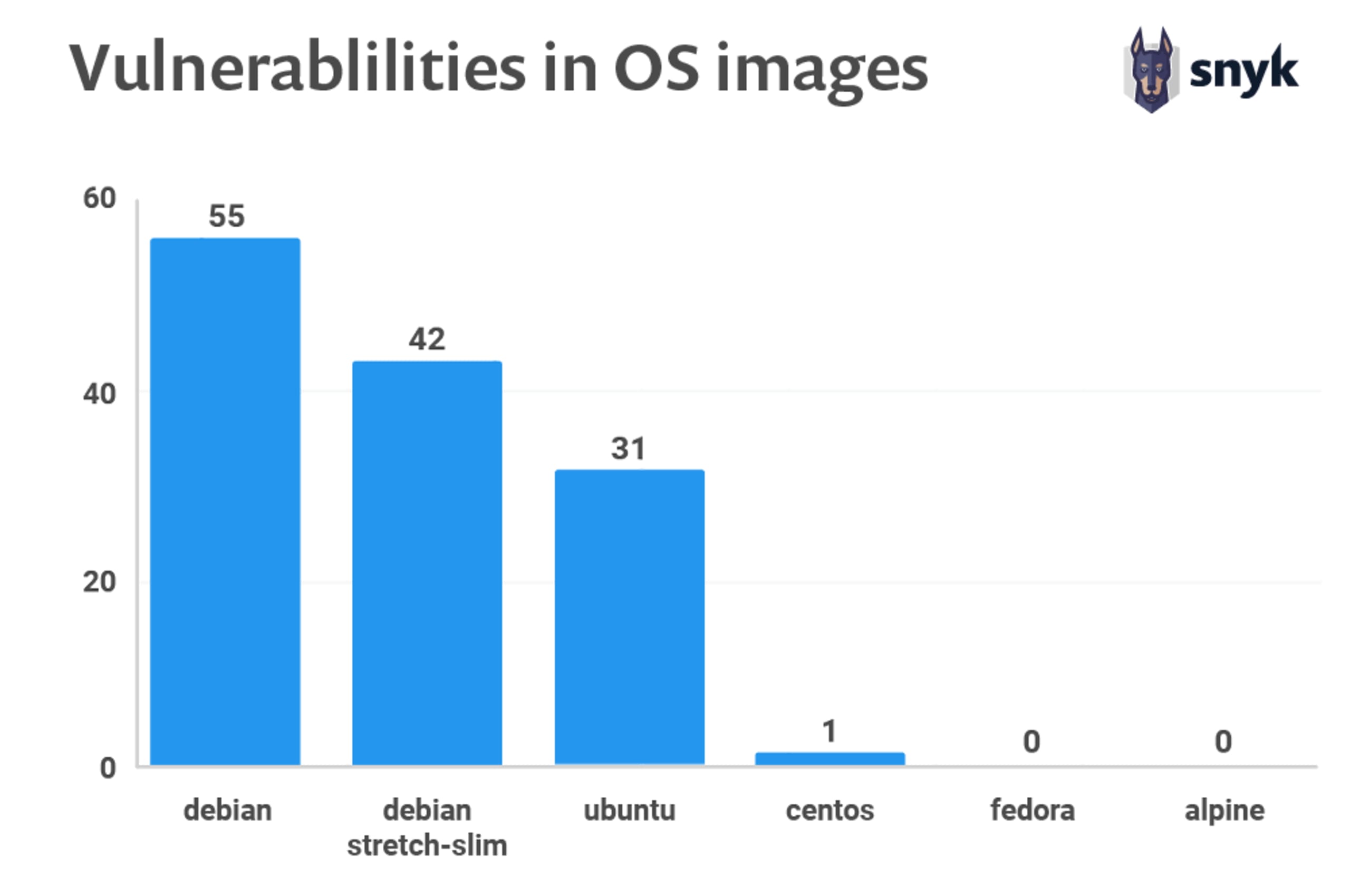

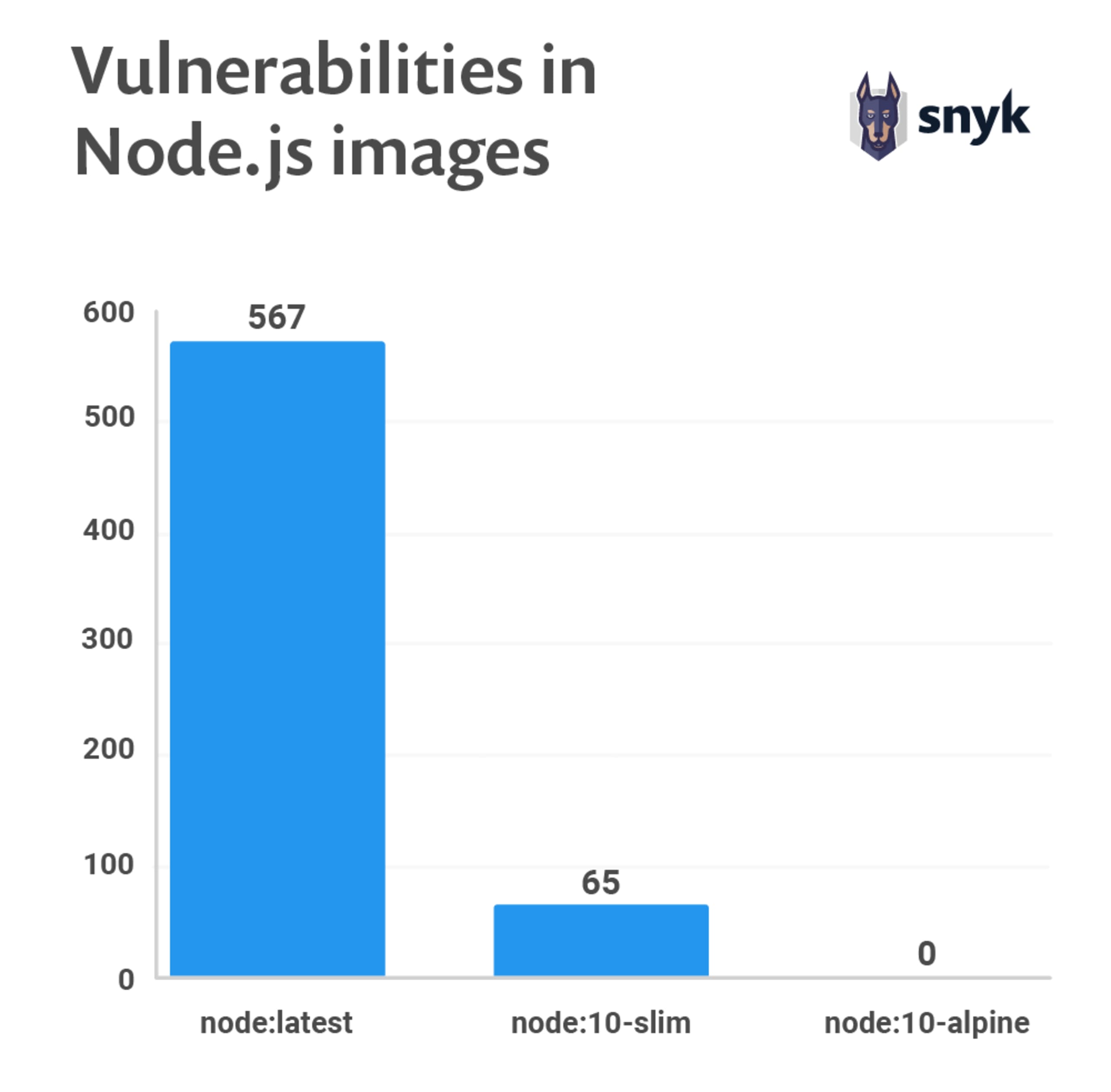

We see dramatic differences between the basic operating system images and their different variants. Most of the time, a full-blown operating system image is not necessary. Image build tools, like Buildah, allow you to build images from scratch and install only the packages you need and their dependencies. This considerably reduces the attack surface of your images. Consider, for example, a Python application image that contains nothing but the Python package, its dependent packages, and the Python application.

An additional advantage of Buildah is that it does not require the Docker daemon process. This is important in large-scale container deployment platforms that reuse resources for building container images. The Docker daemon is a privileged process that listens on an open socket. If the Docker daemon is exploited, the node is compromised, and often that means an entire cluster can be compromised. You can reduce this risk either by explicitly isolating build nodes or by using a tool like Buildah, which removes the need for a Docker daemon.
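The from-scratch approach described above can be sketched roughly as follows. This is an illustration under stated assumptions, not a recommended build script: it assumes a Fedora or RHEL-like build host with `buildah` and `dnf` installed, run as root (or inside `buildah unshare`), and the file `app.py`, the release version, and the image name `my-python-app` are placeholders.

```shell
#!/bin/sh
# Sketch: build a minimal Python image from an empty root with Buildah.
# Assumptions (not from the report): Fedora-like host, root privileges,
# an app.py in the current directory, and the image name my-python-app.
build_minimal_python_image() {
    ctr=$(buildah from scratch) || return 1      # empty working container
    mnt=$(buildah mount "$ctr") || return 1      # mount its root filesystem

    # Install only Python and its hard dependencies into the empty root.
    dnf install -y --installroot="$mnt" --releasever=30 \
        --setopt=install_weak_deps=false python3 || return 1
    dnf clean all --installroot="$mnt"

    # Add the application and tell the image how to start it.
    cp app.py "$mnt/app.py" || return 1
    buildah config --cmd "python3 /app.py" "$ctr"

    buildah umount "$ctr"
    buildah commit "$ctr" my-python-app          # no Docker daemon involved
}

# The function is only defined here; call build_minimal_python_image
# on a suitable build host.
```

The resulting image holds no shell, package manager, or compiler unless one of the installed packages pulls it in as a dependency.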

Selecting a stripped-down version or another implementation of a Linux distro can help trim down the number of vulnerabilities. When scanning the Alpine base image, a minimal Docker image of about 5 MB based on Alpine Linux, we don’t find any known vulnerabilities. However, that is mostly because the Alpine project does not maintain a security advisory program, so if vulnerabilities are present there is no official advisory through which to share them. Still, Alpine makes a good case for a very minimal, stripped-down base image upon which to build.

While no vulnerabilities were detected in the version of the Alpine image we tested, that’s not to say that it is necessarily free of security issues. Alpine Linux handles vulnerabilities differently than the other major distros, which prefer to backport sets of patches. The Alpine project prefers rapid release cycles for its images, with each image release providing a system library upgrade.

As you can see in the graph above, changing the base image inside the Dockerfile or simply using another tag of a standard image can make a lot of difference.

When building your own image from a Dockerfile be sure that you do not depend on larger images than necessary. This shrinks the size of your image and also minimizes the number of vulnerabilities introduced through your dependencies.

Based on scans performed by Snyk users, we found that 44% of Docker image scans had known vulnerabilities for which a newer and more secure base image was available. This remediation advice is unique to Snyk, and developers can act on it to upgrade their Docker images. Automating the process of scanning for newer or better base images, and alerting on the results, can be considered a best practice.

Use multi-stage builds

Multi-stage builds are available when using Docker 17.05 and higher. These kinds of builds are designed to create an optimized Dockerfile that is easy to read and maintain.

With a multi-stage build, you can use multiple images and selectively copy only the artifacts needed from a particular image. You can use multiple FROM statements in your Dockerfile, and you can use a different base image for each FROM, copying the artifacts from one step to the next. You can leave the artifacts that you don't need behind and still end up with a concise final image.

This method of creating a tiny image not only significantly reduces complexity but also reduces the chance of including vulnerable artifacts in your image. So instead of images that are built on images that are in turn built on other images, with multi-stage builds you are able to “cherry-pick” your artifacts without inheriting the vulnerabilities from the base images on which you rely. More information on how to create multi-stage builds can be found in the Docker docs.
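As a minimal sketch of the idea, the script below writes a two-stage Dockerfile for a hypothetical Java application. The image tags, the paths, and the artifact name `myapp.jar` are assumptions chosen for illustration; substitute whatever your project actually uses.

```shell
#!/bin/sh
# Write a two-stage Dockerfile: stage 1 carries the build tools,
# stage 2 carries only the runtime and the built artifact.
# Image tags, paths, and the artifact name are illustrative assumptions.
workdir=$(mktemp -d)
cat > "$workdir/Dockerfile" <<'EOF'
# Stage 1: build with Maven and a full JDK (never shipped)
FROM maven:3-jdk-8 AS build
WORKDIR /src
COPY . .
RUN mvn -q package

# Stage 2: runtime only; copy just the artifact out of the build stage
FROM openjdk:8-jre-alpine
COPY --from=build /src/target/myapp.jar /app/myapp.jar
CMD ["java", "-jar", "/app/myapp.jar"]
EOF

# Count the stages; only the last one ends up in the final image.
stages=$(grep -c '^FROM ' "$workdir/Dockerfile")
echo "stages: $stages"

# To build, place the Dockerfile in your project root and run
# (requires Docker 17.05 or higher):
#   docker build -t myapp:1.0 .
```

Maven, the JDK, and the source tree stay behind in the first stage, so any vulnerabilities they carry are not inherited by the image you deploy.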

As mentioned earlier in the report, another approach is to use tools like Buildah to create minimal production images with only the packages required to run your application. For more information on Buildah see Buildah.io and Podman and Buildah for Docker Users.

Rebuilding images

Every Docker image is built from a Dockerfile. The Dockerfiles for the Docker images on Docker Hub are publicly available on GitHub. A Dockerfile contains a set of instructions that automate the steps you would otherwise take manually to create an image. These instructions can also import libraries and install custom software. In the State of Open Source Security 2019 report, we discovered that 20% of the Docker images with vulnerabilities could have been fixed by a simple rebuild of the image.

A built image is basically a snapshot taken at that moment in time. When you depend on a base image without a strict tag, the base image can be different every time you rebuild. Likewise, when packages are installed using a package installer, a rebuild can pull in newer package versions and so change the image.

A Dockerfile containing the following can potentially have a different binary with every rebuild.

    FROM ubuntu:latest
    RUN apt-get -y update && apt-get install -y python
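To see which base image references in a Dockerfile can change between rebuilds, a small check along these lines may help. It is a sketch only: the helper name `find_floating_bases` is hypothetical, and it handles simple `FROM` lines (it does not resolve `ARG` substitutions). It flags images that have no tag or use `:latest`, and skips `scratch`, digest-pinned images, and references to earlier build stages.

```shell
#!/bin/sh
# find_floating_bases FILE
# Print FROM lines whose base image has no tag or uses ":latest".
# Hypothetical helper for illustration; ARG substitution is not resolved.
find_floating_bases() {
    awk 'toupper($1) == "FROM" {
        i = 2
        while ($i ~ /^--/) i++                  # skip flags such as --platform=...
        image = $i
        if (toupper($(i + 1)) == "AS") stage[$(i + 2)] = 1
        if (image == "scratch" || (image in stage)) next
        if (index(image, "@") > 0) next         # pinned by digest
        n = split(image, part, "/")             # drop registry/path, which may hold a port
        if (index(part[n], ":") == 0 || part[n] ~ /:latest$/) print
    }' "$1"
}

# Example: check the Dockerfile shown above.
example=$(mktemp)
printf 'FROM ubuntu:latest\nRUN apt-get -y update && apt-get install -y python\n' > "$example"
find_floating_bases "$example"
```

A flagged line is not necessarily wrong, since a floating tag is also what lets a rebuild pick up fixes. The check only makes visible where an image can differ from one build to the next.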

Any Docker image should be rebuilt regularly so that it picks up fixes for known vulnerabilities that have already been released. When rebuilding, use the --no-cache option to avoid cache hits and to ensure a fresh download.

For example:

    docker build --no-cache -t myimage:myTag myPath/

In summary, follow these best practices when rebuilding your image:

Each container should have only one responsibility.

Containers should be immutable, lightweight and fast.

Don’t store data in your container (use a shared data store).

Containers should be easy to destroy and rebuild.

Use a small base image (such as Alpine Linux). Smaller images are easier to distribute.

Avoid installing unnecessary packages. This keeps the image nice, clean and safe.

Avoid cache hits when building.

Auto-scan your image before deploying with a tool like Snyk’s container scan to avoid pushing vulnerable containers to production.

Scan your images daily both during development and production for vulnerabilities. Based on that, automate the rebuild of images if necessary.

Scanning images during development

Creating an image from a Dockerfile, and even rebuilding an image, can introduce new vulnerabilities into your system. Previously we saw that 68% of users believe that developers have a fair amount of responsibility for container security. Scanning your Docker images during development should be part of your workflow to catch vulnerabilities as early as possible.

When thinking of shifting security left, developers should ideally be able to scan a Dockerfile and their images from their local machine before they are committed to a repository or enter a build pipeline.

This does not mean that you should replace CI pipeline scans with local scans, but rather that it is preferable to scan at all stages of development, and that the scans should preferably be automated. Think about automated scans during the build, before pushing the image to a registry, and before pushing an image to a production environment. Refusing to let an image into a registry or into the production system because an automated scan found new vulnerabilities should be considered a best practice.
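One way to picture such a gate is the sketch below. It assumes, purely for illustration, that your scanner can export the vulnerability IDs it found to a text file, one ID per line, and that you keep a baseline file of IDs you already know about. The function names and the file format are hypothetical, and the IDs in the demo are placeholders rather than real advisories.

```shell
#!/bin/sh
# new_vulns BASELINE CURRENT
# Print IDs present in CURRENT but not in BASELINE (one ID per line).
# The file format is an assumption; adapt it to what your scanner exports.
new_vulns() {
    base_sorted=$(mktemp)
    cur_sorted=$(mktemp)
    sort -u "$1" > "$base_sorted"
    sort -u "$2" > "$cur_sorted"
    comm -13 "$base_sorted" "$cur_sorted"   # lines unique to the second file
    rm -f "$base_sorted" "$cur_sorted"
}

# gate BASELINE CURRENT
# Return non-zero when the scan found IDs that are not in the baseline,
# so a pipeline step can stop before the image is pushed.
gate() {
    found=$(new_vulns "$1" "$2")
    if [ -n "$found" ]; then
        echo "Blocking push, new vulnerabilities:" >&2
        echo "$found" >&2
        return 1
    fi
    echo "No new vulnerabilities; image may be pushed."
}

# Demo with placeholder IDs (not real advisories).
demo=$(mktemp -d)
printf 'VULN-0001\nVULN-0002\n' > "$demo/baseline.txt"
printf 'VULN-0001\nVULN-0002\nVULN-0003\n' > "$demo/current.txt"
gate "$demo/baseline.txt" "$demo/current.txt" || echo "push refused"
```

In a pipeline, the same function could guard the push step, for example `gate baseline.txt current.txt && docker push myimage:myTag`.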

Snyk’s recently released container vulnerability management solution scans Docker images by extracting the image layers and inspecting the package manager manifest info. We then compare every OS package installed in the image against our Docker vulnerability database. In addition, it is crucial to scan the key binaries installed on the images. Snyk also supports scanning key binaries that are often installed not by the OS package manager (dpkg, RPM and APK) but by other methods, such as a RUN command.

Giving developers the tools to scan Dockerfiles and images during development on their local machines creates another layer of protection and lets developers actively contribute to a more secure system in general.

Scanning containers during production

It turns out that 91% of respondents do not scan their Docker images in production. Actively checking your containers could save you a lot of trouble when a new vulnerability is discovered and your production system might be at risk.

Periodically scanning your Docker images (for example, daily) is possible using the Snyk monitor capabilities for containers. Snyk creates a snapshot of the image’s dependencies for continuous monitoring.

Additionally, you should also activate runtime monitoring. Scanning for unused modules and packages inside your runtime gives insight into how to shrink images. Removing unused components prevents unnecessary vulnerabilities from entering both system and application libraries. This also makes an image more easily maintainable.

Continue reading:

10 best practices to containerize Node.js web applications with Docker - If you’re a Node.js developer you are going to love this step by step walkthrough, showing you how to build secure and performant Docker base images for your Node.js applications.

10 Docker Security Best Practices - details security practices that you should follow when building Docker base images and when pulling them, and also introduces the reader to Docker Content Trust.

Are you a Java developer? You’ll find this resource valuable: Docker for Java developers: 5 things you need to know not to fail your security