Secure at Inception: The New Mandate for AI-Assisted Software Development

Daniel Berman

Software development is undergoing a fundamental shift. AI code assistants are no longer a novelty; they are the new standard for developer productivity and innovation. This is not an emerging trend but an established reality: roughly half of all development teams use GenAI tools today, and nearly 70% of engineering leaders report spending less time on traditional development tasks. The era of AI-assisted development is already in full swing.

These agility gains come at a price: a security challenge on two fronts that traditional tools were not designed for. On one front is the sheer pace at which AI tools generate potentially insecure code. According to research, almost 50% of AI-generated code is insecure, so a massive attack surface is opening up at unprecedented speed. The risk is compounded by the new paradigm of “vibe coding”, by the growing number of developers without formal training, and by the fact that AI models are often trained on flawed data from public sources. On the other front is an enormous backlog of insecure human-written code that weighs software teams down.

Traditional security tools cannot cope with this dual burden. They are too slow for the new AI workflow and not intelligent enough to work through legacy security debt efficiently. That leaves teams with a dilemma: accept slower innovation or accept an unacceptable level of risk.

The existing shift-left model is reaching its limits

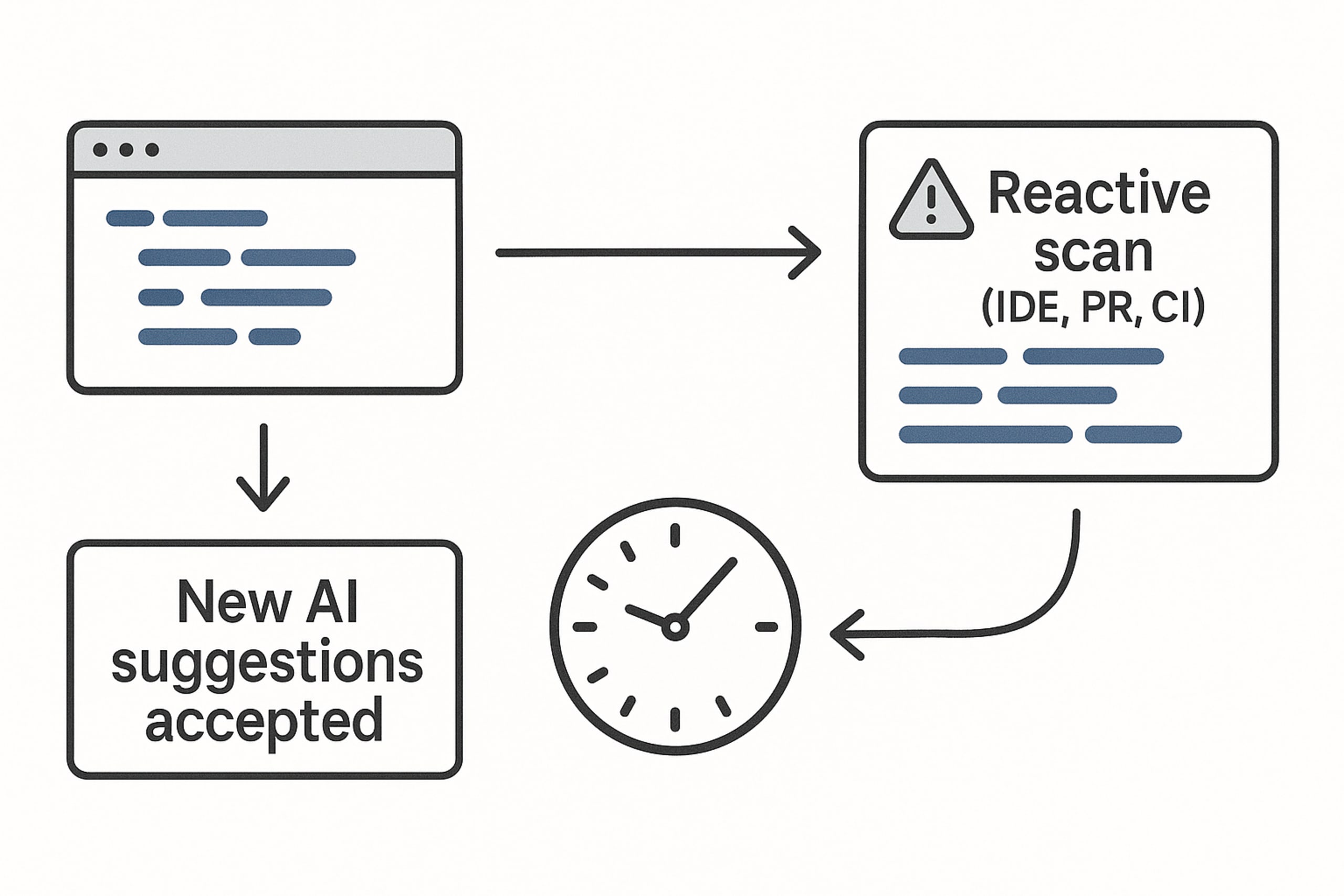

Until now, the answer to AppSec challenges has always been to shift left, moving vulnerability detection into earlier phases of the development lifecycle. In principle, that still holds. But vulnerability scans in the IDE, on pull requests, or in CI/CD pipelines, as practiced in the traditional approach, cannot keep pace with the release frequency of AI-assisted development.

By the time such a scan runs, a developer may already have accepted dozens of code suggestions from the AI. Having to retrofit fixes at that point would be as paralyzing as it is costly, and it would wipe out the agility of AI-assisted development workflows altogether. Existing tools are also reactive by nature: they find problems in code after it has been written. What they cannot do is give the AI the proactive guidance it would need to generate secure code from the outset.

What is needed, therefore, is a new approach that takes the existing ones a step further.

The solution: “Secure at Inception” as a new paradigm

Slowing down innovation is not an option. Instead, security has to be integrated directly into the AI-native workflow. This is where “Secure at Inception” comes in: a new approach that relies on proactive guidance of the AI coding agent rather than reactive scans, so that the agent generates secure code from the first prompt. The goal is to make security an invisible, automatic part of code creation.

Snyk Studio is the only dev-first security product that addresses this new reality. With it, we address both fronts of the secure development challenge in one comprehensive solution.

On the new front of AI code generation, Snyk Studio enables “Secure at Inception” by integrating our leading security engines directly into the AI assistant you already use. AI-generated code is not simply scanned after the fact. Instead, security scans and fixes run inside your coding assistant's agentic workflow, so the code is secure from the first prompt.

For legacy security debt, Snyk Studio applies the power of AI through Intelligent Remediation. Backlogs can be cleared faster and at greater scale than ever before, freeing developers to focus fully on what comes next.

Securing AI-assisted development in practice

Preventing problems in first-party code

Suppose a developer at a healthcare provider wants to build a feature for displaying patient records. She turns to her AI code assistant and enters the following prompt: “Create an API endpoint that retrieves a patient record from the database by its ID.”

The AI, focused solely on functionality, implements this directly as a clean, efficient function that takes a recordId from the URL and fetches the corresponding patient record. However, the first version of the generated code contains a critical vulnerability: an Insecure Direct Object Reference (IDOR). There is no check that the logged-in user is authorized to view the requested patient record.

A traditional security scanner would likely flag this problem, ideally in the IDE or on the pull request. But that requires the developer to run a SAST scan, a step that all too often proves slow and disruptive.

With “Secure at Inception”, the process looks very different. Snyk Studio gives the AI code assistant a hard-coded rule that reads: “Run a Snyk Code scan on every newly generated piece of code right away. If issues are found, apply fixes based on the results and verify them with another scan.”
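The control flow behind such a rule can be sketched in a few lines. This is a minimal illustration, not Snyk Studio's implementation: the `Finding` type, the `scan` and `fix` callbacks, the `max_rounds` limit, and the stub functions are all hypothetical stand-ins for the scanner and the AI assistant.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    rule_id: str   # illustrative label such as "IDOR", not a real scanner rule ID
    message: str


# Hypothetical callback types: a scanner returns findings for a piece of code,
# a fixer returns revised code that tries to address those findings.
Scanner = Callable[[str], List[Finding]]
Fixer = Callable[[str, List[Finding]], str]


def generate_securely(code: str, scan: Scanner, fix: Fixer, max_rounds: int = 3) -> str:
    """Scan new code, apply fixes on findings, and rescan until the scan is clean."""
    for _ in range(max_rounds):
        findings = scan(code)
        if not findings:
            return code  # confirmed clean by the most recent scan
        code = fix(code, findings)
    # Verify the last fix attempt before giving up.
    if scan(code):
        raise RuntimeError("findings remain after max_rounds fix attempts")
    return code


# Stubs for demonstration only: the "scanner" looks for an ownership check,
# and the "fixer" appends a marker line that satisfies it.
def stub_scan(code: str) -> List[Finding]:
    if "owner_id" in code:
        return []
    return [Finding("IDOR", "missing ownership check")]


def stub_fix(code: str, findings: List[Finding]) -> str:
    return code + "\n# compare record.owner_id with the session user"


if __name__ == "__main__":
    print(generate_securely("def get_record(record_id): ...", stub_scan, stub_fix))
```

The point of the loop is that code is only returned after a scan has come back clean, and that a bounded number of attempts prevents the assistant from cycling indefinitely on an issue it cannot fix.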

Because of this rule, the AI assistant does not stop at its initial output but immediately scans the generated function with Snyk Code. Snyk's underlying engine detects the IDOR vulnerability right away and supplies the necessary context. The AI assistant then fixes the vulnerability autonomously by adding the crucial logic that compares the patient record's ownerId with the userId of the authenticated user session. After rescanning the code, it receives confirmation from Snyk that the vulnerability has been fixed.
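To make the difference concrete, here is a framework-agnostic sketch of the lookup before and after the fix. The `PatientRecord` type, the in-memory `RECORDS` store, and the function names are assumptions made for illustration; the article does not show the generated code, and a real healthcare system would typically need role-based access rather than a simple owner check.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PatientRecord:
    record_id: str
    owner_id: str  # the user who is allowed to view this record
    summary: str


class Forbidden(Exception):
    """Raised when the caller is not authorized for the requested record."""


# In-memory stand-in for the database; purely illustrative data.
RECORDS: Dict[str, PatientRecord] = {
    "r1": PatientRecord("r1", owner_id="alice", summary="annual check-up"),
    "r2": PatientRecord("r2", owner_id="bob", summary="follow-up visit"),
}


def get_record_insecure(record_id: str) -> PatientRecord:
    # IDOR: any caller who knows or guesses an ID receives the record.
    return RECORDS[record_id]


def get_record(record_id: str, session_user_id: str) -> PatientRecord:
    record = RECORDS.get(record_id)
    # Treat "not found" and "not yours" the same so IDs cannot be probed.
    if record is None or record.owner_id != session_user_id:
        raise Forbidden(record_id)
    return record
```

In a web framework, `record_id` would come from the URL and `session_user_id` from the authenticated session, never from a request parameter the caller controls.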

The developer thus arrives at the final version of the code without detours: secure and validated, straight from the first prompt. These process-driven guardrails make it possible to build strong security into code creation automatically and invisibly.

Protecting against open source vulnerabilities

Proactive prevention matters even more for the open source dependencies that AI assistants frequently suggest. The concern here is less about overlooked vulnerabilities that may still lurk in older package versions. Rather, serious supply chain attacks in the recent past point to a growing threat from malicious code deliberately injected into popular packages.

It is entirely possible, then, that an AI assistant, given the enormous volumes of public data it was trained on, unknowingly suggests compromised dependencies of this kind. Active threats would then find their way straight into your codebase.

Consider the following prompt from another developer: “Using Express, create a new API endpoint to handle a payment process.”

To parse user input, the AI assistant might reach for the open source package qs@6.5.1. The generated endpoint works flawlessly, but this version of the qs library contains a known, serious prototype pollution vulnerability. It allows an attacker to manipulate application behavior and crash the server.

A traditional security scanner will very likely catch this vulnerability too, in the IDE or in a downstream PR check. But the developer still has to interrupt his work and switch context to find a secure alternative.

With “Secure at Inception”, the workflow is different: the security team has already used Snyk to define a simple rule for detecting and fixing new or modified versions of vulnerable dependencies. When the developer enters the same prompt into the AI assistant, Snyk's security intelligence tells the assistant that it must not use the insecure version of the package in the final code output. It generates an API endpoint that works just as well as the previous one but uses the latest, patched version of the qs library.
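Conceptually, a rule like this acts as a gate between the dependencies an assistant proposes and the ones that reach the final output. The sketch below assumes a hypothetical `BLOCKED` policy table and helper names; only the qs@6.5.1 entry comes from the example above, and in a real setup the policy data would come from a vulnerability database rather than a hard-coded dictionary.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical policy data: package name -> versions that must not be used.
# Only the qs 6.5.1 entry is taken from the article's example.
BLOCKED: Dict[str, Set[str]] = {"qs": {"6.5.1"}}


def parse_spec(spec: str) -> Tuple[str, str]:
    """Split 'name@version' into its parts; scoped names like '@scope/pkg@1.0.0' work too."""
    name, sep, version = spec.rpartition("@")
    if not sep or not name or not version:
        raise ValueError(f"expected name@version, got {spec!r}")
    return name, version


def is_allowed(spec: str, blocked: Dict[str, Set[str]] = BLOCKED) -> bool:
    name, version = parse_spec(spec)
    return version not in blocked.get(name, set())


def review_dependencies(specs: Iterable[str],
                        blocked: Dict[str, Set[str]] = BLOCKED) -> List[str]:
    """Return the proposed specs that violate the policy, in their original order."""
    return [spec for spec in specs if not is_allowed(spec, blocked)]


if __name__ == "__main__":
    # The second entry is a made-up package used only to show a passing case.
    print(review_dependencies(["qs@6.5.1", "example-lib@1.0.0"]))
```

An assistant wired to such a gate would pick a different version whenever the returned list is non-empty, before any code is shown to the developer. Note that this sketch passes anything not listed in the policy, so it is only as good as the data behind it.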

All of this happens without alerts or context switches that would break the developer's concentration. This is proactive prevention at its best, turning security from a bumpy obstacle into an invisible enabler.

Clearing backlogs

The value of “Secure at Inception” for preventing new vulnerabilities is obvious. But what can the approach do about the enormous security backlogs that many software teams face?

Labelbox, a specialist in GenAI data generation, offers a real-world example. Aaron Bacchi, the company's sole security engineer, faced a backlog of high-severity SAST issues that had been building up for two years. The development team, however, had to keep feature development moving, so a source of risk kept smoldering with simply no capacity to address it.

Bacchi tackled this with an AI-assisted fixing workflow, integrating Snyk Studio with his AI coding assistant, Cursor. This gave the AI assistant the security context from Snyk that it needed to generate, test, and validate fixes for the vulnerabilities in the backlog.

The results were remarkable: within a few weeks, Bacchi worked through the entire backlog. He not only eliminated the high-severity issues that had accumulated over two years, along with their substantial risk, but also gained more time for strategically important security initiatives, all without tying up the development team. His verdict on how far this can reduce vulnerabilities is correspondingly clear: “An absolute game changer. It is now actually realistic to reduce them all the way to zero.”

From AI as a risk factor to a secure engine of innovation

With the arrival of AI-assisted development, ad hoc measures need to give way to a scalable program with governance and secure code as the default. The existing models of reactive scanning and after-the-fact fixing cannot keep up with the pace and scale at which AI generates code.

“Secure at Inception”, by contrast, gives you an approach for proactively preventing AI-generated vulnerabilities. Combined with “Intelligent Remediation”, it also makes it possible to eliminate legacy security debt efficiently. Snyk Studio brings both together in one comprehensive dev-first solution that sets you on a secure path into the future of software development and lets you put the agility of AI into practice with confidence.

Get started with Secure at Inception in just a few clicks.

Innovate with AI. Stay secure with Snyk.

Learn how Snyk Studio helps you secure AI-assisted development workflows.