Detecting & Patching Vulnerabilities with Continue and Snyk MCP

Continue feels like reclaiming your development environment. Unlike proprietary AI coding assistants that abstract away control, Continue is unapologetically transparent. You see exactly what's happening, choose your own models, and keep your code private. It's like building your own lightsaber. You can sense this commitment to developer autonomy not just in the open-source philosophy, but also in thoughtful design decisions such as MCP integration and model-agnostic flexibility, which let you run everything from GPT-4 to local LLaMA models.

Check out this workshop-in-a-box guide to integrating Snyk Studio security scanning capabilities directly into your development workflow using Continue.

Developer testimonials (in the wild)

One developer wrote on DEV Community: "I was surprised to see it supported codebase context along with a few other options to augment the chat (web crawl of a URL, specific files, etc.), and it actually worked! And all of that is completely free and open source!"

Another developer relies on it even without a connection: “I use continue.dev for auto-complete with local qwen 7b coder when I don't have internet.”

One user even said, “as [a] chat tool it's beautiful because of its flexibility and extensibility. I have a dozen models to use, from OpenAI o1, Gemini 1.5 and 2.0, Claude 3.5, and some others on ollama. And many pre-defined custom prompts and commands for common tasks.

“Usual flow is to ask some model to implement the task with the project codebase as context, then another model to review the code, then a custom prompt with summary recommendations from ‘Clean Code’ books. Adding documentation for frameworks in use and Google search results let to have pretty good results.”

The recurring themes in these user reviews center on freedom from vendor lock-in, privacy control, flexibility to customize, and the value proposition of being completely free and open source. Unsurprisingly, developers appreciate finally having an AI coding assistant that works for them, not the other way around.

Getting started with Continue

Continue's developer journey focuses on getting you productive quickly:

Install the extension: Available on the Visual Studio Marketplace for VS Code, or as a plugin for JetBrains IDEs (IntelliJ IDEA, PyCharm, WebStorm).

Move to the right sidebar: After installation, the Continue icon appears on the left sidebar. For a better experience, though, you might want to drag it to the right sidebar.

Configure your model: Click the agent selector dropdown, then select your preferred model provider. Continue supports OpenAI, Anthropic, Ollama for local models, and many others.

Optional: Sign in to Continue Hub: For managed agent workflows and team collaboration features, sign in to Continue Hub (more details below). This step is optional; Continue works fully offline with local models if you prefer.

Model Context Protocol (MCP) support in Continue

As a modern AI coding tool, Continue supports the Model Context Protocol (MCP). MCP enables AI models to interact with external tools, databases, and APIs in real time, dramatically expanding what's possible beyond simple code generation.

Continue was actually the first client to offer full support for all MCP features, including Resources (data access), Prompts (templated interactions), Tools (function calling), and Sampling (AI-powered responses).

Configuring MCP servers in Continue

Continue offers two primary ways to configure MCP servers:

Workspace-based MCP configuration (.continue/mcpServers/)

Ideal for project-specific toolsets. Create this directory at the root of your workspace and add an individual YAML file for each MCP server. These tools are active only within that workspace.

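For example, the Snyk server configured later in this guide lives in its own file, .continue/mcpServers/snyk-mcp.yaml, and each additional server gets a sibling YAML file in the same directory.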
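A minimal Python sketch of preparing that layout by hand: it creates the workspace directory if needed and lists the per-server config files in it. The helper names are hypothetical, and Continue performs its own discovery of these files, so treat this only as a convenience for scaffolding and checking a project.

```python
from pathlib import Path

# Hypothetical scaffolding helpers; Continue itself discovers and loads these files.
# JSON is included because configs copied from other MCP clients are also accepted.
SERVER_SUFFIXES = {".yaml", ".yml", ".json"}

def ensure_mcp_dir(workspace: Path) -> Path:
    """Create <workspace>/.continue/mcpServers/ if it does not exist yet and return its path."""
    mcp_dir = workspace / ".continue" / "mcpServers"
    mcp_dir.mkdir(parents=True, exist_ok=True)
    return mcp_dir

def list_server_files(workspace: Path) -> list[str]:
    """Return the sorted names of per-server config files, or [] if the directory is missing."""
    mcp_dir = workspace / ".continue" / "mcpServers"
    if not mcp_dir.is_dir():
        return []
    return sorted(p.name for p in mcp_dir.iterdir() if p.is_file() and p.suffix in SERVER_SUFFIXES)

if __name__ == "__main__":
    # Read-only check of the current project: print each server file found.
    for name in list_server_files(Path.cwd()):
        print(name)
```

Calling ensure_mcp_dir is idempotent, so it is safe to run from a setup script every time.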

Global MCP configuration (~/.continue/config.yaml)

For tools you want available across all projects, define them in your main Continue configuration file under the mcpServers block. This is perfect for security scanning tools like Snyk that you want available everywhere.

MCP transport types

Continue supports multiple transport mechanisms:

STDIO (Standard Input/Output): Ideal for local processes and command-line tools. Most MCP servers use this transport type.

Streamable HTTP: For remote/hosted MCP servers with streaming capabilities.

SSE (Server-Sent Events): For real-time streaming communication with remote servers.

Note that MCP as a protocol is deprecating SSE in favor of Streamable HTTP.
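Continue manages the transport for you, but a small sketch makes STDIO concrete. Under the MCP specification, a STDIO client launches the server as a subprocess and exchanges JSON-RPC 2.0 messages over stdin/stdout, one message per line. The client name, version, and the protocol revision string below are placeholder assumptions, not values Continue sends.

```python
import json

def encode_message(message: dict) -> bytes:
    """Frame one JSON-RPC message for the STDIO transport: compact JSON plus a trailing newline.

    json.dumps escapes newlines inside strings, so the encoded line never contains a raw newline.
    """
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")

def decode_line(line: bytes) -> dict:
    """Parse one newline-terminated message read from the server's stdout."""
    return json.loads(line.decode("utf-8"))

def initialize_request(request_id: int) -> dict:
    """First request a client sends; clientInfo and protocolVersion are assumed placeholders."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }

def list_tools_request(request_id: int) -> dict:
    """Ask the server which tools it exposes (sent after the initialize handshake)."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
```

Streamable HTTP carries the same JSON-RPC messages, but in HTTP request and response bodies instead of lines on stdin/stdout.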

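The YAML configuration for an MCP server is straightforward, with just a few key properties: a name for the server, the command that launches it, and the arguments to pass (plus optional environment variables).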
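To make those properties concrete, here is a dependency-free Python sketch that renders one server entry as YAML text. The surrounding layout (name, version, schema: v1, then an mcpServers list) is an assumption based on Continue's documented block format, and the example server in the usage line is hypothetical; check the current Continue docs before relying on it.

```python
import json
from typing import Optional

def yaml_quote(value: str) -> str:
    """Quote a scalar as a JSON string, which is also a valid double-quoted YAML scalar."""
    return json.dumps(value)

def render_mcp_block(block_name: str, server_name: str, command: str,
                     args: list[str], env: Optional[dict[str, str]] = None) -> str:
    """Render a single-server MCP block (assumed Continue layout) as YAML text."""
    lines = [
        f"name: {yaml_quote(block_name)}",
        "version: 0.0.1",
        "schema: v1",
        "mcpServers:",
        f"  - name: {yaml_quote(server_name)}",
        f"    command: {yaml_quote(command)}",  # executable launched for the STDIO transport
        "    args:",
    ]
    lines += [f"      - {yaml_quote(arg)}" for arg in args]  # one list item per argument
    if env:
        lines.append("    env:")  # optional environment variables for the server process
        lines += [f"      {key}: {yaml_quote(val)}" for key, val in sorted(env.items())]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    # Hypothetical server, for illustration only.
    print(render_mcp_block("Example MCP", "example", "npx", ["-y", "example-mcp-server"]))
```

Quoting every value sidesteps YAML surprises with arguments that start with a dash or contain colons.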

This format is migration-friendly. If you're coming from another tool like Claude Desktop, Cursor, or Cline, you can copy their JSON MCP config files directly into your .continue/mcpServers/ directory (note the plural "Servers"), and Continue will automatically pick them up.
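For reference, Claude Desktop-style JSON configs (a shape Cursor and Cline commonly use as well) nest servers under an mcpServers object keyed by server name, each with a command, args, and optional env, or a url for remote servers. This sketch summarizes the servers in such a file before you copy it over; the helper name is hypothetical and the sample data in the test is made up.

```python
import json

def summarize_json_config(text: str) -> list[str]:
    """Return one human-readable line per server in a Claude Desktop-style MCP config."""
    servers = json.loads(text).get("mcpServers", {})
    summary = []
    for name, spec in servers.items():
        if "command" in spec:
            # Local STDIO server: the command plus its arguments, as they would be launched.
            launch = " ".join([spec["command"], *spec.get("args", [])])
            summary.append(f"{name}: {launch}")
        else:
            # Remote servers are typically described by a URL instead of a command.
            summary.append(f"{name}: {spec.get('url', '<no command or url>')}")
    return summary
```

Running it on a copied claude_desktop_config.json is a quick sanity check before dropping the file into .continue/mcpServers/.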

Adding Snyk Studio

Snyk's official MCP server, part of Snyk Studio, integrates security scanning capabilities directly into your AI coding workflow. With it, you can trigger vulnerability scans, retrieve security findings, and even request AI-assisted fixes, all without leaving your development environment. The server is built into the Snyk CLI itself, so no separate package is needed.

Prerequisites

Before adding the Snyk MCP server, ensure you have:

1. Snyk CLI installed (v1.1296.2 or later): see the Snyk CLI installation guide.

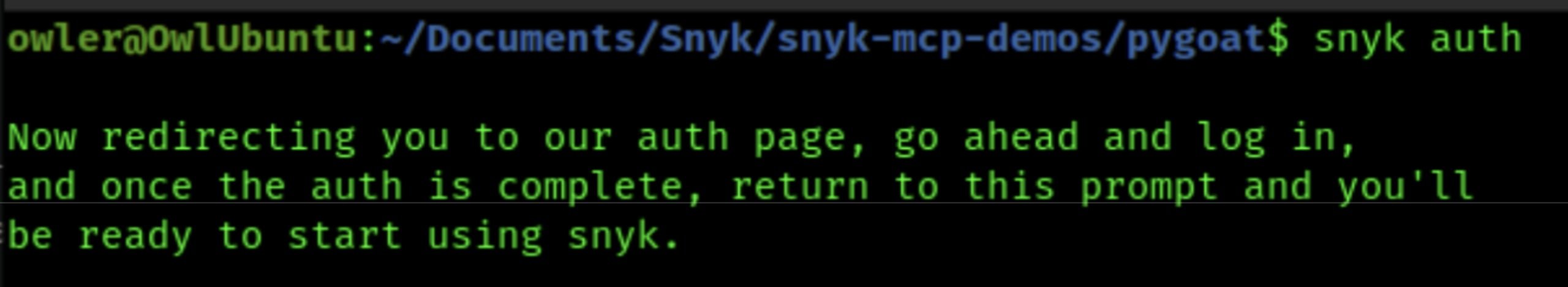

2. Snyk authentication: The MCP server needs your Snyk API token. Run snyk auth in your terminal.

This opens your browser to authenticate and stores your token locally.
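A quick way to confirm the first prerequisite from a script: this sketch parses the output of snyk --version and compares it with the v1.1296.2 minimum stated above. It assumes the version output contains a dotted major.minor.patch number; the function names are hypothetical.

```python
import re
import subprocess
from typing import Optional

MIN_VERSION = (1, 1296, 2)  # minimum Snyk CLI version from the prerequisites

def parse_version(text: str) -> Optional[tuple[int, ...]]:
    """Extract the first major.minor.patch triple from a version string (assumed format)."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    return tuple(int(part) for part in match.groups()) if match else None

def meets_minimum(text: str, minimum: tuple[int, ...] = MIN_VERSION) -> bool:
    """True if the parsed version is at least `minimum` (tuples compare element by element)."""
    version = parse_version(text)
    return version is not None and version >= minimum

def installed_snyk_version() -> str:
    """Return the raw output of snyk --version; raises FileNotFoundError if snyk is not on PATH."""
    result = subprocess.run(["snyk", "--version"], capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

Typical use is meets_minimum(installed_snyk_version()) in a setup script, with FileNotFoundError meaning the CLI still needs to be installed.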

Configuration options

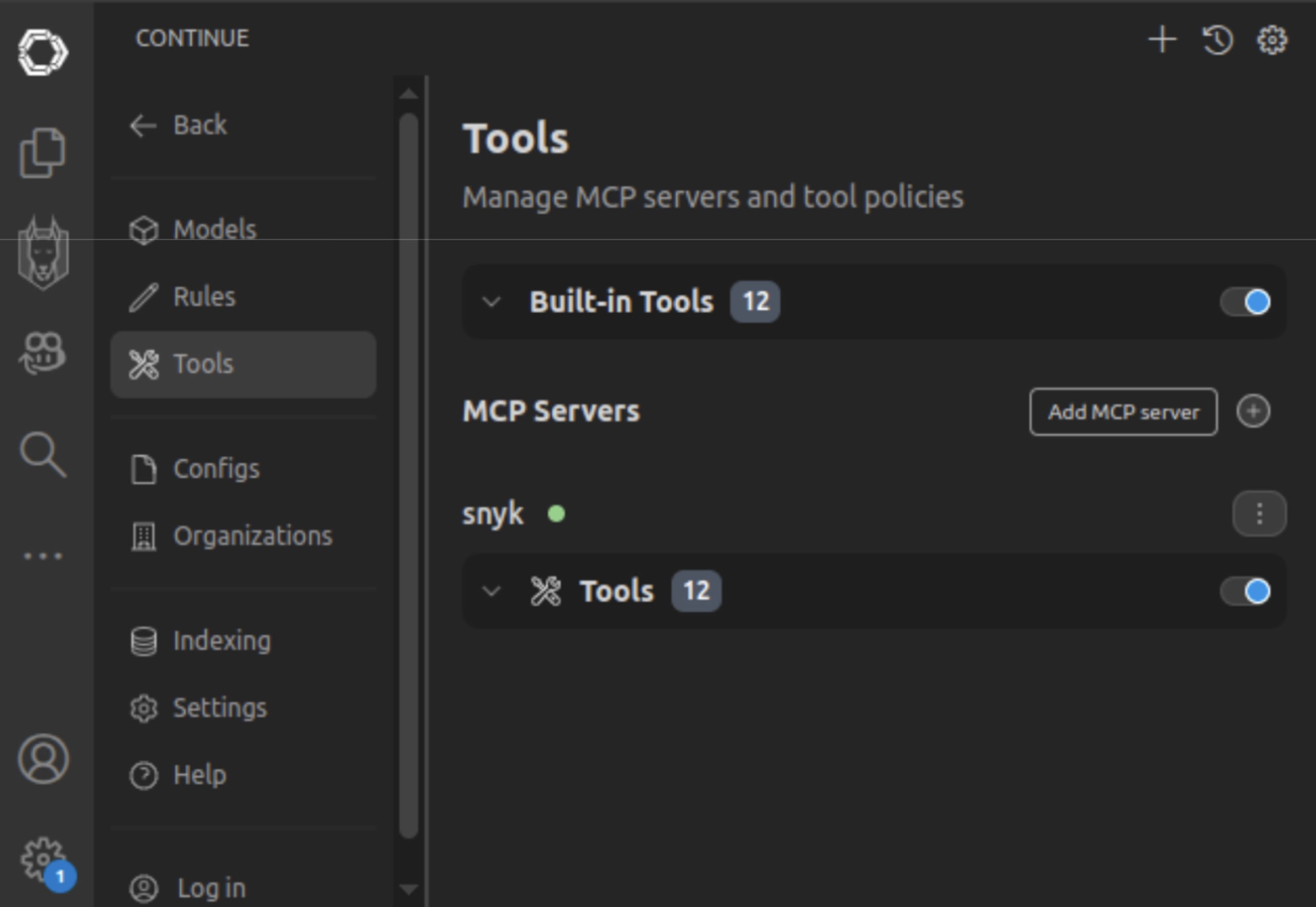

The simplest way to add the Snyk MCP server to the Continue VS Code extension is:

1. Select the Continue icon in your sidebar.

2. Select ‘Configure tools’ at the top of the user-input text box.

3. Click the ‘+’ icon to the right of ‘MCP Servers’ to add a new server.

4. Continue automatically opens a new-mcp-server.yaml file in the correct location for the project.

You can add the Snyk MCP server using either npx (recommended) or direct CLI invocation.

Using npx to automatically stay up to date

Assuming you have created .continue/mcpServers/snyk-mcp.yaml in your workspace, define the Snyk server in that file with npx as its command.

This configuration uses npx to always fetch the latest Snyk CLI version.
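A minimal sketch that writes an npx-based Snyk configuration into the workspace. The Continue block layout and the Snyk arguments (-y snyk@latest mcp -t stdio) are assumptions based on Continue and Snyk documentation; verify both against the current docs and your CLI version.

```python
from pathlib import Path

# Assumed Continue block layout and Snyk MCP arguments; verify against current documentation.
# "-y" lets npx install without prompting; "snyk@latest" resolves the newest published CLI.
SNYK_NPX_YAML = """\
name: Snyk MCP
version: 0.0.1
schema: v1
mcpServers:
  - name: Snyk
    command: npx
    args:
      - "-y"
      - "snyk@latest"
      - "mcp"
      - "-t"
      - "stdio"
"""

def write_snyk_config(workspace: Path) -> Path:
    """Write .continue/mcpServers/snyk-mcp.yaml under `workspace` and return its path."""
    target = workspace / ".continue" / "mcpServers" / "snyk-mcp.yaml"
    target.parent.mkdir(parents=True, exist_ok=True)  # create the directory on first use
    target.write_text(SNYK_NPX_YAML, encoding="utf-8")
    return target
```

Calling write_snyk_config(Path.cwd()) from the project root creates the file, which Continue should then detect automatically.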

Using the locally installed Snyk CLI path directly

If you've installed Snyk globally and want to use your local installation, point the server's command at the absolute path of your snyk binary instead of npx.

You can find /absolute/path/to/snyk by running which snyk on most Linux and macOS systems (or where snyk on Windows).

Older Snyk CLI versions might require the --experimental flag when running the MCP server directly via the CLI; recent versions, such as the latest one that npx fetches, do not need it.
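If you go the local-path route, this sketch resolves the absolute path the same way which snyk does (via Python's shutil.which) and builds the command and arguments to copy into the YAML. The mcp -t stdio arguments and the optional --experimental flag for older CLI versions are assumptions to check against your installed version; the function name is hypothetical.

```python
import os
import shutil
from typing import Callable, Optional

def snyk_mcp_command(experimental: bool = False,
                     which: Callable[[str], Optional[str]] = shutil.which) -> Optional[list[str]]:
    """Return [absolute_snyk_path, "mcp", "-t", "stdio"], or None if snyk is not on PATH."""
    found = which("snyk")
    if found is None:
        return None
    command = [os.path.abspath(found), "mcp", "-t", "stdio"]  # assumed MCP subcommand and args
    if experimental:
        command.append("--experimental")  # assumption: only older CLI versions need this
    return command

if __name__ == "__main__":
    cmd = snyk_mcp_command()
    if cmd is None:
        print("snyk not found on PATH")
    else:
        print("command:", cmd[0])
        print("args:", cmd[1:])
```

The which parameter exists only so the lookup can be swapped out in tests; in normal use the default is fine.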

Activating the Snyk MCP Server in Continue

After creating your configuration file, Continue will automatically detect and load the MCP server. To verify it's working:

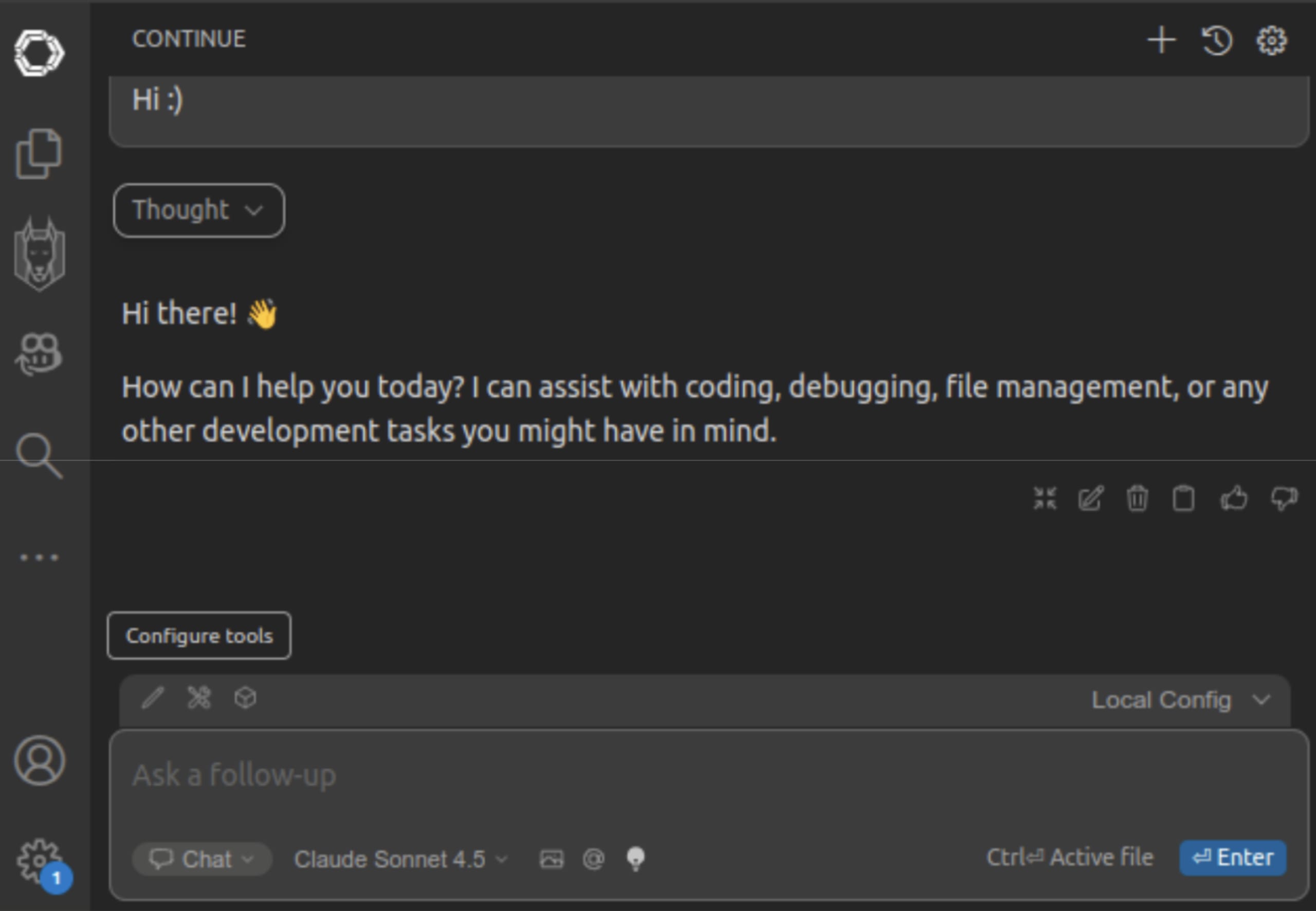

1. Enable Continue’s agent mode: MCP functionality is exclusively available in Agent Mode. Click the agent selector dropdown near the chat input and ensure Agent Mode is activated.

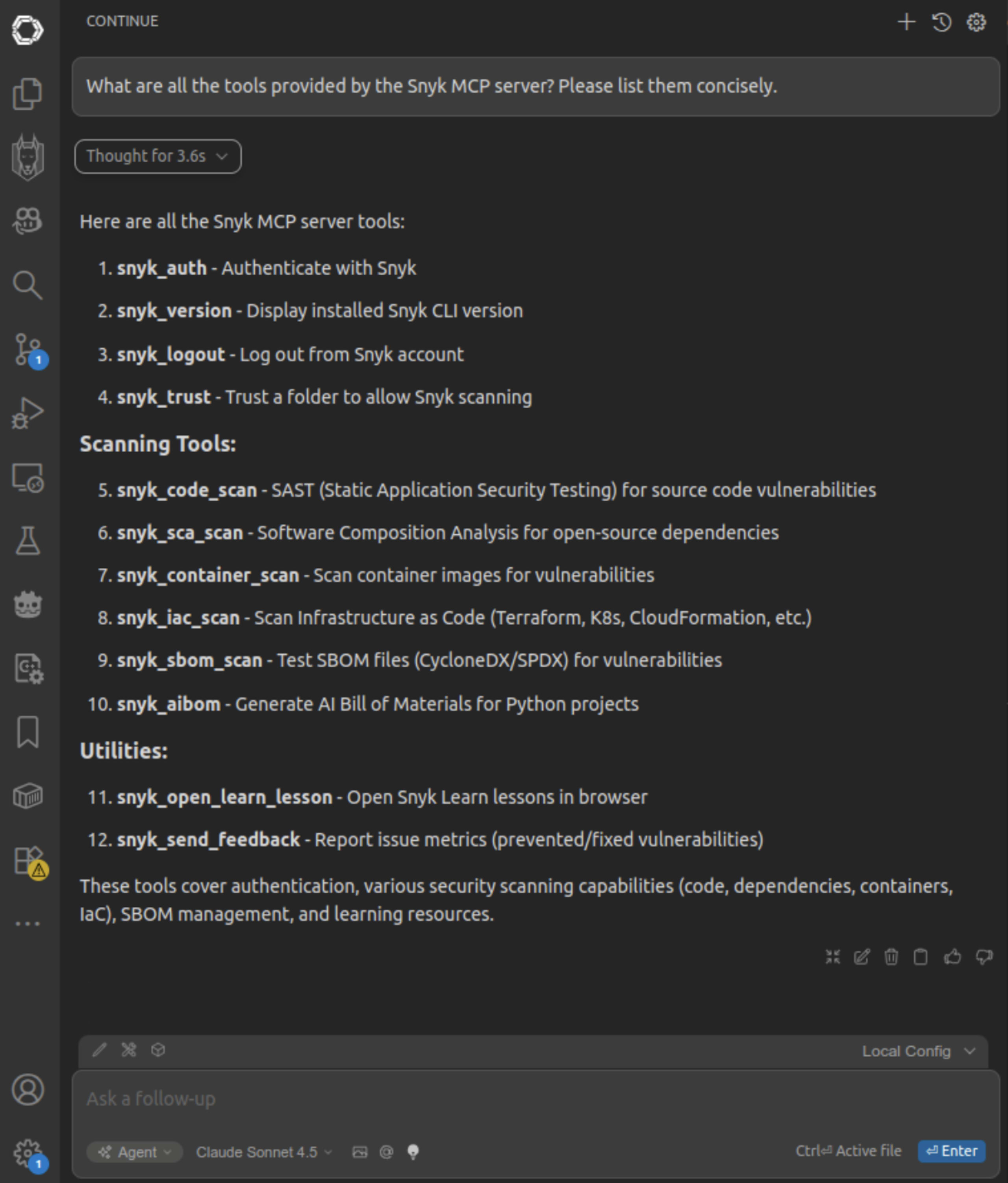

2. Verify MCP tools: Ask your selected LLM which tools it can see.

3. Check authentication: In the chat panel, ask Continue: "Can you check my Snyk authentication status?" The agent will use the Snyk MCP server to verify your connection (via MCP tool calls).

Using Continue to automatically detect and patch vulnerabilities

For this demonstration, we'll use pyGoat, a repository by OWASP Project Leader adeyosemanputra. It is an intentionally vulnerable Django application designed for security education, with well-documented vulnerabilities across different severity levels, which makes it ideal for testing security scanning and remediation workflows.

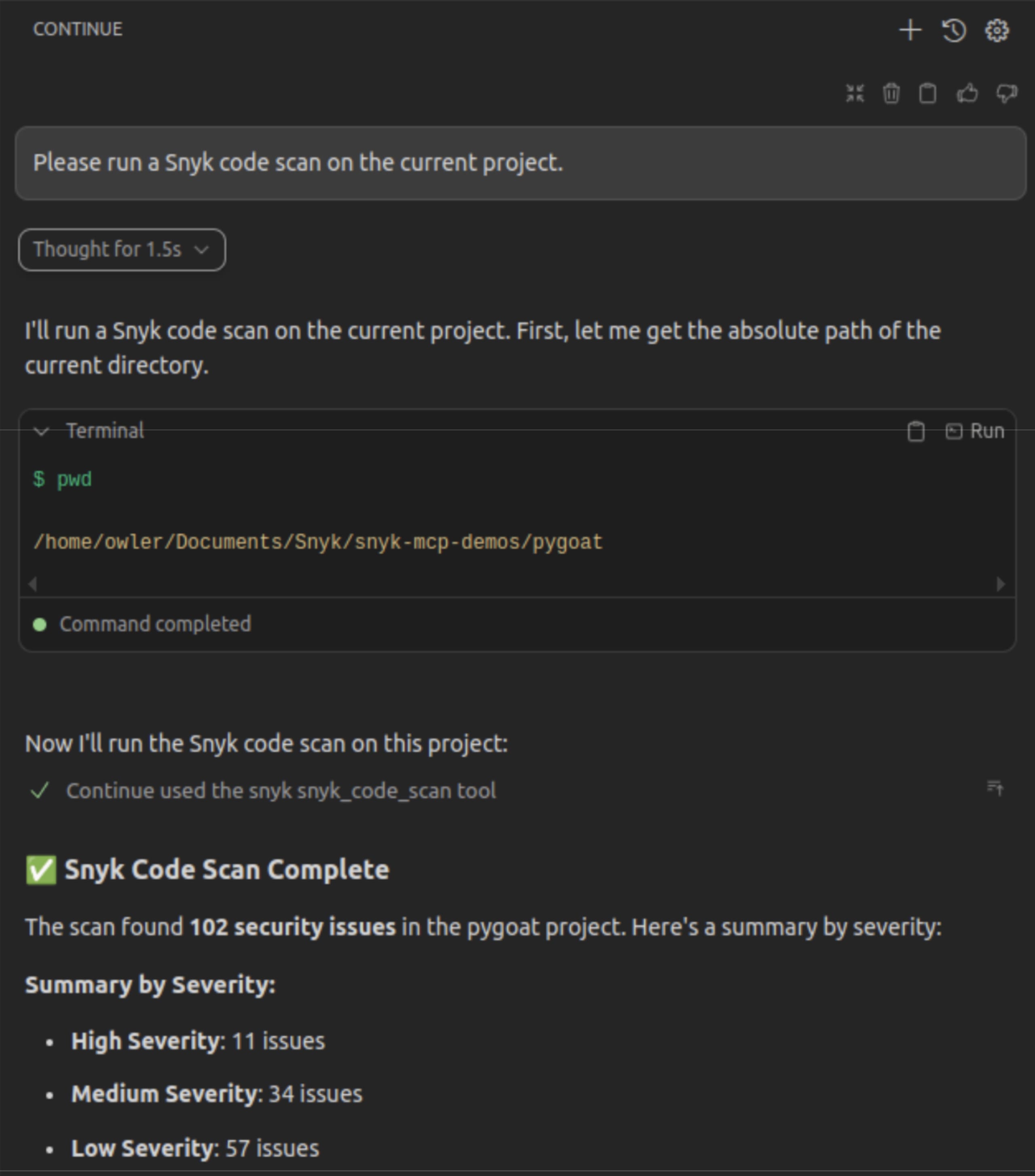

Initial security scan

First, let's see what vulnerabilities Snyk detects in the codebase. Rather than running the CLI ourselves, we ask Continue, in the chat panel with Agent Mode enabled, to use the Snyk MCP server to scan the project.

Continue's agent recognizes that it has access to the Snyk MCP tools and executes the scan.

Continue then provides a helpful summary of the critical findings.
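If you also want the same triage outside the chat, for example in a script, Snyk Code can write its findings as SARIF. This sketch counts results per SARIF level and lists rule, file, and line. It assumes a report produced with snyk code test --sarif-file-output=snyk-code.sarif, and the mapping of Snyk severities onto SARIF levels (high to error, medium to warning, low to note) is an assumption.

```python
from collections import Counter

def iter_findings(report: dict):
    """Yield (rule_id, level, uri, start_line) for every result in a SARIF report."""
    for run in report.get("runs", []):
        for result in run.get("results", []):
            location = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            yield (
                result.get("ruleId", "?"),
                result.get("level", "warning"),  # SARIF's default level when none is given
                location.get("artifactLocation", {}).get("uri", "?"),
                location.get("region", {}).get("startLine", 0),
            )

def summarize(report: dict) -> Counter:
    """Count findings per SARIF level (assumed: error = high, warning = medium, note = low)."""
    return Counter(level for _, level, _, _ in iter_findings(report))
```

Load the report with json.load, call summarize(report) for the counts, and filter iter_findings(report) on level "error" to get the high-severity list.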

Patching a high-severity SQL injection vulnerability

Now let's ask Continue's agent to fix one of these high-priority vulnerabilities and then re-scan to verify the fix. We provide the specific vulnerability details from the scan:

Continue's agent springs into action with a clear plan:

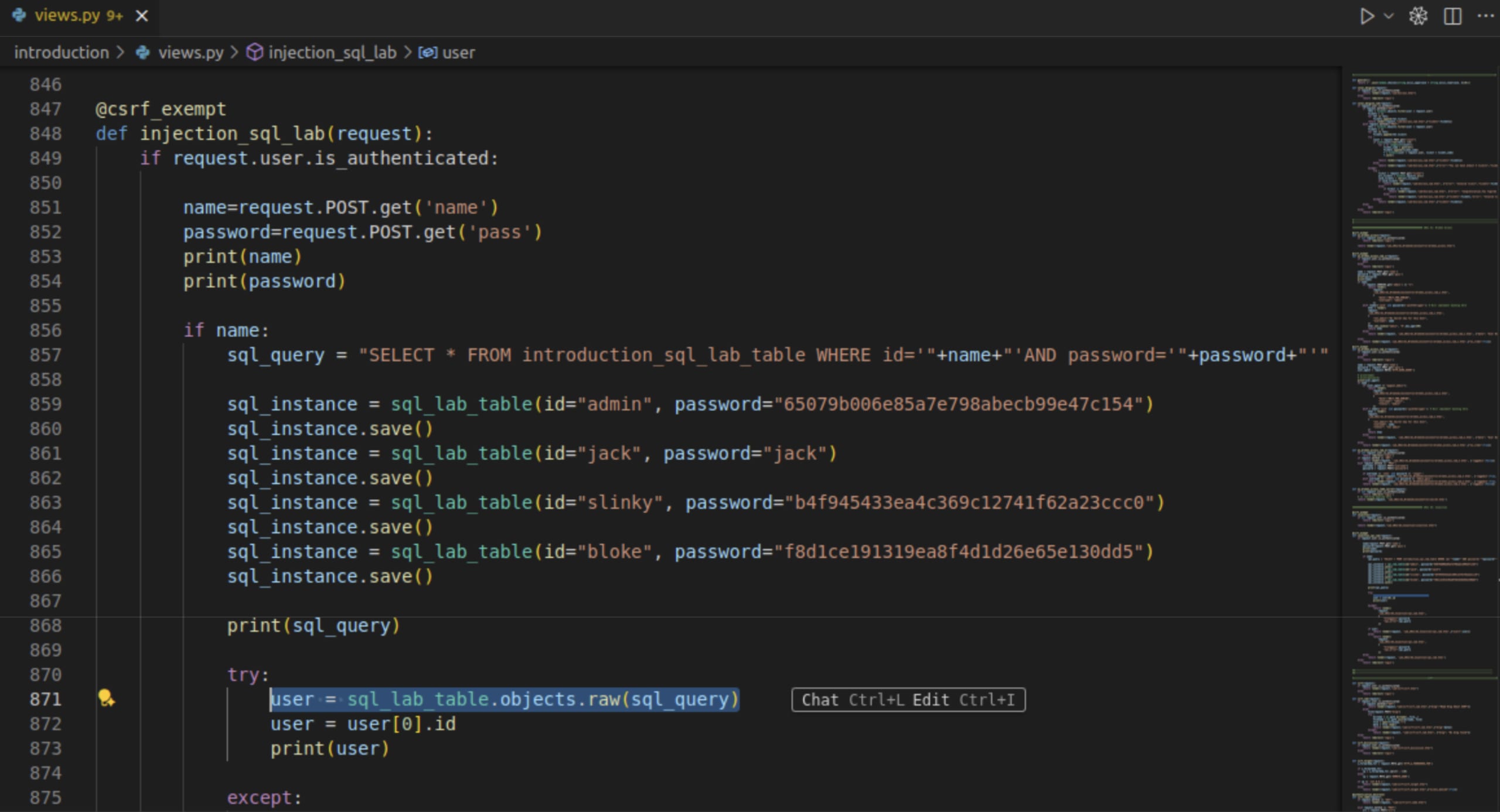

The agent first reads the vulnerable code to understand the context:

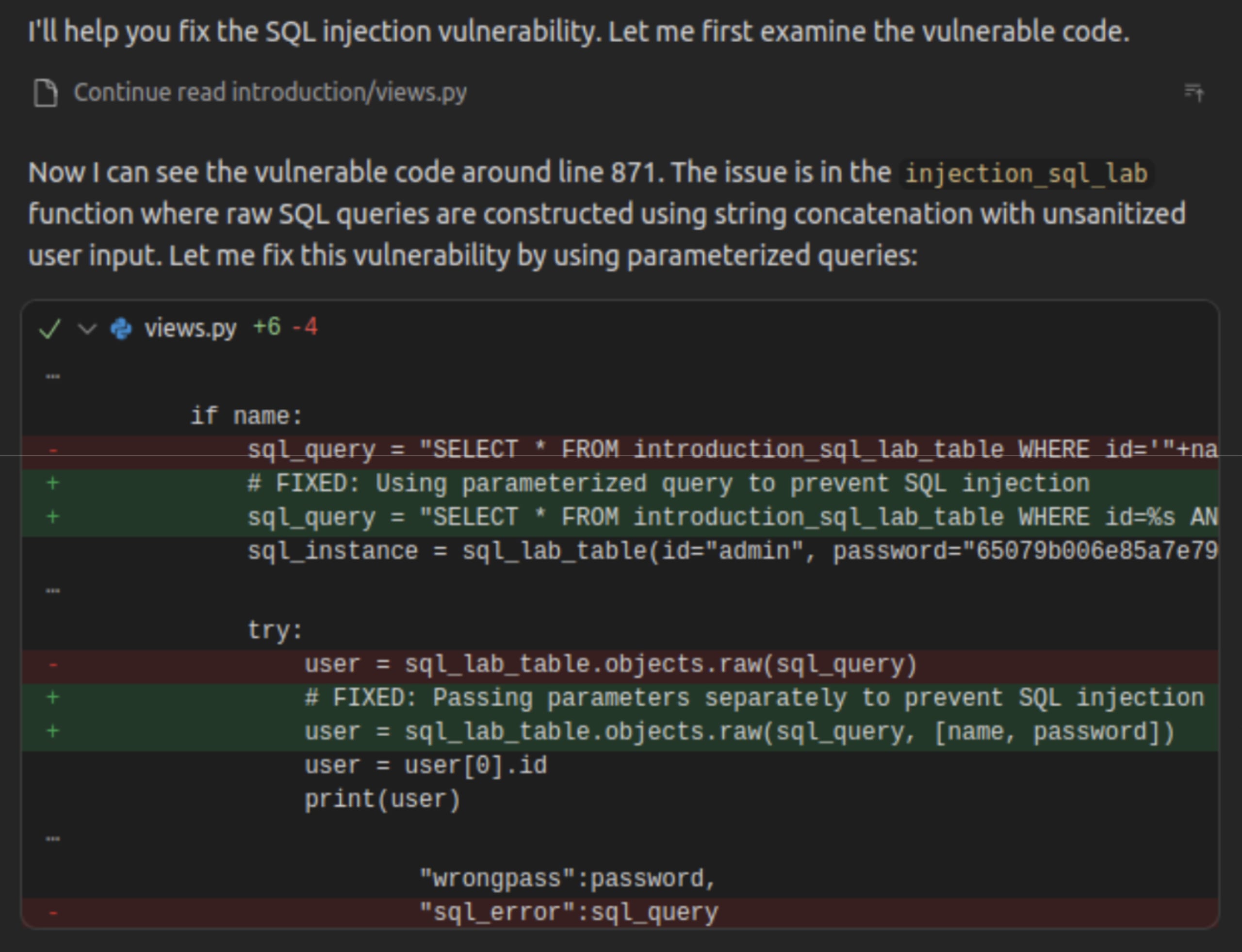

The agent then applies the fix using a parameterized query approach, and Continue explains what it changed.
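The technique behind the patch, shown in isolation: this is not the pyGoat code or the agent's actual diff, just a self-contained sketch using Python's built-in sqlite3 with a hypothetical users table. The vulnerable function builds SQL with an f-string; the fixed one passes values as query parameters.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table for the demo."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "a1"), ("bob", "b2")])
    return conn

def login_vulnerable(conn: sqlite3.Connection, username: str, password: str) -> list:
    # User input is spliced into the SQL text, so a quote in the input can rewrite the query.
    sql = f"SELECT username FROM users WHERE username = '{username}' AND password = '{password}'"
    return conn.execute(sql).fetchall()

def login_safe(conn: sqlite3.Connection, username: str, password: str) -> list:
    # Placeholders send the values separately from the SQL text, so input is always treated as data.
    sql = "SELECT username FROM users WHERE username = ? AND password = ?"
    return conn.execute(sql, (username, password)).fetchall()
```

With the password "' OR '1'='1", the vulnerable query's WHERE clause becomes true for every row, while the parameterized query simply finds no matching password. In Django the same idea applies: pass values as the second argument to cursor.execute() with %s placeholders, or use the ORM, which parameterizes queries for you.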

Verifying the fix

The agent automatically triggers another Snyk scan to verify that the vulnerability is resolved.
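Outside the chat, you could confirm a fix by diffing two SARIF reports, one from before the patch and one from after. This sketch fingerprints findings by rule ID and file, deliberately ignoring line numbers because a fix usually shifts them. That heuristic is an assumption of this sketch: it cannot tell apart two findings of the same rule in one file.

```python
def fingerprints(report: dict) -> set[tuple[str, str]]:
    """Collect (ruleId, file URI) pairs from a SARIF report; line numbers are ignored on purpose."""
    prints = set()
    for run in report.get("runs", []):
        for result in run.get("results", []):
            location = (result.get("locations") or [{}])[0].get("physicalLocation", {})
            uri = location.get("artifactLocation", {}).get("uri", "?")
            prints.add((result.get("ruleId", "?"), uri))
    return prints

def compare_scans(before: dict, after: dict) -> dict[str, list[tuple[str, str]]]:
    """Classify fingerprints as resolved (gone), introduced (new), or remaining (in both scans)."""
    old, new = fingerprints(before), fingerprints(after)
    return {
        "resolved": sorted(old - new),
        "introduced": sorted(new - old),
        "remaining": sorted(old & new),
    }
```

An empty "introduced" list alongside the expected "resolved" entry is a reasonable signal that the patch fixed the issue without adding a new one.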

This concludes our demo of patching a security vulnerability! 🎈

Continue hub: Team collaboration and shared agents

Just to put it on your radar, Continue Hub is the platform's team collaboration layer, offering centralized management for custom AI agents. While Continue's core extension (which we used in this walkthrough) is free and open source, the Hub provides additional value for power users:

Free Solo Tier: For individual developers and open-source projects, the Hub offers three visibility levels: private configurations, team-shared agents, or publicly available assistants that anyone can use.

Hub Agents (cloud-managed):

Web-based management: Configure agents through an intuitive interface, accessible across all your IDE instances

One-click sharing: Create custom agents with your team's coding standards and share them with a simple link

Quick setup: No configuration files to edit; just select your preferences and start coding

Automatic updates: Changes propagate instantly to all team members

Continue CLI: Terminal-native AI agent

Continue CLI (cn) is an open-source, modular command-line coding agent that brings AI assistance directly to your terminal. For developers who mainly work in the terminal, it offers the same agent capabilities and automation without leaving the command line.

Interactive mode

In interactive mode, cn works just like the IDE extension. You can ask it to:

Fix failing tests

Find patterns in your codebase

Execute complex refactors

Write new features

Run terminal commands (with your approval)

Use @ to give file context and / to run slash commands, just like in the IDE. The CLI also comes with a headless mode, making it perfect for Unix-style scripting and even CI/CD automation.

Same configuration as IDE

The CLI uses the same config.yaml as the IDE extensions. Switch between Hub agents and local config using the /config command or the --config flag.

What makes Continue different?

Rather than everyone building closed-source assistants in isolation, Continue encourages developers to experiment, create customizations, and share them with the community. It takes a fundamentally different approach from closed-source alternatives:

Open source and extensible architecture

Continue positions itself as "the antithesis of proprietary 'black box' AI assistant providers", with an open architecture that enables a "culture of contribution" where developers can experiment, create customizations, and share them with the community.

Examine the Source: You can review exactly how your code context gets processed and shared with AI models

Custom Extensions: Build your own slash commands, context sources, templated system messages, and tools

Community Contributions: Leverage and contribute to a growing ecosystem of extensions and configurations

Model flexibility and freedom from vendor lock-in

Continue is model-agnostic, allowing you to choose any model that suits your needs, from OpenAI and Claude to Mixtral or LLaMA, served through OpenRouter, run locally with Ollama, or reached via custom APIs. This removes vendor lock-in and supports flexible, cost-efficient scaling. You can even:

Run 100% privately with local models using ggml or self-hosted infrastructure

Mix and match: Use Claude Sonnet models for complex reasoning tasks while keeping local models for everyday completions

Switch models mid-conversation based on your needs

Cost Structure: Continue is entirely open source and free to use, with no licensing fees. You only incur costs if you opt for paid APIs from external models, but you can also deploy your own models to avoid these entirely.

IDE integration, not replacement

Unlike Cursor (a VS Code fork) or Windsurf (a separate IDE), Continue works as a lightweight extension that fits into existing workflows. This means:

Keep your existing VS Code or JetBrains setup with all familiar settings and extensions

No learning curve for a new IDE

Works with your existing keyboard shortcuts and customizations

Lower barrier to adoption; install and start using in minutes

Next steps with Continue and Snyk

As MCP adoption grows and more security tools develop MCP servers, we can envision AI agents that:

Not only patch vulnerabilities, but also generate comprehensive security tests to prevent regressions

Update documentation automatically to reflect security fixes and secure coding patterns

Create detailed tickets for manual review of complex security issues requiring human judgment

Integrate with CI/CD pipelines to provide security feedback before code merges

Run automated security sweeps via Continue CLI in scheduled jobs or event-triggered workflows
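For scheduled or event-triggered sweeps, the Snyk CLI's documented exit codes (0: no issues, 1: issues found, 2: failure, 3: no supported projects) are enough to build a simple gate, whether the job is driven by Continue CLI or a plain script. This sketch wraps snyk code test with a severity threshold; treating exit code 3 as a failure is a design choice of this sketch, not something Snyk prescribes, and the function names are hypothetical.

```python
import subprocess

EXIT_MEANINGS = {
    0: "no issues at or above the threshold",
    1: "issues found",
    2: "scan failed; re-run with -d for debug logs",
    3: "no supported projects detected",
}

def gate(exit_code: int) -> tuple[bool, str]:
    """Map a Snyk CLI exit code to (passed, reason); only a clean scan passes."""
    reason = EXIT_MEANINGS.get(exit_code, f"unexpected exit code {exit_code}")
    return exit_code == 0, reason

def run_code_scan(path: str = ".", threshold: str = "high") -> int:
    """Run Snyk Code on `path`, reporting only issues at or above `threshold` (low/medium/high)."""
    completed = subprocess.run(["snyk", "code", "test", path, f"--severity-threshold={threshold}"])
    return completed.returncode
```

In a CI job, call gate(run_code_scan()), print the reason, and exit non-zero when the gate did not pass.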

The modular nature of both Continue's architecture and the MCP protocol means these capabilities can evolve independently while remaining interoperable. Security becomes a continuous, automated process rather than a discrete checkpoint.

Getting started

For teams looking to implement similar workflows, here is the setup in brief (TL;DR):

1. Install Continue from the VS Code Marketplace or JetBrains plugin repository.

2. Configure your preferred model (local via Ollama, or cloud via OpenAI/Anthropic/etc.)

3. Optional: Install Continue CLI for terminal and CI/CD integration.

4. Add the Snyk MCP server using the YAML configuration described above, or get it from the workshop-in-a-box.

5. Authenticate with Snyk via snyk auth.

6. Enable Agent Mode in Continue to access MCP tools.

7. Start scanning and fixing vulnerabilities directly in your workflow.

LLMs excel at handling routine, well-understood fixes (such as SQL injection, XSS, and hardcoded secrets), while humans focus on the novel security challenges that require creative problem-solving and architectural thinking. With Continue and Snyk MCP, security shifts left, from being a bottleneck to being a part of the development flow.

Learn more about Snyk and Continue's partnership today.

Innovate with AI. Stay secure with Snyk.

Learn how Snyk Studio helps you secure AI-powered development workflows.