GitHub Copilot code security: XSS in React

October 19, 2023

15 min read

In an evolving era of Artificial Intelligence (AI) and Large Language Models (LLMs), innovative tools like GitHub's Copilot are transforming the landscape of software development. In a prior article, I wrote about the implications of this transformation: the convenience these intelligently automated tools offer and the new challenges they bring to maintaining robust security in our coding practices. Snyk also published a case study of security risks concerning coding with AI.

In this article, we explore the security aspects of GitHub Copilot when it is used in a React codebase and autocompletes code for frontend developers in their React components' JSX files. We examine whether the code proposed by GitHub Copilot adheres to secure coding principles and helps developers write code that avoids Cross-site Scripting (XSS) vulnerabilities, particularly within the context of React development.

For those unfamiliar with the grave implications of XSS: these vulnerabilities allow attackers to inject client-side scripts into web pages viewed by other users.

Developers adopt GitHub Copilot

In the realm of AI's transformative influence on various sectors, software development holds a noteworthy spot. One of the emergent innovations in this sphere is GitHub Copilot, a developer tool powered by OpenAI's Codex that is integrated into IDEs such as VS Code.

GitHub Copilot is designed to function as your AI pair programmer. It is capable of providing coding suggestions, writing entire lines or blocks of code, and is compatible with several languages — a functionality that extends to popular front-end libraries and frameworks, such as React.

As Copilot becomes an increasingly relied-upon tool in our developer toolbox, we must be able to trust the code it suggests not just for correct syntax but also for security: it has to meet the standards we practice and must not put users at risk.

Through JavaScript code examples and an in-depth look at JSX and React-specific security practices, this article aims to not just inform but also enable developers to evaluate and utilize these AI-augmented developer tools responsibly without compromising application security.

The danger zone: Using React’s dangerouslySetInnerHTML prop

React offers an API that enables developers to set HTML directly from a React component: the `dangerouslySetInnerHTML` prop.

The use of this prop, as its name suggests, can be dangerous. It bypasses React's built-in output encoding, which helps protect against cross-site scripting, and lets you manually insert HTML into a component. This feature is useful in certain cases, such as rendering rich text content from trusted sources. For example:

```jsx
function MyComponent() {
  return <div dangerouslySetInnerHTML={{__html: '<h1>Hello World</h1>'}} />;
}
```

While this presents a fast and direct way to handle HTML content, it also exposes your application to the risk of cross-site scripting (XSS) attacks. If user-provided data forms part of the HTML content set by `dangerouslySetInnerHTML`, an attacker can inject an arbitrary script that will be executed, with potentially far-reaching consequences.

```jsx
function MyComponent({userInput}) {
  // This can expose the application to XSS risks if userInput contains a malicious script.
  return <div dangerouslySetInnerHTML={{__html: userInput}} />;
}
```

Therefore, caution must be taken when using `dangerouslySetInnerHTML` in your application.

Does GitHub Copilot suggest secure code?

Can GitHub Copilot help developers with implementing some of these security controls to mitigate against XSS taking place in `dangerouslySetInnerHTML`?

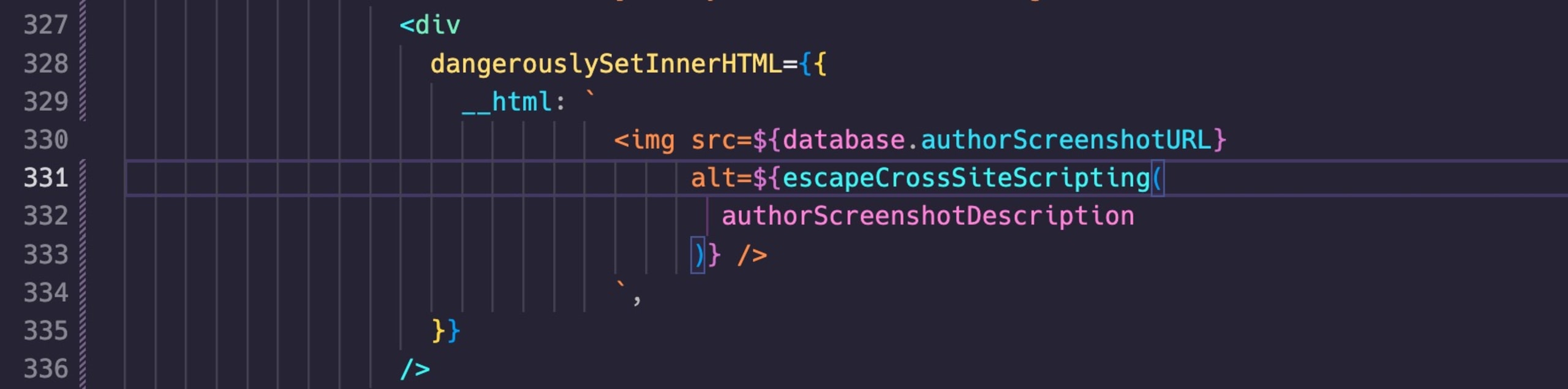

Let’s consider the following React component code that uses the `dangerouslySetInnerHTML` prop:

```jsx
<Row className="justify-content-between">
  <Col md="6">
    <Row className="justify-content-between align-items-center">
      <div
        dangerouslySetInnerHTML={{
          __html: `
            <img src=${database.authorScreenshotURL}
                 alt=${authorScreenshotDescription} />
          `,
        }}
      />
    </Row>
```

The variable `authorScreenshotDescription` that flows into this component is user-controlled and is used to specify the text description for the image.

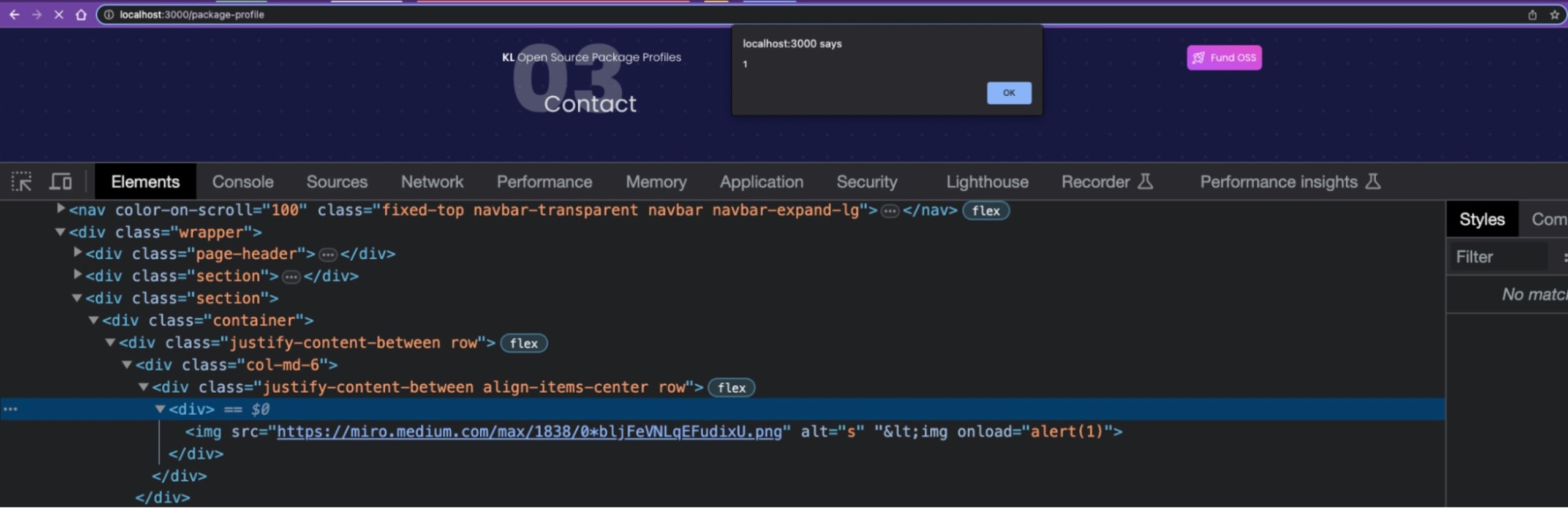

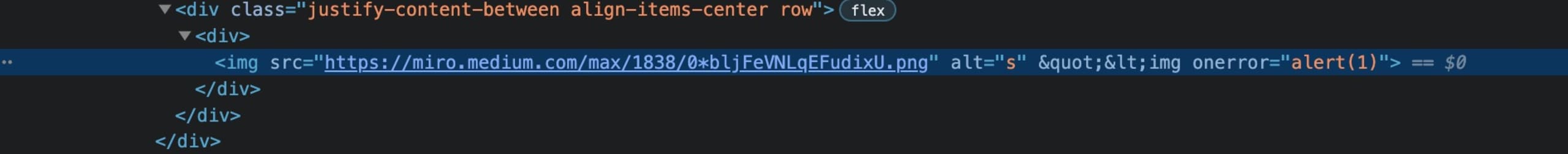

An attacker could exploit this cross-site scripting vulnerability to achieve JavaScript code execution in the browser by setting the value of the `authorScreenshotDescription` variable to the following: `\"<img src=x onError=alert(1)`

Ok, but you’re a responsible developer, and you know that:

You really shouldn’t use `dangerouslySetInnerHTML`, as the name implies — it’s dangerous! But there’s a specific use case that requires you to use it, so:

You know that if you do need to use this React API you’d have to implement secure output encoding to escape dangerous characters like `<` that will allow you to create HTML elements.

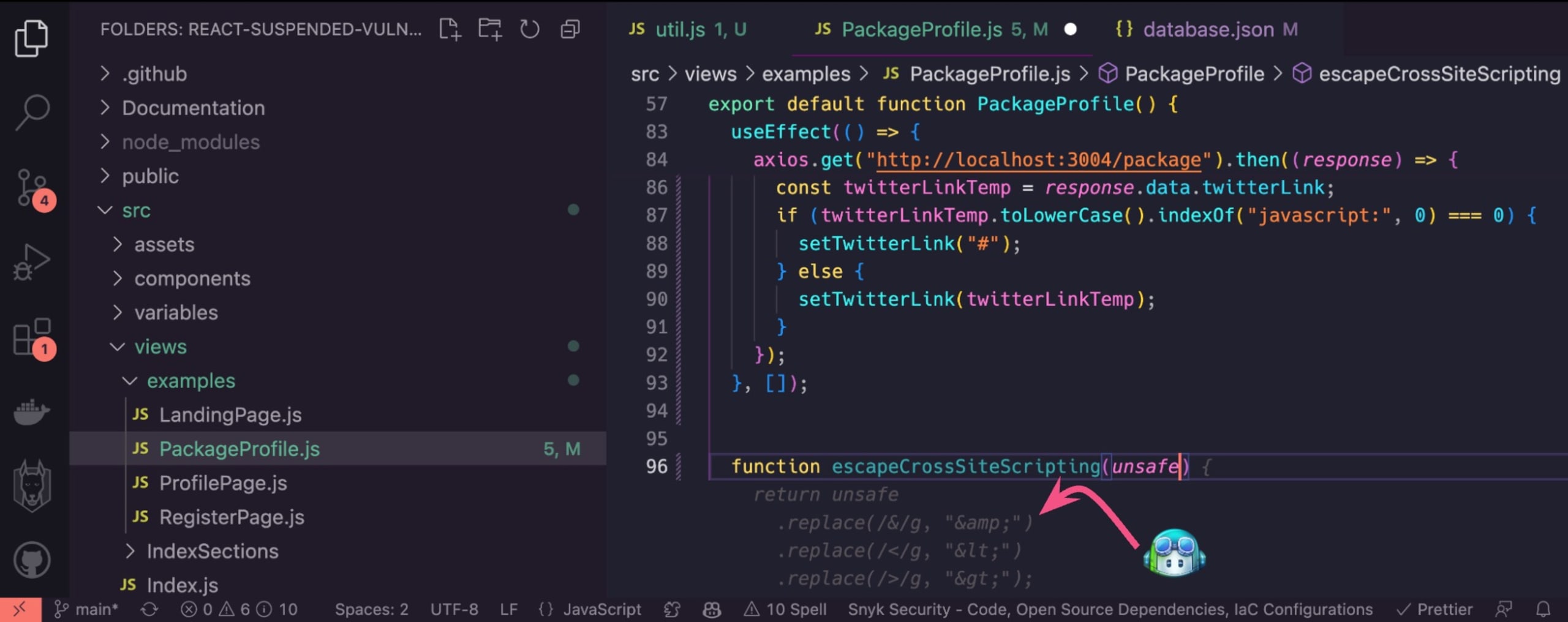

So what do you do next? You begin writing a quick cross-site scripting escaping function for this React component. Of course, GitHub Copilot is there to help you out:

You start typing the function name and its arguments, and as you’re about to begin coding the function’s body, GitHub Copilot auto-suggests the following code. Looks great. All you have to do is press TAB, and you’re good. Of course, let’s not forget to update our use of the `dangerouslySetInnerHTML` API in the component to make use of this security escaping function:

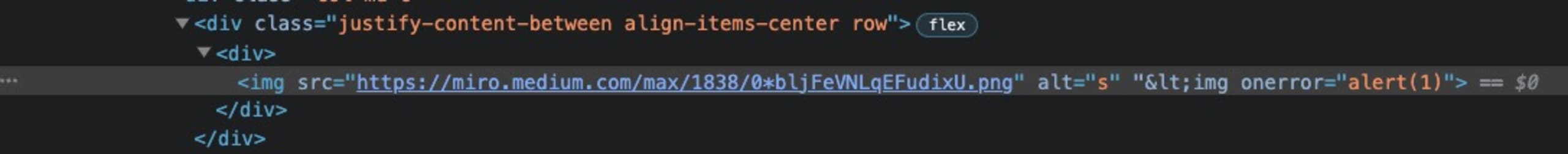

Trying the same XSS exploit that worked for the attacker before, we discover that it fails:

Apparently, the GitHub Copilot suggested code completion for the `escapeCrossSiteScripting` function works wonders and indeed escaped the angle brackets that previously created a new `<img />` HTML element and executed JavaScript code.
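The suggestion itself appears only in a screenshot, so the following is a hypothetical sketch of a helper of that kind, not Copilot's literal output. It assumes the function escapes just the three characters `&`, `<`, and `>`, and shows what that does to the first payload:

```javascript
// Hypothetical reconstruction: escapes only &, <, and >.
// The function body is an assumption, not the exact code Copilot proposed.
function escapeCrossSiteScripting(input) {
  return String(input)
    .replace(/&/g, "&amp;") // ampersand first, so later entities are not double-encoded
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// The first payload from the article: backslash, double quote, then an <img> tag.
const payload = '\\"<img src=x onError=alert(1)';
const escaped = escapeCrossSiteScripting(payload);

console.log(escaped);
// \"&lt;img src=x onError=alert(1)
```

The angle bracket is neutralized, so no new `<img>` element can be created. The quote, the spaces, and the `onError=` text pass through untouched.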

We’re not out of the woods yet!

Attackers are persistent, and it costs virtually nothing for them to automate their attack payloads. As such, they may iterate through thousands of string permutations, often referred to as fuzzing, in order to find one that works. This is where the art of computer hacking unfolds: while developers need to protect against every single point of failure, attackers only need to find one way in, however small.

So, an attacker might try the following payload:

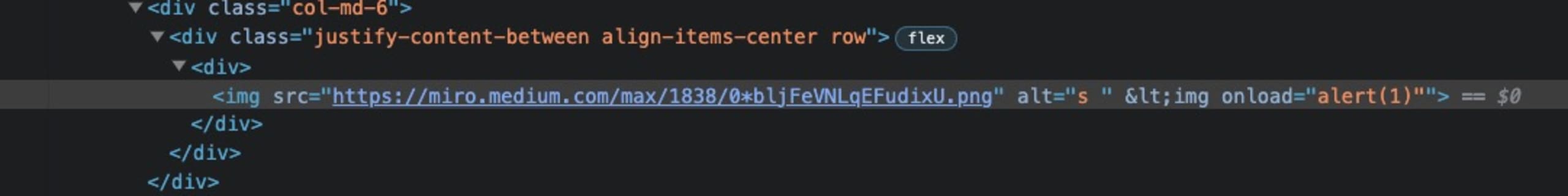

```
s \"<img src=x onLoad=alert(1)
```

In the above, we changed the `onError` attribute handler to an `onLoad` handler. Now, if we load the web page again, we’ll observe that this attack proved successful, and a popup appeared:

As a developer, you’ll wonder how this security vulnerability takes place and how you can avoid it. An experienced React developer might tell you that it is a far superior coding convention in terms of code safety to enclose an attribute’s value with quotes, so let’s do that and apply the change in the following `alt=` attribute value:

```jsx
<div
  dangerouslySetInnerHTML={{
    __html: `
      <img src=${database.authorScreenshotURL}
           alt="${escapeCrossSiteScripting(authorScreenshotDescription)}" />
    `,
  }}
/>
```

Doing so and applying the attack payload that previously worked, `s \"<img src=x onLoad=alert(1)`, we can observe that even though the payload tries to close the `alt` attribute value with its own quote character, it ends up with an extra closing double quote character, which hinders JavaScript execution:

This looks great.

Until…

An attacker figures out a creative way out of that situation: a shorter payload that still abuses the `onLoad` attribute and appends a trailing semicolon and a `//` string to signal that any trailing string is to be treated as a comment.

Using the following payload:

```
s \" onLoad=alert(1); //
```

Welcome to another successful execution of cross-site scripting:
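To see why this payload gets through, it helps to look at the markup string that reaches the browser. The sketch below is illustrative only: the helper is a hypothetical stand-in for the suggested function (assumed to escape only `&`, `<`, and `>`), and the URL is a placeholder.

```javascript
// Hypothetical stand-in for the first suggested helper: escapes only &, <, and >.
const escapeCrossSiteScripting = (s) =>
  String(s).replace(/[&<>]/g, (c) => ({ "&": "&amp;", "<": "&lt;", ">": "&gt;" })[c]);

const url = "https://example.com/screenshot.png"; // placeholder URL (assumption)
const payload = 's \\" onLoad=alert(1); //';

// Same template shape as the component, with the alt value wrapped in double quotes.
const markup = `<img src=${url} alt="${escapeCrossSiteScripting(payload)}" />`;

console.log(markup);
// <img src=https://example.com/screenshot.png alt="s \" onLoad=alert(1); //" />
```

HTML does not treat the backslash as an escape character, so the payload's own double quote closes the `alt` value right after `s \`. The text `onLoad=alert(1);` is then parsed as a separate attribute, and the rest is left over as junk inside the tag.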

But what if we tried a different approach?

What if we didn’t surround the `alt=` attribute with double quotes inside the `dangerouslySetInnerHTML` section and instead were to try and refactor the escaping function altogether?

Let’s try that instead. So, our React component’s JSX code remains as follows:

```jsx
<div
  dangerouslySetInnerHTML={{
    __html: `
      <img src=${database.authorScreenshotURL}
           alt=${escapeHTML(authorScreenshotDescription)} />
    `,
  }}
/>
```

And next, we want to refactor the existing `escapeCrossSiteScripting` into a proper HTML escaping function, `escapeHTML`, that is more secure and encodes more characters than just `<`, `>`, and `&`.

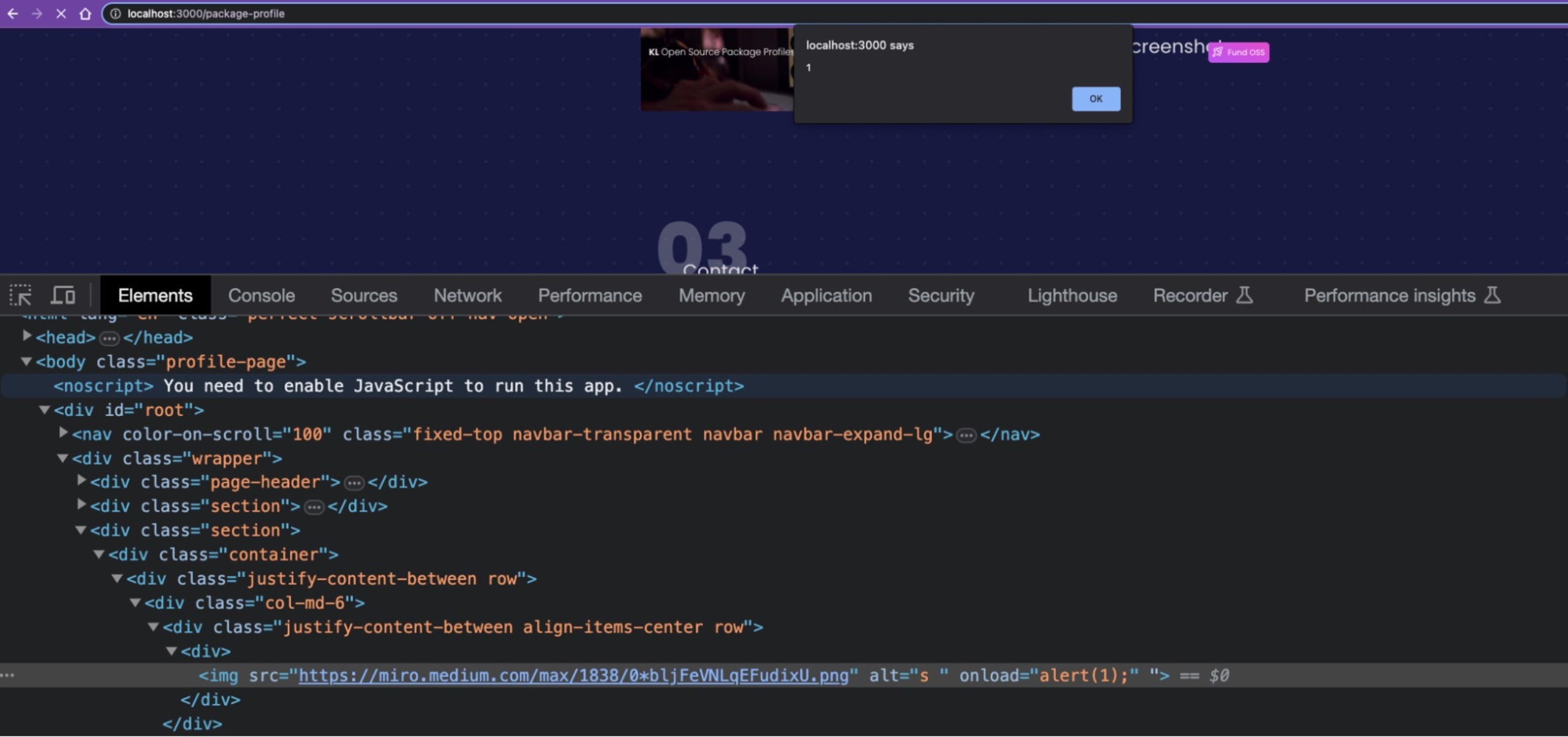

So, we start typing out the code, and of course, GitHub Copilot wakes up and provides the following suggestion:

It actually suggested the entire function body code, and I had simply prompted it to continue completing the code suggestions until we finished this sanitization. It looks like a better output encoding logic that takes into account other dangerous characters like single quotes and double quotes.

If we’d provided the application with our original payload of:

```
s \"<img src=x onError=alert(1)
```

The suggested code from GitHub Copilot would have indeed encoded all the potentially dangerous characters, including the double quote that the attack payload attempted to escape out of:

And yet, we’d be wrong again, because an attack payload as simple as the following would still trigger the cross-site scripting that would allow any JavaScript code to be executed:

```
s onLoad=alert(1)
```

Why is this happening, though?

Highlight on React XSS security issues

As comprehensive as this last attempt was for an HTML escaping function, it misses a fundamental principle of output escaping and general sanitization security logic — context is key.

The data flows into the sensitive API in the context of an HTML attribute value, not in the context of an HTML element. Because the `alt` value is unquoted, a single space character is enough to end the `alt` attribute and begin the next attribute, `onLoad`. That is the center of the problem.
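A minimal sketch of that difference in context follows. The `escapeHTML` helper here is a hypothetical version of the five-character encoder described earlier, not Copilot's exact output, and the URL is a placeholder.

```javascript
// Hypothetical helper: encodes &, <, >, double quotes, and single quotes.
function escapeHTML(input) {
  return String(input)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

const url = "https://example.com/screenshot.png"; // placeholder URL (assumption)

// Unquoted attribute context: the payload contains nothing to encode,
// and the space after "s" ends the alt value.
const unquoted = `<img src=${url} alt=${escapeHTML("s onLoad=alert(1)")} />`;
console.log(unquoted);
// <img src=https://example.com/screenshot.png alt=s onLoad=alert(1) />

// Quoted attribute context plus quote encoding: the payload cannot close alt="...".
const quoted = `<img src="${escapeHTML(url)}" alt="${escapeHTML('s \\" onLoad=alert(1); //')}" />`;
console.log(quoted);
// <img src="https://example.com/screenshot.png" alt="s \&quot; onLoad=alert(1); //" />
```

Under these assumptions, neither measure is enough on its own. Quoting the attribute fails when quotes are not encoded, and encoding quotes fails when the attribute is unquoted. Hand-rolled escaping of this kind is easy to get wrong, so treat it as an illustration of the principle, not a recommended defense.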

Protecting from cross-site scripting in React applications

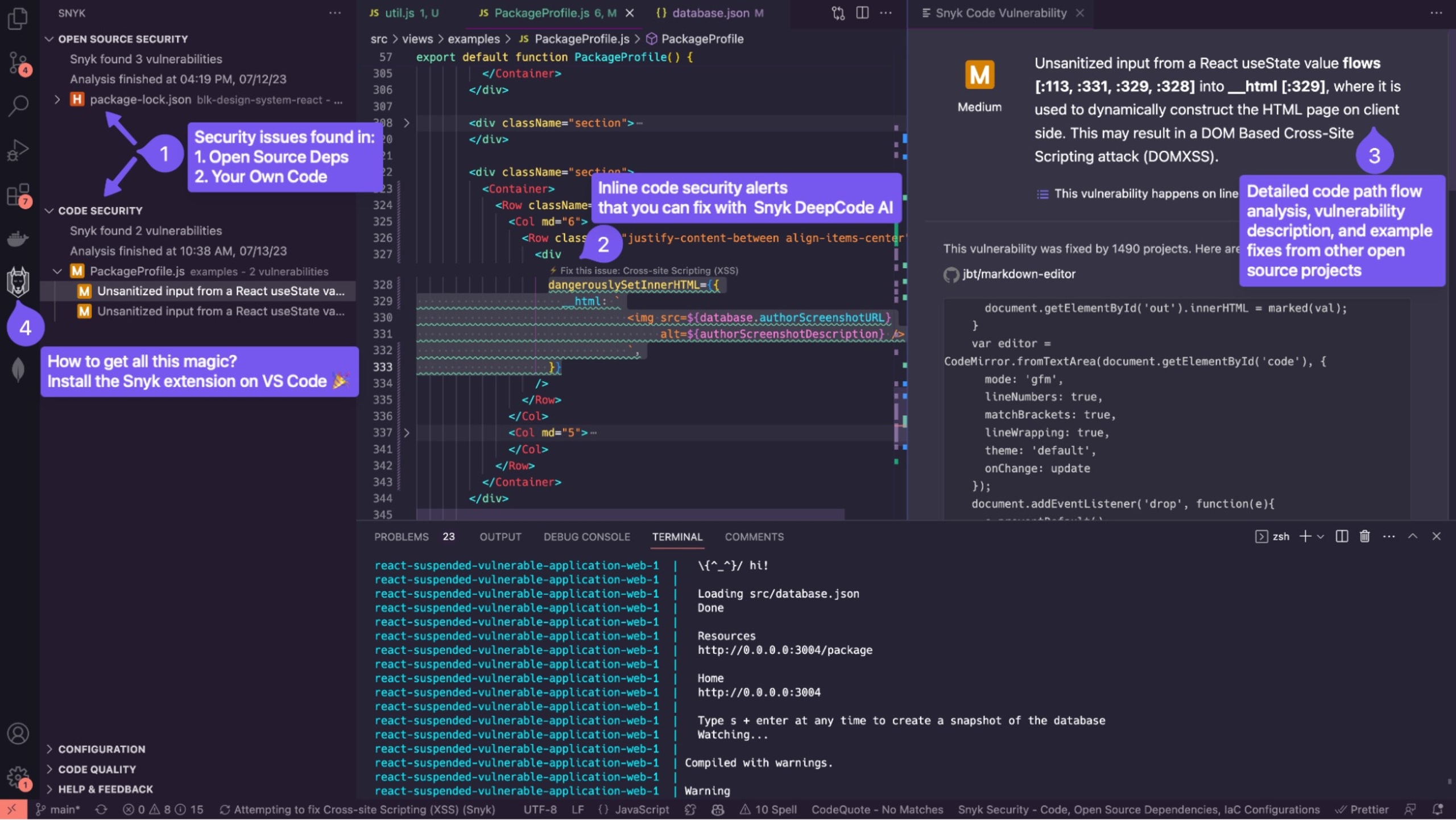

As much as tools like GitHub Copilot are revolutionizing software development, it is paramount to remember that these AI models may sometimes suggest insecure code. Therefore, having robust safety nets is essential to protect your applications. This is precisely where developer security tools like Snyk step in.

Snyk, a highly-regarded name in the world of developer-first security tools, offers a handy IDE extension. As you write your React code, Snyk can spot potential security vulnerabilities, such as those posed by unsafe use of `dangerouslySetInnerHTML` that Copilot might not catch. This real-time safeguard can be instrumental in helping you write secure React applications and prevent cross-site scripting attacks.

One of the key features that sets Snyk apart is DeepCode AI. DeepCode utilizes AI-powered findings to scan your code as you write it, identifying security, performance, and logic issues in the code. But it doesn’t just stop at identifying potential problems. DeepCode AI also offers AI Fix, which provides automatically generated fixes for identified issues, making it even easier for you to code securely and efficiently.

Snyk is a free tool designed to assist developers in making safer code from the convenience of their IDE. As you navigate the world of AI-powered coding assistance with tools like GitHub Copilot, complementary protection from tools like Snyk can be an invaluable asset.

On top of using Snyk, the following web application security practices should be followed. One of these security controls relates to performing secure output encoding.

Mitigating such security risks in frontend web applications involves diligent sanitizing of user input that flows into sensitive APIs such as `dangerouslySetInnerHTML`. User inputs should always be treated as untrusted; therefore, any HTML content coming from the user needs to go through a robust HTML sanitizing process. For this purpose, several third-party libraries, such as DOMPurify, can be leveraged:

```jsx
import DOMPurify from "dompurify";

function MyComponent({userInput}) {
  // Sanitize the untrusted HTML before handing it to React.
  const cleanInput = DOMPurify.sanitize(userInput);
  return <div dangerouslySetInnerHTML={{__html: cleanInput}} />;
}
```

Also, if you're simply looking to render text content, consider alternatives to `dangerouslySetInnerHTML`, such as React's built-in text rendering with curly braces `{}`, which escapes the content for you.

Closing

In conclusion, while AI suggestions can accelerate your coding process, human vigilance and robust security tools remain integral to creating secure applications.

Also, keep in mind that even though `dangerouslySetInnerHTML` provides a way to handle HTML in React, it's critical to understand its potential security implications. Using it cautiously and sanitizing the data that flows into it can help keep your React applications secure from XSS vulnerabilities.

I highly recommend that you give Snyk a try and see how its real-time vulnerability assessments and auto-fixes can enhance the security of your React applications and, moreover, your productivity as an engineer who appreciates building secure software. As the adage goes, prevention is better than a cure, especially when it comes to secure coding.
