Timing out synchronous functions with regex

April 6, 2023

How hard can it be to support custom container image tags? Turns out… quite! I know this because my team has been busy at work on our new custom base image support for Snyk Container, and we were tasked with the following problem: given a tag, parse its parts so that it can be compared to other similar tags. It was a fun problem to solve, and we'd love to share how we got to our final solution!

Regular expressions to the regex-scue?

Our idea was to use regex, specifically the named grouping feature. For example, if we want to match this:

We could use this regex (apologies in advance to anyone that has the occasional regex nightmare):
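As a purely illustrative sketch of the named-group idea, assume a hypothetical tag scheme such as `1.2.3-alpine`. The pattern, the group names (`major`, `minor`, `patch`, `flavor`), and the helpers `parseTag` and `compareTags` are assumptions made for this example, not the ones used in Snyk Container:

```javascript
// Hypothetical tag scheme: <major>.<minor>.<patch>[-<flavor>], e.g. "1.2.3-alpine".
const tagPattern =
  /^(?<major>\d+)\.(?<minor>\d+)\.(?<patch>\d+)(?:-(?<flavor>[a-z0-9.]+))?$/;

// Parse a tag into comparable parts, or return null if it does not match.
function parseTag(tag) {
  const match = tagPattern.exec(tag);
  if (match === null) return null;
  const { major, minor, patch, flavor } = match.groups;
  return {
    major: Number(major),
    minor: Number(minor),
    patch: Number(patch),
    flavor: flavor ?? null, // an unmatched optional group is undefined
  };
}

// Order two parsed tags by version: negative if a < b, positive if a > b, 0 if equal.
function compareTags(a, b) {
  return a.major - b.major || a.minor - b.minor || a.patch - b.patch;
}

console.log(parseTag('1.2.3-alpine'));
console.log(compareTags(parseTag('1.2.3'), parseTag('1.10.0')) < 0);
```

Named groups keep the comparison code readable: each part of the tag is addressed by name rather than by its position in the pattern.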

At this point, some red flags 🚩 may have gone up for you. We all know regexes can be dangerous, and letting the user decide both the input string and the regex is just asking to get attacked!

A user could easily create a base image called:

And give us the string:

This would have caused our service to completely stop processing any other requests! No way any AppSec team would ever let someone do this.
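To make the risk concrete, here is a small sketch of catastrophic backtracking using the textbook pattern `^(a+)+$`. The pattern, the input, and the `timeMatch` helper are assumptions chosen for illustration, not the strings from the scenario above. The input sizes are kept small on purpose, because each extra character roughly doubles the work for a backtracking engine:

```javascript
// Textbook ReDoS pattern: nested quantifiers force exponential backtracking
// when the input almost matches but fails at the very end.
const evilPattern = /^(a+)+$/;

// Time a single failed match against n 'a' characters followed by '!'.
function timeMatch(n) {
  const input = 'a'.repeat(n) + '!';
  const start = process.hrtime.bigint();
  const matched = evilPattern.test(input);
  const elapsedMs = Number(process.hrtime.bigint() - start) / 1e6;
  return { n, matched, elapsedMs };
}

// Elapsed time should grow sharply as n increases.
for (const n of [8, 12, 16, 20]) {
  console.log(timeMatch(n));
}
```

While such a match runs, the event loop is blocked, so no other request on that process can be served.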

Does that mean we need to go back to the drawing board? Thankfully not! There happens to be a library (a regex parsing engine) specifically designed to prevent such attacks. The library is RE2, developed by Google. It’s important to note that some regex features, such as backreferences and lookaround assertions, aren’t supported, but we wouldn’t have used them anyway.

Now the fun begins!

We would rather not have our only safeguard be an external dependency, prone to bugs out of our control, and possible software supply chain attacks. Due to this, we wanted a way to time out regexes that take too long.

Since Node runs JavaScript on a single thread, there is no easy way to interrupt synchronous code once it has started: the code that would cancel it cannot run at the same time. Here’s the solution we came up with:

Threads 🧵!

This is probably the first thing that popped into your head — it was ours as well. In addition to being able to kill a thread, we get the added advantage of not blocking the main event loop!

Alright, let’s write some code:

Main thread

Worker script
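A minimal sketch of the threaded approach might look like the following. It assumes the built-in `RegExp` instead of RE2, inlines the worker script as a string (via the `eval: true` option of `Worker`) so that everything fits in one file, and uses a hypothetical helper name, `matchInWorker`. It is not the code from our service:

```javascript
const { Worker } = require('node:worker_threads');

// Worker script, inlined as a string for this sketch.
// It receives { pattern, flags, input } and posts the match result back.
const workerSource = `
  const { parentPort, workerData } = require('node:worker_threads');
  const { pattern, flags, input } = workerData;
  const match = new RegExp(pattern, flags).exec(input);
  parentPort.postMessage(
    match ? { matched: match[0], groups: { ...match.groups } } : null
  );
`;

// Main thread: run the match in a worker and terminate it if it takes too long.
function matchInWorker(pattern, flags, input, timeoutMs) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, {
      eval: true,
      workerData: { pattern, flags, input }, // plain strings are cloneable
    });
    const timer = setTimeout(() => {
      worker.terminate(); // stop the runaway thread
      reject(new Error(`regex timed out after ${timeoutMs} ms`));
    }, timeoutMs);
    worker.once('message', (result) => {
      clearTimeout(timer);
      resolve(result);
    });
    worker.once('error', (err) => {
      clearTimeout(timer);
      reject(err);
    });
  });
}

matchInWorker('^(?<major>\\d+)\\.(?<minor>\\d+)', '', '1.2.3', 1000)
  .then((result) => console.log(result))
  .catch((err) => console.error(err.message));
```

Note that the caller has to await the result, so every function on the call path becomes async.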

Pretty simple, right? How about I make sure the code runs…

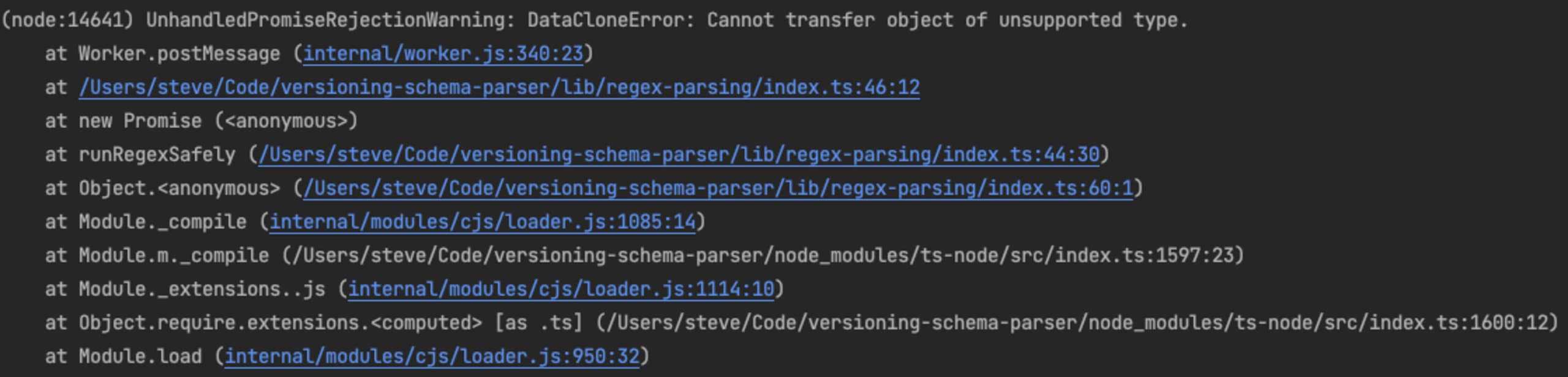

Hmmm, I’ve never seen this error before. Let’s see what MDN says:

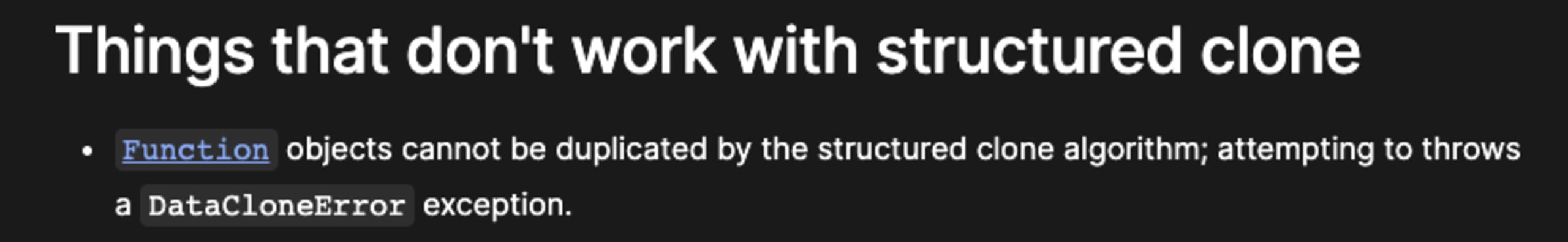

And: may not contain native (C++ backed) objects

Turns out objects aren’t passed by reference, but cloned. And since RE2 is a function (class), which is just a binding to a C++ backed object, we can’t even pass it as an argument.
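The cloning behavior can be reproduced without a worker. The sketch below assumes Node 17 or later, where the global `structuredClone` is available; it applies the same structured clone algorithm that is used when messages are passed between threads. The `tryClone` helper and the `FakeEngine` class are hypothetical stand-ins for illustration:

```javascript
// Attempt to clone a value and report whether the structured clone succeeded.
function tryClone(value) {
  try {
    return { ok: true, value: structuredClone(value) };
  } catch (err) {
    return { ok: false, errorName: err.name };
  }
}

// Hypothetical stand-in for a class exported by a native binding.
class FakeEngine {}

// RegExp objects are cloneable, so they survive the trip.
console.log(tryClone(/a+/g));

// Functions (and therefore classes) are not cloneable.
console.log(tryClone(FakeEngine));
console.log(tryClone(() => 'hello'));
```

Plain data (strings, numbers, arrays, plain objects) clones fine, which is why passing the pattern as a string works.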

We could just create it inside the thread, and pass the regex as a string. This is another instance where a red flag 🚩 appeared. There might be a non-negligible effect on performance if we need to reconstruct the RE2 object for every match call.

Let’s not speculate, we can actually test it out. We used benchmark.js.

Benchmarking performance

⚠️ It’s not easy to run microbenchmarks like these. V8 is very smart with optimizations, so take these tests with a grain of salt. Here’s a great YouTube video about the topic of microbenchmarking.

As a baseline, RE2.match can run 500k times/sec.

Since RegExp objects are cloneable, we can send one to the thread as is, instead of RE2, and see what the performance is. It turns out that matching with a RegExp inside a worker thread (awaiting the result) runs 50k times/sec. A 90% decrease! 🤩

Creating the RE2 object in the thread results in an even worse 17k times/sec. A 97% performance penalty. 😭

Maybe threads aren’t the most optimal solution, and thankfully, there’s another way!

The vm module

Node has a module called vm that allows you to run scripts in a separate context. The advantage of this is that it comes with a built in timeout option.

Here’s some example code:
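A minimal sketch of this approach, assuming the built-in `RegExp` as a stand-in for RE2 and a hypothetical helper name, `matchWithTimeout`:

```javascript
const vm = require('node:vm');

// Run regex.exec(input) inside a vm context, aborting after timeoutMs.
function matchWithTimeout(regex, input, timeoutMs) {
  // Objects placed on the context are shared by reference, not cloned.
  const context = vm.createContext({ regex, input, result: null });
  const script = new vm.Script('result = regex.exec(input);');
  script.runInContext(context, { timeout: timeoutMs });
  return context.result;
}

// A normal match returns quickly.
console.log(matchWithTimeout(/^(?<major>\d+)\.(?<minor>\d+)/, '1.2.3', 100).groups);

// A catastrophic pattern is interrupted instead of blocking forever.
try {
  matchWithTimeout(/^(a+)+$/, 'a'.repeat(40) + '!', 100);
} catch (err) {
  // Node reports the timeout with the code ERR_SCRIPT_EXECUTION_TIMEOUT.
  console.log(err.code);
}
```

Unlike the worker version, the call stays synchronous, so callers do not need to change their signatures.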

We don’t need to create the script and context every time we want to run a match, so we can just create a closure and return it.

Final code
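The closure idea can be sketched as follows. This is an approximation under stated assumptions rather than our production code: it uses the built-in `RegExp` in place of RE2, and `createTimedMatcher` is a hypothetical name:

```javascript
const vm = require('node:vm');

// Build the context and script once, then return a function that reuses them.
function createTimedMatcher(regex, timeoutMs) {
  const context = vm.createContext({ regex, input: '', result: null });
  const script = new vm.Script('result = regex.exec(input);');

  return function match(input) {
    context.input = input; // only the input changes between calls
    script.runInContext(context, { timeout: timeoutMs });
    return context.result;
  };
}

const matchTag = createTimedMatcher(/^(?<major>\d+)\.(?<minor>\d+)\.(?<patch>\d+)$/, 100);
console.log(matchTag('1.2.3').groups);
console.log(matchTag('not-a-version'));
```

Each call now only updates one property and runs a precompiled script, which avoids paying the setup cost per match.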

Let's benchmark again

Let’s run that in the benchmark and see the performance. Running the following inside the vm gave a much more acceptable 200k/sec. 👍

Oh wait! We forgot to add a timeout, so let’s just run the benchmark again with a timeout of 100 milliseconds:

Shouldn’t have much of an impact since we never actually time out…

Hmmmmmmmmmmmmmm😭😭😭

Why did adding a timeout that we never actually hit result in WORSE performance than the threaded approach!?

Some Google searches led to a GitHub issue that led to a PR that led to a note, hidden at the bottom of the documentation page, stating:

⚠️ Using the timeout or breakOnSigint options will result in new event loops and corresponding threads being started, which have a non-zero performance overhead.
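The overhead can be observed with a rough, hand-rolled measurement. This sketch does not use benchmark.js and should not be read as a rigorous benchmark; the `opsPerSecond` helper, the pattern, and the iteration count are assumptions for illustration, and absolute numbers will vary by machine and Node version:

```javascript
const vm = require('node:vm');

const context = vm.createContext({
  regex: /^\d+\.\d+\.\d+$/,
  input: '1.2.3',
  result: null,
});
const script = new vm.Script('result = regex.exec(input);');

// Run fn a fixed number of times and return the observed calls per second.
function opsPerSecond(fn, iterations) {
  const start = process.hrtime.bigint();
  for (let i = 0; i < iterations; i++) fn();
  const seconds = Number(process.hrtime.bigint() - start) / 1e9;
  return iterations / seconds;
}

const withoutTimeout = opsPerSecond(() => script.runInContext(context), 2000);
const withTimeout = opsPerSecond(
  () => script.runInContext(context, { timeout: 100 }),
  2000
);

console.log({
  withoutTimeout: Math.round(withoutTimeout),
  withTimeout: Math.round(withTimeout),
});
```

Even though the timeout never fires here, the second figure pays for the extra machinery on every call.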

Argghh!

Conclusions

A worker thread is more complicated than the vm solution (harder to read, needs more resources, and requires every function that uses it to change its signature to async), and the two have similar performance, so we chose the vm solution. Optimizations can be made if it becomes a bottleneck in the future.

Now that I've explained how we went about implementing this functionality, we'd love to have you give our new custom base image recommendations a try. If you like it, let us know @snyksec on Twitter.
