Breaking out of message brokers

Adam Goldschmidt

August 5, 2020

I recently reported two vulnerabilities in Apache Airflow, an open-source library that allows developers to programmatically author, schedule, and monitor workflows. Both vulnerabilities allow an attacker to change scope and gain privileges on a different machine, and both rely on the attacker gaining access to the message broker before performing the attack.

In this blog post, I aim to show you why message brokers can’t be trusted and how I was able to exploit Apache Airflow in order to gain privileges to machines that are supposed to be protected. However, before diving into this, let’s first cover the basics on message brokers.

What is a message broker?

A message broker is software that enables services to communicate with each other and exchange information. It may sound similar to an API in nature, but a message broker usually does this by implementing a queue that the different services can write to or read from. This allows these services to talk to one another asynchronously, even if they were written in different languages or implemented on different platforms.

Message brokers can serve as a bridge between applications, allowing senders to publish messages without knowing where the receivers are or how many of them there are. As mentioned above, this is accomplished by a component called a message queue, which stores the messages until a consuming service processes them. These queues also enable asynchronous programming: since the queue is responsible for delivering the messages, the sender can continue performing other tasks.
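To make the queue idea concrete, here is a minimal in-process sketch that uses Python's standard `queue` and `threading` modules as a stand-in for a real broker. The message texts and the `None` stop marker are assumptions made for illustration; a real broker such as Redis or RabbitMQ runs as a separate service.

```python
import queue
import threading

# The queue plays the role of the broker: it holds messages until a consumer reads them.
message_queue = queue.Queue()
processed = []

def consumer():
    """Read messages off the queue until the stop marker (None) arrives."""
    while True:
        message = message_queue.get()
        if message is None:
            break
        processed.append(message.upper())

worker = threading.Thread(target=consumer)
worker.start()

# The sender publishes and moves on; it does not wait for the consumer to finish.
for text in ["order created", "payment received"]:
    message_queue.put(text)

message_queue.put(None)  # tell the consumer to stop
worker.join()
print(processed)
```

The sender never calls the consumer directly, which is the decoupling described above.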

Exploring message broker use cases

Message brokers are widely used in software development. They’re useful whenever reliable multi-service communication, assured message delivery, or asynchronous features are required.

The use cases of message brokers vary. Here is my attempt at pinpointing the popular ones:

Payment processing: It’s important that payments are sent once and only once. Handling these transactions using message brokers ensures that payment information will neither be lost nor duplicated and provides proof of receipt.

Asynchronous tasks: It’s possible to decouple heavy-weight processing from a live user request, so the response will be instant and the user won’t be blocked.

Routing messages to one or more destinations: It’s easier and more maintainable to publish messages to a single place from which multiple services can read.

Now that you’ve got a grasp of what message brokers are and what they are used for, let’s see why and how they can be vulnerable.

Over-trusting message brokers

Every developer knows that databases can be breached. We usually take extra security measures, such as encrypting passwords, so that attackers have to work harder even if the database is somehow breached.

Well, logic says it should be exactly the same in message brokers, right? The data being transferred eventually reaches different machines and it should be treated with extra caution, not only by encrypting sensitive information but by protecting the machines that interact with it.

Unfortunately, this is not the case. Our security team discovered a few examples of unsafe usage of message brokers in the wild, one of which is Apache Airflow. This unsafe usage consists of putting too much trust in the message broker, for example, storing commands inside it and then executing them without sanitization. This can eventually lead to command injection or remote code execution on the machines that communicate with the broker.

Exploiting Apache Airflow

What is Apache Airflow?

Apache Airflow, as briefly mentioned above, is a full-blown workflow management system. With Airflow, workflows are architected and expressed as DAGs (Directed Acyclic Graphs), with each step of the DAG defined as a specific task. It is a code-first platform that allows you to iterate on your workflows quickly and efficiently.

Airflow contains a task scheduler, which is responsible for scheduling and executing the DAGs. The scheduler uses a component, called an executor, in order to execute the DAGs.

Finding a zero day in Airflow

It all started when I was searching for deserialization vulnerabilities in open source software that uses Celery, a distributed task queue for Python that relies on a message broker, as a dependency.

I found out that Airflow uses Celery (as an executor) with pickle as the default serializer, and I was aware that there is a known vulnerability in Python’s pickle module, which Celery once used as a default.

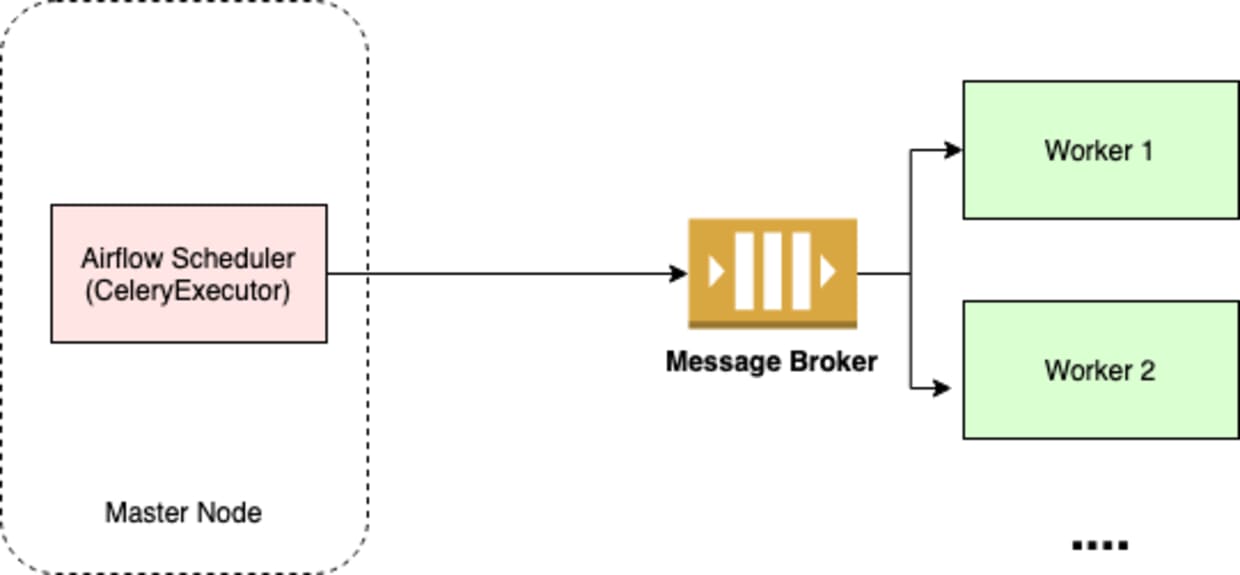

Below is a diagram showing roughly how Airflow works with Celery:

You have the Airflow scheduler, which uses Celery as an executor. Celery, in turn, stores the tasks and executes them on schedule.

Celery uses the message broker (Redis, RabbitMQ) for storing the tasks, then the workers read off the message broker and execute the stored tasks.

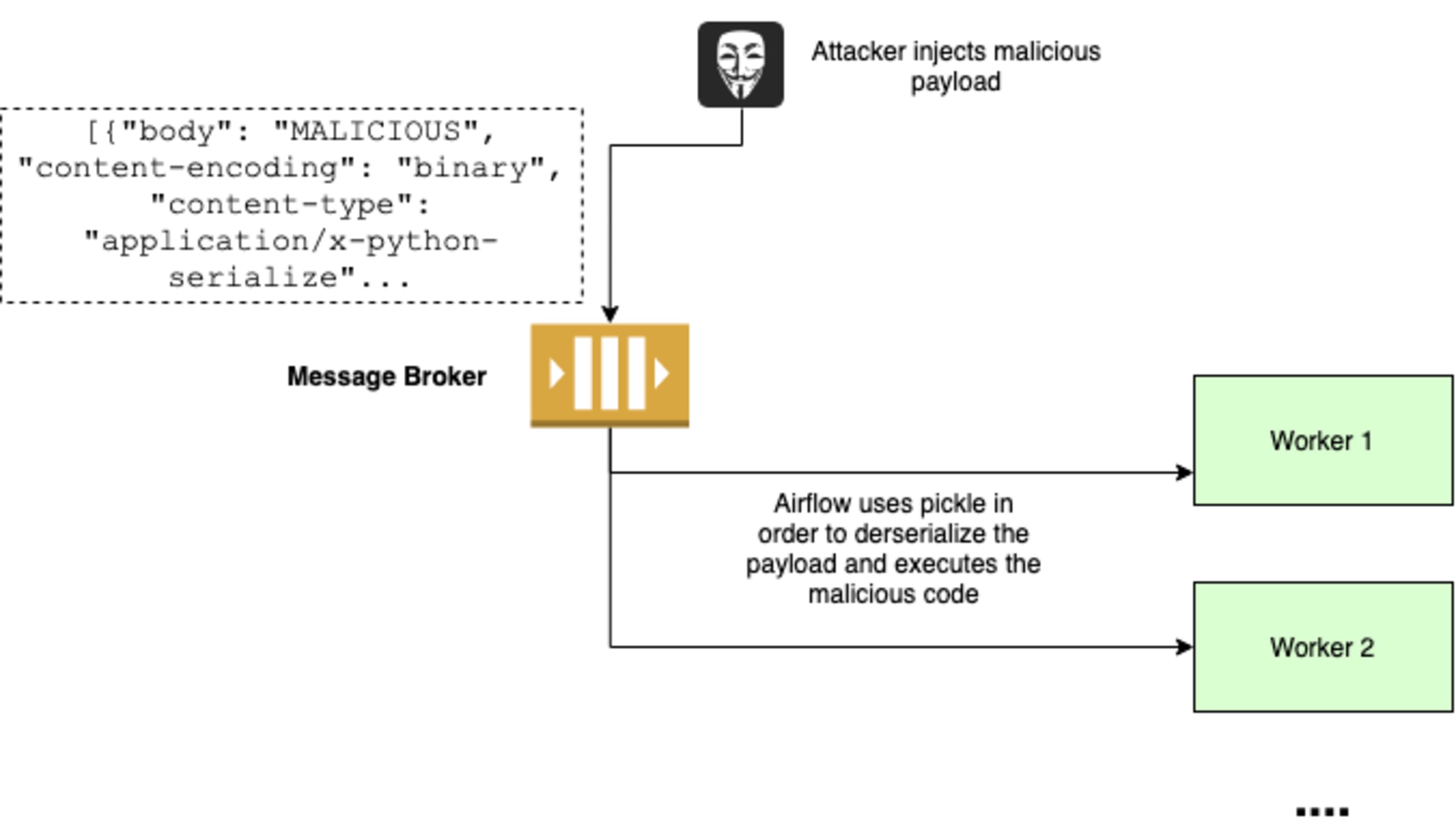

The Airflow Celery workers deserialize pickle data that is stored in the message broker. This means that if I can get access to the message broker, I can achieve remote code execution inside the workers through a deserialization attack. This vulnerability was assigned CVE-2020-11982.

Wait, what is a deserialization attack?

Serialization is the process of converting an object into a sequence of bytes that can be persisted to a disk or database or sent through streams. The reverse process of creating an object from a sequence of bytes is called deserialization. Serialization is commonly used for communication (sharing objects between multiple hosts) and persistence (storing the object state in a file or a database).

A deserialization attack occurs when the application deserializes data without sufficiently verifying that the resulting data will be safe, letting the attacker control the state or the flow of execution. I am not saying that message brokers must never store serialized data. There are many use cases where this is mandatory. I do think, though, that this is something to keep in mind when implementing this sort of design, and it’s worth considering extra security measures in these cases.
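As a small, deliberately harmless illustration of why unpickling untrusted bytes is risky, the sketch below first round-trips an ordinary dictionary and then loads an object whose `__reduce__` method tells pickle which callable to invoke at load time. The class name `Surprise`, the sample dictionary, and the use of `len` as the stand-in callable are my own assumptions. An attacker would pick a far less innocent callable.

```python
import pickle

# Ordinary use: serialize an object to bytes and restore it.
task = {"dag": "example_dag", "retries": 3}
restored = pickle.loads(pickle.dumps(task))

class Surprise:
    """Hypothetical class showing that the pickled bytes choose what gets called."""

    def __reduce__(self):
        # pickle stores "call len(...) with this argument" instead of the object state.
        return (len, ("attacker-chosen call",))

payload = pickle.dumps(Surprise())

# Loading the bytes invokes the callable; the result is not a Surprise instance.
result = pickle.loads(payload)
print(restored, type(result).__name__)
```

The key point is that the code path is selected by whoever produced the bytes, not by the program that loads them.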

If you’d like to learn more about deserialization attacks, check out this article.

Forcing Airflow to deserialize my tasks with pickle

My first step was to create a new Airflow task and observe its structure. I added a DAG (collection of tasks), assigned it to a queue named “test”, set the Celery broker to be Redis, and fired up the queue:

airflow worker -q test

I dug through the structure of the Redis messages, and I discovered that the messages are stored in a Redis hash table called unacked. The values stored in this hash table are structured like the following:

The interesting parts are the body value, content-type and content-encoding. The body value decoded is:

Note that the actual commands are stored here! This will be important for the second vulnerability. I also found a set named unacked_index. I assumed that the elements in the set were the same values as the keys of the hash table, so I checked. First, I retrieved the keys of the hash table:

Then, I went on to display all elements of unacked_index:

As you can see, they are indeed the same values, just in a different order. At that point, I was pretty confident that unacked_index is used to store the IDs of the tasks to be executed, and that the hash table is there to match each task ID to the actual task. This meant that in order to add a new custom task, I needed to add an arbitrary value to unacked_index, and then create a new element in the hash table with the same value as the key and a malicious payload as the value.
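The relationship between the two structures can be modeled in plain Python without a Redis server. This is only a simplified sketch: a dict and a set stand in for the Redis hash and set, the message keeps just the three fields called out above, and the task ID, command list, and helper names (`store_task`, `decode_body`) are hypothetical.

```python
import base64
import json

unacked = {}           # stands in for the Redis hash: task ID -> message
unacked_index = set()  # stands in for the Redis set of task IDs

def store_task(task_id, command):
    """Store a command list under task_id, with a base64-encoded body."""
    body = base64.b64encode(json.dumps(command).encode("utf-8")).decode("ascii")
    message = {
        "body": body,
        "content-type": "application/json",
        "content-encoding": "utf-8",
    }
    unacked[task_id] = json.dumps(message)
    unacked_index.add(task_id)

def decode_body(task_id):
    """Look up a message by ID and decode its body back into a command list."""
    message = json.loads(unacked[task_id])
    return json.loads(base64.b64decode(message["body"]))

# Hypothetical task ID and command, for illustration only.
store_task("task-0001", ["airflow", "run", "example_dag", "example_task"])
print(set(unacked) == unacked_index, decode_body("task-0001"))
```

In this model, anyone who can write to both structures decides what a consumer will later read, which is why the contents need to be treated as untrusted input.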

In order to carry on with a deserialization attack, I needed a malicious payload. I quickly created a Python script to output a payload that, once deserialized with pickle, would create a new file named malicious:

You can read more about exploiting pickle in Python here.

So, in order to force the queue to pick up the malicious value and deserialize it with pickle, I needed to change the content-type to application/x-python-serialize (pickle) and content-encoding to binary. This is a PoC of adding a malicious task:

As explained above, these are the steps I took:

Added an arbitrary value to unacked_index as a task ID.

Added a new element to unacked: the ID from before as the key, and a malicious payload as the value. The malicious payload included the base64-encoded payload as the body value.

The following visual illustrates the process:

I ran the test queue, and voilà! A new file called malicious was created on the worker that ran the queue, a complete change of scope. The implications of this vulnerability will be explained right after we review the next vulnerability!

Command injection vulnerability

On to the second vulnerability, a command injection assigned CVE-2020-11981.

While digging through the Airflow source code, I found out that the commands from the message broker are executed without any sanitization. So, after the initial “YAY!” shout that usually comes after successfully finding a vulnerability, I went back to Redis and found that I could inject the following payload into the body key:

Following the same logic as before, this is a proof of concept (no need to change content-type or content-encoding here, as we don’t need any pickle operations):

Afterwards, I found out that it’s also possible to inject this payload into RabbitMQ from the RabbitMQ dashboard—RabbitMQ does not implement the same structure as Redis, so it’s enough to just use the plain JSON list without base64 encoding.

The impact of this vulnerability is quite similar to the previous one—complete control over worker machines. To further explain this, imagine that up until exploiting this vulnerability, the attacker only had access to a single machine—the message broker. Furthermore, on some installations, it might be possible to inject messages into the message broker with an API, without even gaining access to the message broker machine.

After gaining full control over the worker machine, an attacker might be able to expose secrets, cause a denial of service, and even gain access to more machines in the same infrastructure. Vulnerabilities like this, which allow attackers to escalate their attack scope and permissions inside a breached network, can be the difference between a minor security incident and a full-blown breach.

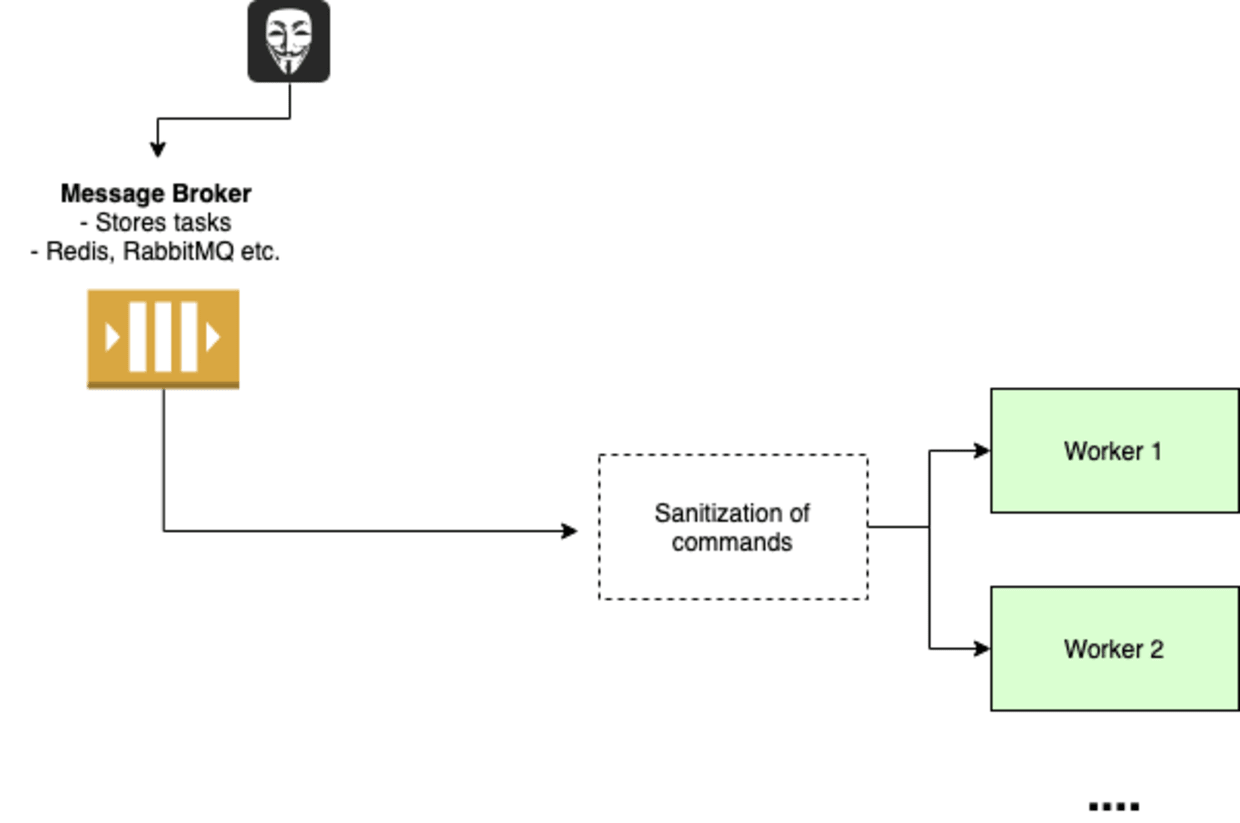

Remediation

If you do choose to store commands in your message broker, remediation could look something like the visual above. This way, even if an attacker controls the message broker, the worker only runs known commands and sanitizes any unexpected input. This is the remediation method that Apache decided to go with, because the package logic requires that the commands are stored in the message broker.
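One way to express the "only run known commands" idea is an allowlist check on the worker side before anything is executed. The sketch below is my own illustration under stated assumptions, not the actual Airflow patch: the allowed prefix and the function names `validate_command` and `run_validated` are hypothetical.

```python
import subprocess

# Hypothetical allowlist: the only command prefix this worker agrees to run.
ALLOWED_PREFIX = ["airflow", "run"]

def validate_command(command):
    """Return the command unchanged if it is acceptable, otherwise raise ValueError."""
    if not isinstance(command, list) or not all(isinstance(part, str) for part in command):
        raise ValueError("command must be a list of strings")
    if command[:len(ALLOWED_PREFIX)] != ALLOWED_PREFIX:
        raise ValueError("command must start with %r" % (ALLOWED_PREFIX,))
    return command

def run_validated(command):
    """Validate first, then execute as an argument list without invoking a shell."""
    return subprocess.run(validate_command(command), check=True)
```

Passing a list and avoiding `shell=True` means the arguments are not interpreted by a shell. A prefix check alone does not make every argument safe, so stricter validation of the remaining arguments may still be worthwhile.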

As for infrastructure security, you should add security measures to your message broker, such as proper authentication and TLS, so it would be harder for attackers to access it in the first place.
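For a Celery-based setup with Redis, those broker-side measures might look roughly like the configuration fragment below. The host name, password placeholder, and certificate path are hypothetical, and the setting names reflect my understanding of Celery's configuration options, so check them against the version you run. Restricting `accept_content` to JSON is my own addition, included because it relates to the pickle issue described earlier.

```python
import ssl

# Hypothetical host, placeholder password, and CA path; replace with your own values.
broker_url = "rediss://:CHANGE_ME@broker.internal.example:6379/0"

# Require the broker to present a certificate signed by a CA you trust.
broker_use_ssl = {
    "ssl_cert_reqs": ssl.CERT_REQUIRED,
    "ssl_ca_certs": "/etc/ssl/certs/broker-ca.pem",
}

# Accept only JSON messages so that workers refuse pickle-serialized content.
accept_content = ["json"]
task_serializer = "json"
result_serializer = "json"
```

The Redis server itself also needs to be configured to require the password and to serve TLS. The client settings alone are not enough.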

Conclusion

These two vulnerabilities show how I was able to execute code and commands on the queue server (or worker), just by gaining access to the message broker. It’s important to note that Redis, in its default configuration, does not require a password.

The Apache team was quick to acknowledge these vulnerabilities and to fix them. While the Snyk Security Team does not think they are super high severity, since they both require initial access to the infrastructure, they are still dangerous. It’s not unusual for a vulnerability to require initial privileges or additional vulnerabilities (some sort of chain) in order to be exploited.

The main lesson I would like you to take from this post is this: Don’t blindly trust your message brokers. Think about the design and architecture of the brokers and how you can minimize risks using them.
