10 modern Node.js runtime features to start using in 2024

May 29, 2024


The server-side JavaScript runtime scene has been packed with innovations, such as Bun making strides with compatible Node.js APIs and the Node.js runtime featuring a rich standard library and runtime capabilities.

As we enter into 2024, this article is a good opportunity to stay abreast of the latest features and functionalities offered by the Node.js runtime. Staying updated isn't just about “keeping with the times” — it's about leveraging the power of modern APIs to write more efficient, performant, and secure code.

This post will explore 10 modern Node.js runtime features that every developer should start using in 2024. We'll cover everything from fresh off-the-press APIs to the compelling features offered by new kids on the block like Bun and Deno.

Prerequisite: Node.js LTS version

Before you start exploring these modern features, ensure you're working with the Node.js LTS (long-term support) version. At the time of writing this article, the latest Node.js LTS version is v20.14.0.

To check your Node.js version, run node --version.

If you're not currently using the LTS version, consider using a version manager like fnm or nvm to easily switch between different Node.js versions.

What’s new in Node.js 20?

In the following sections, we’ll cover some new features introduced in recent versions of Node.js. Some are stable, others are still experimental, and a few have been supported for a while, but you might not have heard of them just yet.


1. The native Node.js test runner

What did we have before Node.js introduced a test runner in the native runtime? Up until now, you probably used one of the popular options, such as node-tap, jest, mocha, or vitest.

Let’s learn how to leverage the Node.js native test runner in your development workflow. To begin, you need to import the test module from Node.js into your test file, as shown below:

Now, let's walk through the different steps of using the Node.js test runner.

Running a single test with node:test

To create a single test, you use the test function, passing the name of the test and a callback function. The callback function is where you define your test logic.

To run this test, use the node --test command followed by the name of your test file, for example node --test math.test.js (the file name here is only a placeholder).

The Node.js test runner can automatically detect and run test files in your project. By convention, these files end with .test.js, but you don't have to follow this naming convention strictly.

If you omit the test file positional argument, then the Node.js test runner will apply some heuristics and glob pattern matching to find test files, such as all files in a test/ or tests/ folder or files with a test- prefix or a .test suffix.

On Node.js 21 and later, you can also pass a glob pattern yourself, for example node --test "**/*.test.js".

Using test assertions with node:assert

The Node.js test runner supports assertions through the built-in assert module. You can use different methods like assert.strictEqual to verify your tests.

Test suites & test hooks with the native Node.js test runner

The describe function is used to group related tests into a test suite. This makes your tests more organized and easier to manage.

Test hooks are special functions that run before or after your tests. They are useful for setting up or cleaning up test environments.

You can also choose to skip a test using the test.skip function. This is helpful when you want to ignore a particular test temporarily.

In addition, the Node.js test runner provides different reporters that format and display test results in various ways. You can specify a reporter using the --test-reporter option.

Should you ditch Jest?

While Jest is a popular testing framework in the Node.js community, it has certain drawbacks that make the native Node.js test runner a more appealing choice.

By installing Jest, even as merely a dev dependency, you add 277 transitive dependencies of various licenses, including MIT, Apache-2.0, CC-BY-4.0, and 1 unknown license. Did you know that?

Jest modifies globals, which can lead to unexpected behaviors in your tests.

The instanceof operator doesn't always work as expected in Jest.

Jest introduces a large dependency footprint to your project, which makes it harder to stay up to date with third-party dependencies and forces you to manage security issues and other concerns for dev-time dependencies.

Jest can be slower than the native Node.js test runner due to its overhead.

Other great features of the native Node.js test runner include running subtests and concurrent tests. With subtests, each test() callback receives a context argument that lets you create nested tests via context.test. Concurrent tests are a great feature if you know how to work with them and avoid race conditions. To enable them, pass an options object with concurrency: true as the second argument to the describe() test suite.

What is a test runner?

A test runner is a software tool that allows developers to manage and execute automated tests on their code. The Node.js test runner is a framework that is designed to work seamlessly with Node.js, providing a rich environment for writing and running tests on your Node.js applications.

2. Node.js native mocking

Mocking is one strategy developers employ to isolate code for testing. The Node.js runtime has introduced native mocking features, which are essential for developers to understand and use effectively.

You’ve probably used mocking features from other test frameworks, such as Jest’s jest.spyOn or mockResolvedValueOnce. They’re useful when you want to avoid running actual code in your tests, such as HTTP requests or file system APIs, and replace these operations with stubs and mocks that you can inspect later.

Unlike other Node.js runtime features, such as the test coverage functionality, mocking isn’t declared as experimental. However, it is likely to receive further changes, as it’s a relatively new feature that was only introduced in Node.js 18.

Node.js native mocking with import { mock } from 'node:test'

Let's look at how we can use the Node.js native mocking feature in a practical example. The test runner, including its function and method mocking API, is available in Node.js 20 LTS, where the test runner itself is marked stable.

We'll work with a utility module, dotenv.js, which loads environment variables from a .env file. We'll also use a test file, dotenv.test.js, which tests the dotenv.js module.

Here’s our very own in-house dotenv module:

In the dotenv.js file, we have an asynchronous function, loadEnv, which reads a file using the fs.readFile method and splits the file content into key-value pairs. As you can see, it uses the Node.js native file system API fs.

Now, let's see how we can test this function using the native mocking feature in Node.js.

In the test file, we import the mock method from node:test, which we use to create a mock implementation of fs.readFile. In the mock implementation, we return a string, "PORT=3000\n", regardless of the file path passed.

We then call the loadEnv function, and using the assert module, we check two things:

The returned object has a PORT property with a value of "3000".

The fs.readFile method was called exactly once.

By using the native mock functionality in Node.js, we're able to effectively isolate our loadEnv function from the file system and test it in isolation. Mocking capabilities with Node.js 20 also include support for mocking timers.

What is mocking?

In software testing, mocking is a process where the actual functionalities of specific modules are replaced with artificial ones. The primary goal is to isolate the unit of code being tested from external dependencies, ensuring that the test only verifies the functionality of the unit and not the dependencies. Mocking also allows you to simulate different scenarios, such as errors from dependencies, which might be hard to recreate consistently in a real environment.

3. Node.js native test coverage

What is test coverage?

Test coverage is a metric used in software testing. It helps developers understand the degree to which the source code of an application is being tested. This is crucial because it reveals areas of the codebase that have not been tested, enabling developers to identify potential weaknesses in their software.

Why is test coverage important? Well, it ensures the quality of software by reducing the number of bugs and preventing regressions. Additionally, it provides insights into the effectiveness of your tests and helps guide you toward a more robust, reliable, and secure application.

Utilizing native Node.js test coverage

Node.js 20 includes native capabilities for test coverage (the flag first appeared in v19.7.0 and was backported to v18.15.0). However, it's important to note that the native Node.js test coverage is currently marked as an experimental feature. This means that while it's available for use, there might be some changes in future releases.

To use the native Node.js test coverage, pass the --experimental-test-coverage command-line flag. For example, you can add a test:coverage entry to the scripts field of your package.json that runs your project tests with coverage enabled: "test:coverage": "node --test --experimental-test-coverage".

In the example above, the test:coverage script utilizes the --experimental-test-coverage flag to generate coverage data during test execution.

After running npm run test:coverage, the test results are followed by a coverage report.

This report displays, for each file, the percentage of lines, branches, and functions covered by the tests, along with the line numbers that were not covered.

The Node.js native test coverage is a powerful tool that can help you improve the quality of your Node.js applications. Even though it's currently marked as an experimental feature, it can provide valuable insights into your test coverage and guide your testing efforts. By understanding and leveraging this feature, you can ensure that your code is robust, reliable, and secure.

4. Node.js watch mode

The Node.js watch mode is a powerful developer feature that allows for real-time tracking of changes to your Node.js files and automatic re-execution of scripts.

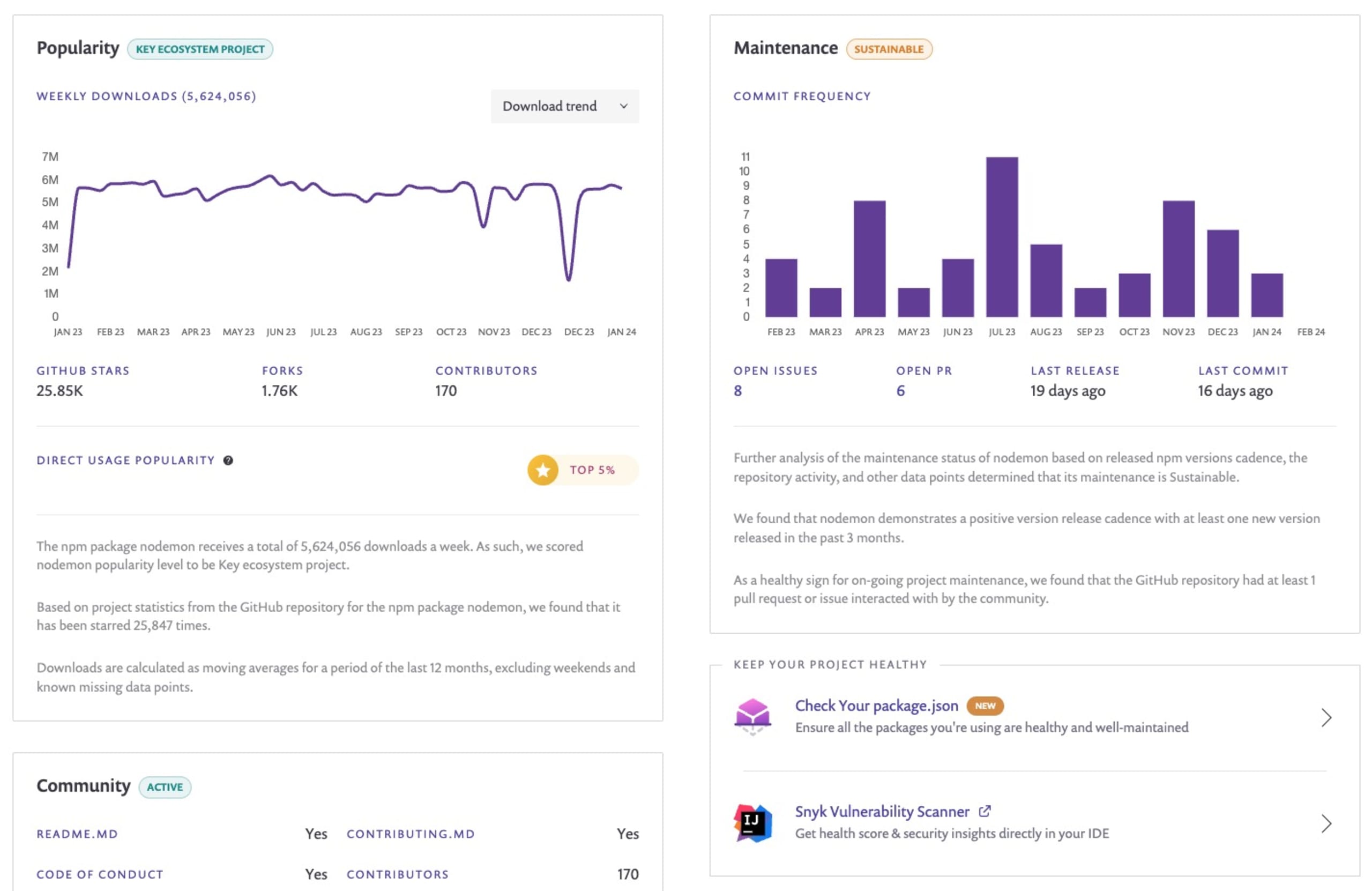

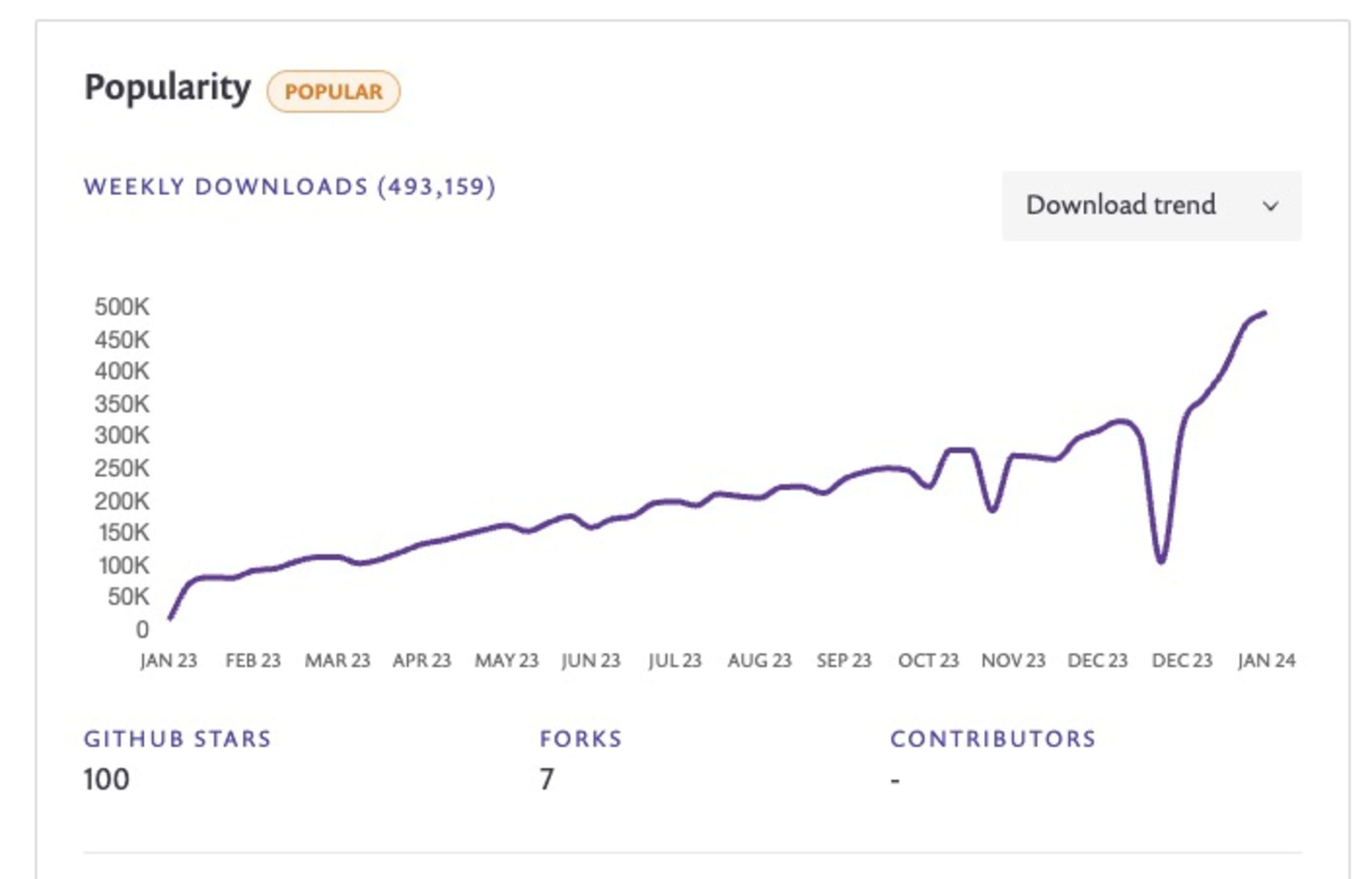

Before diving into Node.js's native watch capabilities, it's essential to acknowledge nodemon, a popular utility that helped fill this need in earlier versions of Node.js. Nodemon is a command-line interface (CLI) utility developed to restart the Node.js application when any change is detected in the file directory.

This feature is particularly useful during the development process. It saves time and improves productivity by eliminating the need for manual restarts each time a file is modified.

With advancements in Node.js itself, the runtime now provides built-in functionality to achieve the same results. This removes the need to install extra third-party dependencies like nodemon in your projects.

Before we dive into the tutorial, it's important to note that the native watch mode was experimental when it was first introduced and was marked stable in Node.js v20.13.0. Always ensure you're using a Node.js version that supports this feature.

Using Node.js 20 native watch capabilities

Node.js 20 introduces native file watch capabilities using the --watch command line flag. This feature is straightforward to use and can even match glob patterns for more complex file-watching needs.

To use the --watch flag, pass it to node before your script's file name, for example node --watch app.js.

In the case of glob patterns, you can use the --watch flag with a specific pattern to watch multiple files or directories. This is particularly useful when you want to watch a group of files that match a specific pattern:

The --watch flag can also be used in conjunction with --test, as in node --test --watch, to re-run tests whenever test files change.

This combination can significantly speed up your test-driven development (TDD) process by automatically running your tests every time you make a change.

It's worth noting that watch mode was marked experimental in earlier Node.js 20 releases and only became stable in v20.13.0.

On older versions, you might encounter some quirks or bugs when using the --watch flag.

5. Node.js Corepack

Node.js Corepack is an intriguing feature that is worth exploring. It was introduced in Node.js 16 and is still marked as experimental. This makes it even more exciting to take a look at what it offers and how it can be leveraged in your JavaScript projects.

What is Corepack?

Corepack is a zero-runtime-dependency project that acts as a bridge between Node.js projects and the package managers they are intended to use. When installed, it provides a program called corepack that developers can use in their projects to ensure they have the right package manager without having to worry about its global installation.

Why use Corepack?

As JavaScript developers, we often deal with multiple projects, each potentially having its own preferred package manager. You know how it is: one project manages its dependencies with pnpm and another with yarn, so you end up jumping between different package managers and their versions too.

This can lead to conflicts and inconsistencies. Corepack solves this problem by allowing each project to specify and use its preferred package manager in a seamless way.

Moreover, Corepack provides isolation between your project and the global system, ensuring that your project will stay runnable even if global packages get upgraded or removed. This increases the consistency and reliability of your project.

Installing and using Corepack

Installing Corepack is quite straightforward. Since it is bundled with Node.js starting from version 16, you only need to install or upgrade Node.js to that version or later.

Once installed, you can define the package manager for your project with the packageManager field in your package.json file, for example "packageManager": "yarn@2.4.1".

Then, enable Corepack for your project by running corepack enable.

If you type yarn in the project directory and you don’t have Yarn installed, then Corepack will automatically detect and install the right version for you.

This will ensure that Yarn version 2.4.1 is used to install your project's dependencies, regardless of the global Yarn version installed on the system.

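If you want to install Yarn globally or use a specific version, you can run corepack prepare with the version you need and the --activate flag, for example corepack prepare yarn@2.4.1 --activate.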

Corepack: Still an experimental feature

Despite its introduction in Node.js 16, Corepack is still marked as experimental. This means that while it's expected to work well, it's still under active development, and some aspects of its behavior might change in the future.

That said, Corepack is easy to install, simple to use, and provides an extra layer of reliability to your projects. It's definitely a feature worth exploring and incorporating into your development workflow.

6. Node.js .env loader

Application configuration is crucial, and as a Node.js developer, I’m sure you’ve needed to manage API credentials, server port numbers, or database configurations.

As developers, we need a way to provide different settings for different environments without changing the source code. One popular way to achieve this in Node.js applications is by using environment variables stored in .env files.

The dotenv npm package

Before Node.js introduced native support for loading .env files, developers primarily used the dotenv npm package. The dotenv package loads environment variables from a .env file into process.env, which are then available throughout the application.

A typical usage of the dotenv package is to call require('dotenv').config() as early as possible in your application's entry file.

This worked well, but it required adding an additional dependency to your project. With the introduction of the native .env loader, you can now load your environment variables directly without needing any external packages.

Introducing native support in Node.js for loading .env files

Starting from Node.js v20.6.0, the runtime includes a built-in feature to load environment variables from .env files. This feature is under active development but has already become a game-changer for developers.

To load a .env file, we can use the --env-file CLI flag when starting our Node.js application, for example node --env-file=.env app.js. This flag specifies the path to the .env file to be loaded.

This will load the environment variables from the specified .env file into process.env. The variables are then available within your application just like before.
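As a small sketch of the consuming side, the application can read the loaded values from process.env and fall back to defaults for anything the file doesn't define. The variable names PORT and DATABASE_URL and the default values are assumptions made for illustration.

```javascript
// Build a config object from environment variables, with fallbacks for
// anything the .env file does not define. The variable names are hypothetical.
function readConfig(env = process.env) {
  return {
    port: Number(env.PORT ?? 3000),
    databaseUrl: env.DATABASE_URL ?? 'postgres://localhost:5432/app',
  };
}

const config = readConfig();
console.log(`Configured port: ${config.port}`);
```

Accepting the environment as a parameter keeps the function easy to test, since a test can pass a plain object instead of mutating process.env.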

Loading multiple .env files

The Node.js .env loader also supports loading multiple .env files. This is useful when you have different sets of environment variables for different environments (e.g., development, testing, production).

You can specify multiple --env-file flags to load multiple files. The files are loaded in the order they are specified, and variables from later files overwrite those from earlier ones.

Here's an example: node --env-file=./.env.default --env-file=./.env.development app.js

In this example, ./.env.default contains the default variables, and ./.env.development contains the development-specific variables. Any variables in ./.env.development that also exist in ./.env.default will overwrite the ones in ./.env.default.

The native support for loading .env files in Node.js is a significant improvement for Node.js developers. It simplifies configuration management and eliminates the need for an additional package. Start using the --env-file CLI flag in your Node.js applications and experience the convenience first-hand.

7. Node.js import.meta support for __dirname and __filename

If you’re coming from CommonJS module conventions for Node.js, then you’re used to working with __filename and __dirname as a way to get the current file’s path and directory name. However, until recently, these weren’t easily available in ESM, and you had to come up with the following code to extract __dirname:

Or if you’re a Matteo Collina fan, you might have resorted to using Matteo’s desm npm package.

Node.js continually evolves to offer developers more efficient ways to handle file and path operations. One significant change that will benefit Node.js developers has been introduced in Node.js v20.11.0 and Node.js v21.2.0 with built-in support for import.meta.dirname and import.meta.filename.

Using Node.js import.meta.filename and import.meta.dirname

Thankfully, with the introduction of import.meta.filename and import.meta.dirname, this process has become much easier. Let's look at an example of loading a configuration file using the new features.

Assume there is a YAML configuration file in the same directory as your JavaScript file that you need to load. Here's how you can do it:

In this example, we use import.meta.dirname to get the directory name of the current file and assign it to a __dirname variable, for consistency with CommonJS code conventions.

8. Node.js native timers promises

Node.js, a popular JavaScript runtime built on Chrome’s V8 JavaScript engine, has always striven to make the lives of developers easier with constant updates and new features.

Despite Node.js introducing support for natively using timers with a promises syntax way back in Node.js v15, I admit I haven’t been regularly using them.

JavaScript's setTimeout() and setInterval() timers: A brief recap

Before diving into native timer promises, let's briefly recap the JavaScript setTimeout() and setInterval() timers.

The setTimeout() API is a JavaScript function that executes a function or specified piece of code once the timer expires.

In the above code, "Hello World!" will be printed to the console after 3 seconds (3000 milliseconds).

setInterval(), on the other hand, repeatedly executes the specified function with a delay between each call.

In the above code, "Hello again!" will be printed to the console every 2 seconds (2000 milliseconds).

The old way: Wrapping setTimeout() with a promise

In the past, developers would often have to artificially wrap the setTimeout() function with a promise to use it asynchronously. This was done to allow the use of setTimeout() with async/await.

Here is an example of how it was done:

This would print "Taking a break...", wait for two seconds, and then print "Two seconds later...".
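The wrapper pattern can be sketched like this. The sleep and takeABreak function names are placeholders chosen for illustration.

```javascript
// Wrap the callback-based setTimeout() in a promise so it can be awaited.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function takeABreak() {
  console.log('Taking a break...');
  await sleep(2000);
  console.log('Two seconds later...');
}

takeABreak();
```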

While this worked, it added unnecessary complexity to the code.

Node.js native timer promises: A simpler way

With Node.js native timer promises, we no longer need to wrap setTimeout() in a promise. Instead, we can use the promise-based setTimeout() directly with async/await. This makes the code cleaner, more readable, and easier to maintain. Here is an example of how to use Node.js native timer promises:

In the above code, setTimeout() is imported from node:timers/promises. We then use it directly with async/await. It will print "Taking a break...", wait for two seconds, and then print "Two seconds later...".
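A minimal sketch of this usage follows. Here the promise-based setTimeout is renamed to delay on import, which is a choice made for this sketch so that it does not shadow the global callback-based setTimeout.

```javascript
const { setTimeout: delay } = require('node:timers/promises');

async function takeABreak() {
  console.log('Taking a break...');
  // Resolves after 2000 ms; an optional second argument becomes the resolved value.
  await delay(2000);
  console.log('Two seconds later...');
}

takeABreak();
```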

This greatly simplifies asynchronous programming and makes the code easier to read, write, and maintain.

9. Node.js permissions model

Rafael Gonzaga, now on the Node.js TSC, revived the work on the Node.js permission model, which, similarly to Deno, provides a process-level set of configurable resource constraints.

In the world of supply-chain security concerns, malicious npm packages, and other security risks, it’s becoming increasingly crucial to manage and control the resources your Node.js applications have access to for security and compliance reasons.

In this respect, Node.js has introduced an experimental feature known as the permissions module, which is used to manage resource permissions in your Node.js applications. This feature is enabled using the --experimental-permission command-line flag.

Node.js resource permissions model

The permissions model in Node.js provides an abstraction for managing access to resources such as the file system, child processes, and worker threads. This feature is particularly useful when you want to limit the resources a Node.js process can access.

Common resource constraints you can set with the permissions model include:

File system read and write with --allow-fs-read=* and --allow-fs-write=*. You can specify directories and specific file paths, as well as provide multiple resources by repeating the flags.

Child process invocations with --allow-child-process.

Worker thread invocations with --allow-worker.

The Node.js permissions model also provides a runtime API via process.permission.has(resource, value) to allow querying for specific access.

If you try accessing resources that aren’t allowed, for example, to read the .env file, you’ll see an ERR_ACCESS_DENIED error:

Node.js permission model example

Consider a scenario where you have a Node.js application that handles file uploads. You want to restrict this part of your application so that it only has access to a specific directory where the uploaded files are stored.

Enable the experimental permissions feature when starting your Node.js application with the --experimental-permission flag.

We also want to specifically allow the application to read 2 trusted files, .env and setup.yml, so we need to update the above to this:

In this way, if the application attempts to access file-based system resources for write purposes outside of the provided upload path, it will halt with an error.

See the following code example for how to wrap a resource access via try/catch as well as using the Node.js permissions runtime API as another way of ensuring access without an error exception thrown:

It's important to note that the permissions functionality in Node.js is still experimental and subject to changes.

On the topic of permissions and production-grade conventions for security, you can find more information on how to build secure Node.js applications in Snyk's blog posts on the subject.

These posts provide a comprehensive guide on building secure container images for Node.js web applications, which is critical in developing secure Node.js applications.

10. Node.js policy module

The Node.js policy module is a security feature designed to prevent malicious code from loading and executing in a Node.js application. While it doesn't trace the origin of the loaded code, it provides a solid defense mechanism against potential threats.

The policy module leverages the --experimental-policy CLI flag to enable policy-based code loading. This flag takes a policy manifest file (in JSON format) as an argument. For instance, --experimental-policy=policy.json.

The policy manifest file contains the policies that Node.js adheres to when loading modules. This provides a robust way to control the nature of code that gets loaded into your application.

Implementing Node.js policy module: A step-by-step guide

Let's walk through a simple example to demonstrate how to use the Node.js policy module:

1. Create a policy file. The file should be a JSON file specifying your app's policies for loading modules. Let's call it policy.json.

For instance:

This policy file specifies that moduleA.js and moduleB.js should have specific integrity values to be loaded.
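To give a sense of where such integrity values come from, here is a hedged sketch that computes one in the Subresource Integrity format (algorithm name, a dash, then the base64 digest). The computeIntegrity helper is hypothetical, and the source string is only a stand-in for the contents of a module such as moduleA.js.

```javascript
const crypto = require('node:crypto');

// Build a Subresource Integrity string ("<algorithm>-<base64 digest>"),
// the format used for integrity values in a policy manifest.
function computeIntegrity(content, algorithm = 'sha384') {
  const digest = crypto.createHash(algorithm).update(content).digest('base64');
  return `${algorithm}-${digest}`;
}

// Stand-in for the contents of a module file; in practice you would
// pass the result of fs.readFileSync() for each file you want to pin.
const moduleSource = 'module.exports = 42;\n';
console.log(computeIntegrity(moduleSource));
```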

However, generating the policy file for all of your direct and transitive dependencies isn’t straightforward. A few years back, Bradley Meck created the node-policy npm package, which provides a CLI to automate the generation of the policy file.

2. Run your Node.js application with the --experimental-policy flag, for example node --experimental-policy=policy.json app.js.

This command tells Node.js to adhere to the policies specified in policy.json when loading modules in app.js.

3. To guard against tampering with the policy file, you can provide an integrity value for the policy file itself using the --policy-integrity flag:

With this flag, Node.js checks the policy file against the given integrity value before applying it and refuses to run if the file has been changed on disk.

Caveats with Node.js integrity policy

The Node.js runtime has no built-in capabilities to generate or manage the policy file. This can introduce difficulties, such as managing different policies for production and development environments, or handling dynamic module imports.

Another caveat is that if a malicious npm package is already installed in your project when you generate the policy file, its integrity value is recorded as trusted, so the policy won't protect you from it.

I personally advise you to watch for updates in this area and slowly attempt a gradual adoption of this feature.

For more information on Node.js policy module, you can check out the article on introducing experimental integrity policies to Node.js, which provides a more detailed step-by-step tutorial on working with Node.js policy integrity.

Wrapping up

As we have traversed through the modern Node.js runtime features that you should start using in 2024, it becomes clear that these capabilities are designed to streamline your development process, enhance application performance, and reinforce security. These features are not just trendy. They have substantial potential to redefine the way we approach Node.js development.

Strengthen your Node.js security with Snyk

While these Node.js features can significantly enhance your development process and application performance, it is essential to remain vigilant about potential security threats. Snyk can be your ally in this endeavor. This powerful tool helps you find and fix known vulnerabilities in your Node.js dependencies and maintain a secure development ecosystem.

To take advantage of what Snyk has to offer, sign up here for free and begin your journey towards more secure Node.js development.