How to choose a security tool for your AI-generated code

January 9, 2024

“Not another AI tool!” Yes, we hear you. Nevertheless, AI is here to stay, and generative AI coding tools in particular are causing a headache for security leaders. We recently discussed why in our post “Why you need a security companion for AI-generated code.”

Purchasing a new security tool to secure AI-generated code is a weighty decision. The tool needs to serve both your security team and your developers, and it needs a roadmap that keeps it from becoming obsolete. So, how do you even begin to select the right tool to secure your AI-generated code reliably and keep your developers happy, amid the AI tools mushrooming everywhere?

Snyk has been providing security solutions that sit furthest left in the software development cycle for eight years, ahead of other security solutions on the market. Today, we will use our experience to share our views on the top five considerations for purchasing a security tool.

1. Real-time analysis within your IDE

Developers are focused on building, and a good security tool lets them do just that: it secures applications reliably without interrupting development workflows. This matters for the long-term, successful adoption of any security tool, which is why your chosen tool must keep up with developers’ new AI-enabled speed without disrupting their work.

Our proprietary DeepCode AI technology is the engine that powers Snyk Code. It is why Snyk takes just seconds, not minutes or hours, to review code and suggest corrections in real time, running through your source code unobtrusively in the IDE so that it blends into your developers’ normal workflow.

Instead of interrupting developers and making them wait while code is tested outside their work environment, Snyk runs alongside them as they code, catching mistakes as they happen and providing real-time, actionable feedback. And Snyk can scan code an average of 2.4x faster than other solutions, so it can keep up with AI code generators.

2. Accuracy without AI hallucinations

As we’ve discussed in a recent blog post, the very nature of the technology means that all generative AI produces hallucinations. This is why it helps to have a security tool that is built specifically for risk management by a team of security experts. Security experts well-versed in AI know that generative AI output cannot be assumed to be safe and should be accompanied by security checks that can keep pace with the speed at which that output is produced.

Snyk understands this flaw in generative AI, but also sees its vast benefits. So, drawing on our strong background in security, we’ve crafted a robust approach to risk management from years of battle-testing our software. Snyk is purpose-built to secure code, with different levels of checks and one of the biggest, most reliable databases of vulnerable code patterns and fixes, which continues to grow so that it stays on top of the latest coding patterns. In our unique DeepCode AI, we’ve specifically engineered a combination of symbolic and generative AI, along with several machine learning methods, and coupled this technology with human oversight.

Snyk has the generative AI half that creates code fixes, as well as the rigid, regulated symbolic AI half with a best-in-class knowledge base and tailored rules, that tethers and double-checks what the generative AI half produces. While DeepCode AI monitors hundreds of thousands of open source repositories, human security experts work with our AI to rapidly spot and learn new insecure patterns, fine-tuning and maintaining our database and rules to both drive down false positives and keep you ahead of the latest flaws, because machines can’t think! This marriage of hybrid AI and human cognition is what ensures a high level of accuracy without hallucinations, for companies like Snowflake, Intuit, and Neiman Marcus.
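To make the “generate, then double-check” idea concrete, here is a minimal sketch in Python. It is not how DeepCode AI is implemented: the rule names, the regular expressions, and the `first_safe_fix` helper are all hypothetical, and real symbolic analysis reasons about program semantics rather than text patterns. The point is only the shape of the loop, in which a candidate fix is accepted only if a deterministic rule check raises no objection.

```python
import re
from typing import Iterable, Optional

# Hypothetical rule set standing in for the "symbolic" half.
# Regexes are a simplification; they are used here only to keep the sketch short.
RULES = {
    "sql-string-concat": re.compile(r"execute\(\s*[\"'].*[\"']\s*\+"),
    "use-of-eval": re.compile(r"\beval\("),
}


def violations(code: str) -> list[str]:
    """Return the names of every rule that the given code matches."""
    return [name for name, pattern in RULES.items() if pattern.search(code)]


def first_safe_fix(candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate fix that passes every rule, or None."""
    for candidate in candidates:
        if not violations(candidate):
            return candidate
    return None


# Candidate fixes as a generative model might propose them (invented examples).
candidates = [
    'cursor.execute("SELECT * FROM users WHERE id = " + user_id)',
    'cursor.execute("SELECT * FROM users WHERE id = %s", (user_id,))',
]

print(first_safe_fix(candidates))
```

In this sketch the first candidate is rejected because it still builds the query by string concatenation, and the parameterized version is the one that gets through.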

3. Thorough interfile analysis

Many AI security tools simply scan isolated code blocks, snippets, or single source code files. However, as any security professional knows, this is not enough.

Reviewing an entire application and all its source code files is important. This is because an application is made up of a multitude of source code files, far beyond the single file that’s open and its dependent files. The way that data flows through the application, interacting with all the source code, differs each time new code is created. To understand how a new block of code affects the application, a security tool must understand this entire structure and the functions within to determine if the AI-generated code is safe.

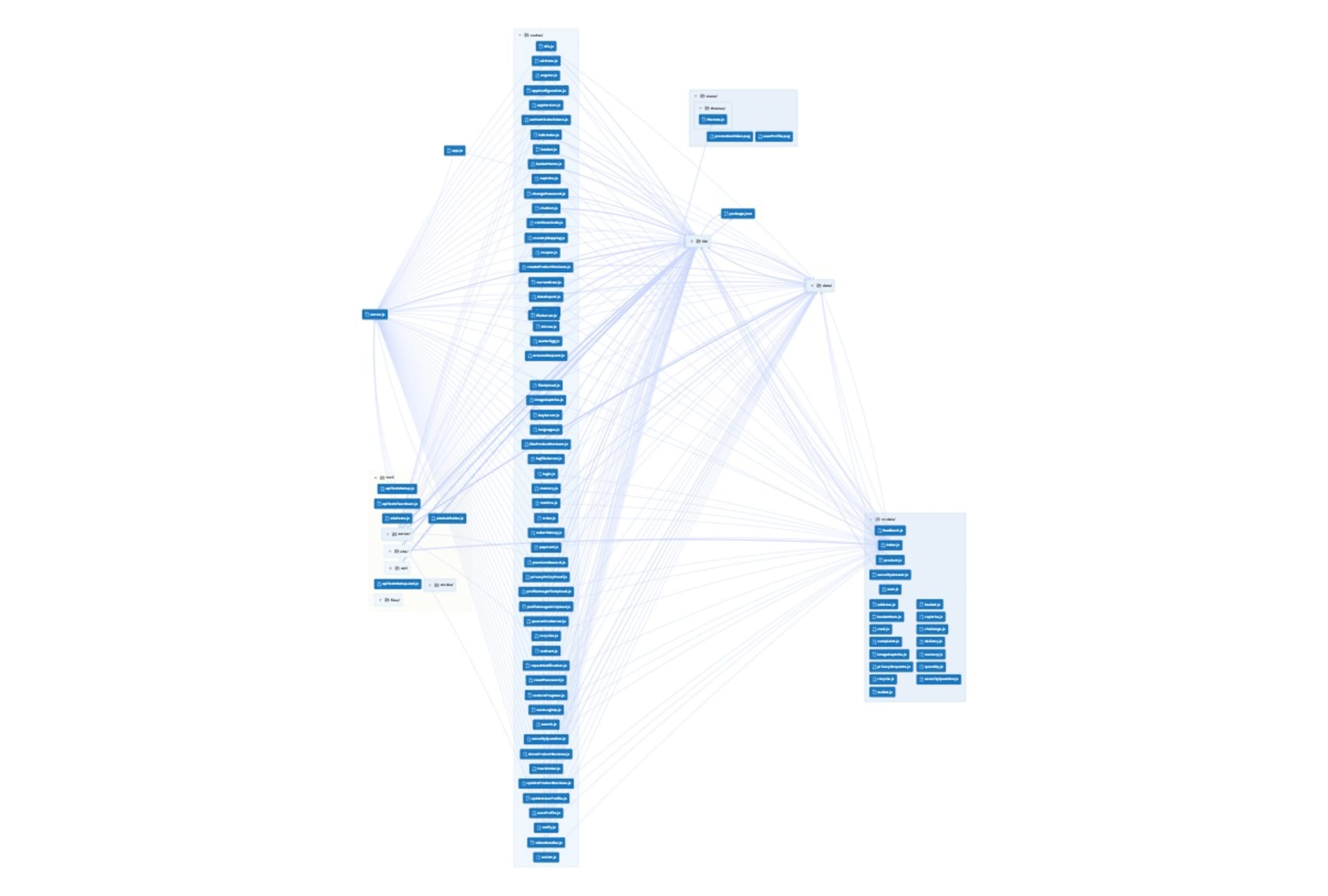

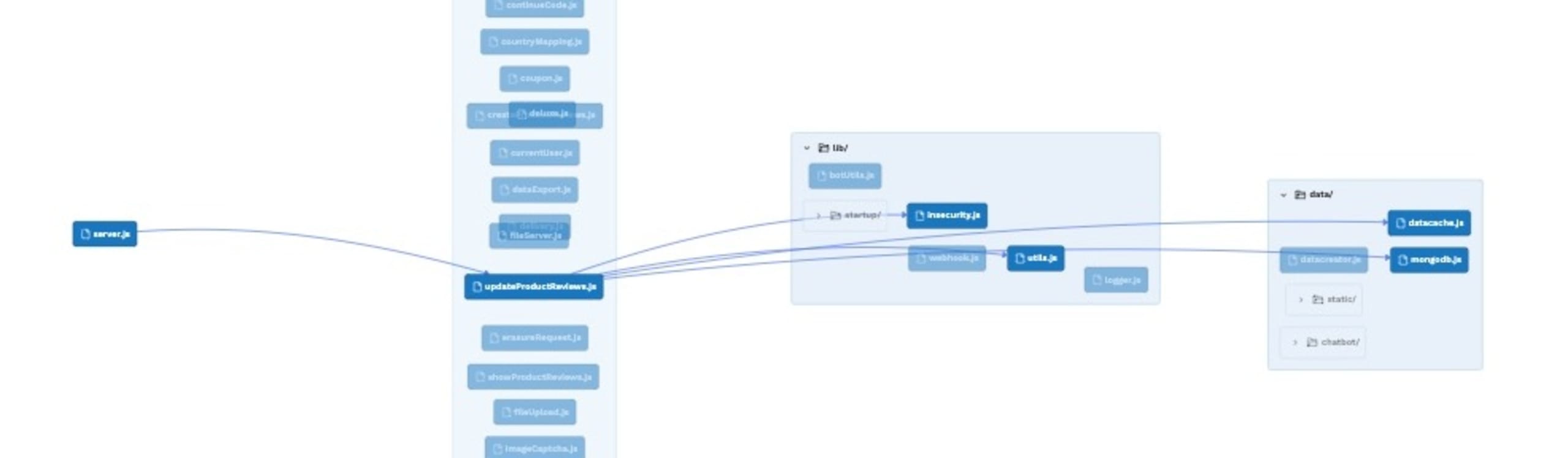

The code shown in the IDE is from an application, and the entire code structure of an application is visualized at the file level in the above diagram, using CodeSee.

Most SAST tools do not run in the IDE because this is very difficult to implement, and those that do run in the IDE look at only a limited view of the application. In the example above, the updateProductReviews file is open for editing. Most IDE-based SAST scanners look at just this one file, or perhaps the files directly connected to it, which misses over 90% of the application.

Snyk specializes in understanding the way that security teams and would-be bad actors think. We at Snyk understand that real-world applications are sprawling and complex, with plenty of legacy code and different dependencies. Snyk doesn’t just review code snippets or blocks in isolation — we review the application in its entirety each time that new code is added to it, understanding how each block of code interacts with all the other code throughout the application, following how the data flows through the entire application.

In particular, Snyk’s interfile analysis uncovers interactions between a code snippet and all the files it touches within an application, to filter out the noise and surface all relevant threats. This enables Snyk to provide more accurate and meaningful results and share its reasoning transparently with your developers, so they understand the problem and how to fix it. Basically, Snyk triages risks, minimizes false results, and then generates appropriate fixes (that have been assessed to not create new vulnerabilities) for you, so you and your developers can work more efficiently and easily.
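A toy example helps show why a single-file view falls short. The Python sketch below is an illustration under stated assumptions, not Snyk’s engine: the `routes` and `db` files, the function names, and the `FLOWS` table are invented, and only the updateProductReviews file name comes from the example above. It models data flow as a small graph and searches for paths from untrusted input to a dangerous operation, first across the whole application and then with visibility restricted to one file.

```python
from collections import deque

# Hypothetical data-flow edges: (file, function) -> where its output flows next.
FLOWS = {
    ("routes", "read_request_body"): [("updateProductReviews", "update_review")],
    ("updateProductReviews", "update_review"): [("db", "run_query")],
    ("db", "run_query"): [],
}
SOURCES = {("routes", "read_request_body")}  # untrusted input enters here
SINKS = {("db", "run_query")}                # dangerous operation happens here


def tainted_paths(flows, sources, sinks, only_file=None):
    """Breadth-first search for source-to-sink data-flow paths.

    When only_file is set, nodes in other files are ignored, which mimics
    a scanner that can see just the file open in the editor.
    """
    def visible(node):
        return only_file is None or node[0] == only_file

    paths = []
    queue = deque([src] for src in sources if visible(src))
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in sinks:
            paths.append(path)
            continue
        for nxt in flows.get(node, []):
            if visible(nxt) and nxt not in path:  # skip cycles
                queue.append(path + [nxt])
    return paths


whole_app = tainted_paths(FLOWS, SOURCES, SINKS)
single_file = tainted_paths(FLOWS, SOURCES, SINKS, only_file="updateProductReviews")

print(len(whole_app), "path(s) found with full application context")
print(len(single_file), "path(s) found when only one file is visible")
```

With the whole graph in view, the search finds the path from the request handler through the review update to the query. Restricted to the open file, it finds nothing, because both the source and the sink live elsewhere.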

4. Automated reporting from within the platform

All security leaders have audit and compliance requirements to contend with, and generating reports can be tedious. This is why you need a pragmatic security tool that prioritizes risks and provides a centralized overview of risk findings in-platform. After all, to make meaningful strategic decisions, track your progress, or assess your overall risk, you need cross-organizational visibility of security weaknesses and trends, and you don’t want to piece that data together yourself.

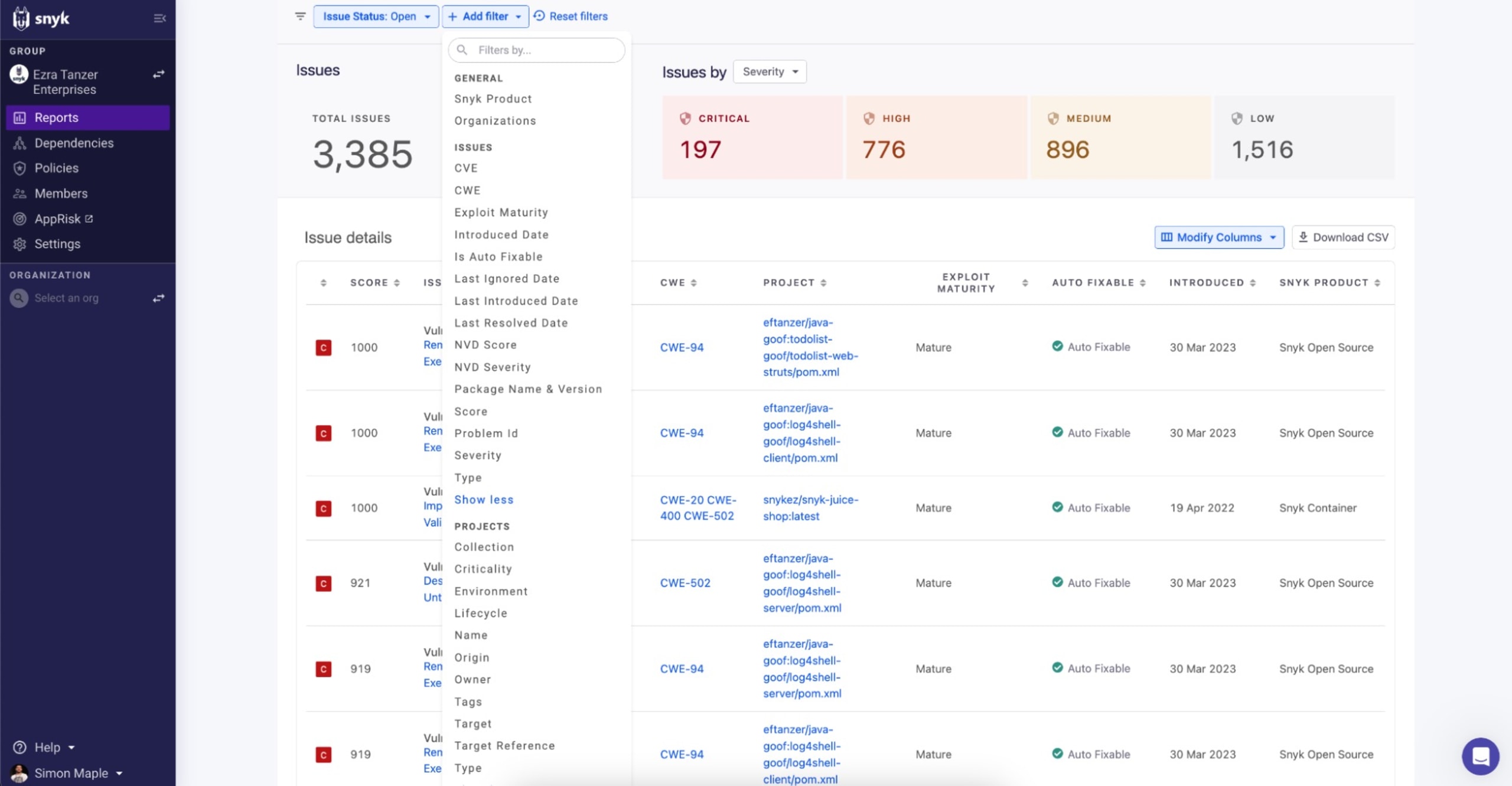

Snyk’s reporting function is native and thus optimized to give you full insight into your security data. This function is also centralized across all views and teams, allowing for more granular prioritizing with its filters, such as exploit maturity, fixability, and Risk Score, which includes items like reachability, project criticality, and production versus development projects. These options enable more efficient compliance through automated and customized reporting, all from within your platform for a seamless experience.
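As a rough illustration of what filter-based prioritization means in practice, the Python sketch below filters and ranks a handful of findings. It does not use Snyk’s API or data model: the `Finding` fields, the maturity labels, the score scale, and the sample findings are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    title: str
    exploit_maturity: str  # assumed labels: "mature", "proof-of-concept", "none"
    fixable: bool
    risk_score: int        # assumed scale, higher means riskier


def prioritize(findings, min_score=0, fixable_only=False, maturities=None):
    """Keep findings that pass every filter, highest risk score first."""
    selected = [
        f for f in findings
        if f.risk_score >= min_score
        and (f.fixable or not fixable_only)
        and (maturities is None or f.exploit_maturity in maturities)
    ]
    return sorted(selected, key=lambda f: f.risk_score, reverse=True)


# Invented sample data for the sketch.
findings = [
    Finding("SQL injection in review update", "mature", True, 910),
    Finding("Hardcoded test credential", "none", True, 320),
    Finding("Path traversal in export", "proof-of-concept", False, 640),
]

top = prioritize(findings, min_score=500, fixable_only=True)
print([f.title for f in top])
```

Combining a score threshold with a fixability filter narrows three findings down to the one that is both high risk and ready to fix, which is the kind of cut a report for a compliance review typically needs.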

5. Independent and able to evolve with your changing needs

In this rapidly changing AI landscape, the AI coding assistants you use today may not be the ones you want to use a year from now. It therefore makes sense to keep your options open by choosing a security tool that doesn’t lock you into a specific AI coding assistant.

We understand this and it’s why Snyk is not restricted to any one AI coding tool — we can integrate with the AI tools that your developers love, today or tomorrow.

And one final, crucial element for best practice and governance — it’s a good idea to ensure that the company generating your code is not affiliated with the company protecting your code. To do otherwise removes a very necessary set of checks and balances. Snyk only does security, so we are independent of AI coding tools and laser-focused on helping you to manage risk.

Gartner Leader for Application Security Testing

Security is crucial, which is why security experts take years to train. Its importance is underlined by the duty of care placed on security leaders, who sometimes face punitive legal measures in the wake of serious security breaches. This is why you need to choose your security tool deliberately, rather than go with the most convenient or cheapest option.

Snyk’s platform is an amalgamation of years of security experience, academic study, and refinement of our technology and methods. We have very consciously created a tool that balances both security and developer needs.

“As a security leader, my foremost responsibility is to ensure that all of the code we create, whether AI-generated or human-written, is secure by design. By using Snyk Code's AI static analysis and its latest innovation, DeepCode AI Fix that provides security tested fixes in the IDE to be automatically implemented, our development and security teams can now ensure we're both shipping software faster as well as more securely.”

-Steve Pugh, CISO of NYSE/ICE

Snyk was also recently named a “Leader” in the 2023 Gartner Magic Quadrant for Application Security Testing and a “Leader” in the 2023 Forrester Wave for Software Composition Analysis, and was the 2022 Gartner Peer Insights Customers’ Choice for Application Security Testing.

Download Snyk's Buyer's Guide for Generative AI Code Security

As a starting point for selecting security guardrails for AI coding assistants, look for five things in your chosen security tool. It should run security scans rapidly and as far left as possible, preferably on source code in your IDE. It should leverage multiple AI models to produce more accurate results. It should be thorough in its analyses and review code with full application context. It should offer native reporting capabilities that are optimized for full interaction with your security data. And it should be tool-agnostic, to give your developers flexibility with their toolkits.

Even with all the noise in AI right now, having a framework of key characteristics or considerations can help you to significantly narrow down the choices of security tools for your AI-generated code.

For more guidance on how to buy a security tool for your AI-generated code, download our Buyer’s Guide for Generative AI Code Security or book an expert demo today.