
How to Run LLMs Locally with Docker

When developers work with Large Language Models (LLMs), they usually do so through hosted foundation models such as OpenAI's GPT-4o, Google's Gemini 2.5 Pro, and Anthropic's Claude Sonnet 4. However, some generative AI use cases can be handled entirely locally on your own developer machine.

In this quick-start guide, I'll walk you through setting up models to run locally on your laptop (or on a dedicated inference machine, if you have the GPUs for it) without having to dwell on machine learning terminology, Python tooling, or other expertise that may seem out of reach for you as a software developer.

Why run LLMs locally on your own machine?

Whether the driver is model cost, privacy and security concerns, or company policy and external regulations, some use cases call for running models in your own environment (an on-premises data center) or physically on your own PC or laptop.

How to run models with Docker

Docker made its name as a technology that abstracts away many of the hurdles of packaging applications and running them seamlessly across platforms. Docker is now doing the same for LLMs.

Requirements for this tutorial:

Docker Desktop 4.41 or later (or Docker Engine with Docker Model Runner support). Update your local installation before you start so that the tutorial works as described.

See the Docker Model Runner documentation for more details.

Step 1: Enable Docker Model Runner

If you've updated to the latest Docker version, Docker Model Runner is most likely already enabled. You'll also need to enable host-side TCP support so that program code, such as code using Vercel's AI SDK, can connect to the model; otherwise, you'll only be able to interact with the model runner via the docker CLI.

Open Docker Desktop Settings, go to Beta features, and enable these two options:

“Enable Docker Model Runner”

“Enable host-side TCP support”
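Once host-side TCP support is on, a quick way to confirm the endpoint is reachable from code is to list the models it serves. The sketch below is a hypothetical helper, listLocalModels, that assumes Node.js 18+ (for the global fetch), the OpenAI-compatible GET /models route under the same base URL used in Step 3, and the default TCP port 12434; adjust the URL if you changed the port in Settings. The fetch function is injectable so the helper can be exercised without a running model.

```typescript
// Hypothetical helper (not part of Docker's tooling): lists the models served by
// Docker Model Runner over the host-side TCP port. Assumes an OpenAI-compatible
// GET /models route under the base URL used later in this tutorial.
type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;

interface ModelList {
  data: Array<{ id: string }>;
}

export async function listLocalModels(
  baseUrl = "http://localhost:12434/engines/llama.cpp/v1",
  fetchImpl: FetchLike = fetch, // global fetch in Node.js 18+
): Promise<string[]> {
  const res = await fetchImpl(`${baseUrl}/models`);
  if (!res.ok) {
    throw new Error(`Model Runner responded with HTTP ${res.status}`);
  }
  const body = (await res.json()) as ModelList;
  // Return only the model identifiers from the OpenAI-style list response.
  return body.data.map((m) => m.id);
}
```

If the returned promise rejects with a connection error, host-side TCP support is most likely not enabled yet, or it is listening on a different port.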

Step 2: Get an LLM

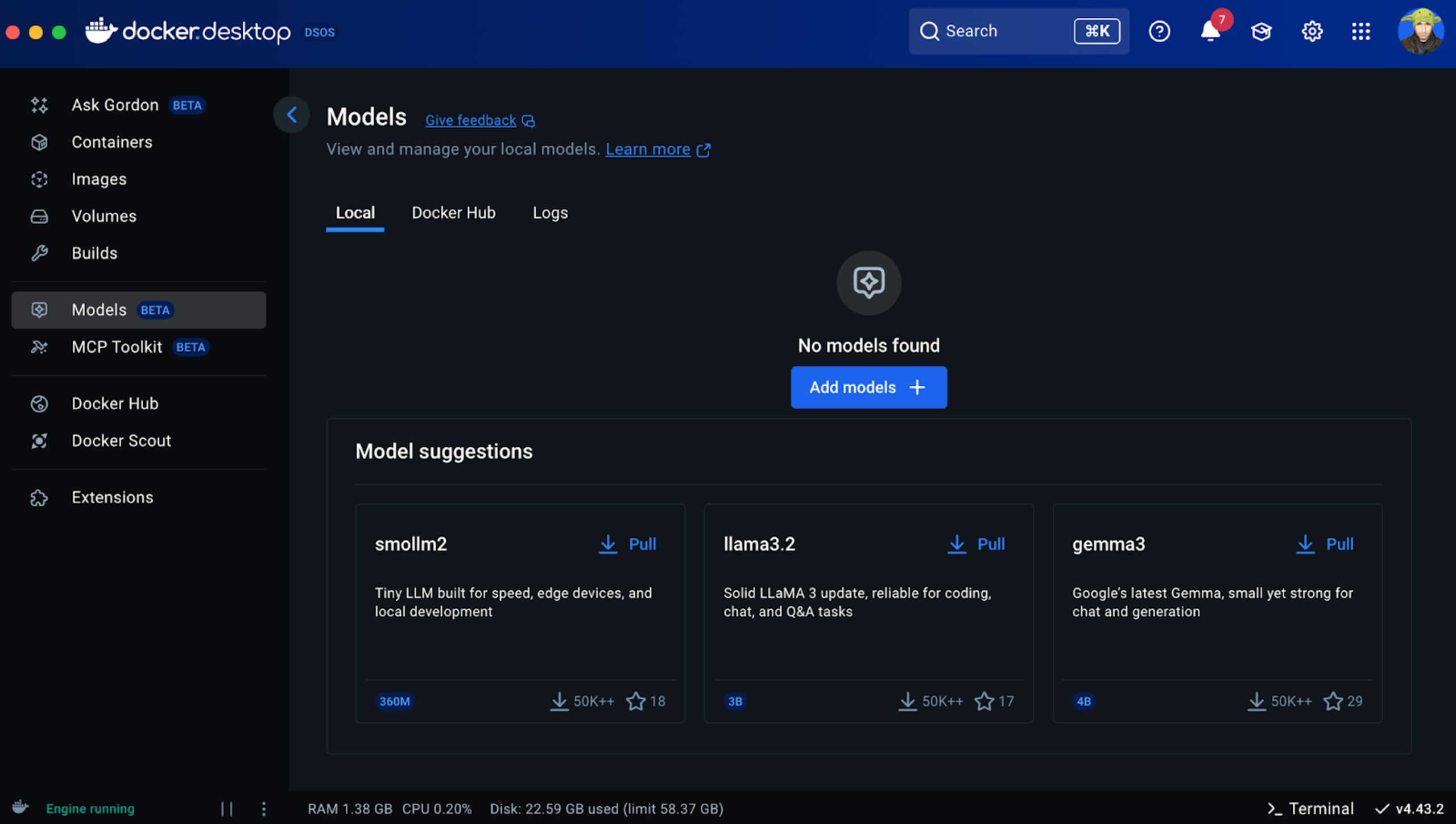

Next, you can open the Docker Desktop dashboard and from the left sidebar menu choose Models to see all available models:

You can also browse the available models on the web on Docker Hub.

We'll go with the smollm2 model, which takes up less than 300 MB of disk space. For more capable models, I'd recommend the 3B to 4B parameter range, such as gemma3, the next tier of 7B to 8B parameter models, or Microsoft's 14B-parameter phi4.
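To get a rough feel for what those parameter counts mean for disk space and memory, a common back-of-the-envelope estimate for a quantized model's weights is parameters × bits per weight ÷ 8 bytes. The sketch below assumes about 4 bits per weight (real formats such as Q4_K_M use somewhat more) and ignores the KV cache and runtime overhead, so treat the numbers as a lower bound, not as Docker's published sizes. The function name estimateWeightsGB is hypothetical.

```typescript
// Back-of-the-envelope estimate (an approximation, not an official Docker figure):
// quantized weights take roughly parameters × bitsPerWeight / 8 bytes.
// KV cache, context length, and runtime overhead are deliberately ignored.
export function estimateWeightsGB(parameters: number, bitsPerWeight: number): number {
  const bytes = (parameters * bitsPerWeight) / 8;
  return bytes / 1e9; // decimal gigabytes (10^9 bytes)
}

// Parameter counts from the tiers mentioned above, assuming ~4 bits per weight.
const tiers: Array<[label: string, parameters: number]> = [
  ["4B", 4e9],
  ["8B", 8e9],
  ["14B", 14e9],
];

for (const [label, parameters] of tiers) {
  console.log(`${label}: ~${estimateWeightsGB(parameters, 4).toFixed(1)} GB of weights`);
}
```

Under these assumptions the loop reports about 2.0 GB for a 4B model, 4.0 GB for 8B, and 7.0 GB for 14B, which is why the larger tiers quickly compete with everything else for your laptop's RAM or VRAM.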

Note that model performance depends heavily on your local CPU and GPU, and on the inference engine available locally to make use of them. Docker makes it easy to experiment locally, but its default configuration is likely not tuned for the best performance.

Now that we’ve decided on a local model to run, we can simply execute this command from the terminal:

```shell
docker model run ai/smollm2
```

Since you likely don't have the model in your environment yet, Docker first pulls it from the registry and then runs it.

You can now interact with the model in an interactive session from the CLI.
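The interactive session is convenient for poking at the model, but the same conversation can also be driven over HTTP. The sketch below, a hypothetical chatOnce helper, sends a single non-streaming request to the OpenAI-compatible /chat/completions route, assuming host-side TCP support from Step 1, Node.js 18+, and the same base URL used in Step 3. As in the previous helper, fetch is injectable for testing.

```typescript
// Hypothetical helper: one-shot, non-streaming chat request against the
// OpenAI-compatible endpoint exposed by Docker Model Runner (assumed base URL).
type FetchLike = (input: string, init?: RequestInit) => Promise<Response>;

interface ChatCompletion {
  choices: Array<{ message: { role: string; content: string } }>;
}

export async function chatOnce(
  prompt: string,
  model = "ai/smollm2",
  baseUrl = "http://localhost:12434/engines/llama.cpp/v1",
  fetchImpl: FetchLike = fetch,
): Promise<string> {
  const res = await fetchImpl(`${baseUrl}/chat/completions`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // Standard OpenAI chat payload: a model name plus the message history.
    body: JSON.stringify({
      model,
      messages: [{ role: "user", content: prompt }],
    }),
  });
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const data = (await res.json()) as ChatCompletion;
  // Return the assistant's reply from the first choice, or "" if none came back.
  return data.choices[0]?.message.content ?? "";
}
```

For example, chatOnce("Say hello in one sentence.").then(console.log) prints the model's reply; because the request is non-streaming, nothing is printed until the whole answer has been generated.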

Step 3: Use the local LLM from an AI SDK

The Vercel AI SDK is typically used with hosted model endpoints like OpenAI or Anthropic. However, it can be easily adapted to work with a self-hosted model, such as one running via the Docker Model Runner.

Because Docker Model Runner exposes an OpenAI-compatible API, we can point an OpenAI client at it and treat the local model as if it were an OpenAI endpoint. The TypeScript example below does this with the official openai client and the AI SDK's OpenAIStream() and StreamingTextResponse helpers.

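First, install the AI SDK together with the official OpenAI client, which the route below imports. The route also relies on the OpenAIStream and StreamingTextResponse helpers, which were removed in AI SDK 4.0, so pin the SDK to the 3.x line:

```shell
npm install ai@3 openai
```

Then set up the environment variables (for example, in a .env.local file):

```shell
OPENAI_API_KEY=docker
OPENAI_BASE_URL=http://localhost:12434/engines/llama.cpp/v1
```

Note that OPENAI_API_KEY is literally set to docker; it is not a placeholder for your own key.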
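The route below reads these two variables with non-null assertions (process.env.OPENAI_API_KEY!), which only silence the compiler. If you would rather fail fast with a clear message when a variable is missing or malformed, a small hypothetical helper such as loadRunnerConfig can validate them first. It is a sketch, not part of the AI SDK or the openai package.

```typescript
// Hypothetical helper (not part of the AI SDK or the openai package): reads the
// two variables above and throws a descriptive error instead of letting
// `undefined` or a malformed URL reach the OpenAI client.
export interface RunnerConfig {
  apiKey: string;
  baseURL: string;
}

export function loadRunnerConfig(
  env: Record<string, string | undefined> = process.env,
): RunnerConfig {
  const apiKey = env.OPENAI_API_KEY;
  const baseURL = env.OPENAI_BASE_URL;
  if (!apiKey || !baseURL) {
    throw new Error("Both OPENAI_API_KEY and OPENAI_BASE_URL must be set");
  }
  // new URL() throws on unparseable input, but a value such as
  // "localhost:12434/..." parses with "localhost:" as its scheme,
  // so the protocol is checked explicitly as well.
  const { protocol } = new URL(baseURL);
  if (protocol !== "http:" && protocol !== "https:") {
    throw new Error(`OPENAI_BASE_URL must start with http:// or https://, got ${baseURL}`);
  }
  return { apiKey, baseURL };
}
```

The result can be passed straight to new OpenAI(loadRunnerConfig()), since the object's field names match the client options used in the route.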

Then define an API route, for example a Next.js App Router route handler, as follows:

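```typescript
// Requires AI SDK 3.x: OpenAIStream and StreamingTextResponse were removed in 4.0.
import { OpenAIStream, StreamingTextResponse } from 'ai';
import OpenAI from 'openai';

// 'edge' or 'nodejs', depending on your environment
export const runtime = 'edge';

// Point the official OpenAI client at Docker Model Runner instead of api.openai.com.
const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY!,
  baseURL: process.env.OPENAI_BASE_URL!,
});

export async function POST(req: Request) {
  // Chat history sent by the client, as OpenAI-style { role, content } messages.
  const { messages } = await req.json();

  // Request a streamed chat completion from the locally running smollm2 model.
  const response = await openai.chat.completions.create({
    model: 'ai/smollm2',
    messages,
    stream: true,
  });

  // Adapt the OpenAI stream with the AI SDK and return it as a streaming HTTP response.
  const stream = OpenAIStream(response);
  return new StreamingTextResponse(stream);
}
```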

You can also skip the AI SDK and use the official OpenAI SDK on its own, configured with the same local Docker Model Runner values for OPENAI_BASE_URL and OPENAI_API_KEY.
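Whichever client you use, the stream: true option in the route above asks the endpoint for server-sent events in the OpenAI streaming format, which OpenAI-compatible servers generally follow: each chunk arrives as a data: line carrying JSON, and the stream ends with data: [DONE]. The openai client and OpenAIStream() parse this for you; the hypothetical parseSseDeltas function below is only a sketch of that wire format, assuming the whole payload is already in memory rather than parsed incrementally as a real client would.

```typescript
// Sketch of the OpenAI-style streaming format: one `data: <json>` line per chunk,
// terminated by `data: [DONE]`. Extracts the text deltas from a complete payload.
interface ChunkDelta {
  choices?: Array<{ delta?: { content?: string | null } }>;
}

export function parseSseDeltas(payload: string): string[] {
  const deltas: string[] = [];
  for (const rawLine of payload.split("\n")) {
    const line = rawLine.trim(); // also drops a trailing "\r" from CRLF streams
    if (!line.startsWith("data:")) continue; // skip blank separators and comments
    const data = line.slice("data:".length).trim();
    if (data === "[DONE]") break; // end-of-stream sentinel
    const chunk = JSON.parse(data) as ChunkDelta;
    // The first chunk often carries only the role, so content may be absent.
    const text = chunk.choices?.[0]?.delta?.content;
    if (text) deltas.push(text);
  }
  return deltas;
}
```

Joining the returned pieces reconstructs the assistant's full reply, which is essentially what the streaming helpers do chunk by chunk as bytes arrive.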

More on Docker, AI, and security

Now that you have an LLM running locally, you might want to make sure that LLMs generate secure code, learn about prompt injection attacks, and explore the MCP space.

If you'd like to explore any of these topics further, I suggest the following articles:

Given that you already have Docker for this local LLM tutorial, you might want to explore how to run MCP servers with Docker.

Learn how prompt injection via invisible PDF text can be exploited to fool LLM-based logic.

If you're new to MCP, read a guide to visually understanding the MCP architecture.

To go further with MCP, check out a roundup of popular MCP servers for developers.
