Kubernetes Operators: automating the release process

Arthur Granado

20 November 2020

Snyk helps our customers integrate security into their CI/CD pipelines, so we spend a lot of time thinking about automation. When it comes to releasing our own software, we're always looking to adopt best practices for testing and release.

In this blog, I'll talk about the release process for our Kubernetes Operator, and show how we've automated deploying the release across multiple repository targets.

What is a Kubernetes Operator?

An Operator is a "... method of packaging, deploying and managing a Kubernetes application...". Operators encapsulate application lifecycle actions in code, allowing us to control them using the native Kubernetes API.

Why are Kubernetes Operators important?

The operator pattern gives us a way of controlling applications once they are running, using Kubernetes APIs. If our applications need to do more than just start or stop, this lets us control them during operation in the same declarative way that we manage all the rest of our Kubernetes resources.
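To make the declarative idea a little more concrete, here is a minimal sketch of the compare-and-act step at the heart of the operator pattern. It is purely illustrative: `AppState` and `reconcile` are hypothetical names, and a real Operator would watch Kubernetes resources and call the API rather than return strings.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AppState:
    """Hypothetical, simplified view of an application's state."""
    version: str
    replicas: int


def reconcile(desired: AppState, observed: AppState) -> List[str]:
    """Return the lifecycle actions needed to move `observed` towards `desired`."""
    actions = []
    if observed.version != desired.version:
        actions.append(f"upgrade {observed.version} -> {desired.version}")
    if observed.replicas != desired.replicas:
        actions.append(f"scale {observed.replicas} -> {desired.replicas}")
    return actions


if __name__ == "__main__":
    # The user only declares the desired state; the loop works out what to do.
    print(reconcile(AppState("1.2.0", 2), AppState("1.1.0", 1)))
```

When the observed state already matches the desired state, the function returns an empty list and nothing happens, which is what makes the approach safe to run repeatedly.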

What is application release automation?

With the rise of online catalogs that we deploy our software to for consumption by our users, the process by which we distribute a released version of our software can become very complicated. We may have many steps that need to be completed to distribute our artefacts into different repositories, and we may need to provide different types of metadata to allow our application to be consumed in different ways. Automating these processes is vital to ensure that each release is distributed error-free, and to allow our users to consume new versions as soon as they are available.

Let's dig in

A few months ago the team behind Container Scanning released Snyk Operator, an Operator for Snyk Controller to make it easy to deploy in both native Kubernetes and OpenShift clusters.

Snyk Controller is a Snyk application that is deployed in the user's cluster and scans the images of containers running in that cluster. You can learn more about this tool here, and about how it can help you find vulnerabilities in your images and applications here.

There are many different ways to build operators for Kubernetes. One of the most common is the Operator Framework, which originated at CoreOS and is now a CNCF project. As well as providing an SDK for operator development, the Operator Framework also provides a repository for community-developed operators.

The Snyk Operator is built using this framework, and we distribute to the upstream repositories for every release. When we release a new Operator version, there are a lot of steps we need to complete, which could be error-prone if done manually.

First of all, let's understand what the release process for Snyk Controller is and how we include the Operator build into it.

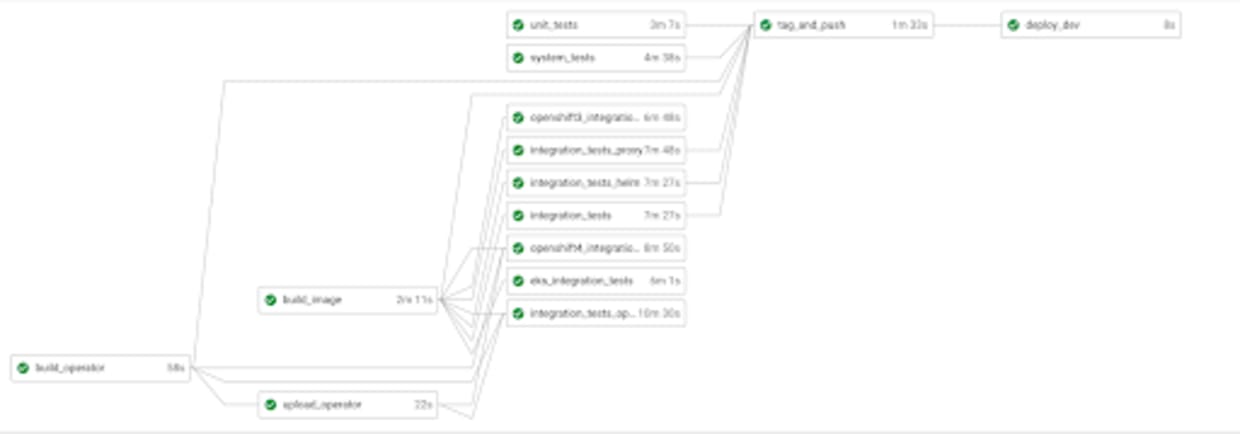

Once a Pull Request from any contributor is merged into our Snyk Controller repository, our continuous integration and continuous deployment pipeline starts. It builds the operator and its image, then runs the unit tests, the system tests, and all of the integration tests to test the operator in different environments.

There is a wide variety of different environments we need to test in, including different versions of OpenShift, and different versions of upstream Kubernetes. In all of these environments, we test with Operator Lifecycle Manager, which is part of the Operator Framework and extends Kubernetes to provide a declarative way to install, manage, and upgrade Operators and their dependencies in a cluster.

Once a release of Snyk Operator has been tested, we need to distribute the final release version. Currently, we release the Snyk Operator to OperatorHub, which allows users to install it in any Kubernetes cluster running Operator Lifecycle Manager, and to the OpenShift catalog.

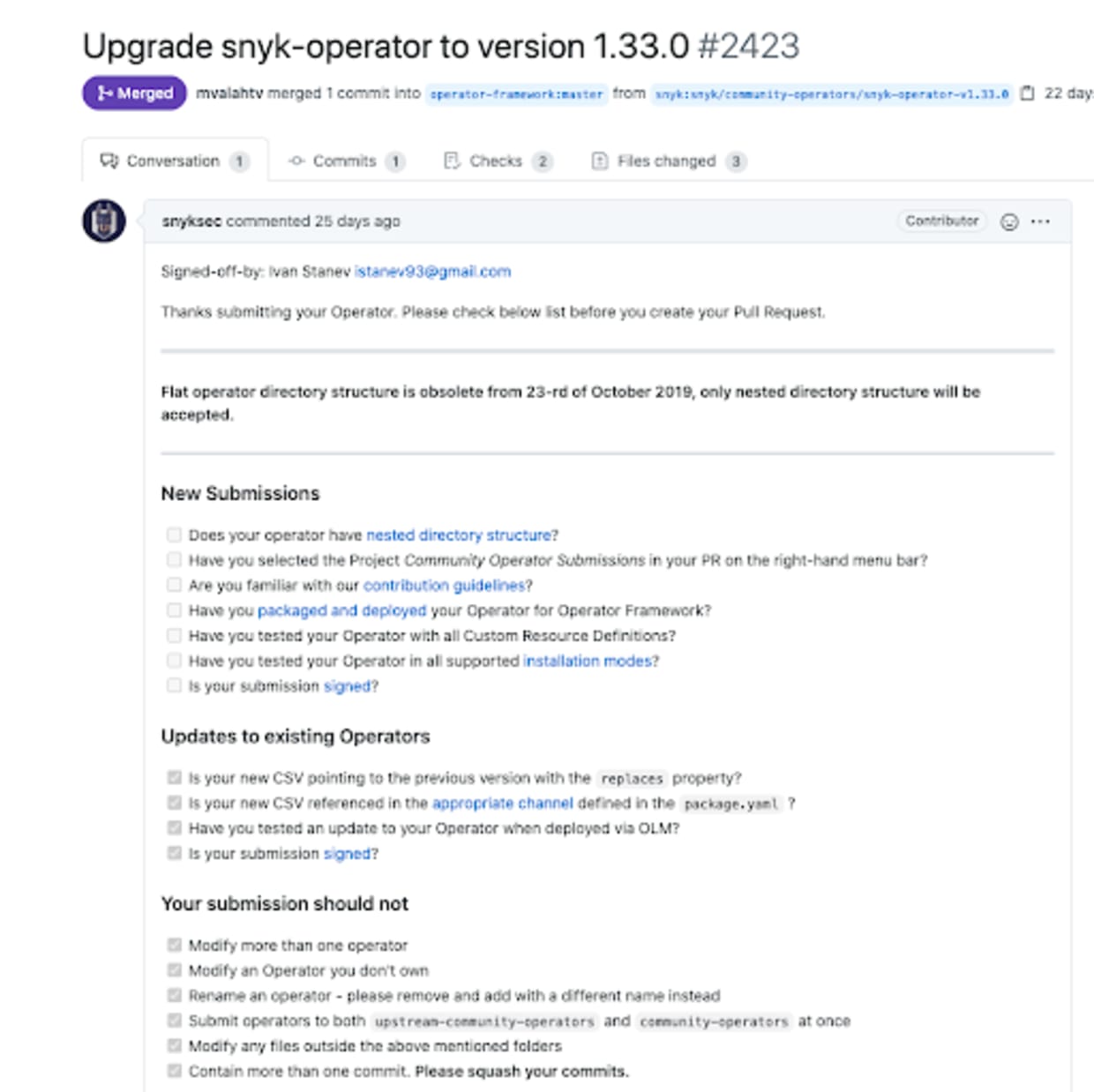

This release process involves two distinct Pull Requests, which would add a lot of overhead if we did this manually: one to add the Snyk Operator to the upstream-community-operators folder, and one to add it to the community-operators folder. The first releases the Snyk Operator on OperatorHub.io, and the second releases it into the OpenShift catalog.

In order to remove the overhead of these steps, we want to fully automate the release process to both of these repositories, and so we scripted all of this in our CircleCI pipeline.

First of all, we copy all the files we need to the snyk-operator folder, replacing the application version with the newest one. We download the operator-sdk tool and use it to build the Snyk Operator image. Once this is completed, we push the image to our public registry on Docker Hub.

Source: GitHub
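As a rough illustration of the copy-and-replace step described above, the sketch below copies a directory of template files into a target folder and substitutes the version as it goes. The `{{VERSION}}` placeholder, the function name, and the directory layout are all assumptions made for this example; our real pipeline does this with shell scripts in CircleCI, and its placeholder may well differ.

```python
import tempfile
from pathlib import Path
from typing import List

# Assumed placeholder token; the real templates may use something else.
VERSION_PLACEHOLDER = "{{VERSION}}"


def render_operator_files(template_dir: Path, target_dir: Path, version: str) -> List[Path]:
    """Copy every file under template_dir into target_dir, replacing the
    version placeholder with the release version. Returns the written paths."""
    written = []
    for src in sorted(template_dir.rglob("*")):
        if not src.is_file():
            continue  # directories are created on demand below
        dest = target_dir / src.relative_to(template_dir)
        dest.parent.mkdir(parents=True, exist_ok=True)
        dest.write_text(src.read_text().replace(VERSION_PLACEHOLDER, version))
        written.append(dest)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        templates = Path(tmp) / "templates"
        templates.mkdir()
        (templates / "operator.yaml").write_text("version: {{VERSION}}\n")
        for path in render_operator_files(templates, Path(tmp) / "snyk-operator", "1.2.3"):
            print(path.name, "->", path.read_text().strip())
```

Building the image with operator-sdk and pushing it to the registry would follow as separate pipeline steps once the files are rendered.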

This image allows us to run the integration tests in the pipeline and make sure that we're releasing something that works in OpenShift 3.x and 4.x clusters and in Kubernetes clusters with OLM. Here, we use a trick to help us run the integration tests: we push the operator image to the Quay registry, create an operator-source.yaml, and then install the Operator in the cluster by applying an install.yaml.

Our intention here is to mimic the same process that happens when users install the Operator through the UI. We think of it as a black box and follow some steps that OpenShift would take when the user clicks "install" in the UI.

Source: GitHub
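To give a feel for what those two files might contain, here is a hedged sketch that builds an OperatorSource pointing at a Quay namespace and a Subscription that asks OLM to install from it. The field layout reflects our understanding of those two OLM resources, and every name and value (`snyk-operator-test`, `example-namespace`, the `stable` channel, the namespaces) is a placeholder invented for the example, not what our pipeline actually uses. The manifests are emitted as JSON, which `kubectl apply -f` accepts just like YAML.

```python
import json
from typing import Any, Dict


def operator_source(name: str, registry_namespace: str,
                    namespace: str = "openshift-marketplace") -> Dict[str, Any]:
    """Build an OperatorSource that points the marketplace at a Quay namespace."""
    return {
        "apiVersion": "operators.coreos.com/v1",
        "kind": "OperatorSource",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "appregistry",
            "endpoint": "https://quay.io/cnr",
            "registryNamespace": registry_namespace,
        },
    }


def subscription(name: str, package: str, channel: str, source: str,
                 source_namespace: str, namespace: str) -> Dict[str, Any]:
    """Build the Subscription that OLM uses to install and update an Operator."""
    return {
        "apiVersion": "operators.coreos.com/v1alpha1",
        "kind": "Subscription",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "name": package,
            "channel": channel,
            "source": source,
            "sourceNamespace": source_namespace,
        },
    }


def to_manifest(resource: Dict[str, Any]) -> str:
    """Serialise a resource as JSON so it can be written to a file and applied."""
    return json.dumps(resource, indent=2, sort_keys=True)


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    print(to_manifest(operator_source("snyk-operator-test", "example-namespace")))
    print(to_manifest(subscription("snyk-operator", "snyk-operator", "stable",
                                   "snyk-operator-test", "openshift-marketplace",
                                   "snyk-monitor")))
```

Creating the Subscription is roughly what happens behind the scenes when a user clicks "install" in the UI, which is why applying it in the test cluster is a reasonable stand-in for the manual flow.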

Finally, with the green light from all the tests, we can move on to releasing the Snyk Operator, which requires us to create some additional metadata. This adds the ClusterServiceVersion and CustomResourceDefinitions, which are used to populate the Snyk Controller page in OperatorHub and to allow integration with the Operator Lifecycle Manager. OLM allows a user to subscribe to an operator, which unifies installation and updates in a single step.

Because this metadata doesn't change too often, we use templating to just change the version to match the newest Snyk Operator version.

Source: GitHub
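The templating idea can be sketched in a few lines. The fragment below is a deliberately tiny, assumed excerpt of a ClusterServiceVersion, not our real template; a full one also carries descriptions, icons, install strategies and more. The `render_csv` helper and the version numbers are hypothetical.

```python
from string import Template

# A heavily trimmed, illustrative ClusterServiceVersion fragment.
CSV_TEMPLATE = Template("""\
apiVersion: operators.coreos.com/v1alpha1
kind: ClusterServiceVersion
metadata:
  name: snyk-operator.v$version
spec:
  version: $version
  replaces: snyk-operator.v$previous
""")


def render_csv(version: str, previous: str) -> str:
    """Fill in the new version and the version it replaces."""
    return CSV_TEMPLATE.substitute(version=version, previous=previous)


if __name__ == "__main__":
    print(render_csv("1.2.3", "1.2.2"))
```

Using `substitute` rather than `safe_substitute` means a forgotten value raises a `KeyError` instead of silently shipping a placeholder in the released metadata.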

Having completed all of these steps, we can finally create our commits: one to include the new Operator metadata in the community-operators folder, and one for the upstream-community-operators folder. For each commit, we open a Pull Request against the community operators repository, using the message that the repository requests.

Source: GitHub
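One way to picture this last step is as a small plan with one entry per target folder. In the sketch below, the folder names come from the text above, while the branch naming, the `<folder>/<operator>/<version>` path layout and the PR titles are assumptions made for illustration; the actual git and GitHub calls are left out.

```python
from dataclasses import dataclass
from typing import List

# The two target folders named in this post.
TARGET_FOLDERS = ("community-operators", "upstream-community-operators")


@dataclass(frozen=True)
class ReleasePR:
    """Hypothetical description of one release Pull Request."""
    branch: str
    bundle_path: str
    title: str


def plan_release_prs(operator: str, version: str) -> List[ReleasePR]:
    """Return one planned commit/Pull Request per target folder."""
    return [
        ReleasePR(
            branch=f"{operator}-{version}-{folder}",        # assumed naming scheme
            bundle_path=f"{folder}/{operator}/{version}",   # assumed directory layout
            title=f"{operator} {version} ({folder})",       # assumed title format
        )
        for folder in TARGET_FOLDERS
    ]


if __name__ == "__main__":
    for pr in plan_release_prs("snyk-operator", "1.2.3"):
        print(pr.branch, "->", pr.bundle_path)
```

Keeping the plan as plain data makes it easy to check in a test before anything is pushed to a remote repository.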

We can see examples of these automated PRs here and here.

Once the PRs are created, automated testing takes place for both OperatorHub and OpenShift, and eventually the maintainers of those repositories merge in our new version.

Wrapping up

By fully automating the release process for our operator, we're able to save significant time and resources in the engineering team and continue to provide new versions of our software as quickly and efficiently as possible.

The Snyk Controller integrates Snyk scanning into your Kubernetes clusters, enabling you to import and test your running workloads and identify vulnerabilities in their associated images and configurations that might make those workloads less secure. Find out more here and start using Snyk by registering for a free account.
